Navigating the Path to CyberPeace: Insights and Strategies

Featured #factCheck Blogs

Executive Summary

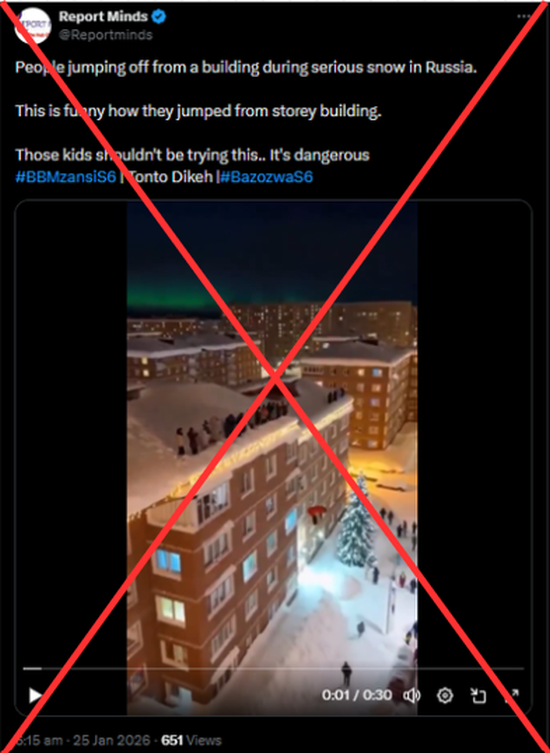

A dramatic video showing several people jumping from the upper floors of a building into what appears to be thick snow has been circulating on social media, with users claiming that it captures a real incident in Russia during heavy snowfall. In the footage, individuals can be seen leaping one after another from a multi-storey structure onto a snow-covered surface below, eliciting reactions ranging from amusement to concern. The claim accompanying the video suggests that it depicts a reckless real-life episode in a snow-hit region of Russia.

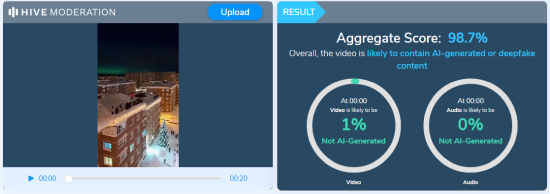

A thorough analysis by CyberPeace confirmed that the video is not a real-world recording but an AI-generated creation. The footage exhibits multiple signs of synthetic media, including unnatural human movements, inconsistent physics, blurred or distorted edges, and a glossy, computer-rendered appearance. In some frames, a partial watermark from an AI video generation tool is visible. Further verification using the Hive Moderation AI-detection platform indicated that 98.7% of the video is AI-generated, confirming that the clip is entirely digitally created and does not depict any actual incident in Russia.

Claim:

The video was shared on social media by an X (formerly Twitter) user ‘Report Minds’ on January 25, claiming it showed a real-life event in Russia. The post caption read: "People jumping off from a building during serious snow in Russia. This is funny, how they jumped from a storey building. Those kids shouldn't be trying this. It's dangerous." Here is the link to the post, and below is a screenshot.

Fact Check:

The Desk used the InVid tool to extract keyframes from the viral video and conducted a reverse image search, which revealed multiple instances of the same video shared by other users with similar claims. Upon close visual examination, several anomalies were observed, including unnatural human movements, blurred and distorted sections, a glossy, digitally rendered appearance, and a partially concealed logo of the AI video generation tool ‘Sora AI’ visible in certain frames. Screenshots highlighting these inconsistencies were captured during the research.

- https://x.com/DailyLoud/status/2015107152772297086?s=20

- https://x.com/75secondes/status/2015134928745164848?s=20
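The frame-extraction step described above can be illustrated with a small sketch. It does not reproduce InVid's own keyframe selection; it substitutes plain fixed-interval sampling as a simpler stand-in. The function name `sample_frame_indices` and its parameters are hypothetical, chosen only for illustration.

```python
def sample_frame_indices(total_frames: int, fps: float, every_seconds: float = 1.0) -> list[int]:
    """Return the indices of frames to export for a reverse image search.

    Assumption: fixed-interval sampling, not scene-change keyframe detection.
    """
    if total_frames <= 0 or fps <= 0 or every_seconds <= 0:
        raise ValueError("total_frames, fps and every_seconds must be positive")
    # Number of frames between two samples; always at least one frame.
    step = max(1, round(fps * every_seconds))
    return list(range(0, total_frames, step))


# Example: a 4-second clip at 25 frames per second, one frame per second.
print(sample_frame_indices(100, 25.0))
```

Each selected frame can then be saved as an image and submitted to a reverse image search engine by hand.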

The video was analyzed on Hive Moderation, an AI-detection platform, which confirmed that 98.7% of the content is AI-generated.

The viral video showing people jumping off a building into snow, claimed to depict a real incident in Russia, is entirely AI-generated. Social media users who shared it presented the digitally created footage as if it were real, making the claim false and misleading.

Executive Summary

A video circulating on social media shows Uttar Pradesh Chief Minister Yogi Adityanath and Gorakhpur MP Ravi Kishan walking with a group of people. Users are claiming that the two leaders were participating in a protest against the University Grants Commission (UGC). Research by CyberPeace has found the viral claim to be misleading. Our research revealed that the video is from September 2025 and is being shared out of context with recent events. The video was recorded when Chief Minister Yogi Adityanath undertook a foot march in Gorakhpur on a Monday. Ravi Kishan, MP from Gorakhpur, was also present. During the march, the Chief Minister visited local markets, malls, and shops, interacting with traders and gathering information on the implementation of GST rate cuts.

Claim Details:

On Instagram, a user shared the viral video on 27 January 2026. The video shows the Chief Minister and the MP walking with a group of people. The text “UGC protest” appears on the video, suggesting that it is connected to a protest against the University Grants Commission.

Fact Check:

To verify the claim, we searched Google using relevant keywords, but found no credible media reports confirming it. Next, we extracted key frames from the video and searched them using Google Lens. The video was traced to NBT Uttar Pradesh’s X (formerly Twitter) account, where it was posted on 22 September 2025.

According to NBT Uttar Pradesh, CM Yogi Adityanath undertook a foot march in Gorakhpur, visiting malls and shops to interact with traders and check the implementation of GST rate cuts.

Conclusion:

The viral video is not related to any recent UGC guidelines. It dates back to September 2025, showing CM Yogi Adityanath and MP Ravi Kishan on a foot march in Gorakhpur, interacting with traders about GST rate cuts. The claim that the video depicts a protest against the University Grants Commission is therefore false and misleading.

Executive Summary:

A photo circulating online that claims to show the future design of the Bhabha Atomic Research Center (BARC) building has been found to be fake. There is no official notice or confirmation from BARC on its website or social media handles. Using an AI content detection tool, we found that the image was generated by AI. In short, the viral picture does not show authentic architectural plans for the BARC building.

Claims:

A photo allegedly showing the new look of the Bhabha Atomic Research Center (BARC) building is circulating widely on social media platforms.

Fact Check:

To begin our investigation, we checked BARC's official website, including its tender and NIT (Notice Inviting Tender) notifications, for any new construction or renovation projects.

We found no information corresponding to the claim.

Next, we checked BARC's official social media pages on Facebook, Instagram and X for any recent updates about a new building. Again, there was no information about the supposed design. To test whether the viral image was generated by AI, we ran it through Hive's AI content detection tool, ‘AI Classifier’. The tool classified the image as AI-generated with a score of 100%.

To be sure, we also used another AI-image detection tool, “isitai?”, which rated the image as 98.74% AI-generated.

Conclusion:

To conclude, the claim that the image shows the new BARC building is fake and misleading. A detailed investigation, which included checking BARC's official channels and using AI detection tools, showed that the picture is far more likely to be AI-generated than an original architectural design. BARC has not announced or shared any information about such a plan, so there is no credible source to support the claim.

Claim: Many social media users claim that the image shows the new design of the BARC building.

Claimed on: X, Facebook

Fact Check: Misleading

Executive Summary:

Following the recent earthquake in Taiwan, a video has gone viral on social media with the claim that it was taken during that earthquake. However, fact-checking reveals it to be an old video. The video is from September 2022, when Taiwan had another earthquake of magnitude 7.2. A reverse image search and comparison with older videos established that the viral video is from the 2022 earthquake and not the recent 2024 event. Several news outlets covered the 2022 incident, providing additional confirmation of the video's origin.

Claims:

News about a recent earthquake in Taiwan and Japan is circulating on social media. A post on X states:

“BREAKING NEWS :

Horrific #earthquake of 7.4 magnitude hit #Taiwan and #Japan. There is an alert that #Tsunami might hit them soon”.

Similar Posts:

Fact Check:

We started our investigation by watching the video thoroughly and dividing it into frames. We then performed a reverse image search on the frames, which led us to an X (formerly Twitter) post where a user had posted the same viral video on Sept 18, 2022. Notably, the post has the caption:

“#Tsunami warnings issued after Taiwan quake. #Taiwan #Earthquake #TaiwanEarthquake”

The same viral video was posted on several news media in September 2022.

The viral video was also shared on September 18, 2022 on NDTV News channel as shown below.

Conclusion:

To conclude, the viral video that claims to depict the 2024 Taiwan earthquake is from September 2022. A careful comparison of the older footage with the new evidence makes it clear that the video does not show the recent earthquake as stated. Hence, the viral video is misleading. It is important to validate information before sharing it on social media to prevent the spread of misinformation.

Claim: Video circulating on social media captures the recent 2024 earthquake in Taiwan.

Claimed on: X, Facebook, YouTube

Fact Check: Fake & Misleading, the video actually refers to an incident from 2022.

Executive Summary

A recent viral message on social media platforms such as X and Facebook claims that the Indian Government will start charging 18% GST on "good morning" texts from April 1, 2024. This is misinformation. The message includes a newspaper clipping and a video that both date from 2018. The newspaper article from Navbharat Times, published on March 2, 2018, was clearly intended as a joke. We also found that the ABP News video, originally aired on March 20, 2018, was part of a fact-checking segment that debunked the rumor of a GST on greetings.

Claims:

The claim circulating online suggests that the Government will start applying 18% GST on all "Good Morning" texts sent through mobile phones from April 1 this year. This tax would be added to monthly mobile bills.

Fact Check:

When we received the message, we first ran some relevant keyword searches. We found a Facebook video by ABP News titled Viral Sach: ‘Govt to impose 18% GST on sending good morning messages on WhatsApp?’

We watched the full video and found that the news is six years old. The Research Wing of CyberPeace Foundation also found the full version of the widely shared ABP News clip on its website, dated March 20, 2018. The video showed a newspaper clipping from Navbharat Times, published on March 2, 2018, which carried a humorous article with the saying "Bura na mano, Holi hain." The viral image is a cutout from the ABP News video that dates back to 2018.

Hence, the image that is spreading widely is fake and misleading.

Conclusion:

The viral message claiming that the government will impose GST (Goods and Services Tax) on "Good morning" messages is completely fake. The newspaper clipping used in the message is from an old comic article published by Navbharat Times, while the clip and image from ABP News have been taken out of context to spread false information.

Claim: India will introduce a Goods and Services Tax (GST) of 18% on all "good morning" messages sent through mobile phones from April 1, 2024.

Claimed on: Facebook, X

Fact Check: Fake; the claim originated as a comic article published by Navbharat Times on 2 March 2018


Executive Summary:

A widely shared claim on social media says that a 3D model of Chanakya, supposedly made by Magadha DS University, resembles MS Dhoni. However, fact-checking reveals that it is a 3D model of MS Dhoni, not Chanakya. The model was created by artist Ankur Khatri, and Magadha DS University does not appear to exist. Khatri uploaded the model on ArtStation, calling it an MS Dhoni likeness study.

Claims:

The image being shared is claimed to be a 3D rendering of the ancient philosopher Chanakya created by Magadha DS University. However, people are noticing a striking similarity to the Indian cricketer MS Dhoni in the image.

Fact Check:

After receiving the post, we ran a reverse image search on the image. It led us to the portfolio of a freelance character modeler named Ankur Khatri. We found the viral image there, titled “MS Dhoni likeness study”. We also found other character models in his portfolio.

Next, we searched for the university named in the claim, Magadha DS University, but found no university with that name. The closest match is Magadh University, located in Bodh Gaya, Bihar. We searched the internet for any such model made by Magadh University but found nothing. We then examined the freelance character artist's profile and found that he has a dedicated Instagram account, where he posted a detailed video of the creative process behind the MS Dhoni character model.

We concluded that the viral image is not a reconstruction of the Indian philosopher Chanakya but a likeness of cricketer MS Dhoni created by artist Ankur Khatri, not by any university named Magadha DS.

Conclusion:

The viral claim that the 3D model is a recreation of the ancient philosopher Chanakya by a university called Magadha DS University is false and misleading. In reality, the model is a digital artwork of former Indian cricket captain MS Dhoni, created by artist Ankur Khatri. There is no evidence that Magadha DS University exists. There is a university named Magadh University in Bodh Gaya, Bihar, but despite the similar name, we found no evidence of its involvement in the model's creation. Therefore, the claim is debunked, and the image is confirmed to be a depiction of MS Dhoni, not Chanakya.

Executive Summary:

A fake photo claiming to show the cricketer Virat Kohli watching a press conference by Rahul Gandhi before a match has been widely shared on social media. The original photo shows Kohli on his phone with no trace of Gandhi. The incident is claimed to have happened on March 21, 2024, before Kohli's team, Royal Challengers Bangalore (RCB), played Chennai Super Kings (CSK) in the Indian Premier League (IPL). Many social media accounts spread the false image, making it go viral.

Claims:

The viral photo falsely claims that Indian cricketer Virat Kohli was watching a press conference by Congress leader Rahul Gandhi on his phone before an IPL match. Many social media users shared it to suggest Kohli's interest in politics. The photo was shared on various platforms, including some online news websites.

Fact Check:

After coming across the viral image posted by social media users, we ran a reverse image search on it. This led us to the original image, posted by an Instagram account named virat__.forever_ on 21 March.

The caption of the Instagram post reads, “VIRAT KOHLI CHILLING BEFORE THE SHOOT FOR JIO ADVERTISEMENT COMMENCE.❤️”

Evidently, there is no image of Congress leader Rahul Gandhi on Virat Kohli's phone. Moreover, the viral image was published after the original image, which was posted on March 21.

Therefore, it is apparent that the viral image is an altered version of the original image shared on March 21.

Conclusion:

To sum up, the viral image has been altered from the original image, whose caption says that cricketer Virat Kohli was relaxing before a shoot for a Jio advertisement, not watching any politician's press conference. This shows that in the age of social media, where false information can spread quickly, critical thinking and fact-checking are more important than ever. It is crucial to check whether something is real before sharing it, to avoid spreading false stories.

Executive Summary:

In the digital age, misinformation and deceptive techniques pervade the internet and threaten people's safety and well-being. Recently, an alarming piece of misinformation has surfaced that promotes a fake Government subsidy scheme in the name of India Post. It serves criminals who prey on people's vulnerabilities, luring them with offers of help in exchange for their information. In this blog, we take a deep dive into this common fraud scheme. We walk through the stages that show how a victim is deceived and offer practical tips to avoid falling for it.

Introduction:

Digital communication reaches individuals faster than ever, and as a result misinformation and scam messages spread rapidly across the globe. People are susceptible to online scams because such messages borrow credibility from trusted names. In India, a recent wave of fake news lures targets with deceptive claims of a Government subsidy, offered mainly through India Post. These fraudulent schemes are frequently spread via social networks and messaging platforms, and exploit individuals' trust in reputable institutions to commit fraud and collect private data.

Understanding the Claim:

A claim is circulating, purportedly on behalf of the national Government, of a subsidy of $1066 for deserving residents. Individuals are told they will receive the subsidy once they complete a questionnaire received through social media. The questionnaire may have been designed to steal confidential information by taking advantage of naivety and carelessness.

The Deceptive Journey Unveiled:

Bogus Offer Presentation: The scheme lures people with a misleading message or advertisement designed to convince them to act immediately by instilling a sense of urgency. Such messages usually combine a persuasive tone with glowing claims to create an illusion of authenticity.

Questionnaire Requirement: After visitors land on the attractive content, they are directed to fill in a questionnaire that is supposedly required to process the financial assistance. The questions themselves ask for information that is not private in nature.

False Sense of Urgency: In addition to the pressure created by the fake news itself, a false deadline may be introduced to push people into compliance. This tactic is intended to put people under pressure so that they hand over information immediately, without thorough examination.

Data Harvesting Tactics: Although the scheme presents itself as financial help, its underlying motive is data harvesting. The information collected through the questionnaire can be extremely valuable to scammers, who can exploit it for a long time through identity theft, financial crimes and other malicious activity.

Analysis Highlights:

- At this point, there has been no official declaration or confirmation of such an offer from India Post or the Government. People must therefore be very careful when encountering such messages, because they are often used as lures in phishing attacks or misinformation campaigns. Before engaging with or forwarding such claims, it is always advisable to verify the information with trustworthy sources in order to protect oneself online and prevent the spread of false information.

- The campaign is hosted on a third-party domain instead of an official Government website, which raised suspicion. The domain was also registered very recently.

- Domain Name: ccn-web[.]buzz

- Registry Domain ID: D6073D14AF8D9418BBB6ADE18009D6866-GDREG

- Registrar WHOIS Server: whois[.]namesilo[.]com

- Registrar URL: www[.]namesilo[.]com

- Updated Date: 2024-02-27T06:17:21Z

- Creation Date: 2024-02-11T03:23:08Z

- Registry Expiry Date: 2025-02-11T03:23:08Z

- Registrar: NameSilo, LLC

- Name Server: tegan[.]ns[.]cloudflare[.]com

- Name Server: nikon[.]ns[.]cloudflare[.]com

Note: The cybercriminals used Cloudflare to mask the actual IP address of the fraudulent website.
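The "recently registered" observation can be checked directly from the WHOIS timestamps listed above. The following is a minimal sketch, not the method used in the investigation: the helper names, the 30-day threshold, and the `.gov.in` suffix check are all assumptions made for illustration. It uses the record's Updated Date as the reference point, since the date of the analysis is not stated.

```python
from datetime import datetime, timezone

# Timestamp layout used in the WHOIS record above, e.g. 2024-02-11T03:23:08Z
WHOIS_TIME_FORMAT = "%Y-%m-%dT%H:%M:%SZ"


def refang(indicator: str) -> str:
    """Turn a defanged indicator such as 'ccn-web[.]buzz' into 'ccn-web.buzz'."""
    return indicator.replace("[.]", ".")


def parse_whois_time(value: str) -> datetime:
    """Parse a WHOIS timestamp and mark it as UTC (the trailing 'Z')."""
    return datetime.strptime(value, WHOIS_TIME_FORMAT).replace(tzinfo=timezone.utc)


def domain_age_days(creation_date: str, observed_at: str) -> int:
    """Whole days between the creation date and the reference timestamp."""
    return (parse_whois_time(observed_at) - parse_whois_time(creation_date)).days


def looks_recently_registered(creation_date: str, observed_at: str,
                              threshold_days: int = 30) -> bool:
    """Hypothetical heuristic: flag domains younger than threshold_days."""
    return domain_age_days(creation_date, observed_at) < threshold_days


def has_official_suffix(host: str, suffix: str = ".gov.in") -> bool:
    """Assumed check: official Indian Government sites use the given suffix."""
    return refang(host).lower().endswith(suffix)


created = "2024-02-11T03:23:08Z"   # Creation Date from the record
updated = "2024-02-27T06:17:21Z"   # Updated Date, used as the reference point

print(domain_age_days(created, updated))            # 16
print(looks_recently_registered(created, updated))  # True
print(has_official_suffix("ccn-web[.]buzz"))        # False
```

A young domain on an unrelated top-level domain is not proof of fraud on its own, but together with the missing official announcement it supports the suspicion described above.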

CyberPeace Advisory:

Verification and Vigilance: Caution and skepticism are fully warranted in this case. Do not fall prey to this criminal act. Examine the claims and the facts provided, and consult credible sources before disclosing any information.

Official Channels: Governments usually announce subsidies and assistance programs through reliable channels such as official websites and other legitimate outlets. Be cautious of schemes that do not follow these established protocols.

Educational Awareness: Raising awareness through education about online scams and fraudulent techniques should be treated as a primary requirement. By equipping individuals with knowledge and skills, we can collectively be armed with the information needed to prevent such schemes from spreading.

Reporting and Action: If you come across suspicious or fraudulent messages, report them immediately to the authorities and relevant organizations. Your swift action not only protects you but also helps others avoid the costs of related security compromises.

Conclusion:

The rise of the ‘Indian Post Countrywide - government subsidy fake news’ is a stern warning about the dangers present in today's virtual ecosystem. Staying wise and sharp about scams means reacting quickly to such attempts and following the steps set out in CyberPeace advisories; by doing so, we contribute to a safer cyberspace for everyone. Likewise, the ability to judge critically and remain alert is important in defeating the variety of tricks offenders use to mislead you online.

Executive Summary:

A circulating picture said to show United States President Joe Biden wearing a military uniform during a meeting with military officials has been found to be AI-generated. The viral image is shared with the false claim that it shows President Biden authorizing US military action in the Middle East. The CyberPeace Research Team has identified that the photo was produced by generative AI and is not real. Multiple visual discrepancies in the picture mark it as a product of AI.

Claims:

A viral image claiming to be US President Joe Biden wearing a military outfit during a meeting with military officials has been created using artificial intelligence. This picture is being shared on social media with the false claim that it is of President Biden convening to authorize the use of the US military in the Middle East.

Similar Post:

Fact Check:

CyberPeace Research Team discovered that the photo of US President Joe Biden in a military uniform at a meeting with military officials was made using generative-AI and is not authentic. There are some obvious visual differences that plainly suggest this is an AI-generated shot.

First, President Biden's eyes are completely black; second, the military officials' faces are blended; third, the phone is standing upright without any support.

We then ran the image through an AI image detection tool.

The tool predicted 4% human and 96% AI, which indicates that it is deepfake content.

We then tested it with another tool, Hive Detector.

Hive Detector rated the image as 100% AI-generated, which indicates that it is likely deepfake content.

Conclusion:

Thus, the growth of AI-produced content is a challenge in determining fact from fiction, particularly in the sphere of social media. In the case of the fake photo supposedly showing President Joe Biden, the need for critical thinking and verification of information online is emphasized. With technology constantly evolving, it is of great importance that people be watchful and use verified sources to fight the spread of disinformation. Furthermore, initiatives to make people aware of the existence and impact of AI-produced content should be undertaken in order to promote a more aware and digitally literate society.

- Claim: A circulating picture which is said to be of United States President Joe Biden wearing military uniform during a meeting with military officials

- Claimed on: X

- Fact Check: Fake

Executive Summary:

False information is spreading across social media after users shared a mistranslated video, claiming that Italian Prime Minister Giorgia Meloni congratulated Indian Hindus on the inauguration of the Ram Temple in Ayodhya, Uttar Pradesh. Our CyberPeace Research Team's investigation clearly reveals that the claim is baseless. In the video, Meloni is actually thanking those who wished her a happy birthday.

Claims:

An X (formerly Twitter) user shared a 13-second video of Italian Prime Minister Giorgia Meloni speaking in Italian, claiming that she was congratulating India on the construction of the Ram Mandir. The caption reads:

“Italian PM Giorgia Meloni Message to Hindus for Ram Mandir #RamMandirPranPratishta. #Translation : Best wishes to the Hindus in India and around the world on the Pran Pratistha ceremony. By restoring your prestige after hundreds of years of struggle, you have set an example for the world. Lots of love.”

Fact Check:

The CyberPeace Research Team translated the video using Google Translate. We first extracted a transcript of the video using an AI transcription tool and then ran it through Google Translate; the result was quite different from the claim.

The Translation reads, “Thank you all for the birthday wishes you sent me privately with posts on social media, a lot of encouragement which I will treasure, you are my strength, I love you.”

This confirms that it was not a congratulatory message but a thank-you message to all those who sent birthday wishes to the Prime Minister.

We then ran a reverse image search on frames of the video and found the original video on the Prime Minister's official X handle, uploaded on 15 Jan, 2024 with the caption “Grazie. Siete la mia”, which translates to “Thank you. You are my strength!”

Conclusion:

The 13-second video shared by the user reached a wide audience on X, and as a result many users shared it with similar captions. A misunderstanding that starts with one post can spread everywhere. The claim made in the caption of the post is totally misleading and has no connection with what Italian Prime Minister Giorgia Meloni actually says in the video. Hence, the post is fake and misleading.

- Claim: Italian Prime Minister Giorgia Meloni congratulated Hindus in the context of Ram Mandir

- Claimed on: X

- Fact Check: Fake

Executive Summary:

Old footage of Indian cricketer Virat Kohli celebrating Ganesh Chaturthi in September 2023 is being shared with the false claim that it shows him at the Ram Mandir consecration ceremony in Ayodhya on January 22. The Hindi newspaper Dainik Bhaskar and Gujarati newspaper Divya Bhaskar also carried the now-viral video in their respective editions on January 23, 2024, amplifying the false claim. After a thorough investigation, we found that the video is old and shows the cricketer attending a Ganesh Chaturthi celebration.

Claims:

Many social media posts, including those from news outlets such as Dainik Bhaskar and the Gujarati newspaper Divya Bhaskar, claim that the video shows Virat Kohli attending the Ram Mandir consecration ceremony in Ayodhya on January 22. Our investigation found that the video shows Virat Kohli attending a Ganesh Chaturthi celebration in September 2023.

The caption of the Dainik Bhaskar e-paper reads, “क्रिकेटर विराट कोहली भी नजर आए” ("Cricketer Virat Kohli was also seen").

Fact Check:

The CyberPeace Research Team ran a reverse image search on the video, which returned several earlier posts showing Kohli in the same black outfit. Among them, a Bollywood entertainment Instagram profile named Bollywood Society had shared the same video on its page on 20 September, 2023, with the caption “Virat Kohli snapped for Ganapaati Darshan”.

Taking a cue from this, we ran keyword searches with the information we had and found an article by Free Press Journal. According to the article, Virat Kohli visited the residence of Shiv Sena leader Rahul Kanal to seek the blessings of Lord Ganpati. The viral video and the claim made by the news outlets are therefore false and misleading.

Conclusion:

The video shared in viral posts and by news outlets is old footage of Virat Kohli attending a Ganesh Chaturthi celebration in 2023, not footage from the recent Ram Mandir Pran Pratishtha. We also found no confirmation that Virat Kohli attended the ceremony in Ayodhya on 22 January. Hence, we found this claim to be fake.

- Claim: Virat Kohli attending the Ram Mandir consecration ceremony in Ayodhya on January 22

- Claimed on: YouTube, X

- Fact Check: Fake

Executive Summary:

A viral photo of a photographer breaking down in tears is not connected to the Ram Mandir opening. Social media users are sharing a collage of images of the recently consecrated Lord Ram idol at the Ayodhya Ram Mandir, along with a purported shot of a photographer crying at the sight of the deity. A Facebook post sharing the collage says, "Even the cameraman couldn't stop his emotions." The CyberPeace Research team found that the photo was taken during the AFC Asian Cup football tournament in 2019. During a match between Iraq and Qatar, an Iraqi photographer started crying because Iraq had lost and was out of the competition.

Claims:

The claim is that the photographer in the widely shared images broke down in tears on seeing the idol of Lord Ram during the Ayodhya Ram Mandir's consecration. The collage was also shared by many users on other social media platforms such as X, Reddit and Facebook. A Facebook user shared it with the following caption:

Fact Check:

The CyberPeace Research team ran a reverse image search on the photographer's picture, which led to several memes using the image. From there we reached a Pinterest post that reads, “An Iraqi photographer as his team is knocked out of the Asian Cup of Nations”.

Taking a cue from this, we ran keyword searches to find the actual news behind the image. We landed on the official Asian Cup X (formerly Twitter) handle, where the image was shared 5 years ago on 24 Jan, 2019. The post reads, “Passionate. Emotional moment for an Iraqi photographer during the Round of 16 clash against ! #AsianCup2019”

This confirms the origin of the image. While investigating, we also found several other pieces of misinformation that use the same photographer's image with different captions.

Conclusion:

The viral image of the photographer claimed to be associated with the Ram Mandir opening is misleading. It is a 5-year-old image of an Iraqi photographer crying during the Asian Cup football tournament, not at the recent Ram Mandir opening. Netizens are advised not to believe or share such misinformation on social media.

- Claim: A person in the widely shared images broke down in tears at seeing the icon of Lord Ram during the Ayodhya Ram Mandir's consecration.

- Claimed on: Facebook, X, Reddit

- Fact Check: Fake

Executive Summary:

A video purporting to be from Lal Chowk in Srinagar, which features Lord Ram's hologram on a clock tower, has gone viral on the internet. The CyberPeace Research Team discovered that the footage is from Dehradun, Uttarakhand, not Jammu and Kashmir.

Claims:

A viral 48-second clip is being shared online, mostly on X and Facebook. The video shows a car passing a clock tower bearing an image of Lord Ram. As the car moves forward, a screen showcasing songs about Lord Ram is visible at the side of the road.

The claim is that the video is from Srinagar, Kashmir.

Similar Post:

Fact Check:

The CyberPeace Research Team found that the claim is false. First, we ran a keyword search based on the caption and found that the clock tower in Srinagar does not resemble the one in the video.

We found an NDTV article about the clock tower at Lal Chowk, Srinagar, which describes it as the only clock tower standing in the middle of the road. This gave us a preliminary indication that the video is not from Srinagar. We then broke the video down into frames and ran a reverse image search on them.

This led to another video showing a tower of similar structure in Dehradun.

Taking a cue from this, we searched for the tower in Dehradun and compared it with the video. The comparison confirmed that the tower is the clock tower in Paltan Bazar, Dehradun, and that the video is from Dehradun, not Srinagar.

Conclusion:

After a thorough investigation of the video and its origin, we found that the visual of Lord Ram on the clock tower is from Dehradun, not Srinagar. The claim by internet users that the visual is from Srinagar is baseless and constitutes misinformation.

- Claim: The Hologram of Lord Ram on the Clock Tower of Lal Chowk, Srinagar

- Claimed on: Facebook, X

- Fact Check: Fake


Executive Summary:

In late January 2024, India saw the inauguration of the Ram Mandir, a historic event of cultural and spiritual significance that drew large numbers of people. Many communities regard the occasion as a milestone and a moment of unity. Amid this genuine celebration, there has been a disconcerting rise in malpractices designed to exploit people's enthusiasm. This report aims to raise awareness and offer guidelines on avoiding fraudulent activities that circulate under the guise of celebrating the Ram Mandir inauguration. The example examined here is a scam that offers a fake free mobile recharge and falsely attributes it to the Prime Minister of India and UP Chief Minister Yogi Adityanath.

False Claim:

According to a message circulating on WhatsApp, both the PM and the UP CM are offering all Indians a free Rs.749 mobile recharge for three months to commemorate the inauguration of the Ram Mandir in Ayodhya in January 2024. The message prompts recipients to click the blue link provided in order to recharge their numbers.

The Deceptive Scheme:

We were informed of a circulating link (https://mahacashhback[.]in/#1705296887543) that claims to offer a ₹719 recharge in honor of the Ram Mandir inauguration. This link does not belong to any legitimate initiative connected with the inauguration; it exploits public excitement and trust for personal gain.

Analyzing the Fraudulent Campaign:

- Exploiting Emotional Significance: Scammers are using the cultural and religious significance of the Ram Mandir inauguration as a cover to lure people into their fraudulent scheme.

- Fake Recharge Offers: The circulated link offers a recharge under the pretence of celebrating the inauguration. Such offers should be treated with caution and verified through authorized channels.

- Bogus Landing Pages and Comments: The landing page behind the link typically shows images of the Ram Mandir and fake comments to create an appearance of legitimacy. Legitimate projects linked to major events rely on official and trustworthy communication channels.

- Data Collection Attempts: Users may be asked for personal details such as mobile numbers under the false pretext of receiving a recharge. Legitimate organizations follow secure protocols for data collection and communication.

- Sharing for Activation: After entering their data, users are prompted to share the link with others, supposedly to “activate” the recharge. This is a common trick among fraudsters that keeps the scam spreading through misleading messages.

What Did We Analyze?

- At the time of writing, there has been no official declaration or confirmation of such an offer on any official channel.

- The campaign is hosted on a third-party domain rather than an official government website, which raised suspicion. The domain was also registered fairly recently. Its registration details are as follows:

- Domain Name: mahacashhback[.]in

- Registry Domain ID: D1FCF1B5751244310A2FA723B62CE83E9-IN

- Registrar URL: https://publicdomainregistry[.]com/

- Registrar: Endurance Digital Domain Technology LLP

- Registrar IANA ID: 801217

- Updated Date: 2024-01-18T08:09:00Z

- Creation Date: 2023-05-27T12:01:17Z

- Registry Expiry Date: 2024-05-27T12:01:17Z

- Registrant Organization: Sachin Kumar

- Registrant State/Province: Bihar

- Name Server: ns2.suspended-domain[.]com

- Name Server: ns1.suspended-domain[.]com
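The registration details above can also be checked programmatically. The following is a minimal sketch, not the tooling used in this investigation: it assumes the WHOIS output has already been saved as plain `Key: Value` lines, and the helper names (`parse_whois`, `summarize`) are hypothetical. It derives how old the domain was at its last update, how long the registration runs, and whether the name servers suggest a suspended domain.

```python
from datetime import datetime

# A subset of the WHOIS fields listed above, kept defanged as in the report.
WHOIS_TEXT = """\
Domain Name: mahacashhback[.]in
Updated Date: 2024-01-18T08:09:00Z
Creation Date: 2023-05-27T12:01:17Z
Registry Expiry Date: 2024-05-27T12:01:17Z
Name Server: ns2.suspended-domain[.]com
Name Server: ns1.suspended-domain[.]com
"""


def parse_whois(text):
    """Collect 'Key: Value' lines into a dict of lists (keys may repeat)."""
    record = {}
    for line in text.splitlines():
        key, sep, value = line.partition(": ")
        if sep:
            record.setdefault(key.strip(), []).append(value.strip())
    return record


def parse_ts(value):
    """Parse the UTC timestamp format used in the record above."""
    return datetime.strptime(value, "%Y-%m-%dT%H:%M:%SZ")


def summarize(record):
    """Derive a few simple indicators from a parsed WHOIS record."""
    created = parse_ts(record["Creation Date"][0])
    updated = parse_ts(record["Updated Date"][0])
    expires = parse_ts(record["Registry Expiry Date"][0])
    return {
        "age_days_at_update": (updated - created).days,
        "registration_days": (expires - created).days,
        "suspended": any(
            "suspended" in ns for ns in record.get("Name Server", [])
        ),
    }


summary = summarize(parse_whois(WHOIS_TEXT))
print(summary)
```

For this record, the sketch reports a domain less than a year old, registered for a single year, with name servers that point to a suspended-domain host. None of these indicators proves fraud on its own, but together they support the suspicion described above.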

CyberPeace Advisory and Best Practices:

- Verify Authenticity: Authenticate any offers or promotions linked to the Ram Mandir inauguration through official channels.

- Exercise Caution with Links: Do not engage with questionable URLs, in particular those without secure encryption (HTTPS). Official announcements and initiatives are disseminated through secure outlets.

- Protect Personal Information: Do not provide personal information and do not respond to unsolicited offers on non-official platforms. Genuine organizations employ safe and official routes for communication.

- Report Fraudulent Activity: When you see scams or fraudulent activities, immediately report them to the authorities and platforms so that no one falls into the trap.
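As a rough illustration of the link checks in this advisory, the sketch below inspects a URL's scheme and host. It is a simplified heuristic under stated assumptions: the `OFFICIAL_SUFFIXES` tuple is an illustrative allowlist chosen for this example, and the function names are hypothetical. The link in this report does use the `https` scheme, so the presence of HTTPS alone should not be read as a sign of legitimacy.

```python
from urllib.parse import urlparse

# Assumption for illustration only: treat these suffixes as "official".
# A real check would need a maintained list of verified domains.
OFFICIAL_SUFFIXES = (".gov.in", ".nic.in")


def refang(url):
    """Turn a defanged URL (with '[.]') back into a parseable one."""
    return url.replace("[.]", ".")


def assess_link(url):
    """Return basic facts about a link; does not fetch the URL."""
    parsed = urlparse(refang(url))
    host = (parsed.hostname or "").lower()
    return {
        "host": host,
        "uses_https": parsed.scheme == "https",
        "official_domain": host.endswith(OFFICIAL_SUFFIXES),
    }


result = assess_link("https://mahacashhback[.]in/#1705296887543")
print(result)
```

The check deliberately never opens the link. It only shows that the host is a third-party domain outside the assumed allowlist, which is the signal that should prompt verification through official channels.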

Conclusion:

In the coming days, let us stay alert to fraudulent schemes that exploit notable events or create false impressions. Individuals should stay informed, verify sources, and protect their personal information to help keep the internet safe. Authentic initiatives linked to notable events are communicated through official and secure channels. When an offer sounds too good to be true, exercise due caution and check its genuineness to avoid being defrauded. Based on our research, we found this campaign to be fake.