#FactCheck - Viral Claim About Nitish Kumar’s Resignation Over UGC Protests Is Misleading

Executive Summary

A news video is being widely circulated on social media with the claim that Bihar Chief Minister Nitish Kumar has resigned from his post in protest against the ongoing UGC-related controversy. Several users are sharing the clip while alleging that Kumar stepped down after opposing the issue. However, CyberPeace research has found the claim to be false. The research revealed that the video being shared is from 2022 and has no connection whatsoever with the UGC or any recent protests related to it. An old video has been misleadingly linked to a current issue to spread misinformation on social media.

Claim:

An Instagram user shared a video on January 26 claiming that Bihar Chief Minister Nitish Kumar had resigned. The post further alleged that the news was first aired on Republic channel and that Kumar had submitted his resignation to then-Governor Phagu Chauhan. The link to the post, its archived version, and screenshots can be seen below.

Fact Check:

To verify the claim, CyberPeace first conducted a keyword-based search on Google. The search found no report of any such resignation from a credible or established media organisation, which indicates that the viral claim lacks authenticity.

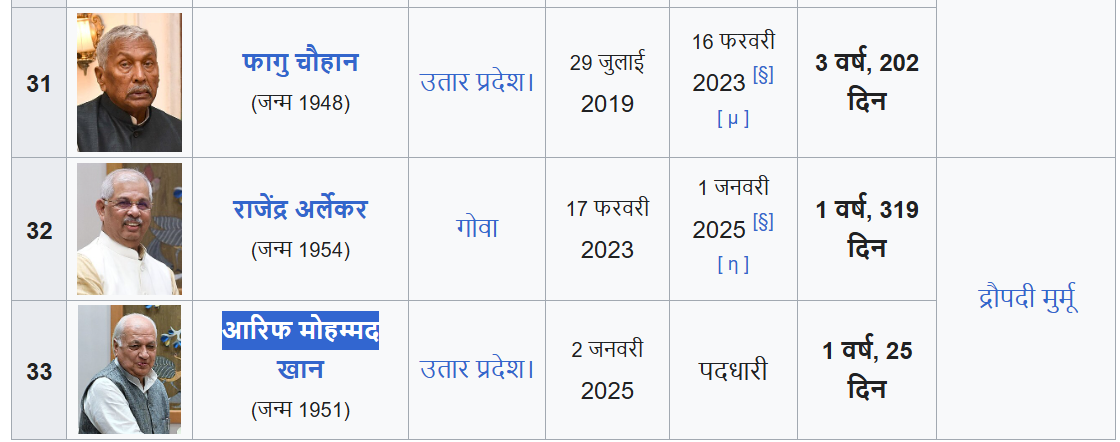

Further, the voiceover in the viral video states that Nitish Kumar handed over his resignation to Governor Phagu Chauhan. However, Phagu Chauhan ceased to be the Governor of Bihar in February 2023. The current Governor of Bihar is Arif Mohammad Khan, making the claim in the video factually incorrect and misleading.

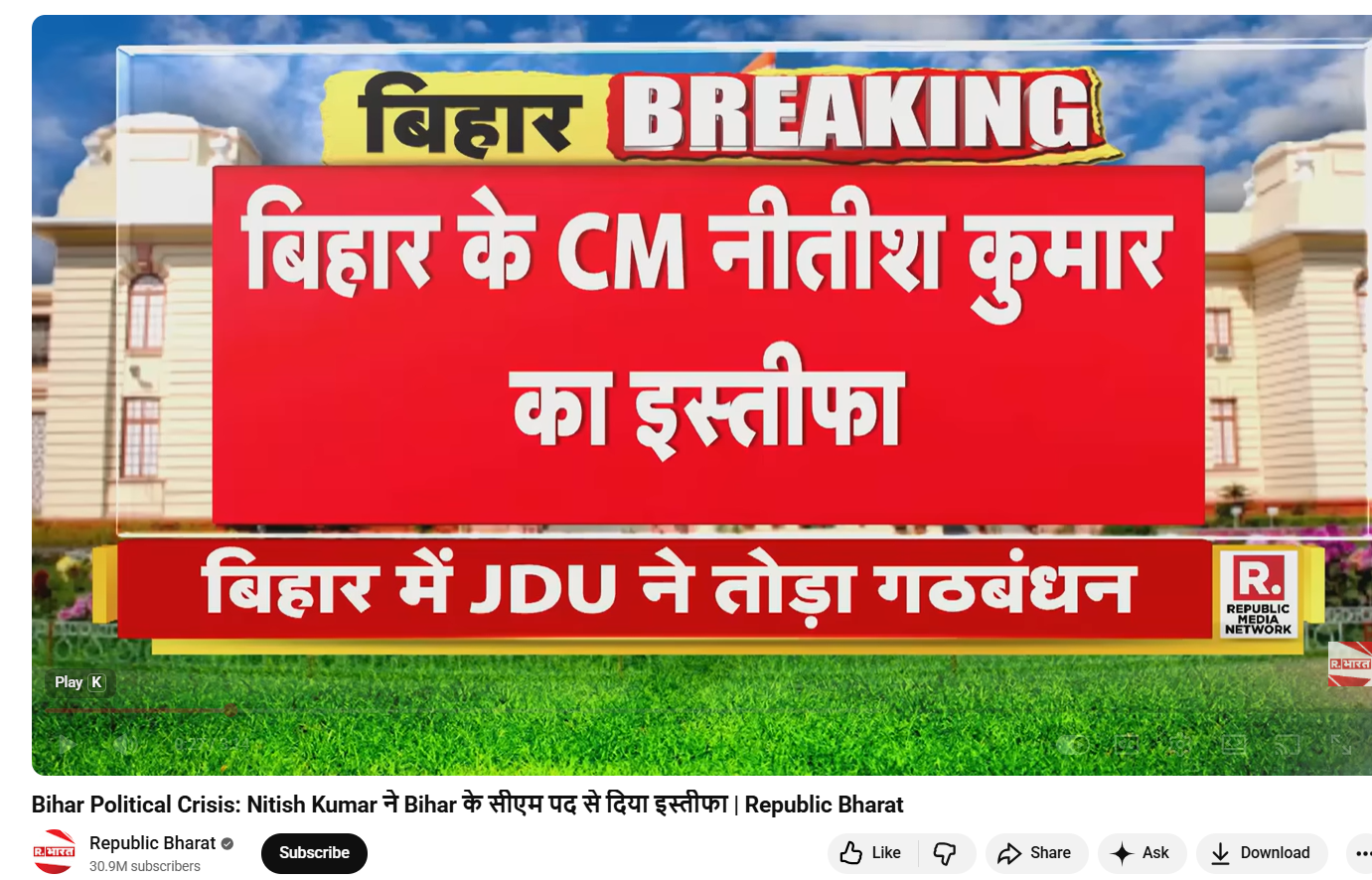

In the next step, keyframes from the viral video were extracted and reverse-searched using Google Lens. This led to the official YouTube channel of Republic Bharat, where the full version of the same video was found. The video was uploaded on August 9, 2022. This clearly establishes that the clip circulating on social media is not recent and is being shared out of context.

Conclusion

CyberPeace’s research confirms that the viral video claiming Nitish Kumar resigned over the UGC issue is false. The video dates back to 2022 and has no link to the current UGC controversy. An old political video has been deliberately circulated with a misleading narrative to create confusion on social media.
