#FactCheck: AI-Generated Audio Falsely Claims COAS Admitted to Loss of 6 Jets and 250 Soldiers

Executive Summary:

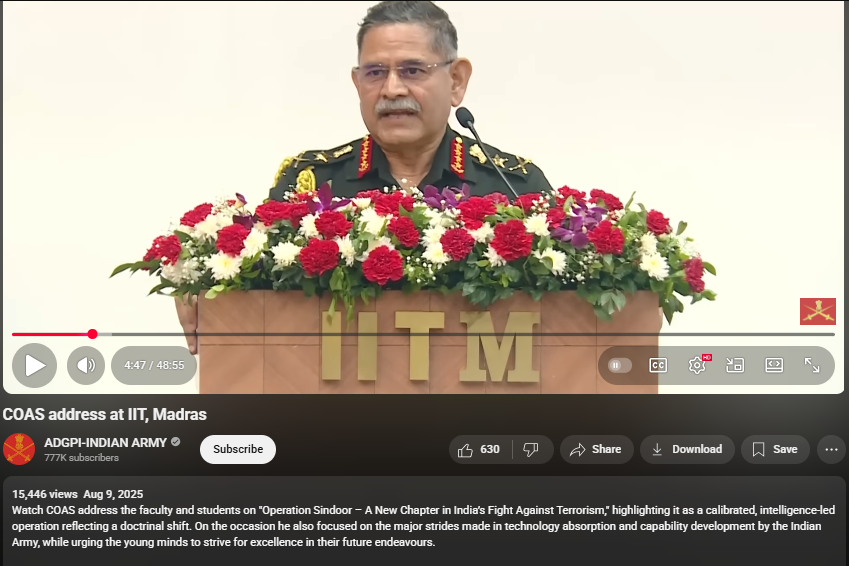

A viral video (archive link) claims General Upendra Dwivedi, Chief of Army Staff (COAS), admitted to losing six Air Force jets and 250 soldiers during clashes with Pakistan. Verification revealed the footage is from an IIT Madras speech, with no such statement made. AI detection confirmed parts of the audio were artificially generated.

Claim:

The claim in question is that General Upendra Dwivedi, Chief of Army Staff (COAS), admitted to losing six Indian Air Force jets and 250 soldiers during recent clashes with Pakistan.

Fact Check:

Upon conducting a reverse image search on key frames from the video, it was found that the original footage is from IIT Madras, where the Chief of Army Staff (COAS) was delivering a speech. The video is available on the official YouTube channel of ADGPI – Indian Army, published on 9 August 2025, with the description:

“Watch COAS address the faculty and students on ‘Operation Sindoor – A New Chapter in India’s Fight Against Terrorism,’ highlighting it as a calibrated, intelligence-led operation reflecting a doctrinal shift. On the occasion, he also focused on the major strides made in technology absorption and capability development by the Indian Army, while urging young minds to strive for excellence in their future endeavours.”

A review of the full speech revealed no reference to the destruction of six jets or the loss of 250 Army personnel. This indicates that the circulating claim is not supported by the original source and may contribute to the spread of misinformation.

Further analysis using AI detection tools such as Hive Moderation indicated that portions of the voice track, interspersed within the genuine speech, are AI-generated.

Conclusion:

The claim is baseless. The video is a manipulated creation that combines genuine footage of General Dwivedi’s IIT Madras address with AI-generated audio to fabricate a false narrative. No credible source corroborates the alleged military losses.

- Claim: AI-Generated Audio Falsely Claims COAS Admitted to Loss of 6 Jets and 250 Soldiers

- Claimed On: Social Media

- Fact Check: False and Misleading

Related Blogs

Executive Summary:

A viral claim circulating on social media says that Anant Ambani and Radhika Merchant wore clothes made of pure gold during their pre-wedding cruise party in Europe. Close analysis revealed inconsistencies in image quality, particularly between the face, neck, and hands and the supposedly gold clothing, which point to possible AI manipulation. A keyword search found no credible news reports or authentic images supporting the claim. Further analysis using the AI detection tools TrueMedia and Hive Moderation found substantial evidence of AI fabrication, with a high probability of the image being AI-generated or a deepfake. Additionally, a photo from an earlier event at Jio World Plaza matches the pose in the manipulated image, further refuting the claim and indicating that the image of Anant Ambani and Radhika Merchant wearing golden outfits during their pre-wedding cruise was digitally altered.

Claims:

Anant Ambani and Radhika Merchant wore clothes made of pure gold during their pre-wedding cruise party in Europe.

Fact Check:

When we examined the posts, we found anomalies of the kind typically seen in edited or AI-manipulated images, particularly between the face, neck, and hands.

Such inconsistencies are unusual in a genuine image. We therefore checked the image with the Hive Moderation AI detection tool, which returned a score of 95.9% for AI manipulation.

We also checked the image with another widely used AI detection tool, TrueMedia, which indicated with 100% confidence that it was made using AI.

These results indicate that the image is AI-generated. To find the original image that was edited, we ran a keyword search and found an image with the same pose as the manipulated one, titled “Radhika Merchant, Anant Ambani pose with Mukesh Ambani at Jio World Plaza opening”. Comparing the two images shows that the viral image is a digitally altered version of this photo.

Hence, the viral image is digitally altered and has no connection with the second pre-wedding cruise party in Europe. The viral image is therefore fake and misleading.

Conclusion:

The claim that Anant Ambani and Radhika Merchant wore clothes made of pure gold at their pre-wedding cruise party in Europe is false. The analysis of the image showed signs of manipulation, and the lack of credible news reports or authentic photos supports the conclusion that it was digitally altered. AI detection tools indicated a high probability that the image was fake, and a comparison with a genuine photo from another event showed that the image had been edited. Therefore, the claim is false and misleading.

- Claim: Anant Ambani and Radhika Merchant wore clothes made of pure gold during their pre-wedding cruise party in Europe.

- Claimed on: YouTube, LinkedIn, Instagram

- Fact Check: Fake & Misleading

Executive Summary:

A widely circulated social media post claims that the Government of India has reportedly opened an account—Army Welfare Fund Battle Casualty—at Canara Bank to support the modernization of the Indian Army and assist injured or martyred soldiers. Citizens can voluntarily contribute starting from ₹1, with no upper limit. The fund is said to have been launched based on a suggestion by actor Akshay Kumar, which was later acknowledged by the Prime Minister of India through Mann Ki Baat and social media platforms. However, the fact is that no such decision has been taken by the cabinet recently, and no such decision has been officially announced.

Claim:

A viral social media post claims that the Government of India has launched a new initiative aimed at modernizing the Indian Army and supporting battle casualties through public donations. According to the post, a special bank account has been created to enable citizens to contribute directly toward the procurement of arms and equipment for the armed forces.

It further states that this initiative was introduced following a Cabinet decision and was inspired by a suggestion from Bollywood actor Akshay Kumar, which was reportedly acknowledged by the Prime Minister during his Mann Ki Baat address.

The post encourages individuals to donate any amount starting from ₹1, with no upper limit, and estimates that widespread public participation could generate up to ₹36,000 crore annually to support the armed forces. It also lists two bank accounts—one at Canara Bank (Account No: 90552010165915) and another at State Bank of India (Account No: 40650628094)—allegedly designated for the "Armed Forces Battle Casualties Welfare Fund."

The statement said, “The government established a range of welfare schemes for soldiers killed or disabled while undertaking military operations in recent combat. In 2020, the government established the 'Armed Forces Battle Casualty Welfare Fund (AFBCWF)', which is used to provide immediate financial assistance to families of soldiers, sailors and airmen who lose their lives or sustain grievous injury as a result of active military service.”

We also found a similar post from the past, which can be seen here.

Fact Check:

The Press Information Bureau (PIB) has responded to the viral post, stating that it is misleading and that the Government has not launched any initiative inviting public donations for the modernisation of the Indian Army or for purchasing weapons for the army. The only known official initiative by the Ministry of Defence is the "Armed Forces Battle Casualties Welfare Fund", which was set up to support the families of soldiers who have been martyred or grievously disabled in the line of duty, not to buy military equipment.

In addition, the bank account details mentioned in the viral post are false, and payments made to the account have been dishonoured.

The post also falsely suggests that actor Akshay Kumar is promoting or heading this initiative; there is no official record or announcement of him leading or sponsoring it. That said, in 2017 Akshay Kumar encouraged public contributions of just one rupee per month to support the armed forces through a web portal called “Bharat Ke Veer”. The platform was developed in partnership with the Ministry of Home Affairs.

Citizens should rely only on official government sources and ignore misleading messages on social media platforms.

Conclusion:

The viral social media post suggesting that the Government of India has initiated a donation drive for the modernisation of the Indian Army and the purchase of weapons is misleading and inaccurate. According to the Press Information Bureau (PIB), no such initiative has been launched by the government, and the bank account details provided in the post are false, with reported cases of dishonoured transactions. The only legitimate initiative is the Armed Forces Battle Casualties Welfare Fund (AFBCWF), which provides financial assistance to the families of soldiers who are martyred or seriously injured in the line of duty. While actor Akshay Kumar played a key role in launching the Bharat Ke Veer portal in 2017 to support paramilitary personnel, he has no official connection to the viral claims.

- Claim: The government has launched a public donation drive to fund Army weapon purchases.

- Claimed On: Social Media

- Fact Check: False and Misleading

The World Economic Forum reported that AI-generated misinformation and disinformation ranked as the second most likely threat to present a material crisis on a global scale in 2024, cited by 53% of respondents (surveyed in Sept. 2023). Artificial intelligence is automating the creation of fake news faster than it can be fact-checked. It is spurring an explosion of web content that mimics factual articles but instead disseminates false information about grave themes such as elections, wars and natural disasters.

According to a report by the Centre for the Study of Democratic Institutions, a Canadian think tank, the most prevalent effect of Generative AI is the ability to flood the information ecosystem with misleading and factually incorrect content. As reported by Democracy Reporting International during the 2024 European Union elections, Google's Gemini, OpenAI’s ChatGPT 3.5 and 4.0, and Microsoft’s AI interface ‘CoPilot’ were inaccurate one-third of the time when asked about election data. An innovative regulatory approach is therefore needed, such as regulatory sandboxes, which can address these challenges while encouraging responsible AI innovation.

What Is AI-driven Misinformation?

False or misleading information created, amplified, or spread using artificial intelligence technologies is AI-driven misinformation. Machine learning models are leveraged to automate and scale the creation of false and deceptive content. Some examples are deep fakes, AI-generated news articles, and bots that amplify false narratives on social media.

The biggest challenge is in the detection and management of AI-driven misinformation. It is difficult to distinguish AI-generated content from authentic content, especially as these technologies advance rapidly.

AI-driven misinformation can influence elections, public health, and social stability by spreading false or misleading information. While public adoption of the technology has undoubtedly been rapid, it is yet to achieve true acceptance and actually fulfill its potential in a positive manner because there is widespread cynicism about the technology - and rightly so. The general public sentiment about AI is laced with concern and doubt regarding the technology’s trustworthiness, mainly due to the absence of a regulatory framework maturing on par with the technological development.

Regulatory Sandboxes: An Overview

Regulatory sandboxes are regulatory tools that allow businesses to test and experiment with innovative products, services or business models under the supervision of a regulator for a limited period. They work by creating a controlled environment in which regulators allow businesses to test new technologies or business models under relaxed regulations.

Regulatory sandboxes have been used in many industries, with a recent example being fintech, where the UK’s Financial Conduct Authority runs a sandbox. These models have been known to encourage innovation while allowing regulators to understand emerging risks. Lessons from the fintech sector show that the benefits of regulatory sandboxes include facilitating firm financing and market entry and increasing speed-to-market by reducing administrative and transaction costs. For regulators, testing in sandboxes informs policy-making and regulatory processes. Given this success in the fintech industry, regulatory sandboxes could be adapted to AI, particularly for overseeing technologies that have the potential to generate or spread misinformation.

The Role of Regulatory Sandboxes in Addressing AI Misinformation

Regulatory sandboxes can be used to test AI tools designed to identify or flag misinformation without the risks associated with immediate, wide-scale implementation. Stakeholders such as AI developers, social media platforms, and regulators can collaborate within the sandbox to refine the detection algorithms and evaluate their effectiveness as content moderation tools.
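One way to picture what evaluating a detection tool inside a sandbox could involve is to compare the tool's flags with labels assigned by human fact-checkers and compute precision and recall. The sketch below is illustrative only: the function name `evaluate_detector`, the `Evaluation` type, and the sample data are all hypothetical and do not describe any actual sandbox procedure.

```python
from dataclasses import dataclass


@dataclass
class Evaluation:
    precision: float  # share of flagged items that were truly misinformation
    recall: float     # share of true misinformation items that were flagged


def evaluate_detector(predicted: list[bool], actual: list[bool]) -> Evaluation:
    """Compare detector flags against human fact-checker labels."""
    if len(predicted) != len(actual):
        raise ValueError("predicted and actual must have the same length")
    pairs = list(zip(predicted, actual))
    true_pos = sum(p and a for p, a in pairs)
    false_pos = sum(p and not a for p, a in pairs)
    false_neg = sum(a and not p for p, a in pairs)
    flagged = true_pos + false_pos
    relevant = true_pos + false_neg
    # Fall back to 0.0 when nothing was flagged or nothing was misinformation.
    precision = true_pos / flagged if flagged else 0.0
    recall = true_pos / relevant if relevant else 0.0
    return Evaluation(precision, recall)


# Hypothetical sandbox trial: six posts labelled by fact-checkers.
detector_flags = [True, True, False, True, False, False]
checker_labels = [True, False, False, True, True, False]
result = evaluate_detector(detector_flags, checker_labels)
print(f"precision={result.precision:.2f} recall={result.recall:.2f}")
```

Low precision would mean the tool flags too much legitimate content, while low recall would mean it misses too much misinformation; a sandbox trial lets both be observed before wide deployment.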

These sandboxes can help balance the need for innovation in AI and the necessity of protecting the public from harmful misinformation. They allow the creation of a flexible and adaptive framework capable of evolving with technological advancements and fostering transparency between AI developers and regulators. This would lead to more informed policymaking and building public trust in AI applications.

CyberPeace Policy Recommendations

Regulatory sandboxes offer a mechanism for trialling solutions that can help regulate the misinformation that AI technology creates. Some policy recommendations are as follows:

- Create guidelines for a global standard for regulatory sandboxes that can be adapted locally, to ensure consistency in tackling AI-driven misinformation.

- Regulators can offer incentives, such as tax breaks or grants, to companies that participate in sandboxes. This would encourage innovation in developing anti-misinformation tools.

- Awareness campaigns that educate the public about the risks of AI-driven misinformation and the role of regulatory sandboxes can help manage public expectations.

- Sandbox frameworks should be reviewed and updated regularly to keep pace with advancements in AI technology and emerging forms of misinformation.

Conclusion and the Challenges for Regulatory Frameworks

Regulatory sandboxes offer a promising pathway to counter the challenges that AI-driven misinformation poses while fostering innovation. By providing a controlled environment for testing new AI tools, these sandboxes can help refine technologies aimed at detecting and mitigating false information. This approach ensures that AI development aligns with societal needs and regulatory standards, fostering greater trust and transparency. With the right support and ongoing adaptations, regulatory sandboxes can become vital in countering the spread of AI-generated misinformation, paving the way for a more secure and informed digital ecosystem.

References

- https://www.thehindu.com/sci-tech/technology/on-the-importance-of-regulatory-sandboxes-in-artificial-intelligence/article68176084.ece

- https://www.oecd.org/en/publications/regulatory-sandboxes-in-artificial-intelligence_8f80a0e6-en.html

- https://www.weforum.org/publications/global-risks-report-2024/

- https://democracy-reporting.org/en/office/global/publications/chatbot-audit#Conclusions