#FactCheck: Viral video claims BSF personnel thrashing a person selling Bangladesh National Flag in West Bengal

Executive Summary:

A video circulating online claims to show a man being assaulted by BSF personnel in India for selling Bangladesh flags at a football stadium. The footage has stirred strong reactions and cross-border concerns. However, our research confirms that the video is neither recent nor related to any incident in India. The content has been shared with misleading claims that misrepresent the actual incident.

Claim:

It is being claimed through a viral post on social media that a Border Security Force (BSF) soldier physically attacked a man in India for allegedly selling the national flag of Bangladesh in West Bengal. The viral video further implies that the incident reflects political hostility towards Bangladesh within Indian territory.

Fact Check:

After conducting thorough research, including visual verification, reverse image searching, and confirming elements in the video background, we determined that the video was filmed outside Bangabandhu National Stadium in Dhaka, Bangladesh, during the crowd buildup prior to an AFC Asian Cup match between Bangladesh and Singapore.

Further research confirmed that the man seen being assaulted is a local flag-seller named Hannan. Eyewitness accounts and local news sources indicate that Bangladeshi Army officials were present to manage the crowd on the day in question. During the crowd control effort, a soldier assaulted the vendor with excessive force. The incident sparked outrage, to which the Army responded by identifying the officer responsible and taking disciplinary measures. The victim was reported to have been offered reparations for the misconduct.

Conclusion:

Our research confirms that the viral video does not depict any incident in India. The claim that a BSF officer assaulted a man for selling Bangladesh flags is completely false and misleading. The real incident occurred in Bangladesh, and involved a local army official during a football event crowd-control situation. This case highlights the importance of verifying viral content before sharing, as misinformation can lead to unnecessary panic, tension, and international misunderstanding.

- Claim: Viral video claims BSF personnel thrashing a person selling Bangladesh National Flag in West Bengal

- Claimed On: Social Media

- Fact Check: False and Misleading

Related Blogs


Introduction

Cyber slavery is a form of modern exploitation that begins with online deception and evolves into physical human trafficking. It has recently emerged as a serious threat in which individuals are exploited through digital means under coercive or deceptive conditions. Offenders lure unsuspecting individuals with fake promises of employment or the like. Cyber slavery can occur on a global scale, targeting vulnerable individuals worldwide through the internet. It is a disturbing continuum of online manipulation leading to real-world abuse, in which individuals are entrapped by false promises and subjected to severe human rights violations. It can take many forms, such as coerced involvement in cybercrime, forced work in online frauds, exploitation in the gig economy, or involuntary servitude. The issue has escalated to the point where Indians are being trafficked for jobs in countries like Laos and Cambodia. Recently, over 5,000 Indians were reported to be trapped in Southeast Asia, where they are allegedly being coerced into carrying out cyber fraud. Indian tech professionals in particular were reportedly lured to Cambodia with offers of high-paying jobs, only to find themselves trapped in cyber fraud schemes and forced to work 16 hours a day under severe conditions. This is the harsh reality for thousands of Indian tech professionals lured to Southeast Asia under false pretences.

Over 5,000 Indians Held in Cyber Slavery and Human Trafficking Rings

India has rescued 250 citizens in Cambodia who were forced to run online scams, with more than 5,000 Indians stuck in Southeast Asia. The victims, mostly young and tech-savvy, are lured into illegal online work ranging from money laundering and crypto fraud to love scams, where they pose as lovers online. It was reported that Indians are being trafficked for jobs in countries like Laos and Cambodia, where they are forced to conduct cybercrime activities. Victims are often deceived about where they would be working, thinking it will be in Thailand or the Philippines. Instead, they are sent to Cambodia, where their travel documents are confiscated and they are forced to carry out a variety of cybercrimes, from stealing life savings to attacking international governmental or non-governmental organizations. The Indian embassy in Phnom Penh has also released an advisory warning Indian nationals of advertisements for fake jobs in the country through which victims are coerced to undertake online financial scams and other illegal activities.

Regulatory Landscape

Trafficking in Human Beings (THB) is prohibited under Article 23(1) of the Constitution of India. The Immoral Traffic (Prevention) Act, 1956 (ITPA) is the premier legislation for the prevention of trafficking for commercial sexual exploitation. Section 111 of the Bharatiya Nyaya Sanhita (BNS), 2023, is a comprehensive legal provision aimed at combating organized crime and will be useful in prosecuting people involved in such large-scale scams. India has also ratified certain bilateral agreements with several countries to facilitate intelligence sharing and coordinated efforts to combat transnational organized crime and human trafficking.

CyberPeace Policy Recommendations

● The misuse of technology has given rise to a new genre of cybercrime in which cybercriminals use social media platforms as a tool for targeting innocent individuals. Social media companies and regulatory authorities need to work together to address newly emerging cybercrimes from time to time and to develop robust preventive measures against them.

● Despite the regulatory mechanisms in place, jurisdictional challenges, difficulties in detection due to anonymity, and investigative challenges make cyber human trafficking a serious and evolving threat. International collaboration between countries is therefore encouraged to address the issue in a technologically driven world. Robust legislation that addresses both national and international cases of human trafficking and contains strict penalties for offenders must be enforced.

● Cybercriminals target innocent people by offering fake high-paying job opportunities, building trust, and luring them in. Netizens should be aware of such tactics and recognise their early signs. By staying vigilant and cross-verifying details with authentic sources, netizens can safeguard themselves from threats that can even endanger their lives once they are trafficked and placed under restrictions. The Indian government and its agencies are continuously making efforts to rescue the victims of cyber human trafficking or cyber slavery; they must further develop robust mechanisms for specialised government agencies to conduct specialised operations and rescue victims in a timely manner.

● Government entities, cyber security experts and Non-Governmental Organisations (NGOs) must encourage capacity building and support mechanisms that empower netizens to follow best practices while navigating the online landscape, provide them with helplines or help centres to report any suspicious activity or behaviour they encounter, and help them feel safe on the Internet while building defences against cyber threats.

References:

2. https://www.bbc.com/news/world-asia-india-68705913

3. https://therecord.media/india-rescued-cambodia-scam-centers-citizens

4. https://www.the420.in/rescue-indian-tech-workers-cambodia-cyber-fraud-awareness/

7. https://www.dyami.services/post/intel-brief-250-indian-citizens-rescued-from-cyber-slavery

8. https://www.mea.gov.in/human-trafficking.htm

9. https://www.drishtiias.com/blog/the-vicious-cycle-of-human-trafficking-and-cybercrime

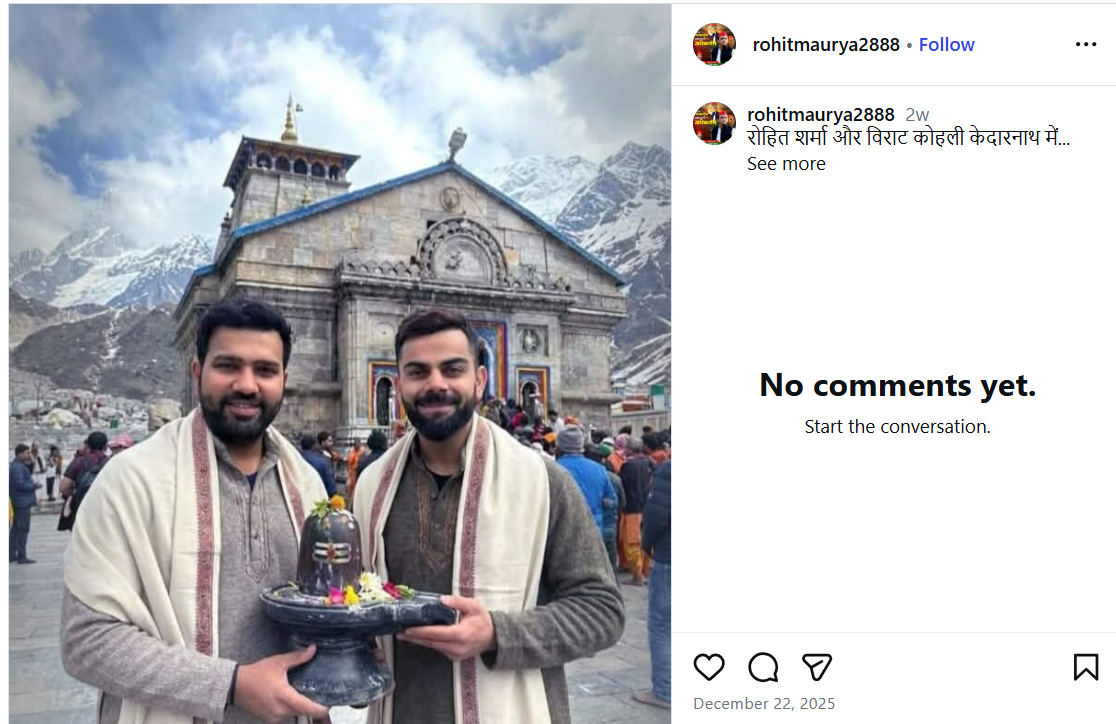

A photo featuring Indian cricketers Virat Kohli and Rohit Sharma is being widely shared on social media. In the image, both players are seen holding a Shivling, with the Kedarnath temple visible in the background. Users sharing the image claim that Virat Kohli and Rohit Sharma recently visited Kedarnath.

However, CyberPeace Foundation’s investigation found the claim to be false. Our verification established that the viral image is not real but has been created using Artificial Intelligence (AI) and is being circulated with a misleading narrative.

The Claim

An Instagram user shared the viral image on December 22, 2025, with the caption stating that Rohit Sharma and Virat Kohli are in Kedarnath. The post has since been widely reshared by other users, who assumed the image to be authentic.

Fact Check

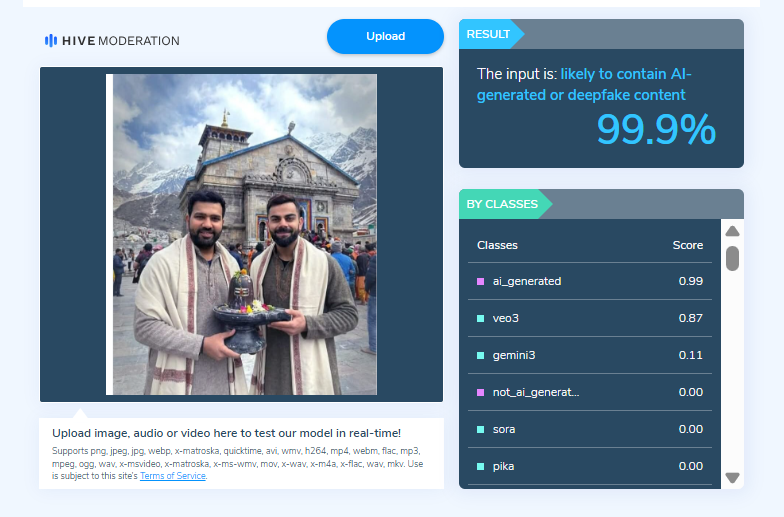

On closely examining the viral image, the Desk noticed visual inconsistencies suggesting that it may be AI-generated. To verify this, the image was scanned using the AI detection tool Hive Moderation. According to the results, the image was 99 per cent likely to be AI-generated.

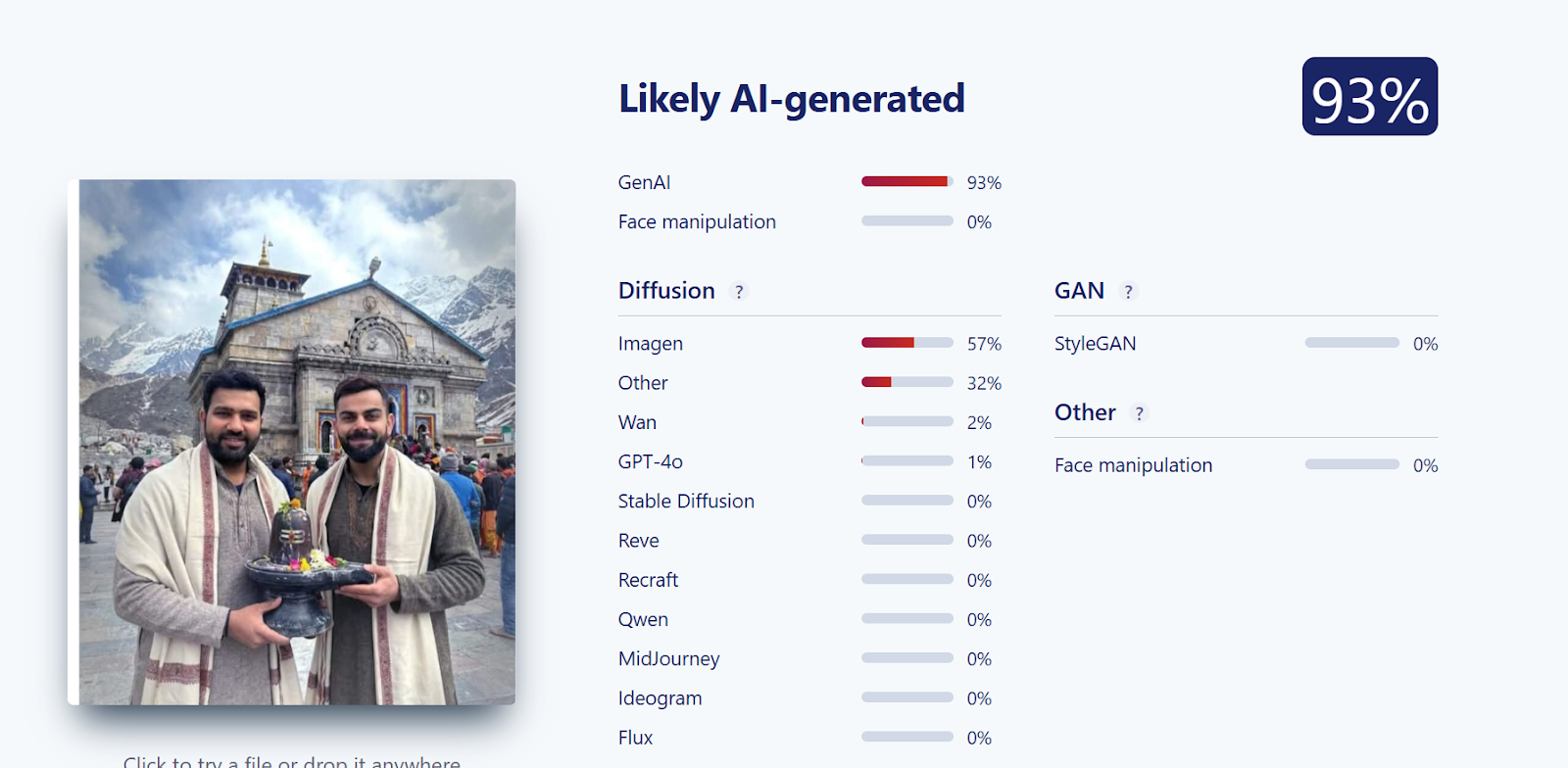

Further verification was conducted using another AI detection tool, Sightengine. The analysis revealed that the image was 93 per cent likely to be AI-generated, reinforcing the findings from the previous tool.

Conclusion

CyberPeace Foundation’s research confirms that the viral image claiming Virat Kohli and Rohit Sharma visited Kedarnath is fabricated. The image has been generated using AI technology and is being falsely shared on social media as a real photograph.

Introduction

Over the past few years, the virtual space has become an irreplaceable livelihood platform for content creators and influencers, particularly on major social media platforms like YouTube and Instagram. Yet this growth in digital entrepreneurship has been accompanied by a worrying trend: a steep surge in account takeover (ATO) attacks against these creators. In recent years, cybercriminals have stepped up both the volume and the sophistication of such attacks, hacking into accounts, jeopardising follower bases, and causing economic and reputational damage. They don’t just take over accounts to cause disruption. Instead, they use these hijacked accounts to run scams like fake livestreams and cryptocurrency fraud, spreading them by pretending to be the original account owner. This type of cybercrime is no longer a nuisance; it now poses a serious threat to the creator economy, digital trust, and the wider social media ecosystem.

Why Are Content Creators Prime Targets?

Content creators hold a special place on the web. They are prominent users who depend on visibility, public trust, and ongoing interaction with their followers. Their social media footprint tends to extend across several interrelated platforms, e.g., YouTube, Instagram, X (formerly Twitter), with many of these accounts sharing similar login credentials or being managed from the same email account. This interconnected presence benefits workflow but makes creators appealing targets for hackers: one entry point can give access to a whole chain of accounts. Once attackers control an account, they can use its influence and reach to share scams, lead followers to phishing sites, or spread malware, all under the cover of a trusted name.

Popular Tactics Used by Attackers

- Malicious Livestream Takeovers and Rebranding - Cybercriminals hijack high-subscriber channels and rebrand them to mimic official channels. Original videos are hidden or deleted and replaced with scam streams that use deepfake personas to promote crypto schemes.

- Fake Sponsorship Offers - Creators receive emails from supposed sponsors that contain malware-infected attachments or malicious download links, leading to credential theft.

- Malvertising Campaigns - These involve fake ads on social platforms promoting exclusive software like AI tools or unreleased games. Victims download malware that searches for stored login credentials.

- Phishing and Social Engineering on Instagram - Hackers impersonate Meta support teams via DMs and emails. They direct creators to login pages that are cloned versions of Instagram's site. Others pose as fans to request phone numbers and trick victims into revealing password reset codes.

- Timely Exploits and Event Hijacking - During major public or official events, attackers often escalate their activity. Hijacked accounts are used to promote fake giveaways or exclusive live streams, luring users to malicious websites designed to steal personal information or financial data.
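Several of the tactics above depend on a link that looks official but is not, such as a cloned Instagram login page. As a minimal sketch of how a link can be checked before any credentials are entered, the Python snippet below accepts a URL only if it uses HTTPS and its host is an allowed domain or a subdomain of one. The allowlist and the function name `is_official_link` are assumptions made for illustration; they are not taken from any platform's guidance.

```python
from urllib.parse import urlsplit

# Hypothetical allowlist for illustration only; a real list should come
# from the platform's own published domains.
OFFICIAL_DOMAINS = {"instagram.com", "youtube.com"}


def is_official_link(url: str, allowed=OFFICIAL_DOMAINS) -> bool:
    """Return True only for HTTPS URLs whose host is an allowed domain
    or a subdomain of one."""
    try:
        parts = urlsplit(url)
        host = (parts.hostname or "").lower().rstrip(".")
    except ValueError:
        # Malformed URLs are treated as untrusted.
        return False
    if parts.scheme != "https" or not host:
        return False
    # Compare whole domain labels, so "instagram.com.evil.example"
    # does not pass just because it starts with a trusted name.
    return any(host == d or host.endswith("." + d) for d in allowed)
```

A check like this only compares the hostname. It catches lookalike hosts such as `instagram.com.account-verify.example` or `instagram.com@evil.example`, but it cannot tell whether a page on a genuine domain is trustworthy, so it complements rather than replaces the caution described above.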

Real-World Impact and Case Examples

The impact of account takeover attacks on content creators is far-reaching. According to a 2024 Bitdefender report, over 9,000 malicious live streams were seen on YouTube in a year, many of them streamed from hijacked creator accounts that had been repurposed to advertise scams and fake content. Perhaps the most high-profile incident involved a channel with more than 28 million subscribers and 12.4 billion total views, which was completely taken over and used to livestream a crypto fraud scheme. Bitdefender's research also indicated that cybercriminals used over 350 scam domains, linked directly from hijacked social media accounts, to entice followers into phishing scams and bogus investment opportunities. Much of this content included AI-created deepfakes impersonating recognisable personalities like Elon Musk and other public figures, lending an illusion of authenticity to fake endorsements (CCN, 2024). Further, attackers have exploited popular esports events, such as Counter-Strike 2 (CS2) tournaments, by hijacking YouTube gaming channels and livestreaming fake giveaways or referring viewers to imitation betting sites.

Protective Measures for Creators

- Enable Multi-Factor Authentication (MFA)

Adds an essential layer of defence. Even if a password is compromised, attackers can't log in without the second factor. Prefer app-based or hardware token authentication.

- Scrutinize Sponsorships

Verify sender domains and avoid opening suspicious attachments. Use sandbox environments to test files. In case of doubt, verify collaboration opportunities through official company sources or verified contacts.

- Monitor Account Activity

Keep tabs on login history, new uploads, and connected apps. Configure alerts for suspicious login attempts or spikes in activity to detect breaches early.

- Educate Your Team

If your account is managed by editors or third parties, train them on common phishing and malware tactics. Employ regular refresher sessions and send mock phishing tests to reinforce awareness.

- Use Purpose-Built Security Tools

Specialised security solutions offer features like account monitoring, scam detection, guided recovery, and protection for team members. These tools can also help identify suspicious activity early and support a quick response to potential threats.
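To illustrate why app-based authentication is harder to defeat than a password alone, here is a minimal sketch of how an authenticator app can derive a time-based one-time password (TOTP), following the published RFC 6238 algorithm with HMAC-SHA1. The function name `totp` and its parameters are chosen for this example; it is not the implementation used by any particular platform or app.

```python
import base64
import hashlib
import hmac
import struct
import time
from typing import Optional


def totp(secret_b32: str, at_time: Optional[float] = None,
         step: int = 30, digits: int = 6) -> str:
    """Derive a time-based one-time code from a Base32 shared secret."""
    # Restore any stripped Base32 padding before decoding the secret.
    padded = secret_b32.upper() + "=" * (-len(secret_b32) % 8)
    key = base64.b32decode(padded)
    # The moving factor is the number of whole time steps since the epoch.
    now = time.time() if at_time is None else at_time
    counter = int(now // step)
    digest = hmac.new(key, struct.pack(">Q", counter), hashlib.sha1).digest()
    # Dynamic truncation: the low 4 bits of the last byte select an offset.
    offset = digest[-1] & 0x0F
    code = struct.unpack(">I", digest[offset:offset + 4])[0] & 0x7FFFFFFF
    return str(code % 10 ** digits).zfill(digits)
```

The code changes every 30 seconds and depends on a secret that never leaves the device, so a stolen password is not enough to log in. With the RFC 6238 test secret (`GEZDGNBVGY3TQOJQGEZDGNBVGY3TQOJQ`, the Base32 form of `12345678901234567890`), `at_time=59` and `digits=8`, the function returns `94287082`, matching the RFC's published test vector.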

Conclusion

Account takeover attacks are no longer random events; they are systemic risks that compromise the financial well-being and personal safety of creators all over the world. As cybercriminals' scams grow increasingly sophisticated and realistic, the only solution is a security-first approach. This encompasses a mix of technical controls, platform-level collaboration, education, and investment in creator-centric cybersecurity technologies. In today's fast-paced digital landscape, creators need to think not only about content but also about defending their digital identity. As digital platforms continue to grow, so do the threats targeting creators. However, with the right awareness, tools, and safeguards in place, a secure and thriving digital environment for creators is entirely achievable.

References

- https://www.bitdefender.com/en-au/blog/hotforsecurity/account-takeover-attacks-on-social-media-a-rising-threat-for-content-creators-and-influencers

- https://www.arkoselabs.com/account-takeover/social-media-account-takeover/

- https://www.imperva.com/learn/application-security/account-takeover-ato/

- https://www.security.org/digital-safety/account-takeover-annual-report/

- https://www.niceactimize.com/glossary/account-takeover/