#FactCheck: Fake Viral Video Claims Vice Admiral AN Pramod Said India Would Complain to the US and President Trump if Pakistan Attacks Again

Executive Summary:

A viral video (archived link) circulating on social media claims that Vice Admiral AN Pramod stated India would seek assistance from the United States and President Trump if Pakistan launched an attack, portraying India as dependent rather than self-reliant. Our research traced the full footage to the Press Information Bureau’s official YouTube channel, where it was published on 11 May 2025. In the authentic video, the Vice Admiral makes no such remark and instead concludes his statement with, “That’s all.” Further analysis with the Hive Moderation AI detection tool indicated that the viral clip was digitally manipulated with AI-generated audio, misrepresenting his actual words.

Claim:

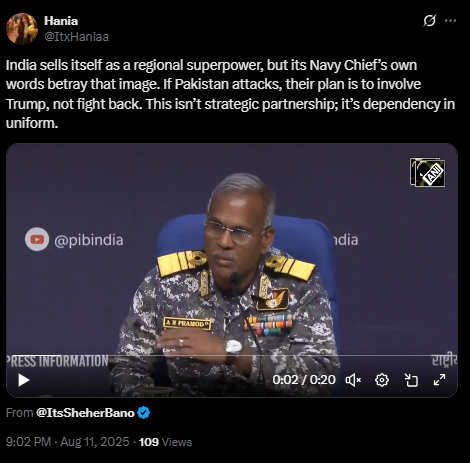

An X user posted the viral video with the caption:

“India sells itself as a regional superpower, but its Navy Chief’s own words betray that image. If Pakistan attacks, their plan is to involve Trump, not fight back. This isn’t strategic partnership; it’s dependency in uniform.”

In the video, the Vice Admiral is heard saying:

“We have worked out among three services, this time if Pakistan dares take any action, and Pakistan knows it, what we are going to do. We will complain against Pakistan to the United States of America and President Trump, like we did earlier in Operation Sindoor.”

Fact Check:

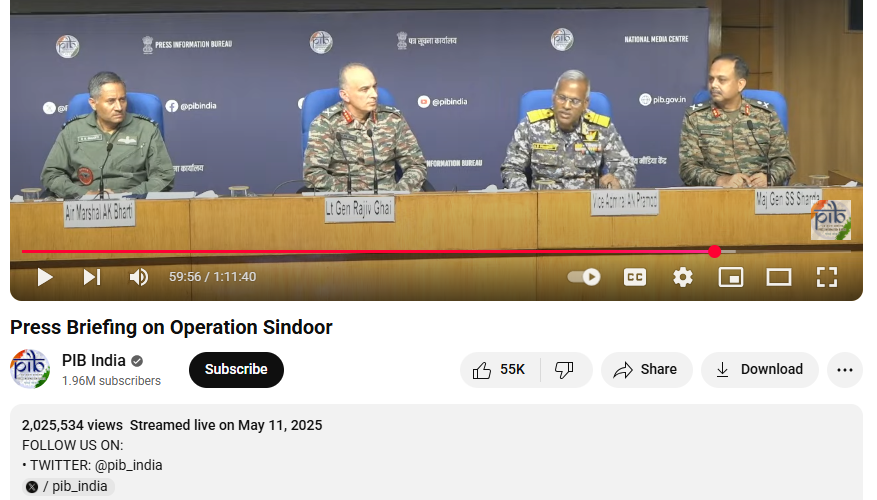

A reverse image search on key frames from the video led us to the full version on the official YouTube channel of the Press Information Bureau (PIB), published on 11 May 2025. At the 59:57 mark of that video, the Vice Admiral can be heard saying:

“This time if Pakistan dares take any action, and Pakistan knows it, what we are going to do. That’s all.”

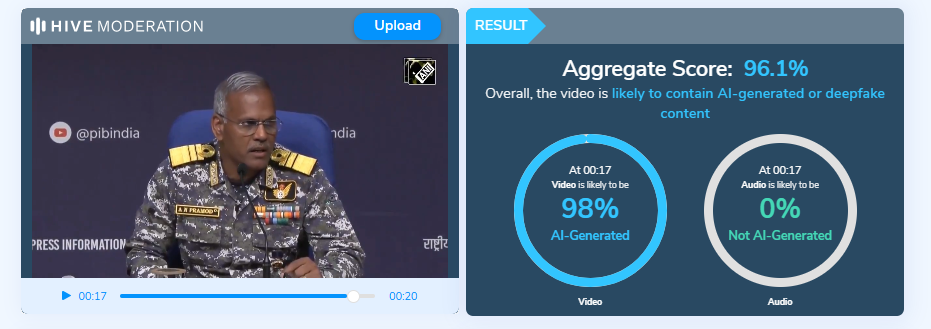

We then examined the circulating clip with the Hive Moderation tool to assess its authenticity. The results indicated that the clip had been artificially generated, with clear signs of AI manipulation. This suggests that the content is not genuine and was created to mislead viewers and spread misinformation.

Conclusion:

The viral video attributing remarks to Vice Admiral AN Pramod about India seeking intervention from the U.S. and President Trump against Pakistan is false and misleading. The full video, available on the Press Information Bureau’s official YouTube channel, contains no such statement. Instead of the alleged remark, the Vice Admiral concluded his comments by saying, “That’s all.” Analysis with Hive Moderation further indicated that the viral clip had been artificially manipulated, with fabricated audio inserted to misrepresent his words. These findings show that the video is altered and does not reflect the Vice Admiral’s actual remarks.

Claim: A viral video shows Vice Admiral AN Pramod saying that if Pakistan attacks again, India will complain to the US and President Trump.

Claimed On: Social Media

Fact Check: False and Misleading