A few of us were sitting together, talking shop, which for moms inevitably circles back to children, their health, and their education. Mothers of teenagers were concerned that their children seemed to spend an excessive amount of time online and were talking far less at home.

Reena shared that she was struggling to understand her two boys, who had suddenly transformed from talkative, lively children into quiet, withdrawn teenagers.

Naaz nodded. “My daughter is glued to her device. I just can’t get her off it! What do I do, girls? Any suggestions?”

Mou sighed. “And what about the rise in scams? I keep warning my kids about online threats, but I’m not sure I’m doing enough.”

“Not just scams; those come later. What worries me more are the videos and photos of unsuspecting children being edited and misused on digital platforms,” added Reena.

The Digital Parenting Dilemma

For parents, it’s a constant challenge: allowing children internet access exposes them to potential risks, while restricting it invites criticism for being overly strict.

‘What do I do?’ is a question that troubles many parents, as they know how addictive phones and gaming devices can be. (Fun fact: Even parents sometimes struggle to resist endlessly scrolling through social media!)

‘What should I tell them, and when?’ This becomes a pressing concern when parents hear about cyberbullying, online grooming, or even cyberabduction.

‘How do I ensure they stay cybersafe?’ This remains an ongoing worry, as children grow and their online activities evolve.

Whether it’s a single-child, dual-income household, a two-child, single-income family, or any other combination, parents have their hands full managing work, chores, and home life. Sometimes children have to be left with grandparents or caregivers, or even by themselves for a few hours, which makes it difficult to monitor their digital lives. While smartphones help parents stay connected and track their child’s location, they can also expose children to risks if not used responsibly.

Breaking It Down

Start cybersafety discussions early and tailor them to your child’s age.

For simplicity, let’s categorize learning into five key age groups:

- 0 – 2 years

- 3 – 7 years

- 8 – 12 years

- 13 – 16 years

- 16 – 19 years

Let’s explore the key safety messages for each stage.

Reminder:

Children will always test boundaries and may resist rules. The key is to lead by example—practice cybersafety as a family.

0 – 2 Years: Newborns & Infants

Pediatricians recommend avoiding screen exposure for children under two years old. If you occasionally allow screen time (for example, while changing them), keep it to a minimum. Young children are easily distracted, so use this to your advantage: redirect their attention to toys and everyday objects instead of screens.

What can you do?

- Avoid watching TV or using mobile devices in front of them.

- Keep activity books, empty boxes, pots, and ladles handy to engage them.

3 – 7 Years: Toddlers & Preschoolers

Cybersafety education should ideally begin when a child starts engaging with screens. At this stage, parents have complete control over what their child watches and for how long.

What can you do?

- Keep screen time limited and fully supervised.

- Introduce basic cybersafety concepts, such as stranger danger and the difference between good and bad pictures.

- Encourage offline activities—educational toys, books, and games.

- Restrict your own screen time when your child is awake to set a good example.

- Set up parental controls and create child-specific accounts on devices.

- Secure all devices with comprehensive security software.

8 – 12 Years: Primary & Preteens

Cyber-discipline should start now. Strengthen rules, set clear boundaries, and establish consequences for rule violations.

What can you do?

- Increase screen time gradually to accommodate studies, communication, and entertainment.

- Teach them about privacy and the dangers of oversharing personal information.

- Continue stranger-danger education, including safe/unsafe websites and apps.

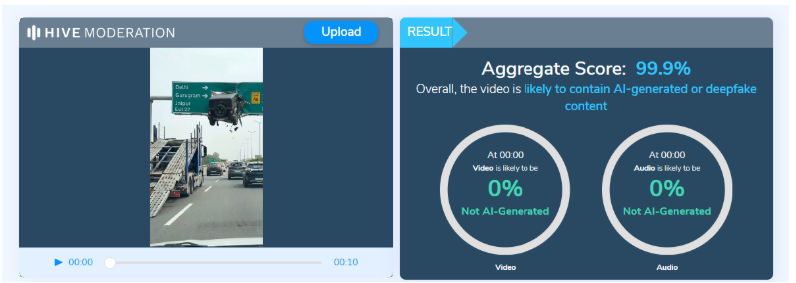

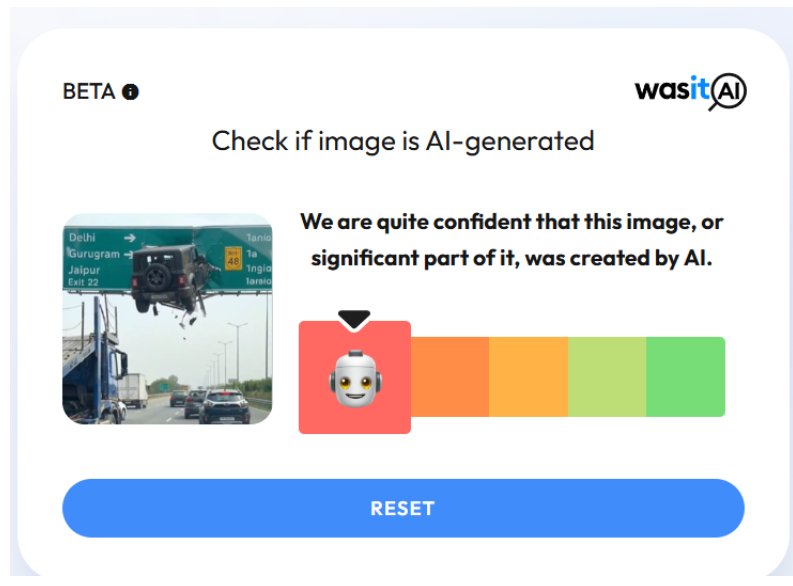

- Emphasize reading the terms and conditions (T&Cs) before downloading apps.

- Introduce concepts like scams, phishing, deepfakes, and virus attacks using real-life examples.

- Keep your banking and credit card credentials out of their reach, since children may unintentionally share sensitive information.

Cyber Safety Mantras:

- STOP. THINK. ACT.

- Do Not Trust Blindly Online.

13 – 16 Years: The Teenage Phase

Teenagers are likely to resist rules and demand independence, but if cybersafety has been part of their upbringing, they are more likely to accept parental oversight.

What can you do?

- Continue using parental controls, but allow greater access to previously restricted content.

- Encourage open conversations about digital safety and online threats.

- Respect their need for privacy but remain involved as a silent observer.

- Discuss cyberbullying, harassment, and online reputation management.

- Keep phones out of bedrooms at night and maintain device-free zones during family time.

- Address online relationships and risks like dating scams, sextortion, and trafficking.

16 – 19 Years: The Transition to Adulthood

By this stage, most children have developed a greater sense of responsibility and maturity. It’s time to gradually loosen control while reinforcing good digital habits.

What can you do?

- Monitor their online presence without being intrusive.

- Maintain open discussions, since teens still value parental advice.

- Stay updated on digital trends so you can offer relevant guidance.

- Encourage digital balance by planning device-free family outings.

Final Thoughts

As a parent, your role is not just to set rules but to empower your child to navigate the digital world safely. Lead by example, encourage responsible usage, and create an environment where your child feels comfortable discussing online challenges with you.

Wishing you a safe and successful digital parenting journey!