#FactCheck - AI-Generated Image Falsely Claims Mukesh and Nita Ambani Gifted Luxury Car to Suryakumar Yadav

Executive Summary

A picture circulating on social media allegedly shows Reliance Industries chairman Mukesh Ambani and Nita Ambani presenting a luxury car to India’s T20 team captain Suryakumar Yadav. The image is being widely shared with the claim that the Ambani family gifted the cricketer a luxury car in recognition of his outstanding performance. However, research conducted by CyberPeace found the viral claim to be false. The research revealed that the image being circulated online is not authentic and was generated using artificial intelligence (AI).

Claim

On February 8, 2025, a Facebook user shared the viral image claiming that Mukesh Ambani and Nita Ambani gifted a luxury car to Suryakumar Yadav following his brilliant innings. The post has been widely circulated across social media platforms. In another instance, a user shared a collage in which one image shows Suryakumar Yadav receiving an award, while another depicts him with Nita Ambani, further amplifying the claim.

- https://www.facebook.com/61559815349585/posts/122207061746327178/?rdid=0MukeT6c7WK1uB8m#

- https://archive.ph/wip/UH9Xh

Fact Check:

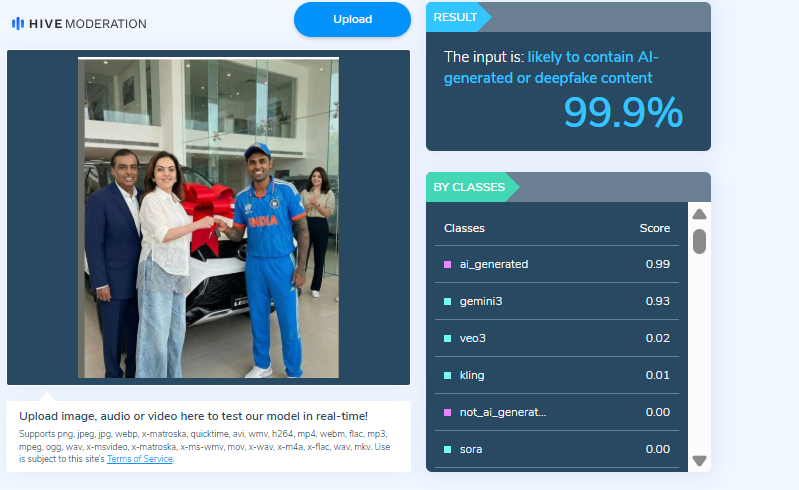

Upon closely examining the viral image, certain visual inconsistencies raised suspicion that it might be AI-generated. To verify its authenticity, the image was analysed using the AI detection tool Hive Moderation, which indicated a 99 percent probability that the image was AI-generated.

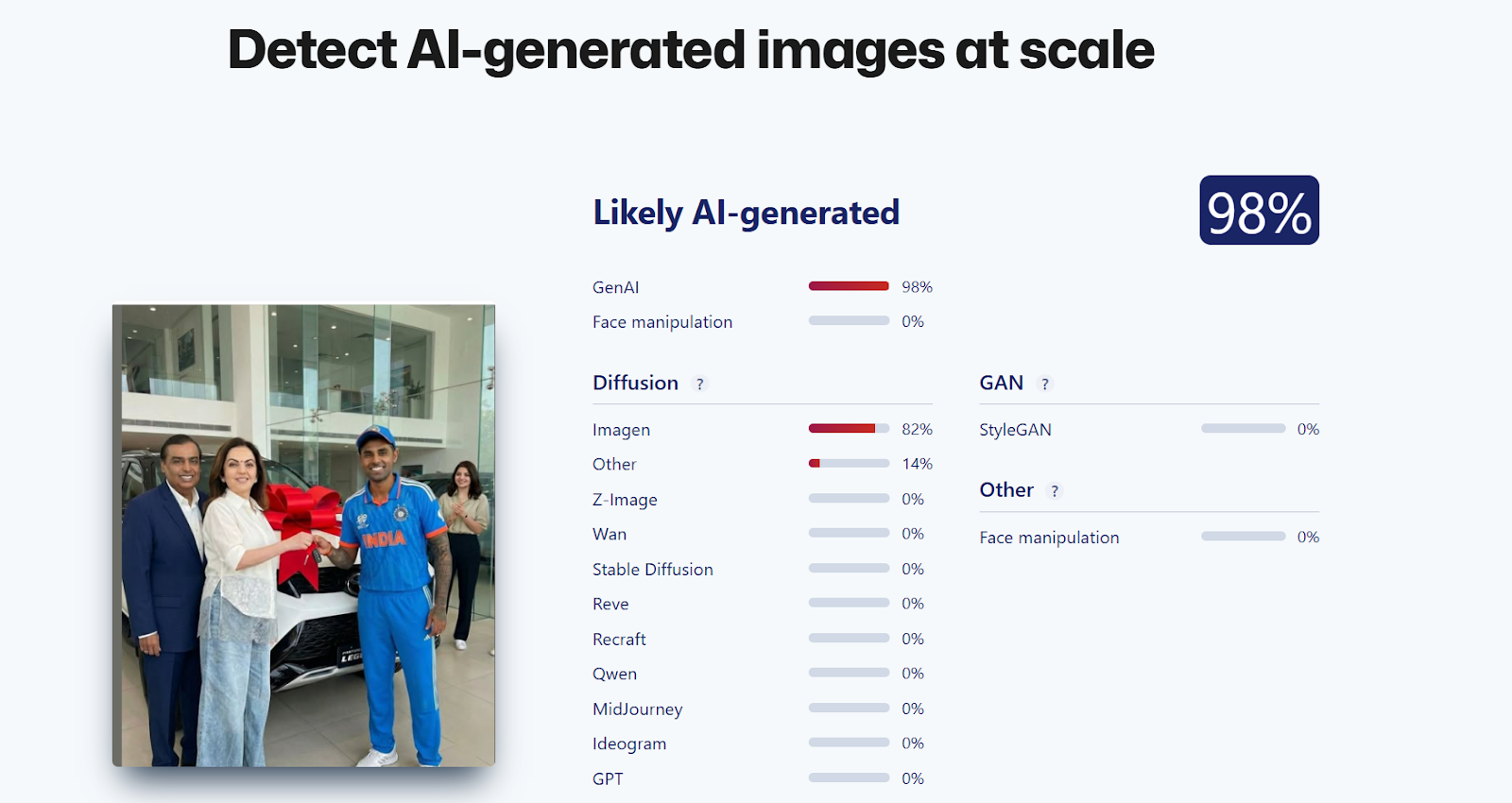

In the next step of the research, the image was also analysed using another AI detection tool, Sightengine, which found a 98 percent likelihood that the image was created using artificial intelligence.

Conclusion

The research clearly establishes that the viral image claiming Mukesh Ambani and Nita Ambani gifted a luxury car to Suryakumar Yadav is misleading. The picture is not real and has been generated using AI.

Related Blogs

Introduction

The Indian Computer Emergency Response Team (CERT-In) is the government's nodal agency, designated as the national agency for cyber incidents and cyber security incidents under section 70B of the Information Technology (IT) Act, 2000. CERT-In has issued a cautionary note to Microsoft Edge, Adobe and Google Chrome users. The government's cybersecurity agency has alerted users to multiple vulnerabilities that hackers might use to obtain private data and run arbitrary code on the targeted machine. CERT-In advises users to apply the security updates right away to guard against the problem.

Vulnerability note

Vulnerability notes CIVN-2023-0361, CIVN-2023-0362 and CIVN-2023-0364, for Google Chrome for Desktop, Microsoft Edge and Adobe respectively, include more information on the alert. CERT-In has categorized the problems as high-severity issues and recommends applying the security updates immediately. According to the warning, there is a security risk if you use Google Chrome versions earlier than v120.0.6099.62 on Linux and Mac, or earlier than 120.0.6099.62/.63 on Windows. Similarly, the vulnerability may also impact users of Microsoft Edge browser versions earlier than 120.0.2210.61.
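The version thresholds above can be checked programmatically. The following is a minimal sketch, not an official CERT-In or vendor tool: the function names and the `PATCHED_VERSIONS` table are hypothetical, and for Windows it assumes 120.0.6099.62 as the lower bound of the "62/.63" pair quoted in the note. It compares version strings numerically, part by part, because a plain string comparison would order "120.0.6099.9" after "120.0.6099.62".

```python
from __future__ import annotations

# Minimum patched versions as quoted in the advisory text above.
# The table layout and all names here are hypothetical, for illustration only.
PATCHED_VERSIONS = {
    ("chrome", "linux"): "120.0.6099.62",
    ("chrome", "mac"): "120.0.6099.62",
    # Assumption: treat .62 as the threshold for the "62/.63" Windows builds.
    ("chrome", "windows"): "120.0.6099.62",
    ("edge", "any"): "120.0.2210.61",
}


def parse_version(version: str) -> tuple[int, ...]:
    """Turn a dotted version string such as '120.0.6099.62' into a tuple of ints."""
    return tuple(int(part) for part in version.strip().split("."))


def is_vulnerable(browser: str, platform: str, installed: str) -> bool:
    """Return True if the installed version is earlier than the patched one."""
    key = (browser.lower(), platform.lower())
    if key not in PATCHED_VERSIONS:
        # Fall back to a platform-independent entry if one exists.
        key = (browser.lower(), "any")
    patched = PATCHED_VERSIONS.get(key)
    if patched is None:
        raise ValueError(f"No patched version recorded for {browser} on {platform}")
    # Tuples compare element by element, so 6099.9 sorts before 6099.62.
    return parse_version(installed) < parse_version(patched)


if __name__ == "__main__":
    print(is_vulnerable("chrome", "linux", "119.0.6045.199"))
    print(is_vulnerable("edge", "windows", "120.0.2210.61"))
```

In practice the installed version would be read from the browser's "About" page or the operating system's package manager; how to obtain it is outside the scope of this sketch.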

Cause of the Problem

According to the explanation in the vulnerability note on the CERT-In website, these vulnerabilities are caused by "Use after free in Media Stream, Side Panel Search, and Media Capture; Inappropriate implementation in Autofill and Web Browser UI." The alert further warns that users of the susceptible Microsoft Edge and Google Chrome browsers could be targeted by a remote attacker who exploits these vulnerabilities by sending a specially crafted request. Once these vulnerabilities are successfully exploited, hackers may gain elevated privileges, obtain sensitive data, and run arbitrary code on the targeted system.

High-severity issues: consequences

CERT-In has brought attention to vulnerabilities in Google Chrome, Microsoft Edge, and Adobe that might have serious repercussions and put users and their systems at risk. The vulnerabilities found in widely used software, such as Adobe products, Microsoft Edge, and Google Chrome, present serious dangers that might result in data breaches, unauthorized code execution, privilege escalation, and remote attacks. If these vulnerabilities are exploited, private information may be exposed, money may be lost, and reputational harm may result.

Additionally, the confidentiality and integrity of sensitive information may be compromised. The danger also includes the potential to interfere with services, cause outages, reduce productivity, and raise the possibility of phishing and social engineering attacks. The urgent need for security updates may also make users less trusting of the impacted software and hesitant to use these platforms until they are assured that thorough security procedures are in place.

Advisory

- Users should update their Google Chrome, Microsoft Edge, and Adobe software as soon as possible to protect themselves against the vulnerabilities that have been found. These updates are supplied by the respective software vendors. Furthermore, use caution when browsing and refrain from downloading files from unknown sites or clicking on suspicious links.

- Make use of reliable ad-blockers and strong, regularly updated antivirus and anti-malware software. Maintain regular backups of critical data to reduce possible losses in the event of an attack, and keep up with best practices for cybersecurity. Keeping security measures current, and staying vigilant and proactive, can greatly lower the likelihood of being targeted through such vulnerabilities.

Introduction

In the digital realm of social media, Meta Platforms, the driving force behind Facebook and Instagram, faces intense scrutiny following The Wall Street Journal's investigative report. This exploration delves deeper into critical issues surrounding child safety on these widespread platforms, unravelling algorithmic intricacies, enforcement dilemmas, and the ethical maze surrounding monetisation features. Instances of "parent-managed minor accounts" leveraging Meta's subscription tools to monetise content featuring young individuals have raised eyebrows. While skirting the line of legality, this practice prompts concerns due to its potential appeal to adults and the associated inappropriate interactions. It's a nuanced issue demanding nuanced solutions.

Failed Algorithms

The very heartbeat of Meta's digital ecosystem, its algorithms, has come under intense scrutiny. These algorithms, designed to curate and deliver content, were found to be actively promoting accounts featuring explicit content to users with known pedophilic interests. The revelation sparks a crucial conversation about the ethical responsibilities tied to the algorithms shaping our digital experiences. Striking the right balance between personalised content delivery and safeguarding users is a delicate task.

While algorithms play a pivotal role in tailoring content to users' preferences, Meta needs to reevaluate the algorithms to ensure they don't inadvertently promote inappropriate content. Stricter checks and balances within the algorithmic framework can help prevent the inadvertent amplification of content that may exploit or endanger minors.

Major Enforcement Challenges

Meta's enforcement challenges have come to light as previously banned parent-run accounts resurface, gaining official verification and accumulating large followings. The struggle to remove associated backup profiles adds to concerns about the effectiveness of Meta's enforcement mechanisms. It underscores the need for a robust system capable of swift and thorough action against policy violators.

To enhance enforcement mechanisms, Meta should invest in advanced content detection tools and employ a dedicated team for consistent monitoring. This proactive approach can mitigate the risks associated with inappropriate content and reinforce a safer online environment for all users.

The financial dynamics of Meta's ecosystem expose concerns about the exploitation of videos that are eligible for cash gifts from followers. The decision to expand the subscription feature before implementing adequate safety measures poses ethical questions. Prioritising financial gains over user safety risks tarnishing the platform's reputation and trustworthiness. A re-evaluation of this strategy is crucial for maintaining a healthy and secure online environment.

To address safety concerns tied to monetisation features, Meta should consider implementing stricter eligibility criteria for content creators. Verifying the legitimacy and appropriateness of content before allowing it to be monetised can act as a preventive measure against the exploitation of the system.

Meta's Response

In the aftermath of the revelations, Meta's spokesperson, Andy Stone, took centre stage to defend the company's actions. Stone emphasised ongoing efforts to enhance safety measures, asserting Meta's commitment to rectifying the situation. However, critics argue that Meta's response lacks the decisive actions required to align with industry standards observed on other platforms. The debate continues over the delicate balance between user safety and the pursuit of financial gain. A more transparent and accountable approach to addressing these concerns is imperative.

To rebuild trust and credibility, Meta needs to implement concrete and visible changes. This includes transparent communication about the steps taken to address the identified issues, continuous updates on progress, and a commitment to a user-centric approach that prioritises safety over financial interests.

The formation of a task force in June 2023 was a commendable step to tackle child sexualisation on the platform. However, the effectiveness of these efforts remains limited. Persistent challenges in detecting and preventing potential child safety hazards underscore the need for continuous improvement. Legislative scrutiny adds an extra layer of pressure, emphasising the urgency for Meta to enhance its strategies for user protection.

To overcome ongoing challenges, Meta should collaborate with external child safety organisations, experts, and regulators. Open dialogues and partnerships can provide valuable insights and recommendations, fostering a collaborative approach to creating a safer online environment.

Drawing a parallel with competitors such as Patreon and OnlyFans reveals stark differences in child safety practices. While Meta grapples with its challenges, these platforms maintain stringent policies against certain content involving minors. This comparison underscores the need for universal industry standards to safeguard minors effectively. Collaborative efforts within the industry to establish and adhere to such standards can contribute to a safer digital environment for all.

To align with industry standards, Meta should actively participate in cross-industry collaborations and adopt best practices from platforms with successful child safety measures. This collaborative approach ensures a unified effort to protect users across various digital platforms.

Conclusion

Navigating the intricate landscape of child safety concerns on Meta Platforms demands a nuanced and comprehensive approach. The identified algorithmic failures, enforcement challenges, and controversies surrounding monetisation features underscore the urgency for Meta to reassess and fortify its commitment to being a responsible digital space. As the platform faces this critical examination, it has an opportunity to not only rectify the existing issues but to set a precedent for ethical and secure social media engagement.

This comprehensive exploration aims not only to shed light on the existing issues but also to provide a roadmap for Meta Platforms to evolve into a safer and more responsible digital space. The responsibility lies not just in acknowledging shortcomings but in actively working towards solutions that prioritise the well-being of its users.

References

- https://timesofindia.indiatimes.com/gadgets-news/instagram-facebook-prioritised-money-over-child-safety-claims-report/articleshow/107952778.cms

- https://www.adweek.com/blognetwork/meta-staff-found-instagram-tool-enabled-child-exploitation-the-company-pressed-ahead-anyway/107604/

- https://www.tbsnews.net/tech/meta-staff-found-instagram-subscription-tool-facilitated-child-exploitation-yet-company

Introduction

Conversations surrounding the scourge of misinformation online typically focus on the risks to social order, political stability, economic safety and personal security. An oft-overlooked aspect of this phenomenon is the fact that it also takes a very real emotional and mental toll on people. Even as we grapple with the big picture questions about financial fraud or political rumors or inaccurate medical information online, we must also appreciate the fact that being exposed to misinformation and becoming aware of one’s own vulnerability are both significant sources of mental stress in today’s digital ecosystem.

Inaccurate information causes confusion and worry, which has negative consequences for mental health. Misinformation may also impair people's sense of well-being by undermining their trust in institutions, authority figures, and their own judgment. The constant bombardment of misinformation can lead to information overload, wherein people are unable to discriminate between legitimate sources and misleading content, resulting in mental exhaustion and a sense of being overwhelmed by the sheer volume of information available. Vulnerable groups such as children, the elderly, and those with pre-existing health conditions are more sensitive or susceptible to the negative effects of misinformation.

How Does Misinformation Endanger Mental Health?

Misinformation on social media platforms is a matter of public health because it has the potential to confuse people, lead to poor decision-making and result in cognitive dissonance, anxiety and unwanted behavioural changes.

Unconstrained misinformation can also lead to social disorder and the prevalence of negative emotions amongst larger numbers, ultimately causing a huge impact on society. Therefore, understanding the spread and diffusion characteristics of misinformation on Internet platforms is crucial.

The spread of misinformation can elicit different emotions in the public, and those emotions change as the misinformation spreads. Factors such as user engagement, number of comments, and duration of discussion all influence how emotions around misinformation shift. Active users tend to make more comments, engage longer in discussions, and display more dominant negative emotions when triggered by misinformation. Understanding how emotions triggered by misinformation evolve is also important, because social media often magnifies the impact of emotions and makes them spread rapidly through social networks. For example, the emotional intensity around misinformation increases when it concerns sensitive topics such as political elections, viral trending topics, health-related information, communal and local information, natural disasters and more. Active misinformation on the Internet not only affects the public's psychology, mental health and behavior, but also has an impact on the stability of social order and the maintenance of social security.

Prebunking and Debunking To Build Mental Guards Against Misinformation

As the spread of misinformation and disinformation rises, so do the techniques aimed at tackling it. Prebunking, or attitudinal inoculation, is a technique for training individuals to recognise and resist deceptive communications before they can take root. Prebunking is a psychological method for mitigating the effects of misinformation, strengthening resilience and creating cognitive defenses against future misinformation. Debunking provides individuals with accurate information to counter false claims and myths, correcting misconceptions and preventing the spread of misinformation. By presenting evidence-based refutations, debunking helps individuals distinguish fact from fiction.

What do health experts say about online misinformation?

“In the 21st century, mental health is crucial due to the overwhelming amount of information available online. The COVID-19 pandemic-related misinformation was a prime example of this, with misinformation spreading online, leading to increased anxiety, panic buying, fear of leaving home, and mistrust in health measures. To protect our mental health, it is essential to cultivate a discerning mindset, question sources, and verify information before consumption. Fostering a supportive community that encourages open dialogue and fact-checking can help navigate the digital information landscape with confidence and emotional support. Prioritising self-care routines, mindfulness practices, and seeking professional guidance are also crucial for safeguarding mental health in the digital information era.”

~ Dubai-based psychologist Aishwarya Menon (BA in Psychology and Criminology, University of Western Ontario, London; MA in Mental Health and Addictions, Humber College, University of Guelph, Toronto), in conversation with CyberPeace.

CyberPeace Policy Recommendations:

1) Countering misinformation is everyone's shared responsibility. To mitigate the negative effects of infodemics online, we must look at developing strong legal policies, creating and promoting awareness campaigns, relying on authenticated content on mass media, and increasing people's digital literacy.

2) Expert organisations that actively verify information through strategies including prebunking and debunking are among those best placed to refute misinformation and direct users to evidence-based information sources. It is recommended that the countermeasures available to users on platforms be strengthened with evidence-based data and accurate information.

3) The role of social media platforms is crucial in the misinformation crisis, hence it is recommended that social media platforms actively counter the production of misinformation on their platforms. Local, national, and international efforts and additional research are required to implement robust misinformation counterstrategies.

4) Netizens are encouraged to follow official sources to check the reliability of any news or information. They must learn to recognise red flags such as questionable facts, poorly written texts, surprising or upsetting news, fake social media accounts and fake websites designed to look like legitimate ones. Netizens are also encouraged to develop the cognitive skills to discern fact from fiction, and to approach information with a healthy dose of skepticism and curiosity.

Final Words:

With the escalating and disturbing rise of misinformation incidents on various subjects, it is crucial to protect mental health. Safeguarding our minds requires cognitive skills, media literacy, verifying information from trusted sources, and prioritising mental health through self-care practices and staying connected with supportive, authentic networks. Promoting prebunking and debunking initiatives is necessary. Netizens can protect themselves against the negative effects of misinformation and cultivate a resilient mindset in the digital information age.

References:

- https://www.hindawi.com/journals/scn/2021/7999760/

- https://www.ncbi.nlm.nih.gov/pmc/articles/PMC8502082/