#FactCheck - Viral Image of Broken Mahatma Gandhi Statue Is Not from Bangladesh

An image showing a damaged statue of Mahatma Gandhi, broken into two pieces, is being widely shared on social media. The image shows Gandhi’s statue with its head separated from the body, prompting strong reactions online.

Social media users are claiming that the incident occurred in Bangladesh, alleging that Mahatma Gandhi’s statue was deliberately vandalised there. The image is being presented as showing a recent incident and is circulating across platforms with provocative and inflammatory captions.

Cyber Peace Foundation’s research and verification found that the claim being shared online is misleading. Our research revealed that the viral image is not from Bangladesh; it is actually from Chakulia in Uttar Dinajpur district of West Bengal, India.

Claim:

Social media users claim that Mahatma Gandhi’s statue was vandalised in Bangladesh and that the viral image shows a recent incident from the country. One Facebook user shared the video on 19 January 2026, making derogatory remarks and falsely linking the incident to Bangladesh. The post has since been widely shared across social media platforms. (Archived links and screenshots are available.)

Fact Check:

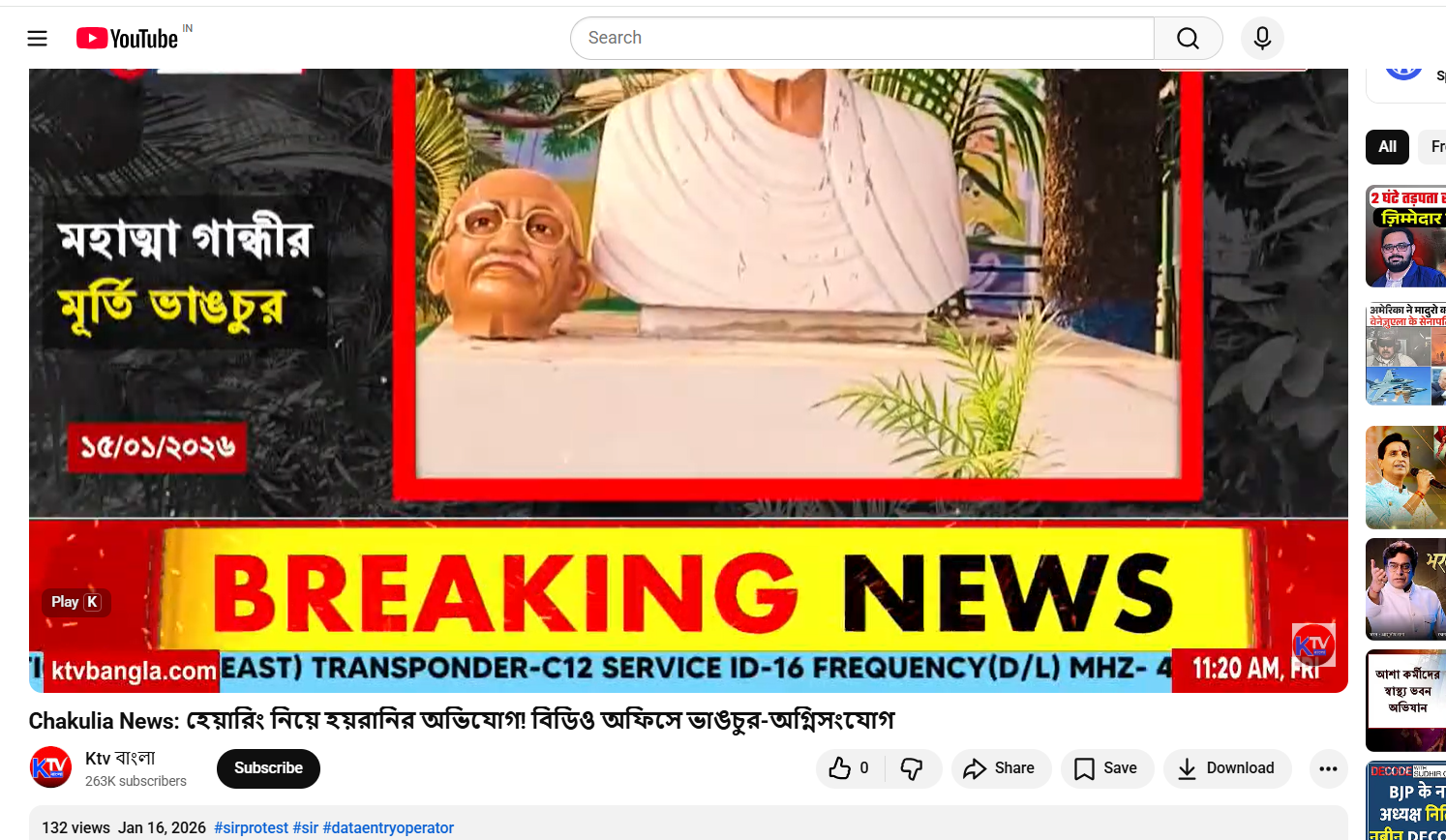

Our research revealed that the viral image is not from Bangladesh but from Chakulia in Uttar Dinajpur district of West Bengal, India. To verify the claim, we ran a reverse image search using Google Lens on key frames from the viral video. This led us to a report published by ABP Live Bangla on 16 January 2026, which featured the same visuals. (Link and screenshot available.)

According to ABP Live Bangla, the statue of Mahatma Gandhi was damaged during a protest in Chakulia. The statue’s head was found separated from the body. While a portion of the broken statue remained at the site on Thursday night, it was reported missing by Friday morning. The report further stated that extensive damage was observed at BDO Office No. 2 in Golpokhar. Gandhi’s statue, located at the entrance of the administrative building, was found broken, and ashes were discovered near the premises. Government staff were seen clearing scattered debris from the site.

The incident reportedly occurred during an SIR (Special Intensive Revision) hearing at the BDO office, which was disrupted by the vandalism. According to the report, 21 people have been arrested so far in connection with the violence and the damage to government property.

In the next stage of verification, we found the same footage in a 16 January 2026 report by local Bengali news channel K TV, which also showed clear visuals of the damaged Mahatma Gandhi statue. (Link and screenshot available.)

Conclusion:

The viral image of Mahatma Gandhi’s broken statue does not depict an incident from Bangladesh. The image is from Chakulia in West Bengal’s Uttar Dinajpur district, where the statue was damaged during a protest.