#FactCheck - Viral Post of Gautam Adani’s Public Arrest Found to Be AI-Generated

Executive Summary:

A viral post on X (formerly Twitter) carries a misleading caption claiming that Gautam Adani was arrested in public for fraud, bribery and corruption. The charges accuse him, his nephew Sagar Adani and six others from his group of allegedly defrauding American investors and orchestrating a bribery scheme to secure a multi-billion-dollar solar energy project awarded by the Indian government. The image turned out to be AI-generated, so always verify claims before sharing posts or photos.

Claim:

An image circulating online purports to show the public arrest of Gautam Adani after a US court accused him and other executives of bribery.

Fact Check:

The picture attached below shows multiple anomalies. The police officer grabbing Adani’s arm (highlighted in a red circle) has six fingers, and Adani’s other hand is completely absent. The left eye of one officer (marked in blue) is inconsistent with the right. The faces of two officers (marked in yellow and green circles) appear distorted, and another officer (shown in a pink circle) appears to have a fully covered face. Taken together, these distortions indicate that the picture was not captured by a camera.

A thorough examination utilizing AI detection software concluded that the image was synthetically produced.

Conclusion:

A viral image purports to show the public arrest of Gautam Adani after a US court accused him of bribery. Our analysis shows that the image is AI-generated, and no credible news report confirms any such arrest. Misinformation of this kind spreads fast and can confuse and harm public perception. Always verify an image by checking for visual inconsistencies and using trusted sources to confirm its authenticity.

- Claim: Gautam Adani arrested in public by law enforcement agencies

- Claimed On: Instagram and X (Formerly Known As Twitter)

- Fact Check: False and Misleading

Related Blogs

Executive Summary:

A video purporting to be from Lal Chowk in Srinagar, which features a hologram of Lord Ram on a clock tower, has gone viral on the internet. The CyberPeace Research Team found that the footage is from Dehradun, Uttarakhand, not Jammu and Kashmir.

Claims:

A viral 48-second clip is being shared across the internet, mostly on X and Facebook. The video shows a car passing a clock tower that displays a picture of Lord Ram. As the car moves forward, a screen at the side of the road showing songs about Lord Ram comes into view.

The claim is that the video is from Srinagar, Kashmir.

Fact Check:

The CyberPeace Research Team found that the claim is false. We first ran a keyword search based on the caption and found that the clock tower in Srinagar does not resemble the one in the video.

We found an NDTV article mentioning the clock tower at Lal Chowk, Srinagar, which is the only clock tower standing in the middle of the road. This gave us reasonable confidence that the video is not from Srinagar. We then broke the video down into frames and ran a reverse image search on them.

We found another video showing a tower with a similar structure in Dehradun.

Taking a cue from this, we searched for the tower in Dehradun and compared it with the one in the video. The comparison confirmed that the tower is the clock tower in Paltan Bazar, Dehradun, and that the video is from Dehradun, not Srinagar.
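The step of breaking the video into frames before a reverse image search can be sketched as follows. This is a minimal illustration, assuming that a handful of evenly spaced frames is enough to find a match; the function name `sample_timestamps` and the choice of six frames are hypothetical and do not describe the team's actual tooling.

```python
def sample_timestamps(duration_s: float, n_frames: int) -> list[float]:
    """Return n_frames timestamps (in seconds), one at the midpoint of
    each equal-length segment of a clip lasting duration_s seconds."""
    if duration_s <= 0 or n_frames <= 0:
        raise ValueError("duration_s and n_frames must be positive")
    segment = duration_s / n_frames
    # Midpoints avoid the first and last instants, which are often
    # black frames or title cards.
    return [round(segment * (i + 0.5), 2) for i in range(n_frames)]


# Assumed example: six frames from the 48-second clip described above.
timestamps = sample_timestamps(48, 6)
print(timestamps)
```

Each timestamp would then be exported as a still image with any video tool and submitted to a reverse image search engine.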

Conclusion:

After a thorough fact-check investigation of the video and its origin, we found that the visual of Lord Ram on the clock tower is from Dehradun, not Srinagar. The claim by internet users that the visual of Lord Ram is from Srinagar is baseless misinformation.

- Claim: The Hologram of Lord Ram on the Clock Tower of Lal Chowk, Srinagar

- Claimed on: Facebook, X

- Fact Check: Fake

Executive Summary

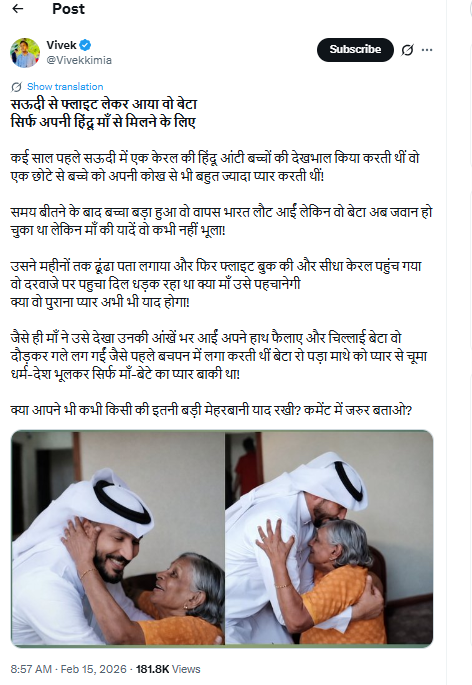

A photo is going viral on social media showing a young man dressed in traditional Arab attire warmly embracing an elderly woman. The post claims that the man flew in from Saudi Arabia to Kerala just to meet his “Hindu mother,” portraying the image as a heartwarming example of communal harmony. However, research by CyberPeace found that the claim shared with the image is misleading.

Claim

The viral post narrates an emotional story, alleging that years ago a Hindu woman from Kerala worked in Saudi Arabia caring for children and loved a young boy like her own son. After she returned to India, the boy—now grown up—reportedly searched for her for months, booked a flight, and finally reached Kerala to reunite with her. The post describes an emotional reunion filled with tears, affection, and a bond beyond religion and nationality.

Fact Check

A reverse image search of the viral picture led us to a video uploaded on August 18, 2023, on the YouTube channel of social media influencer Hashim Abbas. In the video, he is seen meeting and hugging the elderly woman while extending Onam greetings.

Further examination of Hashim Abbas’ social media accounts revealed several other videos from his Kerala visit. Our research also found that Abbas played a significant role in the Malayalam film Kondotty Pooram.

Additionally, we found a video posted on August 13, 2023, by actress and theatre artist Sandhya Rajendran, daughter of veteran Malayalam actress Vijayakumari. The video shows Vijayakumari teaching Onam songs to Hashim Abbas.

Conclusion

The evidence clearly establishes that the viral claim is misleading. The man seen in the image is Hashim Abbas, who was meeting senior Malayalam actress Vijayakumari to extend Onam greetings. The emotional story about a son flying from Saudi Arabia to reunite with his Hindu mother is fictional and not connected to the viral image.

Introduction

In July 2025, Microsoft’s Digital Defence Report raised the alarm that India is among the top target countries for AI-powered nation-state cyberattacks, with malicious actors automating phishing, creating convincing deepfakes, and influencing opinion with the help of generative AI (Microsoft Digital Defence Report, 2025). Most of the world’s attention has remained on the United States and Europe, but the Asia-Pacific region, and India in particular, has become a major target of AI-based cyber activity. This blog discusses the role of AI in espionage, how it is redefining India’s threat environment, how the government is responding, and what India can learn from the world’s leading cyber powers.

Understanding AI-Powered Cyber Espionage

Conventional cyber espionage aims to hack systems, steal information or bring down networks. With the emergence of generative AI, these strategies have changed completely. It is now possible to automate reconnaissance, create fake voices and videos of authority figures, and run highly advanced phishing campaigns that can pass as genuine even to a trained expert. According to Microsoft’s report, state-sponsored groups are using AI to expand their activities and to target victims more precisely (Microsoft Digital Defence Report, 2025). According to SQ Magazine, almost 42 percent of state-based cyber campaigns in 2025 involved AI components such as adaptive malware or intelligent vulnerability scanners (SQ Magazine, 2025).

AI is altering the power dynamic of cyberspace. Tools that previously required significant technical expertise or substantial investment have become widely available, so smaller countries and non-state actors can now conduct sophisticated cyber operations. The outcome is an accelerating arms race in which AI serves as both the weapon and the armour.

India’s Exposure and Response

India’s exposure stems from its growing online infrastructure and its geopolitical position. The integration of platforms like DigiLocker and CoWIN has expanded the attack surface to hundreds of millions of citizens. Financial institutions, government portals and defence networks are increasingly the targets of more sophisticated cyberattacks. Fake videos of prominent figures, phishing messages built on official templates, and social media manipulation are all now part of disinformation campaigns (Microsoft Digital Defence Report, 2025).

The India Cyber Threat Report 2025, published by the Data Security Council of India (DSCI), reported that attacks using AI are growing exponentially, particularly in the form of malicious behaviour and social engineering (DSCI, 2025). India’s nodal cyber-response agency, CERT-In, has issued several warnings about AI-related scams and AI-generated fake content aimed at stealing personal information or deceiving the public. Meanwhile, enforcement and red-teaming efforts have intensified, but coordination between central agencies, state police and private platforms remains uneven. India also faces an acute shortage of cybersecurity talent, as less than 20 percent of cyber defence jobs are occupied by qualified specialists (DSCI, 2025).

Government and Policy Evolution

The government response to AI-enabled threats is taking three forms: regulation, institutional strengthening, and capacity building. The Digital Personal Data Protection Act 2023 marked a major step in defining digital responsibility (Government of India, 2023). Nonetheless, AI-specific threats such as data poisoning, model manipulation and automated disinformation remain grey areas. The forthcoming National Cybersecurity Strategy will attempt to address them by establishing AI governance guidelines and accountability standards for major sectors.

At the institutional level, organisations such as the National Critical Information Infrastructure Protection Centre (NCIIPC) and the Defence Cyber Agency are incorporating AI-based monitoring into their processes. Public-private initiatives are also emerging. For example, the CyberPeace Foundation and national universities have signed a memorandum of understanding that facilitates specialised training in AI-driven threat analysis and digital forensics (Times of India, August 2025). Despite these positive signs, India does not have a cohesive system for reporting AI incidents. A paper published on arXiv in September 2025 underlines that countries need to develop legal approaches to AI-failure reporting so that AI-initiated failures in fields such as national security are handled with accountability (arXiv, 2025).

Global Implications and Lessons for India

Major economies around the world are moving rapidly to integrate AI innovation with cybersecurity preparedness. The United States and the United Kingdom are spending heavily on AI-enhanced military systems, applying machine learning in security operations hubs and organising AI-based “red team” exercises (Microsoft Digital Defence Report, 2025). Japan is testing cross-ministry threat-sharing platforms that use AI analytics and real-time decision-making (Microsoft Digital Defence Report, 2025).

Four lessons stand out for India.

- First, cyber defence should shift from reactive investigation to proactive intelligence. AI makes it possible not only to detect adversary behaviour after an attack but also to simulate attacks in advance.

- Second, teamwork is essential. Cybersecurity cannot be left to government enforcement alone. The private sector, which maintains the majority of India’s digital infrastructure, must be actively involved in sharing information and knowledge.

- Third, there is the issue of AI sovereignty. Building and hosting defensive AI tools within India would reduce dependence on foreign vendors and minimise potential supply-chain vulnerabilities.

- Lastly, digital literacy is the first line of defence. Citizens should be trained to detect deepfakes, phishing and other manipulated information. Building human awareness is as important as building technical defences (SQ Magazine, 2025).

Conclusion

AI has altered the logic of cyber warfare. Attacks are now faster, harder to trace and scalable as never before. For India, the challenge is no longer building better firewalls but developing the anticipatory intelligence needed to counter AI-powered threats. This requires a national strategy that integrates technology, policy and education.

India can turn its vulnerability into strength through sustained investment, ethical AI governance, and healthy cooperation between the government and the business sector. The next phase of cybersecurity is not about who has more firewalls but about who can learn and adapt more quickly and effectively in a world where machines are already part of the battlefield (Microsoft Digital Defence Report, 2025).

References:

- Microsoft Digital Defense Report 2025

- India Cyber Threat Report 2025, DSCI

- Lucknow based organisations to help strengthen cybercrime research training policy ecosystem, Times of India

- AI Cyber Attacks Statistics 2025: How Attacks, Deepfakes & Ransomware Have Escalated, SQ Magazine

- Incorporating AI Incident Reporting into Telecommunications Law and Policy: Insights from India, arXiv

- The Digital Personal Data Protection Act, 2023