#FactCheck - AI-Generated Video Falsely Shared as ‘Multi-Hooded Snake’ Sighting in Vrindavan

A video is being widely shared on social media showing devotees seated in a boat appearing stunned as a massive, multi-hooded snake—resembling the mythical Sheshnag—suddenly emerges from the middle of a water body.

The video captures visible panic and astonishment among the devotees. Social media users are sharing the clip claiming that it is from Vrindavan, with some portraying the sight as a divine or supernatural event. However, research conducted by the Cyber Peace Foundation found the viral claim to be false. Our research revealed that the video is not authentic and has been generated using artificial intelligence (AI).

Claim

On January 17, 2026, a user shared the viral video on Instagram with the caption suggesting that God had appeared again in the age of Kalyug. The post claims that a terrifying video from Vrindavan has surfaced in which devotees sitting in a boat were shocked to see a massive multi-hooded snake emerge from the water. The caption further states that devotees are hailing the creature as an incarnation of Sheshnag or Vasuki Nag, raising religious slogans and questioning whether the sight represents a divine sign. (The link to the post, its archive link, and screenshots are available.)

- https://www.instagram.com/reel/DTngN9FkoX0/?igsh=MTZvdTN1enI2NnFydA%3D%3D

- https://archive.ph/UuAqB

Fact Check:

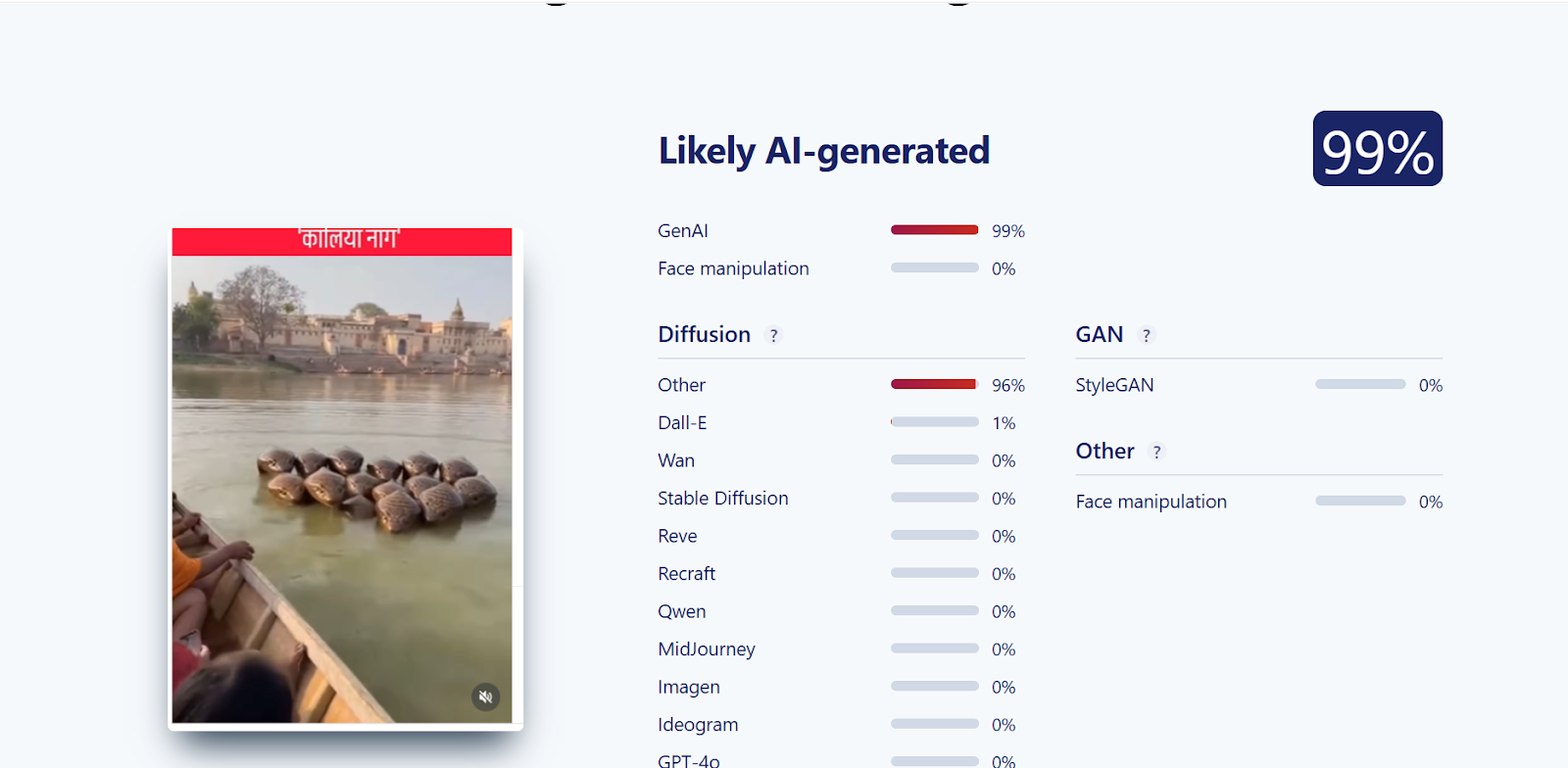

Upon closely examining the viral video, we suspected that it might be AI-generated. To verify this, the video was scanned using the AI detection tool SIGHTENGINE, which indicated that the visual is 99 per cent AI-generated.

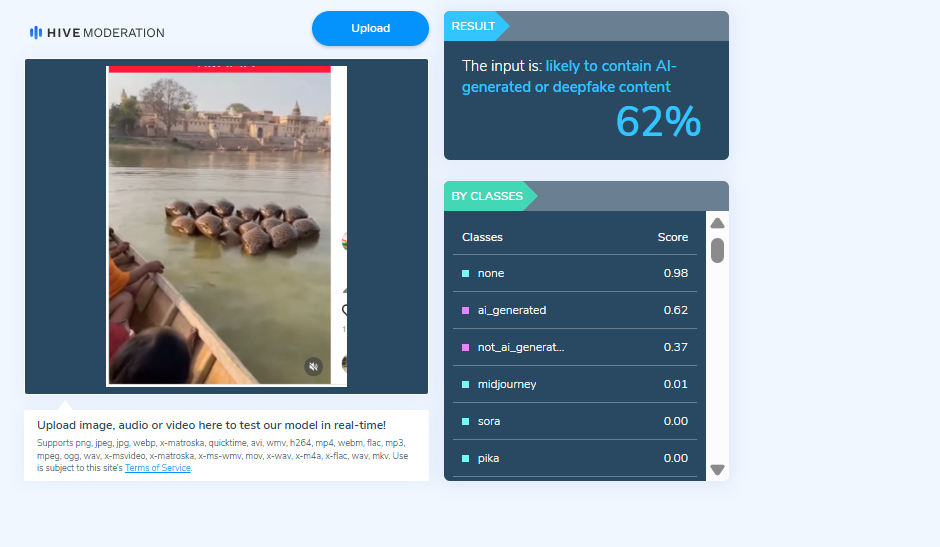

In the next step of the research, the video was analysed using another AI detection tool, HIVE Moderation. According to the results obtained, the video was found to be 62 per cent AI-generated.

Conclusion

Our research clearly establishes that the viral video claiming to show a multi-hooded snake in Vrindavan is not real. The clip has been created using artificial intelligence and is being falsely shared on social media with religious and sensational claims.

Related Blogs

The more the internet works its way into our lives through ease and dependency, the more obscure parasites linger on with it, menacing our privacy and data. Among these digital parasites, cyber espionage, hacking, and ransom attacks have never failed to grab the headlines. These hostilities, carried out by cyber criminals, corporate juggernauts and several state and non-state actors, give them unlimited access to customers’ data, damaging the digital fabric and the wellbeing of netizens.

As technology continues to evolve, so does the need for robust safety measures. To tackle these emerging challenges, Korea-based Samsung Electronics has introduced a cutting-edge security tool called Auto Blocker. Introduced in the One UI 6 update, Auto Blocker offers an array of additional security features, giving users the ability to customize their device's security as per their requirements. One of these protections is also known as an ‘advanced sandbox’ or ‘virtual quarantine’. Sandboxing is a safety measure that isolates running programs to prevent the spread of digital vulnerabilities. Here, it prevents the automatic execution of malicious code embedded in images. This shield now extends to third-party apps like WhatsApp and Facebook Messenger, providing better resilience against cyber-attacks on Samsung devices.

Matter of Choice

Dr. Seungwon Shin, EVP & Head of Security Team, Mobile eXperience Business at Samsung Electronics, emphasizes the significance of user safety. He stated, “At Samsung, we constantly strive to keep our users safe from security attacks, and with the introduction of Auto Blocker, users can continue to enjoy the benefits of our open ecosystem, knowing that their mobile experience is secured.”

Auto Blocker is a matter of choice. It's not a cookie-cutter solution; instead, its USP is the ability to customize the security measures of your device. Auto Blocker can be accessed through the device’s settings and is activated via a toggle.

Your personal Digital Armor

One of Auto Blocker's salient features is its ability to prevent bloatware (unnecessary apps) from unknown sources from being installed on the device, a practice called sideloading. While sideloading provides greater control and better customization, it also exposes users to potential threats, such as malicious file downloads. The proactive approach of Auto Blocker disables sideloading by default. Auto Blocker serves as an extra line of defense, especially against damaging social engineering attacks such as voice phishing (vishing). The feature includes an essential tool called ‘Message Guard’, engineered to combat zero-click attacks. These sophisticated attacks are executed when a message containing an image is viewed.

Auto Blocker also offers a wide variety of new controls to enhance the device’s safety, including security scans to detect malware. Additionally, Auto Blocker prevents the installation of malware via USB cable. This ensures the device's security even when someone gains physical access to it, such as when the device is being charged in a public place.

Raising the Bar for Cyber Security

Auto Blocker testifies to Samsung's unwavering commitment to the safety and privacy of its users. It acts as an essential part of Samsung's security suite and privacy innovations, improving the overall mobile experience within the Galaxy ecosystem. It provides a safer mobile experience while allowing users superior control over their device's protection. In comparison, Apple offers a more standardized approach to privacy and security, with an emphasis on user-friendly design and a closed ecosystem. Samsung disables sideloading to combat threats, while Apple is more flexible in this regard on macOS.

In this dynamic digital space, Auto Blocker offers a tool to maintain cyber peace and resilience. It protects against a broad spectrum of digital hostilities while allowing us to embrace the new digital ecosystem crafted by Galaxy. It's a security feature that puts you in control, allowing you to determine how you fortify your digital fort to safeguard your device against digital specters like zero-click attacks, voice phishing (vishing) and malware downloads.

Samsung’s new product emerges as impenetrable armor shielding users against cyber hostilities. With its new customizable security feature within the Galaxy ecosystem, it allows users to exercise greater control over their digital space, promoting a more secure and peaceful cyberspace.

Reference:

HT News Desk. (2023, November 1). Samsung unveils new Auto Blocker feature to protect devices. How does it work? Hindustan Times. https://www.hindustantimes.com/technology/samsung-unveils-new-auto-blocker-feature-to-protect-devices-how-does-it-work-101698805574773.html

Introduction

With mobile phones at the centre of our working and personal lives, the SIM card, which was once just a plain chip that links phones with networks, has turned into a vital component of our online identity. SIM cloning has become a sneaky but powerful cyber-attack, in which attackers are able to subvert multi-factor authentication (MFA), intercept sensitive messages, and empty bank accounts, frequently without the victim's immediate awareness. As threat actors become more sophisticated, knowing the process, effects, and prevention of SIM cloning is essential for security professionals, telecom operators, and individuals alike.

Understanding SIM Cloning

SIM cloning is the act of making an exact copy of a victim's original SIM card. After cloning, the attacker's phone acts like the victim's, receiving calls, messages, and OTPs. This allows for a variety of cybercrimes, ranging from unauthorised financial transactions to social media account hijacking. The attacker virtually impersonates the victim, often leading to disastrous outcomes.

The cloning can be executed through various means:

● Phishing or Social Engineering: The attacker tricks the victim or a mobile carrier into divulging personal information or issuing a replacement SIM.

● SIM Swap Requests: Attackers use fake IDs or stolen credentials to make telecom providers port the victim's number to a new SIM.

● SS7 Protocol Exploitation: Certain sophisticated attacks target weaknesses in the Signalling System No. 7 (SS7) protocol employed by cellular networks to communicate.

● Hardware-based SIM Cloning: Although uncommon, experienced attackers can clone SIMs using specialized hardware and malware that steals authentication keys.

The Real-World Consequences

The harm inflicted by SIM cloning is systemic as well as personal. Victims lose control of their phone numbers and online accounts, often realising the breach only after unauthorised transactions or login attempts have occurred. The FBI reported losses of over $50 million in 2023 from SIM-related crimes, most of which involved cryptocurrency accounts and high-net-worth individuals.

Closer to home, Indian entrepreneurs, journalists, and fintech users have reported losing access to their numbers, only to have their WhatsApp, UPI, and banking apps taken over. In a few instances, the attackers even messaged the victim's contacts, posing as the victim to scam others.

Why the Threat Is Growing

Dependence on SMS-based OTPs is still a core vulnerability. Even as there are attempts to move towards app-based two-factor authentication (2FA), most banking, government, and e-commerce websites continue to employ SMS as their main authentication method. This reliance provides an entry point for attackers who can replicate a SIM and obtain OTPs without detection.
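To illustrate why app-based 2FA does not share this weakness, here is a minimal sketch of how a time-based one-time password (TOTP, as specified in RFC 6238) can be computed. The code is derived locally from a shared secret and the current time, so nothing travels over SMS for a cloned SIM to intercept. The function name `totp` and its parameters are illustrative choices, and the secret shown is the published RFC test key, not something a real service would use.

```python
import hashlib
import hmac
import struct
import time
from typing import Optional


def totp(secret: bytes, at_time: Optional[float] = None,
         step: int = 30, digits: int = 6) -> str:
    """Compute an RFC 6238 time-based one-time password using HMAC-SHA1."""
    if at_time is None:
        at_time = time.time()
    # Number of whole time steps since the Unix epoch.
    counter = int(at_time) // step
    # The counter is encoded as an 8-byte big-endian integer.
    digest = hmac.new(secret, struct.pack(">Q", counter), hashlib.sha1).digest()
    # Dynamic truncation (RFC 4226): the low 4 bits of the last byte pick an offset.
    offset = digest[-1] & 0x0F
    value = struct.unpack(">I", digest[offset:offset + 4])[0] & 0x7FFFFFFF
    return str(value % (10 ** digits)).zfill(digits)


# Illustrative use with the RFC 6238 test key (never use a known key in practice).
demo_secret = b"12345678901234567890"
print("Current code:", totp(demo_secret))
```

Because both the authenticator app and the server hold the same secret, each side can compute the code independently. An attacker who controls only the phone number gains nothing without that secret.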

Vulnerabilities in telecom infrastructure are also part of the issue. Insider attacks at telecom operators, where malicious employees process fraudulent SIM swap requests, also keep cropping up. On top of that, most users are not even aware of what exactly SIM cloning is or how to identify it, leaving attackers with a head start.

Very often, the victims are only aware that their SIM has been cloned when they lose mobile service or notice unusual activity on their accounts. Red flags include loss of signal, failure to send or receive messages, and inability to receive OTPs. Alerts on password changes or unusual login attempts must never be taken lightly, particularly if this is coupled with loss of mobile service.

How Users Can Protect Themselves

● Use a Strong SIM PIN: This protects your SIM from access by unauthorized users should your phone be lost or stolen.

● Secure Personal Information: Don't post sensitive personal information online that could be used in social engineering.

● Notify your Carrier of Suspicious Activity: If your phone suddenly loses service or is behaving strangely, contact your mobile operator immediately.

● Register for Telecom Alerts: Many providers offer alerts for SIM swap or porting requests, which help detect a possible takeover early.

● Verify SIM card status using Sanchar Saathi: Visit [https://sancharsaathi.gov.in](https://sancharsaathi.gov.in) to check how many mobile numbers are issued using your ID. This government portal allows you to identify unauthorized or unknown SIM cards, helping prevent SIM swapping fraud. You can also request to block suspicious numbers linked to your identity.

Conclusion

SIM cloning is not a retrograde nod to vintage cybercrime; it's an effective method of exploitation, especially where there's a strong presence of SMS-based authentication. The attack vector is simple, but the damage it causes can be profound, both financial and reputational. With telecommunication networks forming the backbone of digital identity, users, regulators, and telecom service providers have to move in tandem. For users, awareness is the best protection. For telecoms, security must be a baseline requirement, not a value-add option. It's time to redefine mobile security, before your identity is in someone else's hands.

References

● https://www.trai.gov.in/faqcategory/mobile-number-portability

● https://www.cert-in.org.in/PDF/Digital_Threat_Report_2024.pdf

● https://www.ic3.gov/PSA/2022/PSA220208/

● https://www.hdfcbank.com/personal/useful-links/security/beware-of-fraud/sim-swap

● https://security-gen.com/SecurityGen-Article-Cloning-SimCard.pdf

● https://www.p1sec.com/blog/understanding-ss7-attacks-vulnerabilities-impacts-and-protection-measures

Over the last decade, battlefields have spread from mountains, deserts, jungles, seas, and the skies into the invisible networks of code and cables. Cyberwarfare is no longer a distant possibility but today’s reality. The 2007 cyberattacks on Estonia, the crippling of Iran’s nuclear program by the Stuxnet worm, and the SolarWinds and Colonial Pipeline breaches in recent years have proved one thing: nations can now paralyze economies and infrastructures without firing a bullet. Cyber operations often fall below the traditional threshold of war, allowing aggressors to exploit the grey zone where full-scale retaliation is unlikely.

At the same time, this ambiguity has also given rise to the concept of cyber deterrence. The concept is borrowed from the nuclear strategies of the Cold War era and adapted to the digital age. At its core, cyber deterrence seeks to alter the adversary’s cost-benefit calculation so that attacks become either too costly or pointless to pursue. While power blocs like the US, Russia, and China continue to build up their cyber arsenals, smaller nations can hold unique advantages, most importantly in terms of their resilience, if not firepower.
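The cost-benefit calculation can be made concrete with a toy model. The sketch below is an illustration only: the formula, the function names, and every number in it are assumptions made for this example, not figures from any study. It treats the attacker's expected payoff as the chance of success times the gain, minus the cost of attacking and the expected penalty.

```python
def attacker_expected_payoff(gain, p_success, attack_cost, p_punished, penalty):
    """Expected net payoff of an attack under a simple, illustrative model."""
    return p_success * gain - attack_cost - p_punished * penalty


def is_deterred(**scenario):
    """An attack is treated as deterred when it is not expected to pay off."""
    return attacker_expected_payoff(**scenario) <= 0


# Hypothetical numbers in arbitrary units, chosen only to show the mechanics.
baseline = dict(gain=100.0, p_success=0.8, attack_cost=10.0,
                p_punished=0.1, penalty=50.0)
# Hardened defences: the attack rarely succeeds, so it becomes pointless.
hardened = {**baseline, "p_success": 0.1}
# Credible retaliation: the attack is likely to be punished, so it becomes costly.
retaliation = {**baseline, "p_punished": 0.9, "penalty": 100.0}

for name, scenario in [("baseline", baseline),
                       ("hardened", hardened),
                       ("retaliation", retaliation)]:
    payoff = attacker_expected_payoff(**scenario)
    print(f"{name}: payoff={payoff:.1f}, deterred={is_deterred(**scenario)}")
```

In this toy setting, either lowering the chance of success or raising the expected penalty is enough to push the payoff below zero, which mirrors the two routes of making attacks pointless or too costly.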

Understanding the concept of Cyber Deterrence

Deterrence, in its classic sense, is about preventing action through the fear of consequences. It usually works through four mechanisms:

- Punishment: threatening to impose costs on attackers, whether through counter-attacks, economic sanctions, or even conventional forces.

- Denial: making attacks futile through hardened defences and ensuring that systems can resist, recover, and continue to function.

- Entanglement: leveraging interdependence in trade, finance, and technology to make attacks costly for both attackers and defenders.

- Norms: shaping behaviour by stigmatizing reckless cyber actions and imposing reputational costs that can exceed any gains.

However, great powers have always emphasized the importance of punishment as a tool to showcase their power by employing offensive cyber arsenals to instill psychological pressure on their rivals. Yet in cyberspace, punishment has inherent flaws.

The Advantage of Asymmetry

For small states, smaller geographical size can be turned into a benefit. Three advantages stand out:

- With fewer critical infrastructures to protect, resources can be concentrated. For example, Denmark, with a modest population and a cyber budget of $40 million, is considered to be among the most cyber-secure nations, even though its spending is a fraction of the billions spent by the US.

- Smaller bureaucracies enable faster response. Singapore's centralised cyber command allows it to ensure rapid coordination between the government and the private sector.

- Smaller countries with smaller populations can foster higher public awareness of and participation in cyber hygiene, amplifying national resilience.

In short, defending a small digital fortress can be easier than securing a sprawling empire of interconnected systems.

Lessons from Estonia and Singapore

The 2007 crisis in Estonia remains a case study in cyber resilience. Although its government, banks, and media were targeted and knocked offline, Estonia emerged stronger by investing heavily in cyber defense mechanisms. It also went on to host NATO’s Cooperative Cyber Defence Centre of Excellence and to build one of the world’s most resilient e-governance models.

Singapore is another case. Recognising its vulnerability as a global financial hub, it has adopted a defense-centric deterrence strategy, focusing on redundancy, cyber education, and international partnerships rather than offensive capacity. These approaches show that deterrence is not always about scaring attackers with retaliation; it is also about making attacks meaningless.

Cyber deterrence and Asymmetric Warfare

Cyber conflict can be understood through the lens of asymmetric warfare, where weaker actors use unconventional means against stronger foes. Just as guerrillas outmanoeuvred superpowers in Vietnam and Afghanistan, small states can frustrate the cyber giants by turning their size into a shield. The essence of asymmetric cyber defence lies in three principles:

- Resilience over retaliation, ensuring rapid recovery to neutralise the goals of the attackers.

- Smart investment, focusing limited budgets on critical assets rather than sprawling infrastructures.

- Leveraging norms to shape international opinion, stigmatize aggressors, and increase their reputational costs.

This transforms cyber deterrence from a contest of escalation into a game of endurance, a domain where small states can excel.

Challenges remain as well. Attribution problems persist, and smaller nations still depend on foreign technology, which adversaries have sought to exploit. Talent shortages have also plagued small states, as cyber professionals migrate abroad for more lucrative jobs. Moreover, building deterrence through norms requires active multilateral cooperation, which not all small nations may be able to sustain.

Conclusion

Cyberwarfare represents a new frontier of asymmetric conflict where size does not guarantee safety or supremacy. Great powers have often dominated in offensive cyber arsenals, while small states have carved their own path towards security by focusing on defence, resilience, and international collaboration. The examples of Singapore and Estonia demonstrate that a state's small size can be a hidden strength in domains like cyberspace, allowing nimbleness, concentration of resources, and societal cohesion. In the long run, cyber deterrence for small states will rest not on fearsome retaliation but on making attacks futile and recovery inevitable.

References

- https://bluegoatcyber.com/blog/asymmetric-warfare/

- https://digitalcommons.usf.edu/cgi/viewcontent.cgi?article=2268&context=jss

- https://www.linkedin.com/pulse/rising-tide-cyberwarfare-battle-between-superpowers-hussain/

- https://digitalcommons.odu.edu/cgi/viewcontent.cgi?article=1243&context=gpis_etds

- https://www.scirp.org/journal/paperinformation?paperid=141708
