#FactCheck: Viral video of unrest in Kenya is being falsely linked with J&K

Executive Summary:

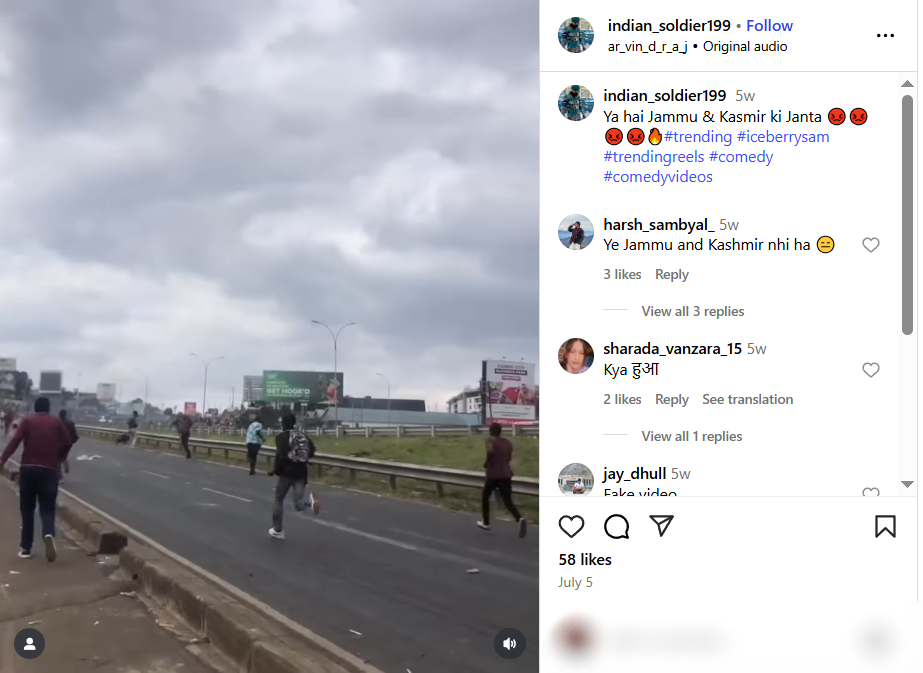

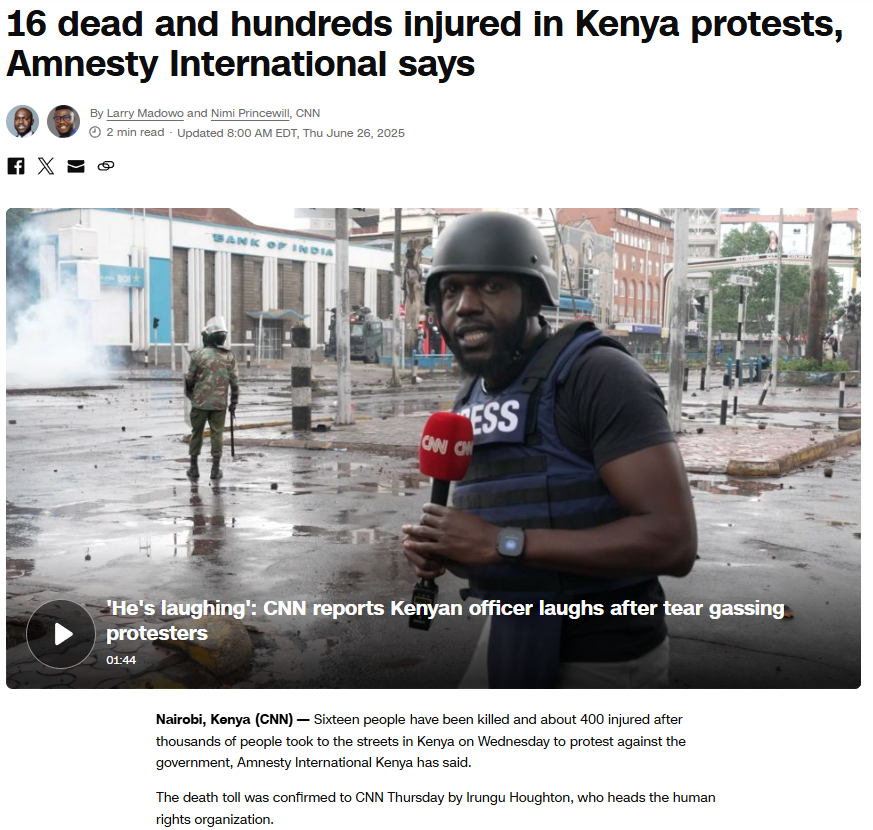

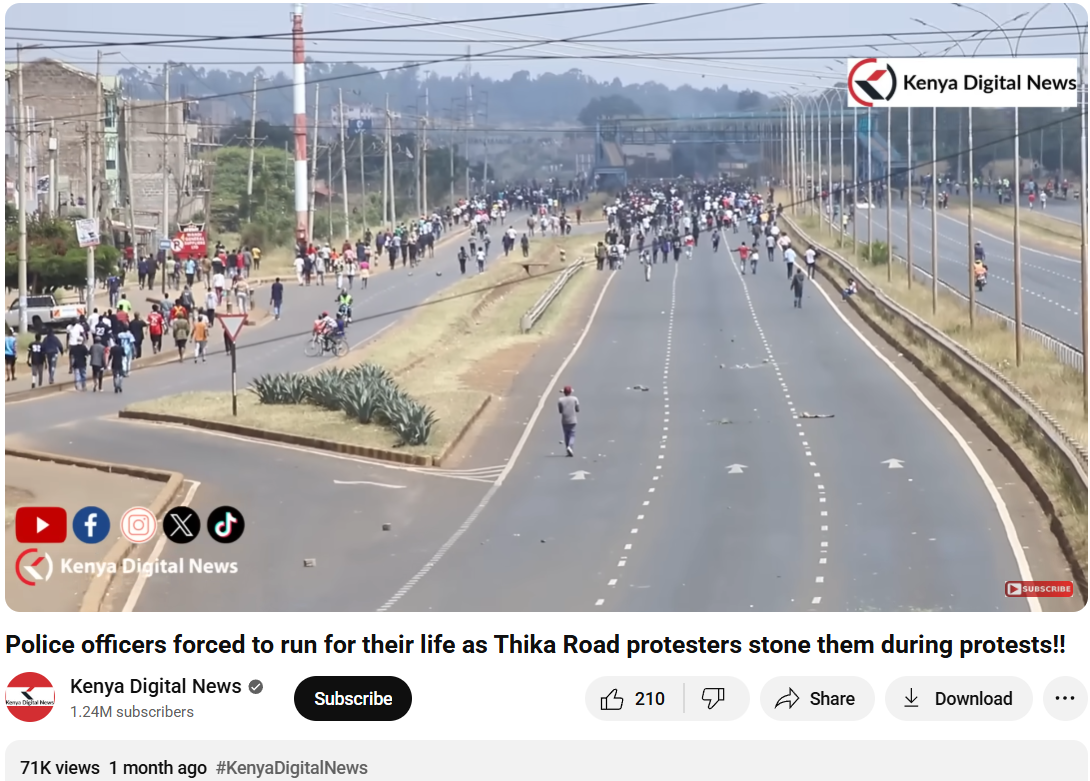

A video of people throwing rocks at vehicles is being shared widely on social media with the claim that it shows unrest in Jammu and Kashmir, India. However, our research found that the video is not from India; it was filmed during a protest in Kenya on 25 June 2025. The video has therefore been misattributed and shared out of context, spreading false information.

Claim:

The viral video shows people hurling stones at army or police vehicles and is claimed to be from Jammu and Kashmir, implying ongoing unrest and anti-government sentiment in the region.

Fact Check:

To verify the viral claim, we ran a reverse image search on key frames taken from the video. The results showed that the video does not come from Jammu and Kashmir as claimed; instead, it matches footage from Nairobi, Kenya, where a significant protest took place on 25 June 2025. Protesters in Kenya had gathered to express their outrage at police brutality and government action, and the demonstrations ultimately led to violent clashes with police.

We also came across a YouTube video carrying similar news coverage and matching frames. The protests were part of a broader anti-government movement and were held to mark its one-year anniversary.

To check the context, we ran a keyword search for mob violence or recent unrest in J&K on a reputable Indian news source, but the search turned up no mention of protests or similar events in J&K around the relevant time. Based on this evidence, it is clear that the video has been intentionally misrepresented and is being circulated with false context to mislead viewers.

Conclusion:

The assertion that the viral video shows a protest in Jammu and Kashmir is incorrect. The video appears to have been taken at a protest in Nairobi, Kenya, in June 2025. Labeling the video incorrectly only serves to spread misinformation and stir up uncalled-for political emotions. Always verify where content comes from before you believe it or share it.

- Claim: Army faces heavy resistance from Kashmiri youth — the valley is in chaos.

- Claimed On: Social Media

- Fact Check: False and Misleading

Related Blogs

As e-commerce companies expand their base and sell a wide range of products on their platforms, attackers continue to look for new avenues to exploit and loopholes through which to run scams. A recent method is the brushing scam, which targets online shoppers in order to drive sales. As per reports, it is already being carried out on popular and trusted e-commerce websites such as Amazon and AliExpress, and online shoppers must exercise caution with regard to the packages they receive.

The Brushing Scam

Deriving its name from China’s e-commerce practice, this scam involves sellers creating fake orders and sending them to unsuspecting individuals, posing as e-commerce websites in order to ‘brush up’ the sales figures of their products. The products received are usually of low quality and include items such as low-cost jewellery, seeds, and random gadgets. The aim is to manipulate reviews for a particular product and make it seem popular, so that other buyers are encouraged to purchase it. Most online shoppers today check reviews before making a purchase, and popular items with seemingly trustworthy reviews can go a long way towards influencing customer behaviour. Since many platforms now label reviews tied to genuine purchases in order to counter fake ones, scammers have gone a step further and developed a modus operandi for fake reviews that holds up against basic scrutiny. Some of the packages received under the brushing scam also carry QR codes that, once scanned, lead the receiver to malicious websites.

CyberPeace Insights

Mysterious deliveries that carry no information other than your name and address may seem tempting to many, as receivers might assume that they are part of a marketing campaign offering free products to try. Such deliveries appear more credible because they are packaged to look as though they come from trusted online shopping and e-commerce sites. However, even though receiving products for free might seem harmless, unknown items should be handled carefully, all the more so when they are addressed to an individual with personal details. Receiving such an order is itself an indication that personal information such as one’s name and address has been compromised, and it is likely that the sellers obtained this information through a third party, often by illegal means.

Registering complaints with the concerned e-commerce websites is encouraged, as a growing number of reported cases raises questions and pushes platforms to take action to ensure a secure buying and delivery experience. Awareness that such scams are being carried out against their customers could encourage caution on the part of these platforms and help address the issue on multiple levels. Receivers, for their part, can change the passwords of their e-commerce accounts and enable two-factor authentication (2FA) for better security. They should also exercise caution when receiving such parcels and avoid scanning QR codes on suspicious items.

References

- https://www.livemint.com/technology/tech-news/brushing-scam-explained-from-fake-orders-to-reviews-how-fraudsters-are-manipulating-online-shopping-platforms-11735824384866.html

- https://www.indiatvnews.com/technology/news/beware-of-amazon-scams-how-fraudsters-use-fake-reviews-to-sell-counterfeit-products-2025-01-02-969115

- https://www.indiatoday.in/technology/news/story/brushing-scam-now-makes-buzz-as-it-targets-online-shoppers-everything-you-need-to-know-2659172-2025-01-03

- https://www.msn.com/en-in/money/news/brushing-scam-now-makes-buzz-as-it-targets-online-shoppers-everything-you-need-to-know/ar-AA1wTvon

According to Statista, the number of users in India's digital assets market is expected to reach 107.30 million by 2025 (Impacts of Inflation on Financial Markets, August 2023). India's digital asset market has been growing exponentially, fueled by the increased adoption of cryptocurrencies and blockchain technology. This growth heightens the need for regulation. Digital assets include cryptocurrencies, NFTs, asset-backed tokens, and tokenised real estate.

India has defined digital assets under Section 2(47A) of the Income Tax Act, 1961. The Finance Act 2022-23 added the word 'virtual' to make the term “Virtual Digital Assets” (VDAs). A “virtual digital asset” is any information, code, number, or token, created through cryptographic methods or otherwise and called by any name, that provides a digital representation of value exchanged with or without consideration. A VDA should carry an inherent value and represent a store of value or unit of account that can be used in any financial transaction or investment. VDAs can be stored, transferred, or traded electronically.

Digital Asset Governance: Update and Future Outlook

Indian regulators have been conservative in their approach towards digital assets, with the Reserve Bank of India (RBI) first issuing directions against cryptocurrency transactions in 2018. This ban was set aside by the Supreme Court in 2020. The Cryptocurrency and Regulation of Official Digital Currency Bill of 2021 is an important milestone in the attempt to lay down a framework for an official digital currency issued by the RBI. While some digital assets, such as Central Bank Digital Currencies (CBDCs) and blockchain-based financial applications, are seen as having potential, the Bill proposed a blanket prohibition on private cryptocurrencies.

More recently, however, the landscape has been changing: the RBI's CBDC is intended to provide a state-backed digital alternative to cash under a more structured regulatory framework. The move seeks to balance state control and innovation with investor safety and compliance, and is expected to reduce risk and improve security for investors through strict anti-money laundering (AML) and know-your-customer (KYC) requirements. Against this backdrop, it is important to examine how global regulatory trends influence India's digital asset policies.

Impact of Global Development on India’s Approach

Global regulatory developments influence Indian policy on digital assets. The European Union's Markets in Crypto-Assets (MiCA) regulation introduces a comprehensive regulatory framework for cryptocurrencies that could serve as an inspiration for India. MiCA covers crypto-assets that are not regulated by existing financial services legislation. Its focus on consumer protection and market integrity matches India's own concerns about digital assets, including fraud and price volatility. Additionally, evolving policies in the US, such as regulating crypto exchanges and classifying certain tokens as securities, could also shape India's regulatory posture.

International collaboration is another major contributing factor. India’s regular participation in global forums like the G20 gives it an opportunity to align its digital asset regulations with those of other countries, moving toward a more standardised and predictable framework for cross-border transactions. This could significantly help India, given that the nation has a huge diaspora that provides a critical inflow of remittances.

CyberPeace Outlook

Though digital assets offer India many opportunities, challenges also exist. Cryptocurrency volatility affects investors, and there are concerns over fraud and illicit dealings. Striking a balance between innovation and investor protection is paramount, so that overly restrictive regulations do not stifle the growth of India's digital asset ecosystem.

Financial inclusion, efficient cross-border payments with low transaction costs, and the opening of investment opportunities are a few opportunities offered by digital assets. For example, the tokenisation of real estate throws open real estate investment to smaller investors. To strengthen the opportunities while addressing challenges, some policy reforms and new frameworks might prove beneficial.

CyberPeace Policy Recommendations

- Establish a regulatory sandbox for startups working in the area of blockchain and digital assets. This would allow them to test innovative solutions in a controlled environment with regulatory oversight, minimising risks.

- Clear guidelines for the taxation of digital assets should be provided as they will ensure transparency, reduce ambiguity for investors, and promote compliance with tax regulations. Specific guidelines can be drawn from the EU's MiCA regulation.

- Initiatives such as workshops, online resources, and campaigns should be implemented to improve consumer awareness of digital assets, their benefits, and the associated risks. Partnerships with global fintech firms would provide a great opportunity to learn best practices.

Conclusion

India is positioned at a critical juncture with respect to the debate on digital assets. The challenge that lies ahead is one of balancing innovation with effective regulation. The introduction of the Central Bank Digital Currency (CBDC) and the development of new policies signal a willingness on the part of the regulators to embrace the digital future. At the same time, issues like volatility, fraud, and regulatory compliance continue to pose hurdles. By drawing insights from global frameworks and strengthening ties through international forums, India can pave the way for a secure and dynamic digital asset ecosystem. Embracing strategic measures such as regulatory sandboxes and transparent tax guidelines will not only protect investors but also unlock the immense potential of digital assets, propelling India into a new era of financial innovation and inclusivity.

References

- https://www.weforum.org/agenda/2024/10/different-countries-navigating-uncertainty-digital-asset-regulation-election-year/

- https://www.acfcs.org/eu-passes-landmark-crypto-regulation

- https://www.indiabudget.gov.in/budget2022-23/doc/Finance_Bill.pdf

- https://www3.weforum.org/docs/WEF_Digital_Assets_Regulation_2024.pdf

On 18th November 2022, CyberPeace Foundation, in association with Universal Acceptance, successfully conducted a workshop on Universal Acceptance and Multilingual Internet for the students and faculty of Royal Global University under the CyberPeace Center of Excellence (CCoE). CyberPeace Foundation has always worked to spread awareness of the developments, avenues, opportunities and threats in cyberspace, and the same principle guides the CyberPeace Centre of Excellence, set up in collaboration with various esteemed educational institutes. With this workshop on Universal Acceptance and Multilingual Internet, we at CyberPeace Foundation sought to take these collaborations and our efforts to a new height of knowledge and awareness. The workshop was instrumental in giving the academic and research community a well-rounded view of the multilingual internet, including Internationalized Domain Names (IDNs) and Email Address Internationalization (EAI).

Date: 18th November 2022

Time: 10:00 AM to 12:00 PM

Duration: 2 hours

Mode: Online

Audience: Academia and Research Community

Participants Joined: 130

Crowd Classification: Engineering students (1st and 4th year, all streams) and faculty members

Organizer: Mr. Harish Chowdhary, UA Ambassador

Moderator: Ms. Pooja Tomar, Project Coordinator cum Trainer

Guest Speakers:

- Mr. Abdalmonem Galila, Vice Chair, Universal Acceptance Steering Group (UASG)

- Mr. Mahesh D Kulkarni, Director, Evaris Systems, and former Senior Director, CDAC, Government of India

- Mr. Akshat Joshi, Founder, Think Trans

The first session, “Universal Acceptance (UA) and why UA matters?”, was delivered by Mr. Abdalmonem Galila, Vice Chair, Universal Acceptance Steering Group (UASG).

- What is universal acceptance?

- UA is a cornerstone of a digitally inclusive internet: it ensures that all domain names and email addresses, in any language, script or character length, can be used by all internet-enabled applications, devices and systems.

- Achieving UA ensures that every person has the ability to navigate the internet.

- Different UA issues were also discussed and explained.

- The systems targeted by UA and the implications were discussed in detail.

The second session, “Universal Acceptance to the IDNs and the Economic Landscape”, was delivered by Mr. Akshat Joshi, Founder, Think Trans.

- What is Universal Acceptance?

- The internet has had standards that allow people to use domain names and email addresses in their native scripts. Software developers need to bring their applications up-to-date so that consumers can use their chosen identity.

- A typical problem is that an IDN email address is not recognised by a website form as a valid email address.

- The importance of adopting IDNs:

  - Enables citizens to use their own identity online (correct spelling, native language)

  - Relates to language, culture and content

  - Promotes local and regional content

  - Allows businesses and politicians to better target their messages
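The form-validation problem described in this session can be illustrated with a minimal Python sketch. The ASCII-only pattern below is a hypothetical stand-in for the kind of check many web forms use, and the address is a made-up example. The sketch converts only the domain part to its ASCII (Punycode) form with Python's built-in `idna` codec, which implements the older IDNA 2003 rules; a production system would more likely follow IDNA 2008 and would also need to handle non-ASCII local parts, which this sketch does not attempt.

```python
import re

# Hypothetical ASCII-only pattern, typical of checks that reject IDN addresses.
ASCII_ONLY_EMAIL = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")


def naive_is_valid(address: str) -> bool:
    """Return True only if the whole address matches the ASCII-only pattern."""
    return ASCII_ONLY_EMAIL.fullmatch(address) is not None


def domain_to_ascii(address: str) -> str:
    """Convert the domain part of an address to its ASCII (Punycode) form.

    Uses Python's built-in "idna" codec (IDNA 2003). The local part is left
    unchanged, so addresses with a non-ASCII local part are out of scope here.
    """
    local, _, domain = address.rpartition("@")
    return f"{local}@{domain.encode('idna').decode('ascii')}"


if __name__ == "__main__":
    address = "user@bücher.example"  # made-up example address
    print(naive_is_valid(address))                   # the naive form check rejects it
    print(domain_to_ascii(address))                  # same address with an ASCII domain
    print(naive_is_valid(domain_to_ascii(address)))  # the converted form is accepted
```

Converting the domain before validation is only one possible workaround; the broader point of Universal Acceptance is that the validation logic itself should accept internationalized addresses.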

The third session, “IDNs in Indian languages perspective- challenges and solutions”, was delivered by Mr. Mahesh D Kulkarni, Director, Evaris Systems.

- The session focused on India's multilingual diversity and its impact.

- Most students were not aware of what Unicode and IDNs are or how they are used.

- Students were briefed with real-time examples of IDNs and of domain name implementation in local languages.

- Students were given in-depth knowledge of, and practical exposure to, Universal Acceptance and the multilingual internet.

- Tools and resources for domain names and domain languages were explained.

- The language nuances of India's multilingual diversity were explained with real-time facts and figures.

- IDN email, homograph attacks and homographic variants were introduced with real-time examples.

- Security threats and the IDNA protocols were explained.

- Augmented Backus-Naur Form (ABNF) was explained.

- The stages of Universal Acceptance were explained.
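The homograph attack covered in this session can be illustrated with a rough Python sketch. It flags domain labels that mix letters from more than one script, using the first word of each character's Unicode name as an approximate script label; that shortcut, the function names, and the sample domains are all assumptions made for illustration. It is a heuristic rather than the IDNA protocol's own rules, and it will not catch lookalike names written entirely in a single script.

```python
import unicodedata


def scripts_in(label: str) -> set:
    """Collect approximate script names for the letters in one domain label.

    Assumption: the first word of a character's Unicode name (for example
    "LATIN" or "CYRILLIC") is used as a stand-in for its script.
    """
    scripts = set()
    for ch in label:
        if ch.isalpha():  # skip digits, hyphens and combining marks
            scripts.add(unicodedata.name(ch, "UNKNOWN").split()[0])
    return scripts


def looks_mixed_script(domain: str) -> bool:
    """Return True if any single label mixes letters from several scripts."""
    return any(len(scripts_in(label)) > 1 for label in domain.split("."))


if __name__ == "__main__":
    spoofed = "\u0430pple.com"  # first letter is CYRILLIC SMALL LETTER A
    print(looks_mixed_script(spoofed))         # mixed Cyrillic and Latin label
    print(looks_mixed_script("apple.com"))     # plain Latin labels
    print(looks_mixed_script("भारत.example"))  # each label uses a single script
```

Checking each label separately means a legitimate IDN label followed by a Latin top-level domain is not flagged; only a mix of scripts inside one label is.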