#FactCheck: Viral Video Claiming IAF Air Chief Marshal Acknowledged Loss of Jets Found Manipulated

Executive Summary:

A video circulating on social media falsely claims to show Indian Air Chief Marshal AP Singh admitting that India lost six jets and a Heron drone during Operation Sindoor in May 2025. Our research found that the footage was digitally manipulated by inserting an AI-generated voice clone of Air Chief Marshal Singh into his recent speech, which was streamed live on August 9, 2025.

Claim:

A viral video (archived video) (another link) shared by an X user carries the caption “Breaking: Finally Indian Airforce Chief admits India did lose 6 Jets and one Heron UAV during May 7th Air engagements.” The post claims the video shows the Air Chief Marshal admitting these losses during Operation Sindoor.

Fact Check:

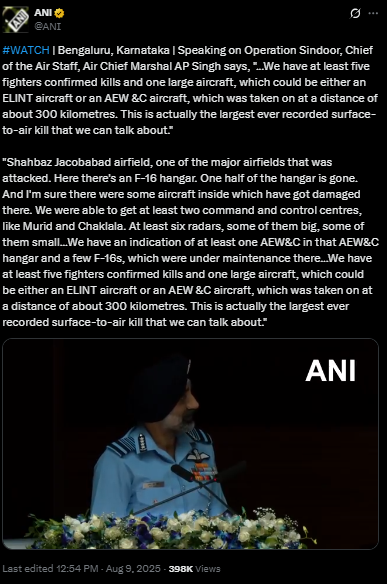

By conducting a reverse image search on key frames from the video, we found a clip posted by the official ANI X handle. After watching the full clip, we did not find any mention of the alleged losses.

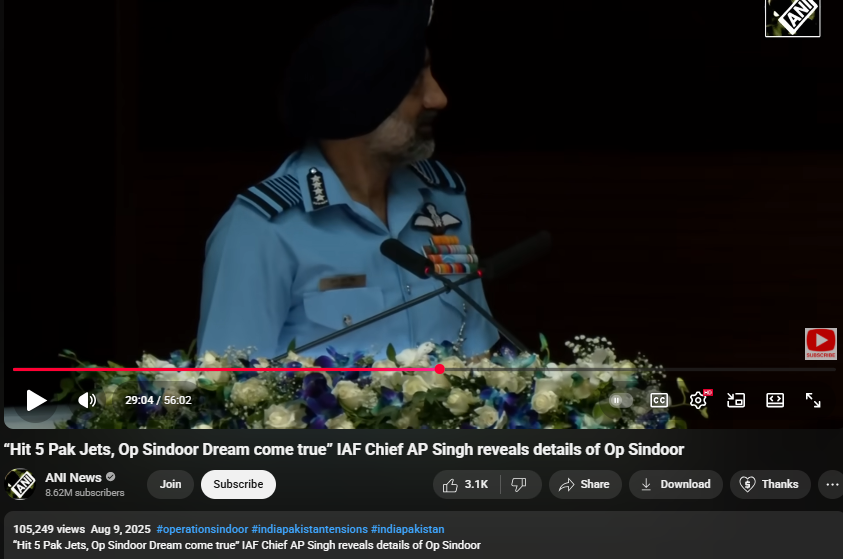

On further research, we found an extended version of the video on ANI's official YouTube channel, published on 9 August 2025. At the 16th Air Chief Marshal L.M. Katre Memorial Lecture in Marathahalli, Bengaluru, Air Chief Marshal AP Singh did not mention any loss of six jets or a drone in relation to the conflict with Pakistan. The discrepancies observed in the viral clip suggest that portions of the audio may have been digitally manipulated.

The audio in the viral video, particularly the segment at the 29:05 mark alleging the loss of six Indian jets, appeared to be manipulated and displayed noticeable inconsistencies in tone and clarity.

Conclusion:

The viral video claiming that Air Chief Marshal AP Singh admitted to the loss of six jets and a Heron UAV during Operation Sindoor is misleading. A reverse image search traced the footage to a clip posted by ANI in which no such remarks were made. Further, an extended version on ANI’s official YouTube channel confirmed that, during the 16th Air Chief Marshal L.M. Katre Memorial Lecture, no reference was made to the alleged losses. Additionally, the viral video’s audio, particularly around the 29:05 mark, showed signs of manipulation, with noticeable inconsistencies in tone and clarity.

- Claim: A viral video shows the IAF Air Chief Marshal acknowledging the loss of six jets and a Heron UAV during Operation Sindoor

- Claimed On: Social Media

- Fact Check: False and Misleading

Related Blogs

Introduction

The world is shifting towards a cashless economy, with innovative payment systems such as UPI and card payments. These systems require the processing, storage, and movement of data belonging to millions of cardholders, and that data is crucial to every successful transaction.

To maintain the credibility of this payment ecosystem, the secure movement and processing of cardholder data is paramount. Entities involved in the payment ecosystem are responsible for the security of cardholder data. Security also matters because a breach of cardholder data can result in financial loss, and fraudsters keep finding clever ways to exploit any security loophole in the payment system.

These entities therefore need to maintain security standards set by a council of network providers in the payment industry, known as the Payment Card Industry Security Standards Council (PCI SSC).

Overview of PCI and PCI DSS Compliance

Earlier, each network provider in the payment industry had its own set of security standards. Later, Visa, Mastercard, American Express, Discover, and JCB together constituted an independent body to develop comprehensive security standards such as PCI DSS, PA-DSS, and PCI PTS. These network providers enforce the standards by attaching conditions to the services they provide to merchants and acquirer banks.

PCI DSS in particular is the global standard that provides a baseline of technical and operational requirements designed to protect account data. It is designed for merchants and service providers in the payment ecosystem, to protect cardholder data against fraud and theft.

It applies to all entities, including third-party vendors, that are involved in processing, storing, or transmitting cardholder data. Within an organization, the entire CDE (Cardholder Data Environment), including every system or network component that stores or processes cardholder data, has to comply with all PCI DSS requirements. PCI SSC recently released a new version, PCI DSS v4.0, with certain changes from the previous version, following a three-year review cycle.

12 Requirements of PCI DSS

This is the most important part of PCI DSS, as following these requirements takes an organization a long way towards PCI compliance. The requirements are:

- Install firewalls or maintain security controls in the network.

- Use strong passwords to secure the CDE (Cardholder Data Environment).

- Protect cardholder data.

- Encrypt cardholder data during transmission over open, public networks.

- Detect malicious activity or software promptly and protect the cardholder data environment from it.

- Update software regularly to maintain secure systems.

- Restrict access to cardholder data on a business need-to-know basis.

- Identify and authenticate users before they access system components.

- Restrict physical access to cardholder data.

- Monitor system components to detect malicious activity internally in real time.

- Audit security controls regularly and find any vulnerabilities in the systems.

- Establish policies and programs that support information security.
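As a rough illustration, the twelve requirement areas listed above could be tracked internally with a simple status map that reports which areas still have gaps. The labels below are short paraphrases of that list, and the names `REQUIREMENTS` and `open_gaps` are hypothetical; this is a sketch of an internal checklist, not an official PCI SSC tool or assessment format.

```python
# Hypothetical internal tracker; labels paraphrase the twelve areas listed above.
REQUIREMENTS = {
    1: "Network security controls (firewalls)",
    2: "Strong passwords to secure the CDE",
    3: "Protect cardholder data",
    4: "Encrypt cardholder data in transit over open, public networks",
    5: "Detect and protect against malicious software",
    6: "Keep software updated",
    7: "Restrict access by business need to know",
    8: "Identify and authenticate users",
    9: "Restrict physical access",
    10: "Monitor system components",
    11: "Audit security controls and find vulnerabilities",
    12: "Maintain information security policies",
}


def open_gaps(status: dict[int, bool]) -> list[str]:
    """Return the labels of requirements not yet marked as in place.

    A requirement that is missing from `status` is treated as not in place.
    """
    return [
        label
        for number, label in REQUIREMENTS.items()
        if not status.get(number, False)
    ]


if __name__ == "__main__":
    # Assumed example: everything in place except requirements 4 and 11.
    status = {number: True for number in REQUIREMENTS}
    status[4] = False
    status[11] = False
    for gap in open_gaps(status):
        print("Gap:", gap)
```

Treating an unlisted requirement as a gap is a deliberate choice here: an area nobody has assessed should not silently count as compliant.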

How an organization can become PCI compliant

- Scope: Determine all the system components and networks that store or process cardholder data, i.e., the Cardholder Data Environment.

- Assess: Test whether these systems and networks comply with all the requirements of PCI DSS.

- Report: Document the assessment through the self-assessment questionnaire, answering questions such as whether each requirement is met and whether it is met using a customized approach.

- Attest: Complete the attestation process available on the PCI SSC website.

- Submit: Submit the reports and other supporting documents if requested by other entities such as payment brands, merchants, or acquirers.

- Remediate: Take remedial action for any requirements that are not in place on the system components or networks.

Conclusion

Cybersecurity is one of the most important issues facing those involved in the digital payment ecosystem. The likelihood of exposure to cybersecurity hazards, including online fraud, information theft, and virus attacks, is rising as more and more users prefer digital payments.

Complying with and adopting these security standards is therefore the need of the hour. Moreover, the RBI has mandated, under a recent notification, that all regulated entities (NBFCs, banks, etc.) comply with these standards.


Introduction

Cyber slavery has emerged as a serious menace. Offenders target innocent individuals, luring them with false promises of employment, only to capture them and subject them to horrific torture and forced labour. According to reports, hundreds of Indians have been held in 'Cyber Slavery' in certain Southeast Asian countries. Indians who travelled to Southeast Asian nations such as Cambodia in the hope of finding work and establishing themselves have fallen into the trap of cyber slavery. According to reports, 30,000 Indians who travelled to this region on tourist visas between 2022 and 2024 did not return. India Today’s coverage showed survivors who escaped and returned to India describing the terrifying experiences they went through while being coerced into cyber slavery.

Tricked by a Job Offer, Trapped in Cyber Slavery

India Today aired testimonials of cyber slavery victims who described how they were trapped. One individual shared that he had applied for a well-paying job as an electrician in Cambodia through an agent in Delhi. However, upon arriving in Cambodia, he was offered a job with a Chinese company where he was forced to participate in cyber scam operations and online fraudulent activities.

He revealed that the workers were given a computer and a mobile phone and were compelled to use these devices to cheat people in India and commit cyber fraud. They were forced to work 12-hour shifts. After working there for several months, he repeatedly asked his agent to help him escape. In response, the Chinese group violently loaded him into a truck, assaulted him, and left him for dead on the side of the road. Despite this, he survived. He contacted locals, eventually got in touch with his brother in India, and managed to return home.

This case highlights how cyber-criminal groups deceive innocent individuals with the false promise of employment and then coerce them into committing cyber fraud against people in their own country. According to the Ministry of Home Affairs' Indian Cyber Crime Coordination Centre (I4C), there has been a significant rise in cybercrimes targeting Indians, with approximately 45% of these cases originating from Southeast Asia.

CyberPeace Recommendations

Cyber slavery has developed into a serious problem that begins with digital deception and progresses to physical torture and violence used to force victims into committing online fraud. It is also a violation of human rights. The government has already taken note of the situation, and the Indian Cyber Crime Coordination Centre (I4C) is taking proactive steps to address it. It is important for netizens to exercise due care and caution, as awareness is the first line of defence. By remaining vigilant, they can detect and resist the digital deceit of phony job opportunities in foreign nations and the manipulative techniques of scammers. Netizens can protect themselves from threats that could seriously harm their lives by staying watchful and double-checking information against reliable sources.

References

- CyberPeace Highlights Cyber Slavery: A Serious Concern https://www.cyberpeace.org/resources/blogs/cyber-slavery-a-serious-concern

- https://www.indiatoday.in/india/story/india-today-operation-cyber-slaves-stories-of-golden-triangle-network-of-fake-job-offers-2642498-2024-11-29

- https://www.indiatoday.in/india/video/cyber-slavery-survivors-narrate-harrowing-accounts-of-torture-2642540-2024-11-29?utm_source=washare

Introduction

The Data Security Council of India’s India Cyber Threat Report 2025 calculates that a staggering 702 potential attacks happened per minute on average in the country in 2024. Recent alleged data breaches at organisations such as Star Health, WazirX, the Indian Council of Medical Research (ICMR), and BSNL highlight the vulnerabilities of government organisations, critical industries, businesses, and individuals in managing their digital assets. India is the second most targeted country for cyber attacks globally, which warrants the development and adoption of cybersecurity governance frameworks for the structured management of cyber environments. The following global models offer valuable insights and lessons that can help strengthen cybersecurity governance.

Overview of Global Cybersecurity Governance Models

Cybersecurity governance frameworks provide a structured strategy to mitigate and address cyber threats. Different regions have developed their own governance models for cybersecurity, but they all emphasize risk management, compliance, and cross-sector collaboration for the protection of digital assets. Four such major models are:

- NIST CSF 2.0 (U.S.A): The National Institute of Standards and Technology Cybersecurity Framework provides a flexible, voluntary, risk-based approach to managing cybersecurity risks rather than a one-size-fits-all solution. It is organized around six core functions: Govern, Identify, Protect, Detect, Respond, and Recover. This widely adopted framework is used by both public and private sector organizations, including outside the U.S.A.

- ISO/IEC 27001: This is a globally recognized standard developed jointly by the International Organization for Standardization (ISO) and the International Electrotechnical Commission (IEC). It sets out requirements for an Information Security Management System (ISMS), giving organizations of all sizes and types a risk-based approach to identify, assess, and mitigate potential cybersecurity threats and to preserve the confidentiality, integrity, and availability of information. Organizations can seek ISO 27001 certification to demonstrate conformance with the standard, which can support compliance with laws and regulations.

- EU NIS2 Directive: The Network and Information Security Directive 2 (NIS2) is an updated EU cybersecurity law that imposes strict obligations on critical service providers in four overarching areas: risk management, corporate accountability, reporting obligations, and business continuity. It is the most comprehensive cybersecurity directive in the EU to date, and non-compliance may attract non-monetary remedies, administrative fines with a maximum of at least €10 million or 2% of global annual revenue (whichever is higher), or even criminal sanctions for top managers.

- GDPR: The General Data Protection Regulation (GDPR) of the EU is a comprehensive data privacy law that also has major cybersecurity implications. It mandates that organizations integrate cybersecurity into their data protection policies and report breaches within 72 hours, and it prescribes a fine of up to €20 million or 4% of global turnover (whichever is higher) for non-compliance.
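To make the fine ceilings quoted above concrete, here is a minimal Python sketch of the "fixed amount or percentage of turnover, whichever is higher" arithmetic. The function name `fine_ceiling_eur` and the example turnover are hypothetical, and the sketch assumes the "whichever is higher" rule for both figures. It only illustrates the upper bound; actual penalties depend on the infringement, the category of entity, and (for NIS2) national transposition.

```python
def fine_ceiling_eur(global_turnover_eur: int, fixed_amount_eur: int, turnover_percent: int) -> int:
    """Return the higher of a fixed amount and a percentage of global turnover.

    Integer arithmetic is used so the result is exact for whole-euro inputs.
    """
    return max(fixed_amount_eur, global_turnover_eur * turnover_percent // 100)


# (fixed amount in EUR, percentage of turnover), as quoted in the list above.
NIS2_CEILING = (10_000_000, 2)
GDPR_CEILING = (20_000_000, 4)

if __name__ == "__main__":
    # Hypothetical company with EUR 1 billion in global annual turnover.
    turnover = 1_000_000_000
    print("NIS2 ceiling:", fine_ceiling_eur(turnover, *NIS2_CEILING))  # 20000000
    print("GDPR ceiling:", fine_ceiling_eur(turnover, *GDPR_CEILING))  # 40000000
```

For a smaller organization the fixed amount dominates: with a turnover of €100 million, the percentage works out to €2 million (NIS2) or €4 million (GDPR), so the ceilings stay at €10 million and €20 million respectively.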

India’s Cybersecurity Governance Landscape

In light of the growing cyber threat, the Indian government has taken comprehensive measures, alongside efforts by relevant agencies such as the Ministry of Electronics and Information Technology, the Reserve Bank of India (RBI), the National Payments Corporation of India (NPCI), the Indian Cyber Crime Coordination Centre (I4C), and CERT-In. However, India still lacks an overarching cybersecurity governance framework or a comprehensive law in this area. Multiple regulatory bodies oversee cybersecurity for various sectors. Key mechanisms are:

- CERT-In Guidelines: The Indian Computer Emergency Response Team, under the Ministry of Electronics and Information Technology (MeitY), is the nodal agency responsible for cybersecurity incident response, threat intelligence sharing, and capacity building. Organizations are mandated to maintain logs for 180 days and report cyber incidents to CERT-In within six hours of noticing them according to directions under the Information Technology Act, 2000 (IT Act).

- IT Act & DPDP Act: These Acts, along with their associated rules, lay down the legal framework for the protection of ICT systems in India. While some sections mandate that “reasonable” cybersecurity standards be followed, specifics are left to the discretion of the organisations. Enforcement frameworks are vague, which leaves sectoral regulators to fill the gaps.

- Sectoral regulations: The Reserve Bank of India (RBI), the Insurance Regulatory and Development Authority of India (IRDAI), the Department of Telecommunications, the Securities and Exchange Board of India (SEBI), the National Critical Information Infrastructure Protection Centre (NCIIPC), and other regulatory bodies require their regulated entities to maintain cybersecurity standards.
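The two CERT-In timelines mentioned in the list above (reporting within six hours of noticing an incident, and keeping logs for 180 days) reduce to simple date arithmetic. The sketch below is illustrative only: the function names are hypothetical, and it assumes both periods are counted in plain elapsed time from the given timestamp.

```python
from datetime import datetime, timedelta

# Periods taken from the CERT-In bullet above.
REPORTING_WINDOW = timedelta(hours=6)
LOG_RETENTION = timedelta(days=180)


def reporting_deadline(noticed_at: datetime) -> datetime:
    """Latest time to report an incident that was noticed at `noticed_at`."""
    return noticed_at + REPORTING_WINDOW


def retention_end(log_written_at: datetime) -> datetime:
    """Earliest time at which a log entry falls outside the 180-day window."""
    return log_written_at + LOG_RETENTION


if __name__ == "__main__":
    # Assumed example timestamps.
    noticed = datetime(2025, 1, 1, 9, 30)
    print("Report by:", reporting_deadline(noticed))
    print("Keep log until:", retention_end(datetime(2025, 1, 1)))
```

An organization's own retention policy may well be longer than 180 days; the function only marks the minimum period described above.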

Lessons for India & Way Forward

As the world faces unprecedented security and privacy threats to its digital ecosystem, the need for more comprehensive cybersecurity policies, awareness, and capacity building has perhaps never been greater. While cybersecurity practices may vary with the size, nature, and complexity of an organization (hence “reasonableness” informing the measures taken), India needs a centralized governance framework similar to NIST CSF 2.0 to unify sectoral requirements, simplify compliance, and improve enforcement. India ranks 10th on the World Cybercrime Index and was found to be "specialising" in scams and mid-tech crimes, which affect mid-range businesses and individuals the most. To protect them, India needs to strengthen its enforcement mechanisms across more than just the critical sectors. Options include penalizing more stringently the bigger organizations that handle user data susceptible to breaches, creating an enabling environment for strong cybersecurity practices through incentives for MSMEs, and investing in cybersecurity workforce training and capacity building. Finally, there is scope for increased public-private collaboration on real-time cyber intelligence sharing. A unified, risk-based national cybersecurity governance framework encompassing the current multi-pronged landscape would give direction to siloed efforts. It would help standardize best practices, streamline compliance, and strengthen overall cybersecurity resilience across all sectors in India.

References

- https://cdn.prod.website-files.com/635e632477408d12d1811a64/676e56ee4cc30a320aecf231_Cloudsek%20Annual%20Threat%20Landscape%20Report%202024%20(1).pdf

- https://strobes.co/blog/top-data-breaches-in-2024-month-wise/#:~:text=In%20a%20large%2Dscale%20data,emails%2C%20and%20even%20identity%20theft.

- https://www.google.com/search?q=nist+2.0&oq=nist+&gs_lcrp=EgZjaHJvbWUqBggBEEUYOzIHCAAQABiPAjIGCAEQRRg7MgYIAhBFGDsyCggDEAAYsQMYgAQyBwgEEAAYgAQyBwgFEAAYgAQyBwgGEAAYgAQyBggHEEUYPNIBCDE2MTJqMGo3qAIAsAIA&sourceid=chrome&ie=UTF-8

- https://www.iso.org/standard/27001

- https://nis2directive.eu/nis2-requirements/

- https://economictimes.indiatimes.com/tech/technology/india-ranks-number-10-in-cybercrime-study-finds/articleshow/109223208.cms?from=mdr