#FactCheck: Old Thundercloud Video from Lviv city in Ukraine (2021) Falsely Linked to Delhi NCR, Gurugram and Haryana

Executive Summary:

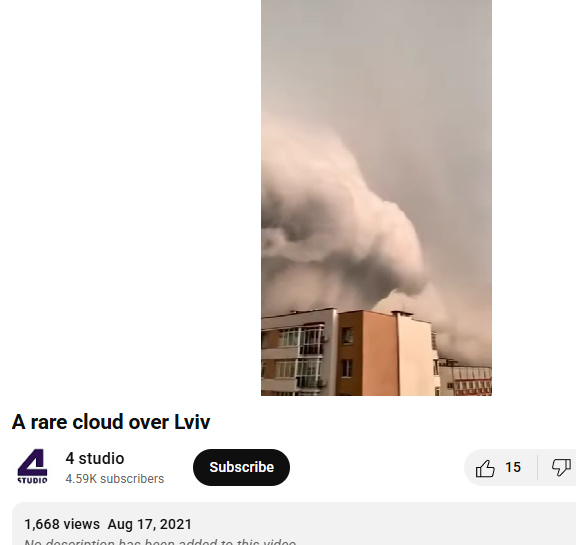

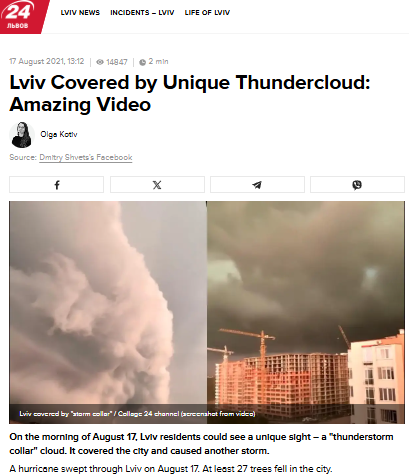

A viral video claims to show a massive cumulonimbus cloud over Gurugram, Haryana, and Delhi NCR on 3rd September 2025. However, our research reveals the claim is misleading. A reverse image search traced the visuals to Lviv, Ukraine, dating back to August 2021. The footage matches earlier reports and was even covered by the Ukrainian news outlet 24 Kanal, which published the story under the headline “Lviv Covered by Unique Thundercloud: Amazing Video”. Thus, the viral claim linking the phenomenon to a recent event in India is false.

Claim:

A viral video circulating on social media claims to show a massive cloud formation over Gurugram, Haryana, and the Delhi NCR region on 3rd September 2025. The cloud appears to be a cumulonimbus formation, which is typically associated with heavy rainfall, thunderstorms, and severe weather conditions.

Fact Check:

After conducting a reverse image search on key frames of the viral video, we found matching visuals from videos that attribute the phenomenon to Lviv, a city in Ukraine. These videos date back to August 2021, thereby debunking the claim that the footage depicts a recent weather event over Gurugram, Haryana, or the Delhi NCR region.

Further research revealed that the Ukrainian news channel 24 Kanal had reported on the Lviv thundercloud phenomenon in August 2021. The report was published under the headline “Lviv Covered by Unique Thundercloud: Amazing Video” (original in Russian, translated into English).
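The key-frame comparison described above can be illustrated with a small sketch. It is not the method used by any particular reverse image search engine; it only shows one common idea, an average hash compared by Hamming distance, on tiny grayscale grids. The function names and the pixel values are hypothetical and chosen purely for illustration.

```python
def average_hash(pixels):
    """Return a list of bits: 1 where a pixel is brighter than the frame's mean."""
    flat = [value for row in pixels for value in row]
    mean = sum(flat) / len(flat)
    return [1 if value > mean else 0 for value in flat]


def hamming_distance(hash_a, hash_b):
    """Count the positions at which two equal-length hashes differ."""
    return sum(a != b for a, b in zip(hash_a, hash_b))


# Hypothetical 4x4 grayscale thumbnails (0 = black, 255 = white).
viral_frame = [
    [200, 210, 190, 180],
    [220, 230, 200, 170],
    [60, 50, 40, 30],
    [20, 10, 15, 25],
]
# The same scene re-uploaded slightly brighter (every pixel +5).
archived_frame = [[value + 5 for value in row] for row in viral_frame]
# An unrelated checkerboard-like image.
unrelated_frame = [[10, 200, 10, 200] for _ in range(4)]

same_scene = hamming_distance(average_hash(viral_frame), average_hash(archived_frame))
different_scene = hamming_distance(average_hash(viral_frame), average_hash(unrelated_frame))
print(same_scene, different_scene)
```

A small distance suggests two frames show the same scene even after re-encoding or brightness changes, which is why an old clip can be matched to its earlier uploads. A match still has to be confirmed by checking the date and location of the earlier source, as was done here.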

Conclusion:

The viral video does not depict a recent weather event in Gurugram or Delhi NCR, but rather an old incident from Lviv, Ukraine, recorded in August 2021. Verified sources, including Ukrainian media coverage, confirm this. Hence, the circulating claim is misleading and false.

- Claim: Old Thundercloud Video from Lviv city in Ukraine (2021) Falsely Linked to Delhi NCR, Gurugram and Haryana.

- Claimed On: Social Media

- Fact Check: False and Misleading.

Related Blogs

Introduction

Meta is the leader among social media platforms and has built a widespread network of users and services across global cyberspace. The company has been revolutionizing messaging and connectivity since 2004. Its platforms have brought people closer together, but being among the most popular platforms is also a problem. Cybercriminals favour popular platforms for obtaining data without authorisation and for creating chatrooms that preserve anonymity and prevent tracking. These bad actors often operate under fake names or accounts to avoid being caught. Platforms like Facebook and Instagram have often been in the headlines as portals where cybercriminals operate and commit crimes.

To keep netizens' data safe and secure, Paytm is offering customers a first-of-its-kind service that protects against cyber fraud through an insurance policy covering fraudulent mobile transactions up to Rs 10,000 for a premium of Rs 30. The cover ‘Paytm Payment Protect’ is provided through a group insurance policy issued by HDFC Ergo. The company said that the plan is being offered to increase the trust in digital payments, which will push up adoption.

Meta’s Cybersecurity

Meta has some of the best cybersecurity in the world, but that doesn't mean it cannot be breached. The social media giant is especially vulnerable to data breaches because various third parties are also involved. As seen in the case of Cambridge Analytica, a huge chunk of user data was available to influence users in terms of elections. Meta needs to stay ahead of the curve to keep its platform safe and secure. To that end, it has deployed various AI- and ML-driven crawlers and software that work on keeping the platform safe for its users, figure out which accounts may be used by bad actors, and remove the criminal accounts. This is supported by users' active participation in the reporting mechanism. Meta-Cyber provides visibility into all OT activities, continuously monitors PLC and SCADA systems for configuration changes, and checks authorization and its levels. Meta also runs various penetration testing and bug bounty programs to reduce vulnerabilities in its systems and applications. The testers are paid handsomely, depending on the scope of the vulnerability they find.

CyberRoot Risk Investigation

Social media giant Meta has taken down over 40 accounts operated by an Indian firm, CyberRoot Risk Analysis, which was allegedly involved in hack-for-hire services. Meta has also taken down 900 fraudulently run accounts that are said to be operated from China by an unknown entity. CyberRoot Risk Analysis shared malware over the platform and used the platform to impersonate people resembling its targets, including lawyers, doctors, and entrepreneurs, people in industries such as cosmetic surgery, real estate, investment firms, pharmaceuticals, and private equity firms, and environmental and anti-corruption activists. The operators would get in touch with such personalities and then share malware hidden in files, which would often lead to data breaches and, subsequently, to different types of cybercrimes.

Meta and its team are working tirelessly to eradicate the influence of such bad actors from its platforms, and the use of AI- and ML-based tools has increased exponentially.

Paytm CyberFraud Cover

Paytm is offering customers protection against cyber fraud through an insurance policy available for fraudulent mobile transactions up to Rs 10,000 for a premium of Rs 30. The cover ‘Paytm Payment Protect’ is provided through a group insurance policy issued by HDFC Ergo. The company said that the plan is being offered to increase the trust in digital payments, which will push up adoption. The insurance cover protects transactions made through UPI across all apps and wallets. The insurance coverage has been obtained by One97 Communications, which operates under the Paytm brand.

The exponential increase in the use of digital payments during the pandemic has made more people susceptible to cyber fraud. While UPI has all the digital safeguards in place, most UPI-related frauds are carried out by confidence tricksters who get their victims to authorise a transaction by passing off collect requests as payments. There are also many fraudsters collecting payments by pretending to be merchants. These types of fraud resulted in a loss of more than Rs 63 crore in the previous financial year. Data insurance is new to India but is indeed the need of the hour. The majority of netizens are unaware of the value of their data and hence remain indifferent to data protection. Such steps will result in safer data management and protection mechanisms, thus safeguarding the Indian cyberspace.

Conclusion

Cyberspace is at a critical juncture in terms of data protection and privacy. With new legislation on the subject coming out, we can expect new and stronger policies to prevent cybercrimes and cyber-attacks. Tech giants like Meta need to step up the pace and efficiency of their efforts to protect both the platform and the user, so that the platforms remain secure in the future. The concept of data insurance needs to be shared with netizens to increase awareness about the subject. The initiative by Paytm is a monumental one, as it will encourage more platforms and banks to commit to coverage for cybercrimes. With cases of cybercrime increasing, such financial coverage comes as a ray of hope and security for netizens.

Introduction

The insurance industry is a target for cybercriminals due to the sensitive nature of the information it holds. This makes it essential for insurance companies to have robust cybersecurity measures to protect their data and customers’ personal information.

Cyber fraud in India’s insurance industry is increasing. It is reported that the Indian insurance sector has witnessed a surge in cyber-attacks, with several instances of data breaches, identity thefts, and financial fraud being reported. These cybercrimes not only pose a significant threat to the financial stability of the insurance industry but also to the privacy and security of policyholders.

Cyber Frauds in the Insurance Industry

The insurance industry in India has been the target of increasing cyber fraud in recent years. With the growing trend of digital transformation, insurance companies have become increasingly vulnerable to cyber-attacks. Cyber frauds in the insurance industry are carried out by hackers who use various techniques such as phishing, malware, ransomware, and social engineering to gain unauthorised access to policyholders’ personal data and sensitive information.

Kinds of cyber frauds in the insurance industry

It is essential for insurers and policyholders alike to be aware of these kinds of cyber-attacks on insurance companies in today’s digital age. Staying educated about these threats can help prevent them from happening in the future.

Identity theft: One common type of cyber fraud in the insurance industry is identity theft. In this type of fraud, criminals steal personal information such as names, addresses, dates of birth, and social security numbers through phishing emails or fraudulent websites. They then use this information to open fraudulent policies or access existing ones.

Payment fraud: Another type of cyber fraud on the rise is payment fraud. In this type of fraud, hackers intercept electronic payments made by policyholders or agents using fake bank accounts or compromised payment gateways. The money is then siphoned into untraceable accounts, making it difficult for law enforcement agencies to identify and arrest the perpetrators.

Phishing attacks: Fraudsters pose as company officials and send emails to policyholders requesting their account details. Unsuspecting customers fall for the scam and share their sensitive information, which is then used to access their accounts and steal funds.

Hacking: Hackers breach the company’s systems to gain access to policyholder data. They steal personal records, including names, addresses, phone numbers, social security numbers, and financial information, which they later sell on the dark web.

Fake policies scam: Fraudsters create fake policies using stolen identities and collect premiums from innocent customers. The insurer then voids these policies because of the fraudulent activity, leaving those people without valid coverage when they need it most. The victims suffer significant financial losses as a result.

Fake insurance websites: Fraudsters create deceptive websites that imitate well-known insurance companies. Unsuspecting individuals provide their personal details on these sites, leading to identity theft or financial losses.

Prevention of Cyber Frauds in the Insurance Industry: Best Practices to Follow

Prevention is better than cure, which also holds true in the case of cyber fraud in the insurance industry. The industry must take proactive steps to prevent such frauds from occurring in the first place. One of the most effective ways to do so is by investing in cybersecurity measures that are specifically designed for the insurance sector.

Insurance companies must conduct regular employee training programs on cybersecurity best practices. This includes educating employees on how to identify and avoid phishing emails, create strong passwords, and recognise potential cyber threats. Companies should also establish a reporting mechanism for employees to report suspicious activity or incidents immediately.

Having proper access controls in place is also necessary. This means limiting access to sensitive data only to those employees who need it, implementing two-factor authentication, and regularly monitoring user activity logs. Regular audits can also provide an extra layer of protection against potential threats by identifying vulnerabilities that may have been overlooked during routine security checks.
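As an illustration of the two-factor authentication mentioned above, the following is a minimal sketch of time-based one-time passwords (TOTP, as specified in RFC 6238 on top of HOTP from RFC 4226), using only the Python standard library. It is an assumption that an insurer would choose TOTP as its second factor; the function names and the demo secret are hypothetical, and a production system would normally rely on a vetted library and secure secret storage.

```python
import hashlib
import hmac
import struct
import time
from typing import Optional


def hotp(secret: bytes, counter: int, digits: int = 6) -> str:
    """Compute an HMAC-based one-time password (RFC 4226) with HMAC-SHA1."""
    message = struct.pack(">Q", counter)  # 8-byte big-endian counter
    digest = hmac.new(secret, message, hashlib.sha1).digest()
    offset = digest[-1] & 0x0F  # dynamic truncation offset
    code = struct.unpack(">I", digest[offset:offset + 4])[0] & 0x7FFFFFFF
    return str(code % 10 ** digits).zfill(digits)


def totp(secret: bytes, at: Optional[float] = None, step: int = 30, digits: int = 6) -> str:
    """Compute a time-based one-time password (RFC 6238) for the given Unix time."""
    now = time.time() if at is None else at
    return hotp(secret, int(now // step), digits)


def verify_second_factor(secret: bytes, submitted: str, at: Optional[float] = None) -> bool:
    """Compare the submitted code with the expected one in constant time."""
    return hmac.compare_digest(totp(secret, at), submitted)


# Hypothetical shared secret; this value is the one used in the RFC test vectors.
demo_secret = b"12345678901234567890"
print(totp(demo_secret, at=59, digits=8))
```

The code changes every 30 seconds, so a password stolen through phishing is not enough on its own to log in. Real deployments usually also accept the adjacent time step to tolerate clock drift and limit the number of attempts.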

Another essential step is encrypting all data transmitted between different systems and devices. Encryption scrambles data into unreadable codes that can only be deciphered using a decryption key, making it difficult for hackers to intercept or steal information in transit.
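For data in transit, encryption is most often applied through TLS rather than hand-written cryptography. The sketch below, which uses Python's standard `ssl` module, shows one way a client could be configured to verify the server's certificate and refuse protocol versions older than TLS 1.2. The function name and the choice of TLS 1.2 as the floor are assumptions made for illustration, not a requirement stated by any regulator.

```python
import ssl


def build_client_tls_context() -> ssl.SSLContext:
    """Create a client-side TLS context with certificate verification enabled."""
    # create_default_context() enables hostname checking and requires a valid
    # certificate chain from the server.
    context = ssl.create_default_context()
    # Assumed policy for this sketch: reject anything older than TLS 1.2.
    context.minimum_version = ssl.TLSVersion.TLSv1_2
    return context


context = build_client_tls_context()
print(context.check_hostname, context.verify_mode == ssl.CERT_REQUIRED)
```

Such a context can be passed to standard-library clients, for example through the `context` argument of `urllib.request.urlopen`, so that policyholder data sent between systems cannot be read or altered by someone intercepting the connection.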

Legal Framework for Cyber Frauds in the Insurance Industry

The legal framework for cyber fraud in the insurance industry is critical to preventing such crimes. The Insurance Regulatory and Development Authority of India (IRDAI) has issued guidelines for insurers to establish a cybersecurity framework. The guidelines require insurers to conduct regular risk assessments, implement security measures, and ensure compliance with data privacy laws.

The Information Technology Act, 2000 is another significant piece of legislation dealing with cyber fraud in India. The Act defines offences such as unauthorised access to a computer system, hacking, and tampering with data. It also provides for stringent penalties and imprisonment for those found guilty of such offences.

The IRDAI’s guidelines provide insurers with a roadmap to establish robust cybersecurity measures to help prevent cyber fraud in the insurance industry. Stringent implementation of these guidelines will go a long way in safeguarding sensitive customer information from falling into the wrong hands.

Best Practices for Insurers and Policyholders

Insurers:

Implementing Strong Authentication: Encourage the use of multi-factor authentication and secure login processes to safeguard customer accounts and prevent unauthorised access.

Regular Employee Training: Conduct cybersecurity awareness programs to educate employees about the latest threats and preventive measures.

Investing in Advanced Technologies: Use robust cybersecurity tools and systems to promptly detect and mitigate potential cyber threats.

Policyholders:

Vigilance and Awareness: Stay vigilant while sharing personal information online, and verify the authenticity of insurance websites and communication channels.

Regular Updates and Patches: Keep devices and software up to date to minimise vulnerabilities that cybercriminals can exploit.

Secure Online Practices: Use strong and unique passwords, avoid sharing sensitive information on unsecured networks, and exercise caution when clicking on suspicious links or attachments.
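The advice on strong and unique passwords can be made concrete with a short sketch that uses Python's `secrets` module, which draws from a cryptographically secure random source. The function name, the minimum length of 12, and the required character classes are assumptions chosen for this example; a password manager serves the same purpose for most users.

```python
import secrets
import string


def generate_password(length: int = 16) -> str:
    """Generate a random password containing lower, upper, digit and symbol characters."""
    if length < 12:
        raise ValueError("use at least 12 characters")  # assumed policy for this sketch
    alphabet = string.ascii_letters + string.digits + string.punctuation
    while True:
        candidate = "".join(secrets.choice(alphabet) for _ in range(length))
        # Retry until every character class is represented.
        if (any(c.islower() for c in candidate)
                and any(c.isupper() for c in candidate)
                and any(c.isdigit() for c in candidate)
                and any(c in string.punctuation for c in candidate)):
            return candidate


print(generate_password())
```

Generating a separate password for each insurance portal means that a breach of one site does not expose a policyholder's other accounts.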

Conclusion

As the Indian insurance industry embraces digitisation, the risk of cyber scams and data breaches becomes a significant concern. Insurers and policyholders must collaborate to ensure robust cybersecurity measures are in place to protect sensitive information and financial interests.

It is essential for insurance companies to invest in robust cybersecurity measures that can detect and prevent fraud attempts. Additionally, educating employees on the dangers of cyber fraud and implementing strict compliance measures can go a long way in mitigating risks. With these efforts, the insurance industry can continue to provide trustworthy and reliable services to its customers while protecting against cyber threats. As technology continues to evolve, it is imperative that the insurance industry adapts accordingly and remains vigilant against emerging threats.

Introduction

The spread of information in the quickly changing digital age presents both advantages and difficulties. The phrases "misinformation" and "disinformation" are commonly used in conversations concerning information inaccuracy. It's important to counter such prevalent threats, especially in light of how they affect countries like India. It becomes essential to investigate the practical ramifications of misinformation/disinformation and other prevalent digital threats. Like many other nations, India has had to deal with the fallout from fraudulent internet actions in 2023, which has highlighted the critical necessity for strong cybersecurity safeguards.

The Emergence of AI Chatbots: OpenAI's ChatGPT and Google's Bard

The launch of OpenAI's ChatGPT in November 2022 was a major turning point in the AI space, inspiring the creation of a rival chatbot, Google's Bard (launched in 2023). These chatbots represent a significant breakthrough in artificial intelligence (AI): driven by Large Language Models (LLMs), they produce replies by combining information learned from huge datasets. Similarly, AI image generators that make use of diffusion models and existing datasets attracted a lot of interest in 2023.

Deepfake Proliferation in 2023

The proliferation of deepfake technology in 2023 contributed to misinformation and disinformation in India, affecting politicians, corporate leaders, and celebrities. Some of these fakes were used for political purposes, while others were made to create pornographic and entertainment content. Social turmoil, political instability, and financial ramifications were among the outcomes. The lack of technical countermeasures made detection and prevention harder, allowing synthetic content to spread widely.

Challenges of Synthetic Media

Problems with synthetic media, especially AI-generated audio and video content, proliferated widely in India during 2023. These included political manipulation, identity theft, disinformation, legal and ethical issues, security risks, difficulties with identification, and threats to media integrity. The consequences ranged from financial deception and the dissemination of false information to swaying elections and intensifying intercultural conflicts.

Biometric Fraud Surge in 2023

Biometric fraud in India, especially through the Aadhaar-enabled Payment System (AePS), became a major threat in 2023. As cybercriminals exploited weaknesses in the AePS, many depositors had their hard-earned money stolen through fraudulent activity. This demonstrates the real effects of biometric fraud on those whose Aadhaar-linked data was manipulated and accessed without authorization. Beyond endangering individual financial stability, the use of biometric data in financial systems raises further questions about the security and integrity of the nation's digital payment systems.

Government strategies to counter digital threats

- The Indian Union Government has sent a warning to the country's largest social media platforms, highlighting the importance of exercising caution when spotting and responding to deepfake and false material. The advice directs intermediaries to delete reported information within 36 hours, disable access in compliance with IT Rules 2021, and act quickly against content that violates laws and regulations. The government's dedication to ensuring the safety of digital citizens was underscored by Union Minister Rajeev Chandrasekhar, who also stressed the gravity of deepfake crimes, which disproportionately impact women.

- The government has recently issued an advisory to social media intermediaries to identify misinformation and deepfakes and to ensure compliance with the Information Technology (IT) Rules 2021. Online platforms are legally obliged to prevent the spread of misinformation and to exercise due diligence, or make reasonable efforts, to identify misinformation and deepfakes.

- The Information Technology (Intermediary Guidelines and Digital Media Ethics Code) Rules, 2021 were amended in 2023. Under the amendment, the online gaming industry is required to abide by a set of rules. These include not hosting harmful or unverified online games, not promoting games without approval from the self-regulatory body (SRB), labelling real-money games with a verification mark, educating users about deposit and winning policies, setting up a quick and effective grievance redressal process, requesting user information, and forbidding the offering of credit or financing for real-money gaming. These steps are intended to guarantee ethical and open behaviour throughout the online gaming industry.

- With an emphasis on Personal Data Protection, the government enacted the Digital Personal Data Protection Act, 2023. It is a brand-new framework for digital personal data protection which aims to protect the individual's digital personal data.

- The "Cyber Swachhta Kendra" (Botnet Cleaning and Malware Analysis Centre) is a part of the Government of India's Digital India initiative under the Ministry of Electronics and Information Technology (MeitY) to create a secure cyberspace. It uses malware research and botnet identification to tackle cybersecurity threats. It works with antivirus software providers and internet service providers to establish a safer digital environment.

Strategies by Social Media Platforms

Various social media platforms, such as YouTube and Meta, have reformed their policies on misinformation and disinformation. This shows their comprehensive strategy for combating deepfakes and misinformation or disinformation on their networks. YouTube prioritizes removing content that violates its policies, reducing recommendations of questionable information, promoting reliable news sources, and supporting reputable creators. YouTube relies on clear facts and expert consensus to counter misrepresentation. To quickly remove content that violates its policies, the enforcement process combines human content reviewers with machine learning. Policies are designed in partnership with external experts and creators. To improve the overall quality of information available to users, the platform also lets users flag material, places a strong emphasis on media literacy, and prioritizes providing context.

Meta’s policies address different misinformation categories, aiming for a balance between expression, safety, and authenticity. Content directly contributing to imminent harm or political interference is removed, with partnerships with experts for assessment. To counter misinformation, the efforts include fact-checking partnerships, directing users to authoritative sources, and promoting media literacy.

Promoting ‘Tech for Good’

By 2024, the vision for "Tech for Good" will have expanded to include programs that enable people to understand the ever-complex digital world and promote a more secure and reliable online community. The emphasis is on using technology to strengthen cybersecurity defenses and combat dishonest practices. This entails encouraging digital literacy and providing users with the knowledge and skills to recognize and stop false information, online dangers, and cybercrimes. Furthermore, the focus is on promoting and exposing effective strategies for preventing cybercrime through cooperation between citizens, government agencies, and technology businesses. The intention is to employ technology's good aspects to build a digital environment that values security, honesty, and moral behaviour while also promoting innovation and connectedness.

Conclusion

In the evolving digital landscape, difficulties are presented by false information powered by artificial intelligence and the misuse of advanced technology by bad actors. Notably, there are ongoing collaborative efforts and progress in creating a secure digital environment. Governments, social media corporations, civil societies and tech companies have shown a united commitment to tackling the intricacies of the digital world in 2024 through their own projects. It is evident that everyone has a shared obligation to establish a safe online environment with the adoption of ethical norms, protective laws, and cybersecurity measures. The "Tech for Good" goal for 2024, which emphasizes digital literacy, collaboration, and the ethical use of technology, seems promising. The cooperative efforts of people, governments, civil societies and tech firms will play a crucial role as we continue to improve our policies, practices, and technical solutions.
