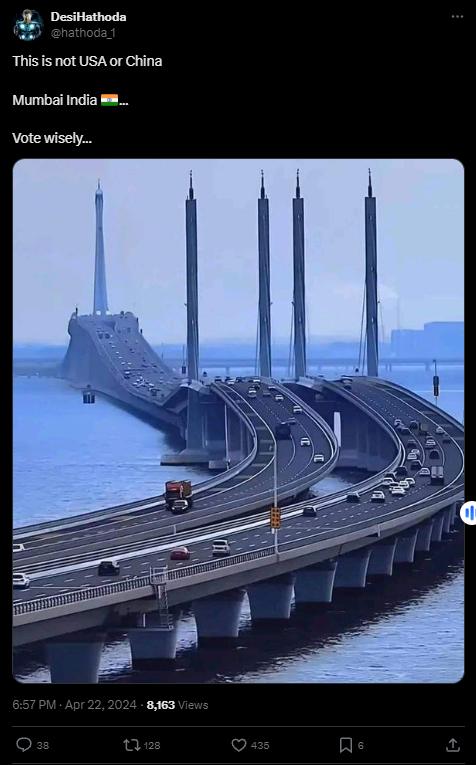

#FactCheck - Viral Image of a Bridge Claimed to Be in Mumbai Is Actually in Qingdao, China

Executive Summary:

A photograph circulating on social media, allegedly showing a bridge in Mumbai, India, was found to be falsely attributed. Through reverse image searches, examination of similar videos, and comparison with reputable news sources and Google Images, we found that the bridge in the viral photo is the Qingdao Jiaozhou Bay Bridge in Qingdao, China. Multiple pieces of evidence, including matching architectural features and corroborating videos, show that the bridge is not in Mumbai. No credible reports or sources have been found to suggest that a similar bridge exists in Mumbai.

Claims:

Social media users claim that a viral image shows a bridge in Mumbai.

Fact Check:

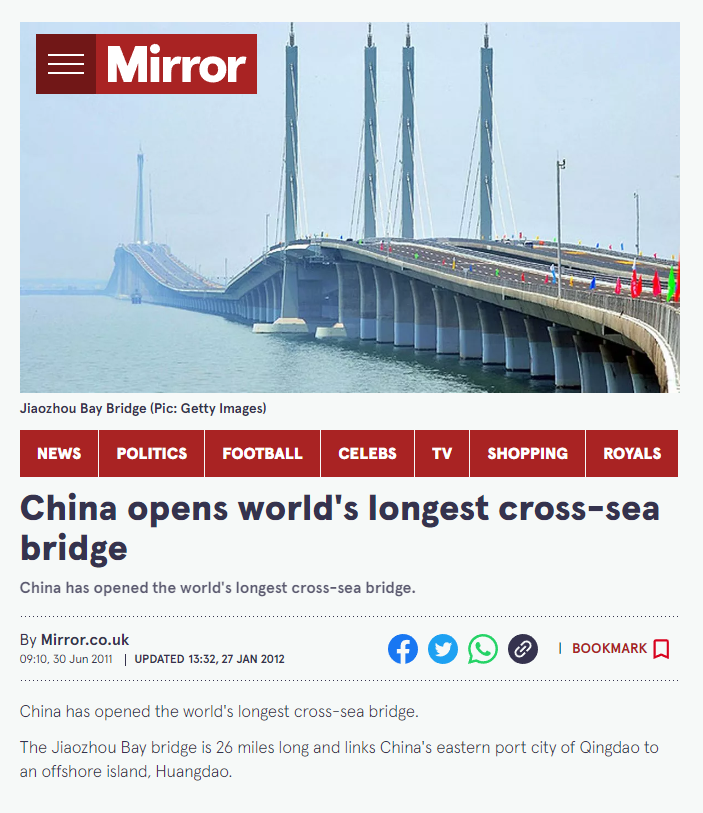

After receiving the image, we ran a reverse image search to find any leads or related information. We found an image published by the news outlet Mirror News. Although this was not conclusive on its own, it shows the same upper pillars and the same white foundation pillars seen in the viral image.

The bridge is the Jiaozhou Bay Bridge in China, which connects the eastern port city of Qingdao to the offshore island of Huangdao.

Taking this as a lead, we searched for the bridge to find other related images or videos. We found a YouTube video uploaded by a channel named xuxiaopang that shows similar structures, such as the pillars and the road design.

The reverse image search also turned up another news article about the same bridge in China, which closely resembles the one in the viral image.

Given the lack of evidence or credible sources reporting the opening of a similar bridge in Mumbai, and after a thorough investigation, we concluded that the claim made with the viral image is misleading and false. The bridge is located in China, not in Mumbai.

Conclusion:

In conclusion, our fact-check found that the viral image of a bridge allegedly in Mumbai, India, is falsely attributed. The bridge in the picture is the Qingdao Jiaozhou Bay Bridge, located in Qingdao, China. Several sources, including reverse image searches, videos, and reliable news outlets, confirm this. There is no evidence of a bridge like it in Mumbai. The claim is therefore false: the bridge is in China, not in Mumbai.

- Claim: The bridge seen in the popular social media posts is in Mumbai.

- Claimed on: X (formerly Twitter), Facebook

- Fact Check: Fake & Misleading

Related Blogs

Introduction

CERT-In (Indian Computer Emergency Response Team) has recently issued the “Guidelines on Information Security Practices” for Government Entities for a Safe & Trusted Internet. The guidelines come at a critical time, as the draft Digital India Bill, which aims to revamp the legal framework governing Indian cyberspace, is about to be released. They lay down the policy framework and the requirements for critical infrastructure for all government organisations and institutions, with the aim of improving the overall cyber security of the nation.

What is CERT-In?

A Computer Emergency Response Team (CERT) is a group of information security experts responsible for protecting against, detecting and responding to an organisation’s cybersecurity incidents. A CERT may focus on resolving data breaches and denial-of-service attacks and on providing alerts and incident-handling guidelines. CERTs also run ongoing public awareness campaigns and conduct research aimed at improving security systems. The Ministry of Electronics and Information Technology (MeitY) oversees CERT-In, which regularly releases alerts to help individuals and companies safeguard their data, information, and ICT (Information and Communications Technology) infrastructure.

The Indian Computer Emergency Response Team (CERT-In) has been established and designated as the national agency for cyber incidents and cyber security incidents under Section 70B of the Information Technology (IT) Act, 2000.

CERT-In requests information from service providers, intermediaries, data centres, and body corporates to coordinate response activities and emergency measures for cyber security incidents. It serves as the focal point for incident reporting and provides round-the-clock security services. It handles cyber incidents that are tracked and reported while continuously analysing cyber risks, and it works to strengthen the security of the Indian Internet domain.

Background

India is fast becoming one of the world’s most connected nations, with over 80 crore Indians (Digital Nagriks) currently connected to and using the Internet and cyberspace, a number that is expected to reach 120 crore in the coming few years. The Digital Nagriks of the country use the Internet for business, education, finance and various other applications and services, including Digital Government services. While the Internet drives growth and innovation, it has also brought a rise in cybercrime, user harm and other challenges to online safety. The Government’s policies aim to ensure an Open, Safe & Trusted and Accountable Internet for its users, and the Government is fully aware of the growing cyber security threats and attacks.

It is the Government of India’s objective to ensure that Digital Nagriks experience a Safe & Trusted Internet. With Information & Communication Technologies (ICT) now used in almost every facet of service delivery and operations, continuously evolving cyber threats have become a concern for the Government. Cyber-attacks can take the form of malware, ransomware, phishing, data breaches, etc., and adversely affect an organisation’s information and systems. Cyber threats that lead to cyber-attacks or incidents can compromise the confidentiality, integrity, and availability of an organisation’s information and systems, and can have a far-reaching impact on essential services and national interests. To protect against cyber threats, it is important for government entities to implement strong cybersecurity measures and follow best practices. As the ICT infrastructure of Government entities is a preferred target of malicious actors, the responsibility for implementing good cyber security practices to protect computers, servers, applications, electronic systems, networks, and data from digital attacks also rests with the owner of the ICT assets, i.e. the Government entity.

What are the new Guidelines about?

The guidelines apply to all Ministries, Departments, Secretariats, and Offices listed in the First Schedule of the Government of India (Allocation of Business) Rules, 1961, along with their attached and subordinate offices. They also cover all government organisations, public sector enterprises, and other government entities under their administrative control.

“The government has launched a number of steps to guarantee an accessible, trustworthy, and accountable digital environment. With a focus on capabilities, systems, human resources, and awareness, we are extending and speeding our work in the area of cyber security,” said Rajeev Chandrasekhar, Minister of State for Electronics, Information Technology, Skill Development, and Entrepreneurship.

The Recommendations

- The guidelines cover various security domains, including network security, identity and access management, application security, data security, third-party outsourcing, hardening procedures, security monitoring, incident management, and security audits.

- For instance, on desktop, laptop, and printer security in the workplace, the guidelines advise using only a Standard User (non-administrator) account for regular work on computers and laptops. Users may be granted administrative access only with the CISO’s consent.

- Use long passwords of at least eight characters that combine uppercase letters, lowercase letters, numerals, and special characters. Never save usernames or passwords in the web browser, and likewise never save any payment-related data there.

- The guidelines also incorporate guidance created by the National Informatics Centre for Chief Information Security Officers (CISOs) and staff members of Central Government Ministries/Departments to improve cyber security and cyber hygiene, in addition to industry best practices.
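The password rule in the guidelines can be expressed as a simple check. The sketch below is a minimal Python illustration and is not part of the CERT-In guidelines: the function name `meets_password_rule` is hypothetical, and it assumes that a "special character" means any ASCII punctuation character (Python's `string.punctuation`).

```python
import string

MIN_LENGTH = 8  # minimum length stated in the guideline


def meets_password_rule(password: str) -> bool:
    """Return True if the password has at least MIN_LENGTH characters and
    mixes uppercase letters, lowercase letters, digits and special characters.

    Assumption (not from the guidelines): a 'special character' is any
    ASCII punctuation character; other policies may define it differently.
    """
    if len(password) < MIN_LENGTH:
        return False
    has_upper = any(c in string.ascii_uppercase for c in password)
    has_lower = any(c in string.ascii_lowercase for c in password)
    has_digit = any(c in string.digits for c in password)
    has_special = any(c in string.punctuation for c in password)
    # Every character class must be present for the password to qualify.
    return has_upper and has_lower and has_digit and has_special


if __name__ == "__main__":
    for candidate in ["password", "Passw0rd", "Passw0rd!", "P@s5"]:
        print(candidate, meets_password_rule(candidate))
```

A length and character-mix check covers only part of the guidance: the advice not to store credentials or payment data in the browser is a user practice that a check like this cannot enforce.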

Conclusion

The government has recently been proactive in tackling the menace of cybercrime and cyber threats in Indian cyberspace, and we have seen a series of new bills and policies introduced by the Ministry of Electronics and Information Technology and other government organisations such as CERT-In and TRAI. These policies aim to keep pace with current technologies. The threats from emerging technologies like Web 3.0 cannot be ignored, and active netizen participation and synergy between government and corporates will lead to a better and improved cyber ecosystem in India.

Introduction

The Indian Cybercrime Coordination Centre (I4C) was established by the Ministry of Home Affairs (MHA) to provide a framework for law enforcement agencies (LEAs) to deal with cybercrime in a coordinated and comprehensive manner. The MHA approved the scheme for establishing I4C in October 2018. I4C actively pursues initiatives to combat emerging threats in cyberspace and has become a strong pillar of India’s cyber security and cybercrime prevention. The ‘National Cyber Crime Reporting Portal’, equipped with the 24x7 helpline number 1930, is one of I4C’s key components.

On 10 September 2024, I4C celebrated its first Foundation Day at Vigyan Bhawan, New Delhi. The event marked a major milestone in India’s efforts to combat cybercrime and enhance its cybersecurity infrastructure. Union Home Minister and Minister of Cooperation Shri Amit Shah launched key initiatives aimed at strengthening the country’s cybersecurity landscape.

Launch of Key Initiatives to Strengthen Cybersecurity

- Cyber Fraud Mitigation Centre (CFMC): Established as part of Prime Minister Shri Narendra Modi’s vision, the Cyber Fraud Mitigation Centre (CFMC) brings together banks, financial institutions, telecom companies, Internet Service Providers, and law enforcement agencies on a single platform to tackle online financial crimes efficiently. This integrated approach is expected to streamline operations and reduce the time needed to track and neutralise cyber fraud.

- Cyber Commandos: Under the Cyber Commandos Program, a specialised wing of trained Cyber Commandos will be established in states, Union Territories, and Central Police Organisations. These commandos will work to secure the nation’s digital space and will form the first line of defence in safeguarding India from growing cyber threats.

- Samanvay Platform: The Samanvay platform is a web-based Joint Cybercrime Investigation Facility System, introduced as a one-stop data repository for cybercrime. It facilitates cybercrime mapping, data analytics, and cooperation among law enforcement agencies across the country. Mr. Shah described the platform as a crucial step towards data sharing and collaboration, and called for a shift from the “need to know” principle to a “duty to share” mindset in dealing with cyber threats. As India’s first shared data repository for cybercrime, Samanvay is expected to significantly enhance the country’s cybercrime response.

- Suspect Registry: The Suspect Registry Portal is a national-level platform designed to track cybercriminals. The registry will be connected to the National Cybercrime Reporting Portal (NCRP) and aims to help banks, financial intermediaries, and law enforcement agencies strengthen fraud risk management. The initiative is expected to enable real-time tracking of cyber suspects, helping to prevent repeat offences and improve fraud detection.

Rising Digitalization: Prioritizing Cybersecurity

The number of internet users in India has grown from 25 crore in 2014 to 95 crore in 2024, accompanied by a 78-fold increase in data consumption. This growth has brought a corresponding rise in cybersecurity challenges. Pointing to the rise of digital transactions through Jan Dhan accounts, RuPay debit cards, and UPI, Shri Shah underscored the growing threat of digital fraud. He emphasised the need to protect personal data, prevent online harassment, and counter misinformation, fake news, and child abuse in the digital space.

In his address, the Home Minister also referred to the three new criminal laws, the Bharatiya Nyaya Sanhita (BNS), the Bharatiya Nagrik Suraksha Sanhita (BNSS), and the Bharatiya Sakshya Adhiniyam (BSA), which aim to strengthen India’s legal framework for cybercrime prevention. These laws incorporate tech-driven solutions that will ensure investigations are conducted scientifically and effectively.

Mr. Shah emphasised the need to popularise the 1930 Cyber Crime Helpline. He also noted that I4C has issued over 600 advisories, blocked numerous websites and social media pages operated by cybercriminals, and established a National Cyber Forensic Laboratory in Delhi. Over 1,100 officers have already received cyber forensics training under the I4C umbrella.

The formation of Joint Cyber Coordination Teams for cybercrime hotspots such as Mewat, Jamtara, Ahmedabad, Hyderabad, Chandigarh, Visakhapatnam, and Guwahati was also highlighted as a coordinated response to regional cybercrime challenges.

Conclusion

With the launch of initiatives like the Cyber Fraud Mitigation Centre, the Samanvay platform, and the Cyber Commandos Program, I4C is positioned to play a crucial role in combating cybercrime. I4C is moving forward with a clear vision of a secure digital future and a safeguarded digital ecosystem for India.

References:

- https://pib.gov.in/PressReleaseIframePage.aspx?PRID=2053438

The recent Promotion and Regulation of Online Gaming Act, 2025, which came into force in August, has been one of the most widely anticipated regulations in the digital entertainment industry. Alongside provisions for promoting esports and licensing online gaming, the legislation notably introduces a blanket ban on real-money gaming (RMG). The stated rationale was to reduce its addictive effects, protect minors, and limit the circulation of black money. In practice, however, the Act has raised concerns about the legislative process, regulatory redundancy, and unintended consequences that could shift users and revenue to offshore operators.

From Debate to Prohibition: How the Act was Passed

The Promotion and Regulation of Online Gaming Act was passed as a central law, bringing the earlier fragmented state laws on online betting and gambling under an overarching framework. Proponents argue that a unified national framework was needed to deal with the scale of online betting, given its detrimental impact on young users. The Act moves directly to criminalisation, departing from the self-regulation and partial restrictions that characterised the previous decade of incremental regulatory experiments. Industry stakeholders believe that this kind of sudden, blanket action creates uncertainty and erodes confidence in the system in the long run. Critics have also pointed out that the Bill was passed without adequate Parliamentary deliberation, raising questions about whether procedural safeguards were upheld.

Prohibition of Online RMG

In India, a distinction has long been drawn between games of skill and games of chance. Games of chance, such as lotteries or casinos, are strictly prohibited under state laws, whereas games of skill, such as rummy or fantasy sports, have generally been allowed after the courts recognised them as skill-based. The Online Gaming Act of 2025 removes this distinction for online games, banning all real-money games involving cash transactions, regardless of whether they are based on skill or chance. The Act also criminalises advertising, facilitating, and hosting such platforms, thereby penalising offshore operators that target Indian customers and exposing their payment gateways, app stores, and advertisers within its jurisdiction to penalties.

The Problem of Overlap

One potential issue with the Act is its overlap with existing laws. The IT Rules, as amended in 2023, require online gaming intermediaries to appoint compliance officers, submit monthly reports, and carry out due diligence. The new Act introduces a three-level classification of games (e-sports, online social games, and online money games), while the advisories of the Central Consumer Protection Authority (CCPA) under the Consumer Protection Act treat online betting as an unfair trade practice.

This multiplicity of regulations creates a maze of overlapping jurisdiction among different Ministries and state governments. Policy experts caution that such overlap can create enforcement challenges, penalise players who act within the law, and leave offshore bad actors undetected.

Unintended Consequences: Driving Users Offshore

Outright prohibition rarely eliminates demand; it merely displaces it. Offshore sites have taken advantage of the situation as Indian operators such as Dream11 shut down their money games after the ban. Foreign betting companies that are not registered in India have reportedly been advertising aggressively, and most of them run backend infrastructure that the Act cannot regulate (Storyboard18).

This diversion of users to unregulated markets carries two main risks. First, Indian players lose the consumer protections offered by local regulation, and their data may be sent to questionable foreign entities. Second, the government loses control over money flows, which may move through informal channels, cryptocurrencies, or other opaque systems. Industry analysts warn that such developments may worsen the black-money problem rather than solve it (IGamingBusiness).

Advertising, Age Gating, and Digital Rights

The Act has also strengthened advertising regulations, in line with advisories issued by the Advertising Standards Council of India, which prohibit targeting minors. However, critics argue that enforcement remains inadequate and that children can access unregulated overseas apps with relative ease. Without complementary digital literacy programmes and strong parental controls, these restrictions may prove superficial rather than effective.

Privacy advocates also warn that frequent prompts, vague messages, or invasive surveillance can weaken users’ digital rights instead of strengthening them. In other jurisdictions, overregulation has also been found to cause banner blindness, where users dismiss warnings without first understanding them.

Enforcement Challenges

The Act places substantial responsibilities on many stakeholders, including the Ministry of Information and Broadcasting (MIB) and the Reserve Bank of India (RBI). Platforms like Google Play and the Apple App Store are expected to check apps against government-approved lists of compliant gaming apps and remove non-compliant or banned ones, as directed by the MIB and the RBI. Although this pressure may encourage intermediaries to cooperate, it also carries a risk of overreach if applied unevenly or for political ends.

According to experts, enforcement should itself be underpinned by technology. Artificial intelligence can be used to identify illegal advertisements, track illegal gaming by children, and trace payment streams. At the same time, regulators should issue definitive lists of compliant and non-compliant applications to guide consumers and intermediaries alike. Without such practical provisions, enforcement risks remaining patchy.

Online Gaming Rules

On 1 October 2025, the government issued draft Online Gaming Rules under the Promotion and Regulation of Online Gaming Act. The draft rules set out compliance frameworks, define the classification of permitted gaming activities, and prescribe grievance-redressal mechanisms intended to protect players and promote procedural transparency. However, the draft does not revisit or soften the existing blanket prohibition on RMG, so questions about the effectiveness of enforcement and regulatory clarity remain open (Times of India, 2025).

Protecting Consumers Without Stifling Innovation

The ban highlights a larger tension: how to protect vulnerable users without stifling an industry that has contributed to innovation, jobs, and tax revenue. Online gaming has added significantly to GST collections, and the sudden shake-up raises fiscal concerns (Reuters).

Several legal challenges to the Act have already been filed, questioning its constitutionality, particularly whether its restrictions are proportionate to the right to carry on trade. The outcome of these cases will shape the future trajectory of India’s digital economy (Reuters).

Way Forward

Instead of outright prohibition, experts suggest a more balanced approach that combines regulation with consumer protection. Key measures could include:

- A clear distinction between games of skill and games of chance, with proportionate regulation for each.

- Age verification and digital literacy campaigns to protect minors.

- Stronger compliance requirements for advertising and payments, backed by enforceable penalties for non-compliance.

- Coordinated oversight among ministries to prevent duplication and regulatory conflict.

- Leveraging AI and fintech to track illegal financial activity, such as black-money flows, while fostering innovation.

Conclusion

The Online Gaming Act 2025 addresses social issues, such as addiction, financial risk, and child safety, that require governance interventions. However, its chosen path of total prohibition is more likely to create new problems than to solve existing ones, because it pushes consumers to offshore sites, undermines consumer rights, and slows innovation.

For India, the real challenge is not whether to prohibit online money gaming but how to create a balanced, transparent, and enforceable framework that protects users while fostering a responsible gaming ecosystem. With better coordination, judicious use of technology, and balanced protections, India can reduce the harms of online betting without pushing the industry into the shadows.

References:

- India's Dream11, top gaming apps halt money-based games after ban

- India online gambling ban could drive punters to black market

- Offshore betting firms with backend ops in India not covered by online gaming law

- The Great Gamble: India’s Online Gaming Ban, The GST Battle, And What Lies Ahead

- Game Over for Online Money Games? An Analysis of the Online Gaming Act 2025

- Government gambles heavily on prohibiting online money gaming

- Online gaming regulation: New rules to take effect from October 1; government stresses consultative approach with industry