#FactCheck: Misleading Clip of Nepal Crash Shared as Air India’s AI-171 Ahmedabad Accident

Executive Summary:

A viral video circulating on social media platforms claims to show the final moments inside the cabin of an Air India flight just before it crashed near Ahmedabad on June 12, 2025. The claim is false. Our research found that the footage originates from the Yeti Airlines Flight 691 crash that occurred in Pokhara, Nepal, on January 15, 2023. The full details follow in this report.

Claim:

Videos circulating on social media claim to show the final moments inside Air India flight AI‑171 before it crashed near Ahmedabad on June 12, 2025. The footage appears to have been recorded by a passenger during the flight and is being shared as real-time visuals from the recent tragedy. Many users have taken the clip to be genuine and linked it directly to the Air India incident.

Fact Check:

To verify the viral video said to show the final moments of Air India's AI-171, which crashed near Ahmedabad on 12 June 2025, we ran a reverse image search and keyframe analysis. This showed that the footage dates back to January 2023 and comes from Yeti Airlines Flight 691, which crashed in Pokhara, Nepal. The visuals in the viral video, including cabin and passenger details, match the original livestream made by a passenger aboard the Nepal flight, confirming that the video is being reused out of context.

Moreover, established news organisations, including the New York Post and NDTV, have published reports confirming that the video originated from the 2023 Nepal plane crash and has no relation to the recent Air India incident. The Press Information Bureau (PIB) also released a clarification dismissing the video as disinformation. Earlier news reports, visual evidence, and reverse search verification all agree that the viral video is falsely attributed to the AI-171 tragedy.

Conclusion:

The viral footage does not show the AI-171 crash near Ahmedabad on 12 June 2025. It is an unrelated, previously recorded livestream from the January 2023 Yeti Airlines crash in Pokhara, Nepal, falsely repurposed as breaking news. It is essential to rely on verified and credible news agencies and to refer to official investigation reports when discussing such sensitive events.

- Claim: A dramatic clip of passengers inside a crashing plane is being falsely linked to the recent Air India tragedy in Ahmedabad.

- Claimed On: Social Media

- Fact Check: False and Misleading

Related Blogs

Executive Summary

A news video is being widely circulated on social media with the claim that Bihar Chief Minister Nitish Kumar has resigned from his post in protest against the ongoing UGC-related controversy. Several users are sharing the clip while alleging that Kumar stepped down after opposing the issue. However, CyberPeace research has found the claim to be false. The research revealed that the video being shared is from 2022 and has no connection whatsoever with the UGC or any recent protests related to it. An old video has been misleadingly linked to a current issue to spread misinformation on social media.

Claim:

An Instagram user shared a video on January 26 claiming that Bihar Chief Minister Nitish Kumar had resigned. The post further alleged that the news was first aired on Republic channel and that Kumar had submitted his resignation to then-Governor Phagu Chauhan. The link to the post, its archived version, and screenshots can be seen below. (Links as provided)

Fact Check:

To verify the claim, CyberPeace first conducted a keyword-based search on Google. No credible or established media organisation reported any such resignation, clearly indicating that the viral claim lacked authenticity.

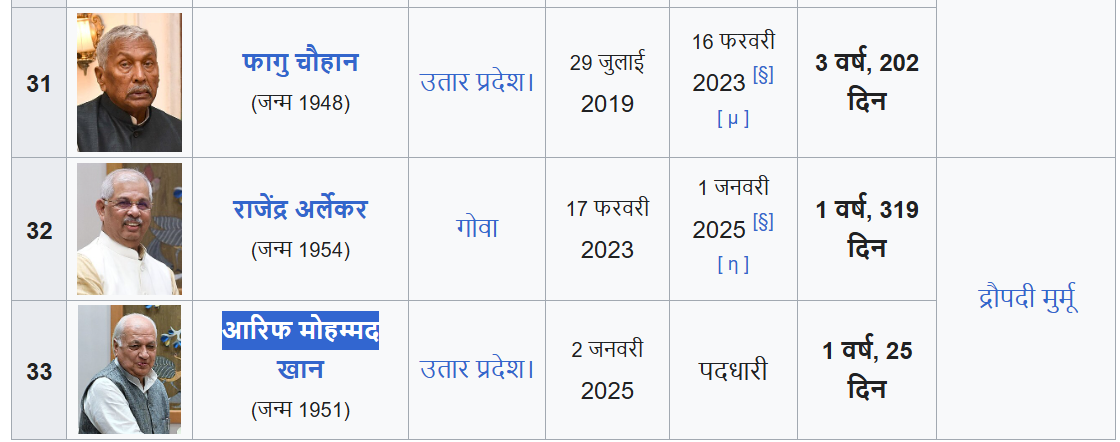

Further, the voiceover in the viral video states that Nitish Kumar handed over his resignation to Governor Phagu Chauhan. However, Phagu Chauhan ceased to be the Governor of Bihar in February 2023. The current Governor of Bihar is Arif Mohammad Khan, making the claim in the video factually incorrect and misleading.

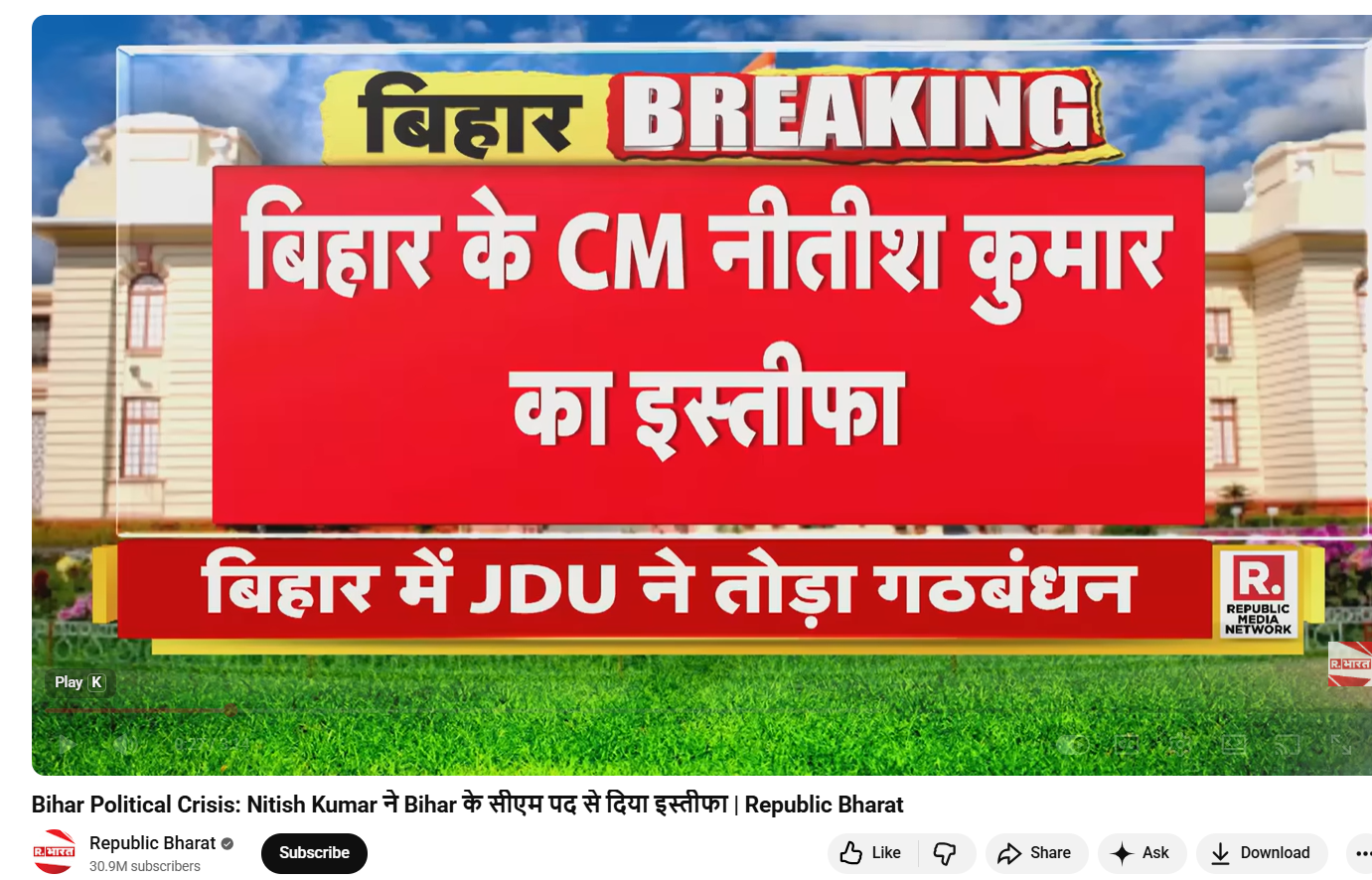

In the next step, keyframes from the viral video were extracted and reverse-searched using Google Lens. This led to the official YouTube channel of Republic Bharat, where the full version of the same video was found. The video was uploaded on August 9, 2022. This clearly establishes that the clip circulating on social media is not recent and is being shared out of context.
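The keyframe comparison described above can be illustrated with a small sketch. It is not how Google Lens works internally; it only shows one common idea behind matching a frame from a viral clip against a frame from a candidate source video, namely an average hash compared by Hamming distance. The `Frame` type, the function names, the tiny 2x2 sample frames, and the `max_distance` threshold are all hypothetical and chosen for illustration. A real workflow would first extract frames from the video and downscale them (for example to an 8x8 grayscale grid).

```python
from typing import List

# Hypothetical stand-in for a downscaled keyframe: rows of grayscale values (0-255).
Frame = List[List[int]]


def average_hash(frame: Frame) -> int:
    """Build a bit fingerprint: 1 where a pixel is brighter than the frame's mean."""
    pixels = [value for row in frame for value in row]
    mean = sum(pixels) / len(pixels)
    bits = 0
    for value in pixels:
        bits = (bits << 1) | (1 if value > mean else 0)
    return bits


def hamming_distance(a: int, b: int) -> int:
    """Count the bit positions in which two fingerprints differ."""
    return bin(a ^ b).count("1")


def likely_same_frame(a: Frame, b: Frame, max_distance: int = 1) -> bool:
    """Treat two equally sized frames as a match if their fingerprints nearly agree.

    max_distance is an assumed tolerance, not a standard value.
    """
    return hamming_distance(average_hash(a), average_hash(b)) <= max_distance


# Illustrative data only: a frame, a slightly re-encoded copy, and an unrelated frame.
viral_frame = [[10, 200], [220, 30]]
archived_frame = [[12, 198], [225, 28]]
unrelated_frame = [[200, 10], [30, 220]]

print(likely_same_frame(viral_frame, archived_frame))
print(likely_same_frame(viral_frame, unrelated_frame))
```

A match from a sketch like this would only be a lead. The upload date and publisher of the matched source still need to be confirmed by hand, as was done here with the Republic Bharat upload.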

Conclusion

CyberPeace’s research confirms that the viral video claiming Nitish Kumar resigned over the UGC issue is false. The video dates back to 2022 and has no link to the current UGC controversy. An old political video has been deliberately circulated with a misleading narrative to create confusion on social media.

Transforming Misguided Knowledge into Social Strength

यत्र योगेश्वरः कृष्णो यत्र पार्थो धनुर्धरः । तत्र श्रीर्विजयो भूतिर्ध्रुवा नीतिर्मतिर्मम ॥ (Bhagavad Gita) translates as “Where there is divine guidance and righteous effort, there will always be prosperity, victory, and morality.” In the context of the idea of rehabilitation, this verse teaches us that if offenders receive proper guidance, their skills can be redirected. Instead of causing harm, the same abilities can be transformed into tools for protection and social good. Cyber offenders who misuse their skills can, through structured guidance, be redirected toward constructive purposes like cyber defence, digital literacy, and security innovation. This interpretation emphasises not discarding the “spoiled” but reforming and reintegrating them into society.

Introduction

Words and places often carry positive or negative associations based on their history, the stories told about them, and the activities linked to them. For example, the word “hacker” has a negative connotation, as does the place “Jamtara”, which is identified with its history as a cybercrime hotspot. People often forget that many individuals use their hacking skills to serve and protect their nation; they are known as “white hat hackers” or ethical hackers. Likewise, places like Jamtara have a substantial number of talented individuals who have lost their way and are often victims of their circumstances. This presents the authorities with a fundamental challenge: destigmatising cybercriminals and acting on their rehabilitation. The idea is to shift from punitive responses to rehabilitative and preventive approaches, especially in regions like Jamtara.

The Deeper Problem: Systemic Gaps and Social Context

Jamtara is not an isolated case. Regions such as Mewat, Bharatpur, Deoghar, and Mathura are facing a similar crisis, and lives are being uprooted because youth are drawn into cybercrime rings, often to escape unemployment and poverty, or simply in the hope of a better life. In one such heart-wrenching story, 24-year-old Shakil from Nuh, Haryana, was arrested for committing various cybercrimes, including sextortion and financial scams. While his culpability is not in question here, his background reflects a deeper issue: he committed these crimes to pay his diabetic father’s mounting bills and to see his sister, Shabana, married. This is the story of many individuals in rural areas who are forced into committing these crimes, if not by a person, then by their circumstances. According to a 2024 news report, Meo leaders and social organisations in the Mewat region launched an intervention aimed at weaning the youth away from cybercrimes.

Not only poverty, but lack of education, social awareness, and digital literacy have acted as active agents for pushing the youth of India away from mainstream growth and towards the dark trenches of the cybercrime world. The local authorities have made active efforts to solve this problem; for instance, to dispel Jamtara’s unfavourable reputation for cybercrime and set the city firmly on the path to change, community libraries have been established in all 118 panchayats spread across six blocks of the district by IAS officer and DM Faiz Aq Ahmed Mumtaz.

The menace of cybercrime is not limited to rural areas. Various reports surfaced during and after COVID of young children in urban areas becoming victims of cybercrimes such as cyberbullying and stalking, and the perpetrators were often from the same age group, adding to the dilemma. Various agencies have taken note of the issue, and there is a need to deal with both victims and the accused in a sensitised manner. Recently, ex-CJI DY Chandrachud called for international collaboration to combat juvenile cybercrimes, as many are ensnared and coerced into these criminal gangs, and swift resolution is the key to ensuring justice and rehabilitation.

CyberPeace Policy Outlook

Cybercrime is often a product of skill without purpose. The youth who are pushed into these crimes either have an incomplete idea of the gravity of their actions or have no other resort. The legal system and the agencies will have to look beyond the nature of the crimes and adopt a reformative approach so that these people can make their way back into society and harness their skills ethically. A good alternative would be to organise Cyber Bootcamps for Reform, i.e., structured training with placement support, and to explain how ethical hacking and cybersecurity careers can be attractive alternatives. One way to make the process effective is to share real-world stories of reformed hackers. Many people from small villages and districts have written success stories of reform after participating in digital training programmes. The crime they commit doesn’t have to be the last thing they are able to do in life; it doesn’t have to be the ending. The digital programmes should be conducted in a vernacular that the youth are well-versed in, so there are no language barriers. The programme may offer training in coding, cyber hygiene, legal literacy, and ethical hacking, along with psychological counselling and financial literacy workshops.

It has become a matter of reclaiming misdirected talent, as rehabilitation is not just humane; it is strategic in the fight against cybercrime. On 1st April 2025, IIT Madras Pravartak Technologies Foundation finished training its first batch of law enforcement officers in cybersecurity techniques. The initiative is commendable, and a similar initiative may prove effective for youth accused of cybercrimes; preferably, they can be involved in such rehabilitation and empowerment programmes during the early stages of criminal proceedings. This will help prevent recidivism and convert digital deviance into digital responsibility. To incorporate this into law enforcement, the police can identify first-time, non-habitual offenders involved in low-impact cybercrimes as candidates for such programmes. Courts can also exercise their authority at bail hearings to require participation in an approved cyber-reform programme as a condition of bail.

Along with this, under the Juvenile Justice (Care and Protection of Children) Act, 2015, children in conflict with the law can be sent to observation homes where modules for digital literacy and skill development can be implemented. Other methods that may prove effective may include Restorative Justice Programmes, Court-monitored rehabilitation, etc.

Conclusion

A rehabilitative approach does not simply punish offenders; it transforms their knowledge into a force for good, ensuring that cybercrime is not just curtailed but converted into cyber defence and progress.

References

- Ismat Ara, How an impoverished district in Haryana became a breeding ground for cybercriminals, FRONTLINE (Jul. 27, 2023, 11:00 IST), https://frontline.thehindu.com/the-nation/spotlight-how-nuh-district-in-haryana-became-a-breeding-ground-for-cybercriminals/article67098193.ece

- Mohammed Iqbal, Counselling, skilling aim to wean Mewat youth away from cybercrimes, THE HINDU (Jul. 28, 2024, 01:39 AM), https://www.thehindu.com/news/cities/Delhi/counselling-skilling-aim-to-wean-mewat-youth-away-from-cybercrimes/article68454985.ece

- Prawin Kumar Tiwary, Jamtara’s journey from cybercrime to community libraries, 101 REPORTERS (Feb. 16, 2022), https://101reporters.com/article/development/Jamtaras_journey_from_cybercrime_to_community_libraries

- IIT Madras Pravartak completes Training First Batch of Cyber Commandos, PRESS INFORMATION BUREAU (Apr. 1, 2025, 03:36 PM), https://www.pib.gov.in/PressReleasePage.aspx?PRID=2117256


Introduction

Privacy has become a concern for netizens, as social media companies have access to users’ data and the ability to use it as they see fit. Meta’s business model, which relies heavily on collecting and processing user data to deliver targeted advertising, has been under scrutiny. The conflict between Meta and the EU traces back to the GDPR taking effect in 2018. Meta is facing numerous fines for failing to comply with the regulation, mainly for failing to obtain explicit consent for data processing under Chapter 2, Article 7 of the GDPR. The ePrivacy Regulation, which focuses on digital communication and digital data privacy, is the next step in the EU’s arsenal to protect user privacy and will target the cookie policies and tracking technologies crucial to Meta's ad-targeting mechanism. Meta’s core revenue stream comes from targeted advertising, which requires vast amounts of data to create a personalised experience and is therefore under EU scrutiny.

Pay for Privacy Model and its Implications with Critical Analysis

Meta came up with a solution to deal with the privacy issue - ‘Pay or Consent,’ a model that allows users to opt out of data-driven advertising by paying a subscription fee. The platform would offer users a choice between free, ad-supported services and a paid privacy-enhanced experience which aligns with the GDPR and potentially reduces regulatory pressure on Meta.

Meta presently needs to assess the economic feasibility of this model, determine how much a user would be willing to pay for the privacy offered, and consider how to shift its monetisation from ad-driven profits to subscription revenues. This would have a direct impact on Meta’s advertisers, who rely on the platform’s detailed user data for targeted advertising, and could decrease ad revenue and push Meta to develop other monetisation strategies.

For users, increased privacy and greater control over data, in line with global privacy concerns, would be a potential outcome. While users will undoubtedly appreciate the option to avoid tracking, the proposal raises the question of whether the need to pay might become a barrier. This could divide users into cost-conscious and privacy-conscious segments. Setting a reasonable price point is necessary for widespread adoption of the model.

For regulators and the industry, the model would set a new precedent and could influence other companies’ approaches to data privacy. Regulators might welcome this move and encourage further innovation in privacy-respecting business models.

The affordability and fairness of the ‘pay or consent’ model are also in question: it could create digital inequality if privacy comes at a cost or, worse, becomes a luxury. The subscription model would also need to clarify what data would be collected and how it would be used for non-advertising purposes. In terms of market competition, competitors might capitalise on Meta’s subscription model by offering free services with privacy guarantees, which could further pressure Meta to refine its offerings to stay competitive. According to the EU, the model needs to provide a third option for users: a service that still shows ads, but ads that are not personalised.

Meta has further expressed a willingness to explore various models to address regulatory concerns and enhance user privacy. Its recent pilot programs testing the pay-for-privacy model are one example. Meta is actively engaging with EU regulators to find mutually acceptable solutions and to demonstrate its commitment to compliance while advocating for business models that sustain innovation. Meta executives have emphasised the importance of user choice and transparency in their future business strategies.

Future Impact Outlook

- The Meta-EU tussle over privacy is a manifestation of broader debates about data protection and business models in the digital age.

- The EU's stance on Meta’s ‘pay or consent’ model and any new regulatory measures will shape the future landscape of digital privacy; other jurisdictions may take cues from it, potentially leading to global shifts in privacy regulations.

- Meta may need to iterate on its approach based on consumer preferences and concerns. Competitors and tech giants will closely monitor Meta’s strategies, possibly adopting similar models or developing new solutions. The overall approach to privacy could evolve to prioritise user control and transparency.

Conclusion

Consent is the cornerstone of privacy, and sidestepping it violates the rights of users. The manner in which tech companies foster a culture of consent is of paramount importance in today's digital landscape. As Meta explores the ‘pay or consent’ model, it faces both opportunities and challenges in balancing user privacy with business sustainability. This situation serves as a critical test case for the tech industry, highlighting the need for innovative solutions that respect privacy while fostering growth and that account for the specifics of data protection laws worldwide, starting with India’s Digital Personal Data Protection Act, 2023.

Reference:

- https://ciso.economictimes.indiatimes.com/news/grc/eu-tells-meta-to-address-consumer-fears-over-pay-for-privacy/111946106

- https://www.wired.com/story/metas-pay-for-privacy-model-is-illegal-says-eu/

- https://edri.org/our-work/privacy-is-not-for-sale-meta-must-stop-charging-for-peoples-right-to-privacy/

- https://fortune.com/2024/04/17/meta-pay-for-privacy-rejected-edpb-eu-gdpr-schrems/