#FactCheck: Fake Claim on Delhi Authority Culling Dogs After Supreme Court Stray Dog Ban Directive

11 Aug 2025

Executive Summary:

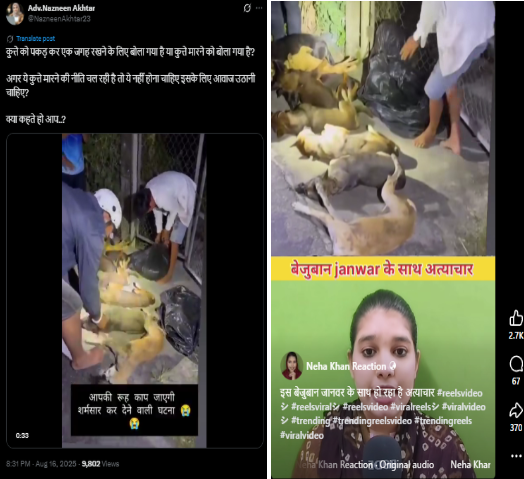

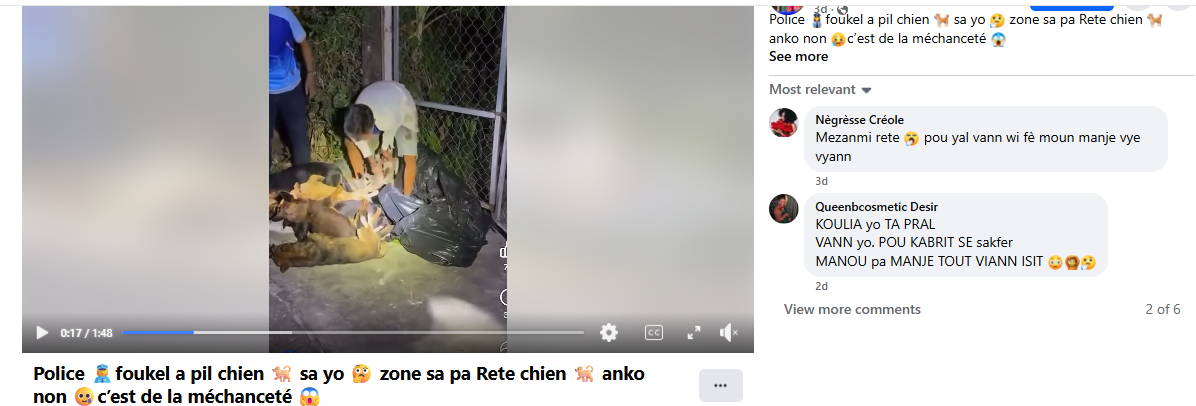

A viral claim alleges that, following the Supreme Court of India’s August 11, 2025 order on relocating stray dogs, authorities in Delhi NCR have begun mass culling. Verification shows the claim to be false and misleading. A reverse image search of the viral video traced it to older posts from outside India, probably linked to Haiti or Vietnam, as indicated by the use of Haitian Creole and Vietnamese respectively. While the exact location cannot be independently verified, it is confirmed that the video is not from Delhi NCR and has no connection to the Supreme Court’s directive. The claim therefore lacks authenticity and is misleading.

Claim:

Several claims have circulated since the Supreme Court of India, on 11th August 2025, ordered the relocation of stray dogs to shelters. The primary claim suggests that authorities, following the order, have begun mass killing or culling of stray dogs, particularly in Delhi and the National Capital Region. This narrative intensified after several videos purporting to show dead or mistreated dogs, allegedly linked to the Supreme Court’s directive, began circulating online.

Fact Check:

After conducting a reverse image search using a keyframe from the viral video, we found similar videos circulating on Facebook. Upon analyzing the language used in one of the posts, it appears to be Haitian Creole (Kreyòl Ayisyen), which is primarily spoken in Haiti. Another similar video was also found on Facebook, where the language used is Vietnamese, suggesting that the post associates the incident with Vietnam.

However, it is important to note that while these posts point towards different locations, the exact origin of the video cannot be independently verified. What can be established with certainty is that the video is not from Delhi NCR, India, as is being claimed. Therefore, the viral claim is misleading and lacks authenticity.

Conclusion:

The viral claim linking the Supreme Court’s August 11, 2025 order on stray dogs to mass culling in Delhi NCR is false and misleading. Reverse image search confirms the video originated outside India, with evidence of Haitian Creole and Vietnamese captions. While the exact source remains unverified, it is clear the video is not from Delhi NCR and has no relation to the Court’s directive. Hence, the claim lacks credibility and authenticity.

Claim: Delhi authorities are culling dogs after the Supreme Court’s stray dog directive of 11th August 2025

Claimed On: Social Media

Fact Check: False and Misleading

Related Blogs

Introduction:

A new Android malware called NGate can steal money from payment cards by relaying the data read by the Near Field Communication (“NFC”) chip to the attacker’s device. NFC is a technology that allows devices such as smartphones to communicate wirelessly over a short distance. In particular, NGate allows attackers to emulate victims’ cards and thereby make fraudulent purchases or withdraw money from ATMs.

About NGate Malware:

The purpose of NGate is to target victims’ payment cards by relaying their NFC data to the attacker’s device. The malware combines phishing tactics with the NFC functionality of Android-based devices.

Modus Operandi:

- Phishing Campaigns: The first step is a spoofed email or SMS that lures users into installing Progressive Web Apps (“PWAs”) or WebAPKs presented as genuine banking applications. These apps usually copy the layout and logo of the targeted bank’s authentic app, which makes them believable.

- Installation of NGate: Once the victim downloads the app, they are asked to enter personal details, including account numbers and PINs. They are also instructed to turn on NFC on their device and to hold their payment card against the back of the phone so that it can be scanned.

- NFCGate Component: One of the main components of NGate is NFCGate, a tool created by students of the Technical University of Darmstadt. This tool allows the malware to:

- Collect NFC traffic from payment cards in the vicinity.

- Transmit, or relay this data to the attacker’s device through a server.

- Replay data that has been previously intercepted or otherwise copied.

It is important to note that some features of NFCGate require a rooted device; however, relaying NFC traffic also works on devices that are not rooted, and can therefore potentially ensnare more victims.

Technical Mechanism of Data Theft:

- Data Capture: The malware uses the NFC feature of Android devices to read information from a payment card when the card is near the infected device. It is able to intercept and capture sensitive card details.

- Data Relay: The stolen information is transmitted through a server to the attacker’s device, putting the attacker in a position to mimic the victim’s card.

- Unauthorized Transactions: Attackers can then pay at merchants or withdraw money from NFC-enabled ATMs. This capability marks a new level of Android malware, in that attackers can steal money directly without having to get hold of the physical card.

Social Engineering Tactics:

In most cases, attackers use social engineering techniques to obtain more information from the target before carrying out the attack. In the second phase, attackers may pretend to be bank representatives, claim that there is a problem with the account, and offer a download of NGate, which is in fact a Trojan disguised as an application for confirming the security of the account. This method allows the attackers to obtain the victim’s PIN, which enables them to withdraw money from the victim’s account without authorization.

Technical Analysis:

The malicious file hashes and phishing links identified in the analysis are listed below:

Malicious File Hashes:

csob_smart_klic.apk:

- MD5: 7225ED2CBA9CB6C038D8

- Classification: Android/Spy.NGate.B

csob_smart_klic.apk:

- MD5: 66DE1E0A2E9A421DD16B

- Classification: Android/Spy.NGate.C

george_klic.apk:

- MD5: DA84BC78FF2117DDBFDC

- Classification: Android/Spy.NGate.C

george_klic-0304.apk:

- MD5: E7AE59CD44204461EDBD

- Classification: Android/Spy.NGate.C

rb_klic.apk:

- MD5: 103D78A180EB973B9FFC

- Classification: Android/Spy.NGate.A

rb_klic.apk:

- MD5: 11BE9715BE9B41B1C852

- Classification: Android/Spy.NGate.C
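The hashes above can be used as indicators when triaging a suspicious APK. The following is a minimal sketch rather than a definitive scanner. Note that each listed value is 20 hexadecimal characters long, which is shorter than a complete MD5 digest (32 characters) or SHA-1 digest (40 characters), so the sketch assumes the values are prefixes of a longer digest and leaves the hash algorithm as a parameter. The names `LISTED_PREFIXES`, `classify` and `classify_file` are hypothetical and chosen only for illustration.

```python
import hashlib
from typing import Optional

# Values exactly as listed above. Each is 20 hex characters, shorter than a
# full MD5 (32) or SHA-1 (40) digest, so this sketch treats them as prefixes.
LISTED_PREFIXES = {
    "7225ED2CBA9CB6C038D8": "Android/Spy.NGate.B",
    "66DE1E0A2E9A421DD16B": "Android/Spy.NGate.C",
    "DA84BC78FF2117DDBFDC": "Android/Spy.NGate.C",
    "E7AE59CD44204461EDBD": "Android/Spy.NGate.C",
    "103D78A180EB973B9FFC": "Android/Spy.NGate.A",
    "11BE9715BE9B41B1C852": "Android/Spy.NGate.C",
}


def classify(data: bytes, algorithm: str = "md5") -> Optional[str]:
    """Return the listed classification if the digest starts with a listed prefix."""
    digest = hashlib.new(algorithm, data).hexdigest().upper()
    for prefix, label in LISTED_PREFIXES.items():
        if digest.startswith(prefix):
            return label
    return None


def classify_file(path: str, algorithm: str = "md5") -> Optional[str]:
    """Hash a file on disk and look it up in the listed prefixes."""
    with open(path, "rb") as handle:
        return classify(handle.read(), algorithm)
```

A result of `None` only means that the file does not match these particular samples; it says nothing about whether the file is safe. Where the complete digests are available from the original research, exact matching on the full value is preferable to prefix matching.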

Phishing URLs:

Phishing URL:

- https://client.nfcpay.workers[.]dev/?key=8e9a1c7b0d4e8f2c5d3f6b2

Additionally, several distinct phishing websites have been identified, including:

- rb.2f1c0b7d.tbc-app[.]life

- geo-4bfa49b2.tbc-app[.]life

- rb-62d3a.tbc-app[.]life

- csob-93ef49e7a.tbc-app[.]life

- george.tbc-app[.]life
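The indicators above are written in defanged form (`[.]` instead of `.`) so that they cannot be clicked by accident. As a minimal sketch of how they might be used in a blocklist check, the code below restores the dots and compares the host part of a URL against the listed hosts. The function names (`refang`, `host_of`, `is_listed`) are hypothetical, and exact host matching is an assumption of this sketch; whether the parent domains should also be blocked is a policy decision the list itself does not settle.

```python
from urllib.parse import urlsplit

# Hosts exactly as listed above, still in defanged form.
DEFANGED_INDICATORS = [
    "client.nfcpay.workers[.]dev",
    "rb.2f1c0b7d.tbc-app[.]life",
    "geo-4bfa49b2.tbc-app[.]life",
    "rb-62d3a.tbc-app[.]life",
    "csob-93ef49e7a.tbc-app[.]life",
    "george.tbc-app[.]life",
]


def refang(indicator: str) -> str:
    """Turn a defanged indicator back into a normal lower-case host or URL."""
    return indicator.replace("[.]", ".").lower()


BLOCKED_HOSTS = {refang(item) for item in DEFANGED_INDICATORS}


def host_of(url_or_host: str) -> str:
    """Extract the host from a URL or a bare hostname (defanged or not)."""
    candidate = refang(url_or_host.strip())
    if "://" not in candidate:
        # urlsplit only recognises a host when a "//" authority marker is present.
        candidate = "//" + candidate
    return (urlsplit(candidate).hostname or "").rstrip(".")


def is_listed(url_or_host: str) -> bool:
    """Exact-match check against the listed hosts (an assumption of this sketch)."""
    return host_of(url_or_host) in BLOCKED_HOSTS
```

For example, `is_listed("GEORGE.tbc-app.life")` matches because the comparison is case-insensitive, while an unrelated host such as `example.com` does not.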

Analysis:

Broader Implications of NGate:

NGate’s capabilities mean that its impact is not limited to financial fraud. An attacker could also clone NFC access cards and gain entry to restricted areas, such as corporate offices or other restricted facilities. Moreover, the ability to capture and analyze NFC traffic can be regarded as a threat in terms of identity theft and other forms of cybercrime.

Precautionary measures to be taken:

To protect against NGate and similar threats, users should consider the following strategies:

- Disable NFC: If NFC is not used often, it is safer to turn it off on Android devices. This can usually be done from the device’s settings.

- Scrutinize App Permissions: Be careful about the permissions granted to installed apps, particularly those that are allowed to access device features. It is also very important to download applications only from genuine stores such as the Google Play Store.

- Use Security Software: Installing reputable security software can help detect and block malware.

- Stay Informed: Knowing the risks associated with the use of NFC is crucial to safeguarding one’s identity.

Conclusion:

Malware such as NGate is proof of how quickly threats to mobile payments evolve. By abusing the NFC function, NGate marks a clear step up in Android malware: attackers can directly manipulate victims’ payment data without physically holding the payment card. This underscores the need to be careful when downloading applications and to pay close attention to the permissions granted to them. Turning NFC off when not in use, using good security software and staying aware of the latest scams are some of the measures that help to fight this kind of financial fraud. Attackers keep improving their methods, so individuals and companies must take the right steps to avoid breaches of privacy and identity theft.

Reference:

- https://www.welivesecurity.com/en/eset-research/ngate-android-malware-relays-nfc-traffic-to-steal-cash/

- https://therecord.media/android-malware-atm-stealing-czech-banks

- https://www.darkreading.com/mobile-security/nfc-traffic-stealer-targets-android-users-and-their-banking-info

- https://cybersecuritynews.com/new-ngate-android-malware/

Introduction

The use of AI in content production, especially images and videos, is changing the foundations of evidence. AI-generated videos and images can mirror a person’s facial features, voice, or actions with such fidelity that the average individual may not be able to distinguish real from fake. The technology’s capacity for creative solutions is a genuine benefit. However, its misuse has escalated rapidly in recent years. This creates threats to privacy and dignity and facilitates the creation of dis/misinformation. Its real-world consequences include the manipulation of elections, national security threats, and the erosion of trust in society.

Why India Needs Deepfake Regulation

Deepfake regulation is urgently needed in India, evidenced by the recent Rashmika Mandanna incident, where a hoax deepfake of an actress created a scandal throughout the country. This was the first time that an individual's image was superimposed on the body of another woman in a viral deepfake video that fooled many viewers and created outrage among those who were deceived by the video. The incident even led to law enforcement agencies issuing warnings to the public about the dangers of manipulated media.

This was not an isolated incident; many influencers, actors, leaders and ordinary people have fallen victim to deepfake pornography, deepfake speech scams, fraud, and other malicious uses of deepfake technology. The rapid proliferation of deepfake technology is outpacing lawmakers’ efforts to regulate its widespread use. In this context, an MP introduced a Private Member’s Bill in the Lok Sabha during its Winter Session. Although such Bills have historically had a low rate of success in being passed into law, they give the government an opportunity to take notice of and respond to emerging issues. In fact, Private Member’s Bills have been the catalyst for government action on many important matters and have provided an avenue for parliamentary discussion and future policy creation. The introduction of this Bill reflects public concern about digital impersonation and shows that Parliament recognises deepfakes as a significant problem in need of a legislative framework.

Key Features Proposed by the New Deepfake Regulation Bill

The proposed legislation aims to create a strong legal structure around the creation, distribution and use of deepfake content in India. Its core proposals are:

1. Prior Consent Requirement: A person’s written consent must be obtained before deepfake media depicting them, including digital representations of their face, image, likeness or voice, is produced or distributed. This aims to protect women, celebrities, minors, and everyday citizens against the use of their identities to harm them or their reputations, or to harass them.

2. Penalties for Malicious Deepfakes: The Bill proposes serious criminal penalties for creating or sharing deepfake media, particularly when it is intended to cause harm (to defame, harass, impersonate, deceive or manipulate another person). It also addresses the use of deepfakes for financial fraud, political misinformation and election interference, as well as other types of explicit AI-generated media.

3. Establishment of a Deepfake Task Force: The task force would examine the potential impact of deepfakes on national security, elections, public order, public safety and privacy. It would work with academic institutions, AI research labs and technology companies to create advanced tools for the detection of deepfakes and establish best practices for the safe and responsible use of generative AI.

4. Creation of a Deepfake Detection and Awareness Fund: The fund would support the development of tools for detecting deepfakes, increase the capacity of law enforcement agencies to investigate cybercrime, promote public awareness of deepfakes through national campaigns, and finance research on artificial intelligence safety and misinformation.

How Other Countries Are Handling Deepfakes

1. United States

Many states in the United States, including California and Texas, have enacted laws prohibiting the use of politically deceptive deepfakes during elections. Additionally, the federal government is developing regulations that would require AI-generated content to be clearly labelled. Social media platforms are also being encouraged to require users to disclose deepfakes.

2. United Kingdom

In the United Kingdom, it is illegal to create or distribute intimate deepfake images without consent; violators face jail time. The Online Safety Act emphasises the accountability of digital media providers by requiring them to identify, eliminate, and avert harmful synthetic content, which makes their role in curating safe environments all the more important.

3. European Union

The EU has enacted the EU AI Act, which governs the use of deepfakes by requiring an explicit label to be affixed to any AI-generated content. The absence of a label would subject an offending party to potentially severe regulatory consequences; therefore, any platform wishing to do business in the EU should evaluate the risks associated with deepfakes and adhere strictly to the EU's guidelines for transparency regarding manipulated media.

4. China

China has among the most rigorous regulations regarding deepfakes anywhere in the world. All AI-manipulated media must be marked with a visible watermark, users must authenticate their identities before being allowed to use advanced AI tools, and online platforms are legally required to take proactive measures to identify and remove synthetic material from circulation.

Conclusion

Deepfake technology has the potential to be one of the greatest (and most dangerous) innovations of AI. Incidents such as the one involving Rashmika Mandanna, together with the global proliferation of deepfake abuse, demonstrate how easily truth can be altered in the digital realm. The new Private Member’s Bill introduced in India seeks to provide a comprehensive framework to address these abuses, based on prior consent, effective penalties, technical preparedness, and public education and awareness. With other nations moving towards increased regulation of AI technology, proposals such as this give India a direction for becoming a leader in responsible digital governance.

References

- https://www.ndtv.com/india-news/lok-sabha-introduces-bill-to-regulate-deepfake-content-with-consent-rules-9761943

- https://m.economictimes.com/news/india/shiv-sena-mp-introduces-private-members-bill-to-regulate-deepfakes/articleshow/125802794.cms

- https://www.bbc.com/news/world-asia-india-67305557

- https://www.akingump.com/en/insights/blogs/ag-data-dive/california-deepfake-laws-first-in-country-to-take-effect

- https://codes.findlaw.com/tx/penal-code/penal-sect-21-165/

- https://www.mishcon.com/news/when-ai-impersonates-taking-action-against-deepfakes-in-the-uk#:~:text=As%20of%2031%20January%202024,of%20intimate%20deepfakes%20without%20consent.

- https://www.politico.eu/article/eu-tech-ai-deepfakes-labeling-rules-images-elections-iti-c2pa/

- https://www.reuters.com/article/technology/china-seeks-to-root-out-fake-news-and-deepfakes-with-new-online-content-rules-idUSKBN1Y30VT/

Introduction

In a distressing incident that highlights the growing threat of cyber fraud, a software engineer in Bangalore fell victim to fraudsters who posed as police officials. These miscreants, operating under the guise of a fake courier service and law enforcement, employed a sophisticated scam to dupe unsuspecting individuals out of their hard-earned money. Unfortunately, this is not an isolated incident, as several cases of similar fraud have been reported recently in Bangalore and other cities. It is crucial for everyone to be aware of these scams and adopt preventive measures to protect themselves.

Bangalore Techie Falls Victim to ₹33 Lakh Scam

The software engineer received a call from someone claiming to be from FedEx courier service, informing him that a parcel sent in his name to Taiwan had been seized by the Mumbai police for containing illegal items. The call was then transferred to an impersonator posing as a Mumbai Deputy Commissioner of Police (DCP), who alleged that a money laundering case had been registered against him. The fraudsters then coerced him into joining a Skype call for verification purposes, during which they obtained his personal details, including bank account information.

Under the guise of verifying his credentials, the fraudsters manipulated him into transferring a significant amount of money to various accounts. They assured him that the funds would be returned after the completion of the procedure. However, once the money was transferred, the fraudsters disappeared, leaving the victim devastated and financially drained.

Best Practices to Stay Safe

- Be vigilant and skeptical: Maintain a healthy level of skepticism when receiving unsolicited calls or messages, especially if they involve sensitive information or financial matters. Be cautious of callers pressuring you to disclose personal details or engage in immediate financial transactions.

- Verify the caller’s authenticity: If someone claims to represent a legitimate organisation or law enforcement agency, independently verify their credentials. Look up the official contact details of the organization or agency and reach out to them directly to confirm the authenticity of the communication.

- Never share sensitive information: Avoid sharing personal information, such as bank account details, passwords, or Aadhaar numbers, over the phone or through unfamiliar online platforms. Legitimate organizations will not ask for such information without proper authentication protocols.

- Use secure communication channels: When communicating sensitive information, prefer secure platforms or official channels that provide end-to-end encryption. Avoid switching to alternative platforms or applications suggested by unknown callers, as fraudsters can exploit these.

- Educate yourself and others: Stay informed about the latest cyber fraud techniques and scams prevalent in your region. Share this knowledge with family, friends, and colleagues to create awareness and prevent them from falling victim to similar schemes.

- Implement robust security measures: Keep your devices and software updated with the latest security patches. Utilize robust anti-virus software, firewalls, and spam filters to safeguard against malicious activities. Regularly review your financial statements and account activity to detect any unauthorized transactions promptly.

Conclusion:

The incident involving the Bangalore techie and other victims of cyber fraud highlights the importance of remaining vigilant and adopting preventive measures to safeguard oneself from such scams. It is disheartening to see individuals falling prey to impersonators who exploit their trust and manipulate them into sharing sensitive information. By staying informed, exercising caution, and following best practices, we can collectively minimize the risk and protect ourselves from these fraudulent activities. Remember, the best defense against cyber fraud is a well-informed and alert individual.