#FactCheck - "Viral Video Misleadingly Claims Surrender to Indian Army, Actually Shows Bangladesh Army"

Executive Summary:

A viral video circulating on social media purports to show lawbreakers surrendering to the Indian Army. However, our verification shows that the video is of a group surrendering to the Bangladesh Army and is not related to India. The claim that it is related to the Indian Army is false and misleading.

Claims:

A viral video falsely claims that a group of lawbreakers is surrendering to the Indian Army, linking the footage to recent events in India.

Fact Check:

Upon receiving the viral posts, we analysed the keyframes of the video through Google Lens search. The search directed us to credible news sources in Bangladesh, which confirmed that the video was filmed during a surrender event involving criminals in Bangladesh, not India.

We further verified the video by cross-referencing it with official military and news reports from India. None of the sources supported the claim that the video involved the Indian Army. Instead, the video was traced to another Bangladeshi media outlet covering the same news.

No credible Indian news outlet was found to have covered the video. The viral video was clearly taken out of context and misrepresented to mislead viewers.

Conclusion:

The viral video claiming to show lawbreakers surrendering to the Indian Army is footage from Bangladesh. The CyberPeace Research Team confirms that the video is falsely attributed to India, making the claim misleading.

- Claim: The video shows miscreants surrendering to the Indian Army.

- Claimed on: Facebook, X, YouTube

- Fact Check: False & Misleading

Related Blogs

Introduction

The advent of AI-driven deepfake technology has facilitated the creation of explicit counterfeit videos for sextortion purposes. There has been an alarming increase in the use of Artificial Intelligence to create fake explicit images or videos for sextortion.

What is AI Sextortion and Deepfake Technology

AI sextortion refers to the use of artificial intelligence (AI) technology, particularly deepfake algorithms, to create counterfeit explicit videos or images for the purpose of harassing, extorting, or blackmailing individuals. Deepfake technology utilises AI algorithms to manipulate or replace faces and bodies in videos, making them appear realistic and often indistinguishable from genuine footage. This enables malicious actors to create explicit content that falsely portrays individuals engaging in sexual activities, even if they never participated in such actions.

Background on the Alarming Increase in AI Sextortion Cases

Recently, there has been a significant increase in AI sextortion cases. Advancements in AI and deepfake technology have made it easier for perpetrators to create highly convincing fake explicit videos or images. The algorithms behind these technologies have become more sophisticated, allowing for more seamless and realistic manipulations. In addition, AI tools and resources have become more accessible, with open-source software and cloud-based services readily available to anyone. This accessibility has lowered the barrier to entry, enabling individuals with malicious intent to exploit these technologies for sextortion purposes.

The proliferation of sharing content on social media

The proliferation of social media platforms and the widespread sharing of personal content online have provided perpetrators with a vast pool of potential victims’ images and videos. By utilising these readily available resources, perpetrators can create deepfake explicit content that closely resembles the victims, increasing the likelihood of success in their extortion schemes.

Furthermore, the anonymity and wide reach of the internet and social media platforms allow perpetrators to distribute manipulated content quickly and easily. They can target individuals specifically or upload the content to public forums and pornographic websites, amplifying the impact and humiliation experienced by victims.

What are law enforcement agencies doing?

The alarming increase in AI sextortion cases has prompted concern among law enforcement agencies, advocacy groups, and technology companies. It is high time for strong efforts to raise awareness about the risks of AI sextortion, develop detection and prevention tools, and strengthen legal frameworks to address these emerging threats to individuals’ privacy, safety, and well-being.

There is a need for technological solutions: developing and deploying advanced AI-based detection tools to identify and flag AI-generated deepfake content on platforms and services, and collaborating with technology companies to integrate such solutions.

Collaboration with social media platforms is also needed. Social media platforms and technology companies can reframe and enforce community guidelines and policies against disseminating AI-generated explicit content, and can cooperate in developing robust content moderation systems and reporting mechanisms.

There is a need to strengthen the legal frameworks to address AI sextortion, including laws that specifically criminalise the creation, distribution, and possession of AI-generated explicit content. Such laws should ensure adequate penalties for offenders and include provisions for cross-border cooperation.

Proactive measures to combat AI-driven sextortion

Prevention and Awareness: Proactive measures raise awareness about AI sextortion, helping individuals recognise risks and take precautions.

Early Detection and Reporting: Proactive measures employ advanced detection tools to identify AI-generated deepfake content early, enabling prompt intervention and support for victims.

Legal Frameworks and Regulations: Proactive measures strengthen legal frameworks to criminalise AI sextortion, facilitate cross-border cooperation, and impose offender penalties.

Technological Solutions: Proactive measures focus on developing tools and algorithms to detect and remove AI-generated explicit content, making it harder for perpetrators to carry out their schemes.

International Cooperation: Proactive measures foster collaboration among law enforcement agencies, governments, and technology companies to combat AI sextortion globally.

Support for Victims: Proactive measures provide comprehensive support services, including counselling and legal assistance, to help victims recover from emotional and psychological trauma.

Implementing these proactive measures will help create a safer digital environment for all.

Misuse of Technology

Misusing technology, particularly AI-driven deepfake technology, in the context of sextortion raises serious concerns.

Exploitation of Personal Data: Perpetrators exploit personal data and images available online, such as social media posts or captured video chats, to create AI-manipulated content. This manipulation violates privacy rights and exploits the vulnerability of individuals who trust that their personal information will be used responsibly.

Facilitation of Extortion: AI sextortion often involves perpetrators demanding monetary payments, sexually themed images or videos, or other favours under the threat of releasing manipulated content to the public or to the victims’ friends and family. The realistic nature of deepfake technology increases the effectiveness of these extortion attempts, placing victims under significant emotional and financial pressure.

Amplification of Harm: Perpetrators use deepfake technology to create explicit videos or images that appear realistic, thereby increasing the potential for humiliation, harassment, and psychological trauma suffered by victims. The wide distribution of such content on social media platforms and pornographic websites can perpetuate victimisation and cause lasting damage to their reputation and well-being.

Targeting Teenagers: The targeting of teenagers with extortion demands is a particularly alarming aspect of AI sextortion. Teenagers are especially vulnerable due to their increased use of social media platforms for sharing personal information and images. Perpetrators exploit this exposure to manipulate and coerce them.

Erosion of Trust: Misusing AI-driven deepfake technology erodes trust in digital media and online interactions. As deepfake content becomes more convincing, it becomes increasingly challenging to distinguish between real and manipulated videos or images.

Proliferation of Pornographic Content: The misuse of AI technology in sextortion contributes to the proliferation of non-consensual pornography (also known as “revenge porn”) and the availability of explicit content featuring unsuspecting individuals. This perpetuates a culture of objectification, exploitation, and non-consensual sharing of intimate material.

Conclusion

Addressing the concern of AI sextortion requires a multi-faceted approach, including technological advancements in detection and prevention, legal frameworks to hold offenders accountable, awareness about the risks, and collaboration between technology companies, law enforcement agencies, and advocacy groups to combat this emerging threat and protect the well-being of individuals online.

Executive Summary:

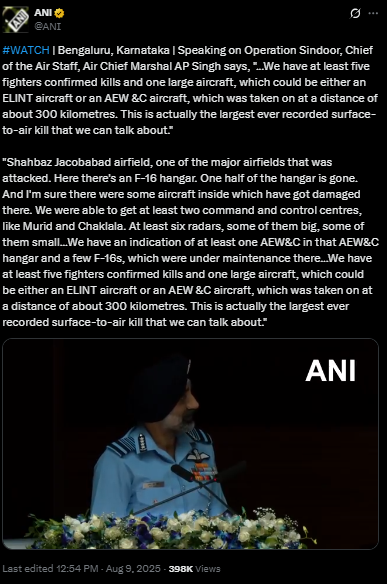

A video circulating on social media falsely claims to show Indian Air Chief Marshal AP Singh admitting that India lost six jets and a Heron drone during Operation Sindoor in May 2025. Our research found that the footage was digitally manipulated by inserting an AI-generated voice clone of Air Chief Marshal Singh into his recent speech, which was streamed live on August 9, 2025.

Claim:

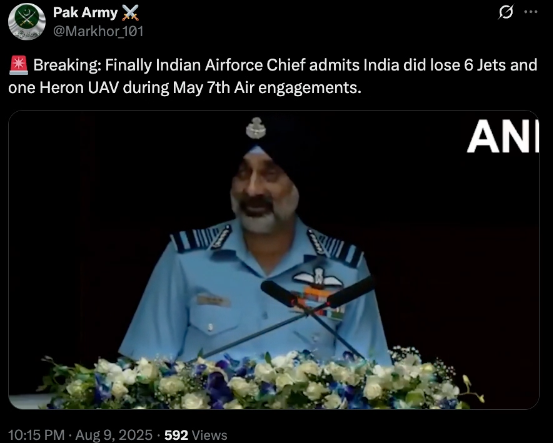

A viral video (archived video) (another link) shared by an X user carries the caption “Breaking: Finally Indian Airforce Chief admits India did lose 6 Jets and one Heron UAV during May 7th Air engagements.” The video purports to show the Air Chief Marshal admitting the aforementioned losses during Operation Sindoor.

Fact Check:

By conducting a reverse image search on key frames from the video, we found a clip posted by ANI's official X handle. After watching the full clip, we did not find any mention of the alleged claim.

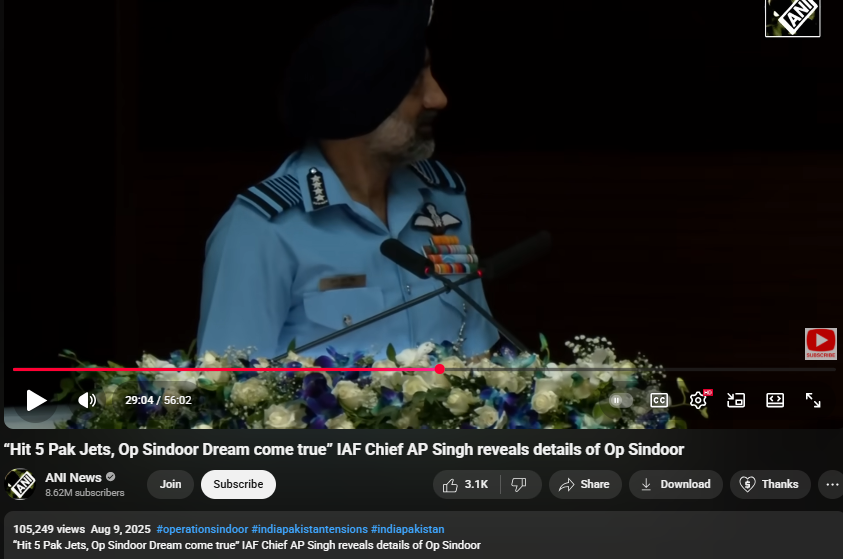

On further research, we found an extended version of the video on ANI's official YouTube channel, published on 9th August 2025. At the 16th Air Chief Marshal L.M. Katre Memorial Lecture in Marathahalli, Bengaluru, Air Chief Marshal AP Singh did not mention any loss of six jets or a drone in relation to the conflict with Pakistan. The discrepancies observed in the viral clip suggest that portions of the audio may have been digitally manipulated.

The audio in the viral video, particularly the segment at the 29:05 minute mark alleging the loss of six Indian jets, appeared to be manipulated and displayed noticeable inconsistencies in tone and clarity.

Conclusion:

The viral video claiming that Air Chief Marshal AP Singh admitted to the loss of six jets and a Heron UAV during Operation Sindoor is misleading. A reverse image search traced the footage to a clip posted by ANI in which no such remarks were made. Further, an extended version on ANI’s official YouTube channel confirmed that, during the 16th Air Chief Marshal L.M. Katre Memorial Lecture, no reference was made to the alleged losses. Additionally, the viral video’s audio, particularly around the 29:05 mark, showed signs of manipulation with noticeable inconsistencies in tone and clarity.

- Claim: Viral Video Claiming IAF Chief Acknowledged Loss of Jets Found Manipulated

- Claimed On: Social Media

- Fact Check: False and Misleading

Introduction

Children today are growing up amidst technology, and the internet has become an important part of their lives. The internet provides children with a wealth of recreational and educational options and learning environments, but it also presents largely unseen difficulties, particularly in the context of deepfakes and misinformation. AI is capable of performing complex tasks quickly. However, misuse of AI technologies has led to an increase in cyber crimes. The evolving nature of cyber threats can have a negative impact on children's wellbeing and safety while using the internet.

India's Digital Environment

India has one of the world's fastest-growing internet user bases, and more young netizens are getting online with every passing day. The internet has now become an inseparable part of their everyday lives, be it through social media or online courses. But the speed at which the digital world is evolving has raised many privacy and safety concerns, increasing the chance of exposure to potentially dangerous content.

Misinformation: The Rising Concern

Today, the internet is filled with various types of misinformation, and youngsters are especially vulnerable to its adverse effects. Given the diversity of language and culture in India, the spread of misinformation can have a vast negative impact on society. In particular, misinformation in education has the power to mislead young minds and create hindrances in their cognitive development.

To address this issue, it is important that parents, academia, government, industry and civil society work together to promote digital literacy initiatives that teach children to critically analyse online material, which can help them navigate the digital realm.

Deepfakes: The Deceptive Mirage

Deepfakes, or digitally altered videos and/or images made with the use of artificial intelligence, pose a huge internet threat. The possible ramifications of deepfake technology are concerning in India, since there is a high level of dependence on the media. Deepfakes can have far-reaching repercussions, from altering political narratives to disseminating misleading information.

Addressing the deepfake problem demands a multifaceted strategy. Media literacy programs should be integrated into the educational curriculum to assist youngsters in distinguishing between legitimate and distorted content. Furthermore, strict laws as well as technology developments are required to detect and limit the negative impact of deepfakes.

Safeguarding Children in Cyberspace

● Parental Guidance and Open Communication: Open communication and parental guidance are essential for protecting children's internet safety. It is necessary to have open discussions about the possible consequences and appropriate use of the internet. Parents should actively participate in their children's online activities and understand the platforms and material their children are consuming online.

● Educational Initiatives: Comprehensive programs for digital literacy must be implemented in educational settings. Critical thinking abilities, internet etiquette, and knowledge of the risks associated with deepfakes and misinformation should all be included in these programs. Fostering a secure online environment requires giving young netizens the tools they need to question and examine digital content.

● Policies and Rules: Acknowledging the risks posed by the misuse of advanced technologies such as AI and deepfakes, the Indian government is working towards dedicated legislation to tackle the issues arising from the misuse of deepfake technology by bad actors. The government has recently issued an advisory to social media intermediaries to identify misinformation and deepfakes and to ensure compliance with the Information Technology (IT) Rules 2021. Online platforms have a legal obligation to prevent the spread of misinformation and to exercise due diligence, making reasonable efforts to identify misinformation and deepfakes. Legal frameworks need to be equipped to handle the challenges posed by AI. Accountability in AI is a complex issue that requires comprehensive legal reforms. In light of the various reported cases of deepfakes being misused and spread on social media, there is a need to adopt and enforce strong laws to address the challenges posed by misinformation and deepfakes. Working with technology companies to implement advanced content detection tools and ensuring that law enforcement takes swift action against those who misuse technology will act as a deterrent to cyber criminals.

● Digital parenting: It is important for parents to keep up with the latest trends and digital technologies. Digital parenting includes understanding privacy settings, monitoring online activity, and using parental control tools to create a safe online environment for children.

Conclusion

As India continues to move forward digitally, protecting children in cyberspace has become a shared responsibility. By promoting digital literacy, encouraging open communication and enforcing strong laws, we can create a safer online environment for younger generations. Knowledge, understanding, and active efforts to combat misinformation and deeply entrenched myths are the keys to building a safety net in the online age. Social media intermediaries and platforms must ensure compliance with the IT Rules 2021, the IT Act, 2000 and the newly enacted Digital Personal Data Protection Act, 2023. It is the shared responsibility of the government, parents and teachers, users and organisations to establish a safe online space for children.