#FactCheck - "AI-Generated Image of UK Police Officers Bowing to Muslims Goes Viral"

Executive Summary:

A viral picture on social media showing UK police officers bowing to a group of Muslims has sparked debate and discussion. An investigation by the CyberPeace Research Team found that the image is AI-generated. The viral claim is false and misleading.

Claims:

A viral image on social media purports to show UK police officers bowing to a group of Muslim people on the street.

Fact Check:

We conducted a reverse image search on the viral image. It did not lead to any credible news source or original post that confirmed the image's authenticity. In our image analysis, we found a number of anomalies that are commonly seen in AI-generated images, particularly in the uniforms and facial expressions of the police officers. In addition, the shadows and reflections on the officers' uniforms did not match the lighting of the scene, and the facial features of the individuals in the image appeared unnaturally smooth and lacked the detail expected in real photographs.

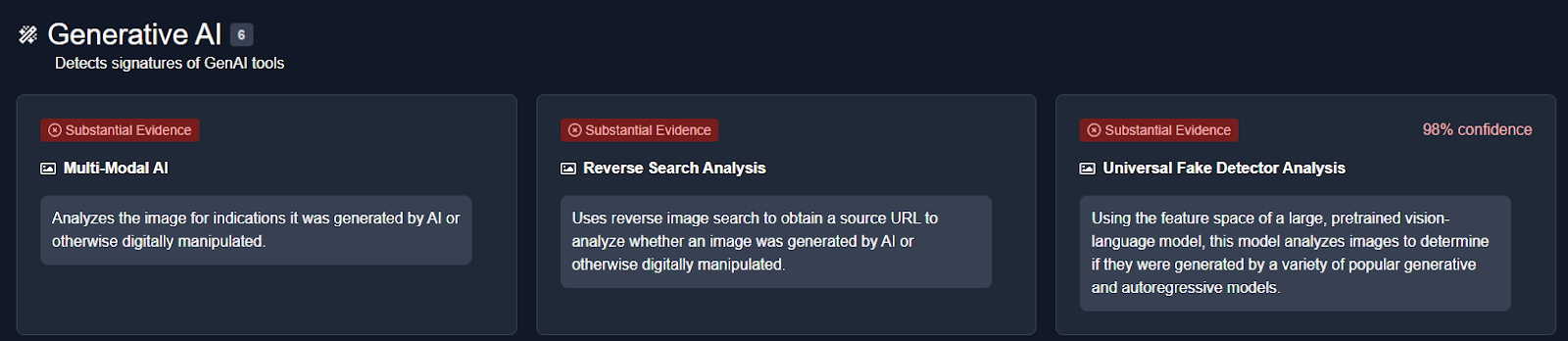

We then analysed the image using an AI detection tool named True Media. The tool indicated that the image was highly likely to have been generated by AI.

We also checked official UK police channels and news outlets for any records or reports of such an event. No credible sources reported or documented any instance of UK police officers bowing to a group of Muslims, further confirming that the image is not based on a real event.

Conclusion:

The viral image of UK police officers bowing to a group of Muslims is AI-generated. The CyberPeace Research Team confirms that the picture was artificially created and that the viral claim is false and misleading.

- Claim: UK police officers were photographed bowing to a group of Muslims.

- Claimed on: X, Website

- Fact Check: Fake & Misleading