Digitally Altered Photo of Rowan Atkinson Circulates on Social Media

Executive Summary:

A photo claiming to show Mr. Rowan Atkinson, the famous actor who played the role of Mr. Bean, lying sick in bed is circulating on social media. However, this claim is false. The image is a digitally altered picture of Mr. Barry Balderstone from Bollington, England, who died in October 2019 from advanced Parkinson’s disease. Reverse image searches and news reports confirm that the original photo is of Barry, not Rowan Atkinson. Furthermore, there are no reports of Atkinson being ill; he was recently seen attending the 2024 British Grand Prix. Thus, the viral claim is baseless and misleading.

Claims:

A viral photo purports to show Rowan Atkinson, aka Mr. Bean, lying on a bed in a sick condition.

Fact Check:

When we received the posts, we first ran a keyword search based on the claim, but found no reports supporting it. We did, however, find an interview video showing Rowan Atkinson attending an F1 race on July 7, 2024.

We then ran a reverse image search on the viral image and found a news report with a photo that closely resembles it; the T-shirt appears to be the same in both images.

The man in this photo is Barry Balderstone, a civil engineer from Bollington, England, who died in October 2019 due to advanced Parkinson’s disease. According to the news report, Barry suffered from many illnesses, and his application for extensive healthcare reimbursement was rejected by the East Cheshire Clinical Commissioning Group.

Taking a cue from this, we analyzed the image with an AI image detection tool named TrueMedia. The tool flagged the image as AI-manipulated: the original photo was altered by replacing the face with that of Rowan Atkinson, aka Mr. Bean.

Hence, it is clear that the viral image claimed to show Rowan Atkinson bedridden is fake and misleading. Netizens should verify content before sharing it on the internet.

Conclusion:

Therefore, it can be summarized that the photo claiming to show Rowan Atkinson in a sick state is fake and was created by manipulating another man’s image. The original photo features Barry Balderstone, who was diagnosed with stage 4 Parkinson’s disease and subsequently died in 2019. In fact, Rowan Atkinson appeared perfectly healthy recently at the 2024 British Grand Prix. It is important for people to check the authenticity of content before sharing it, so as to avoid spreading misinformation.

- Claim: A viral photo of Rowan Atkinson, aka Mr. Bean, lying on a bed in a sick condition.

- Claimed on: X, Facebook

- Fact Check: Fake & Misleading

Related Blogs

Executive Summary

A video circulating on social media shows an electric car allegedly being powered by a portable generator attached to it. The clip is being shared with the claim that the generator is directly running the vehicle, suggesting a groundbreaking or unusual technological feat. However, research conducted by CyberPeace found the viral claim to be false. Our research revealed that the video is not authentic but AI-generated.

Claim

On February 22, 2026, a user on X (formerly Twitter) shared the viral video with the caption: “After watching this video, Newton might turn in his grave.” The post implied that the video demonstrates a scientific impossibility.

Fact Check:

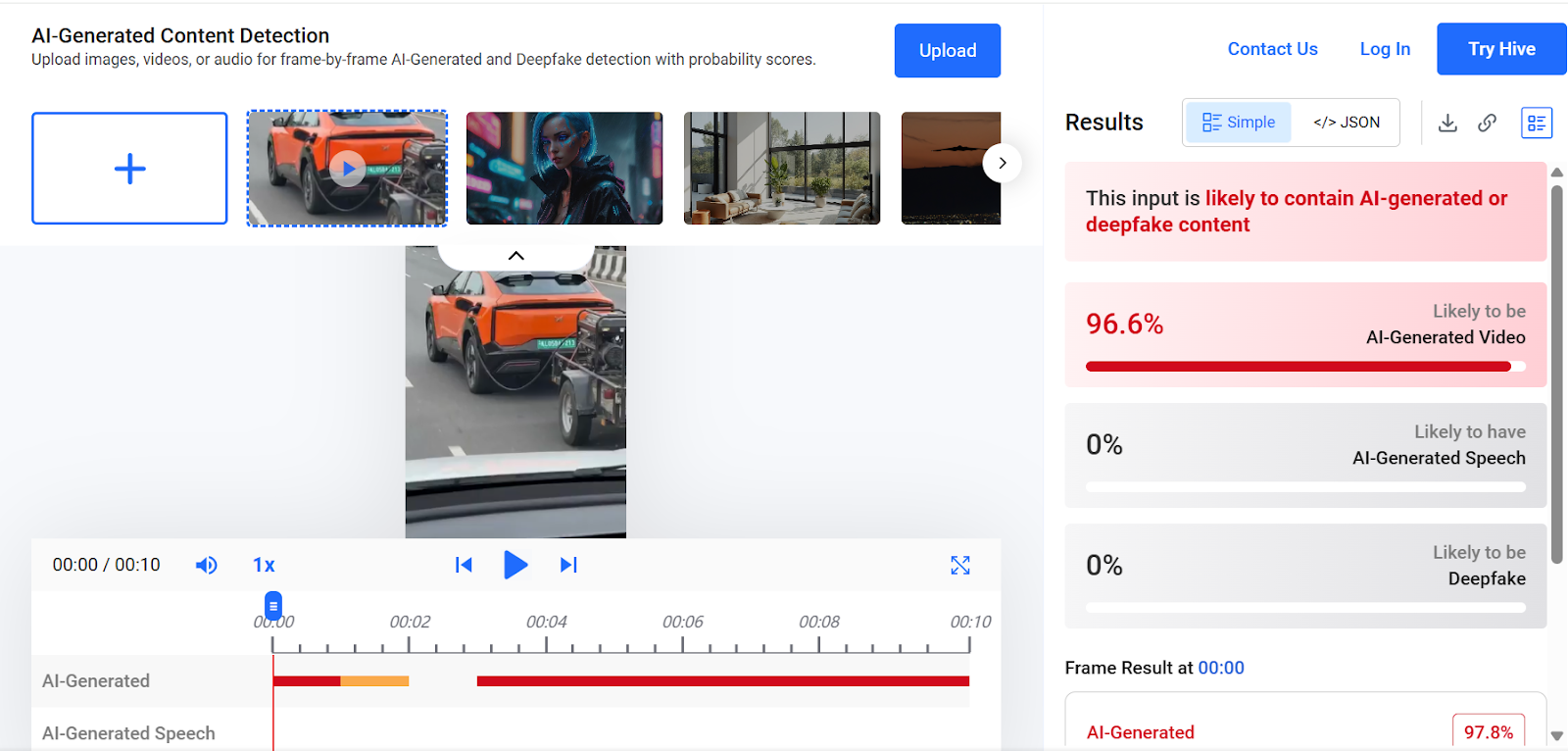

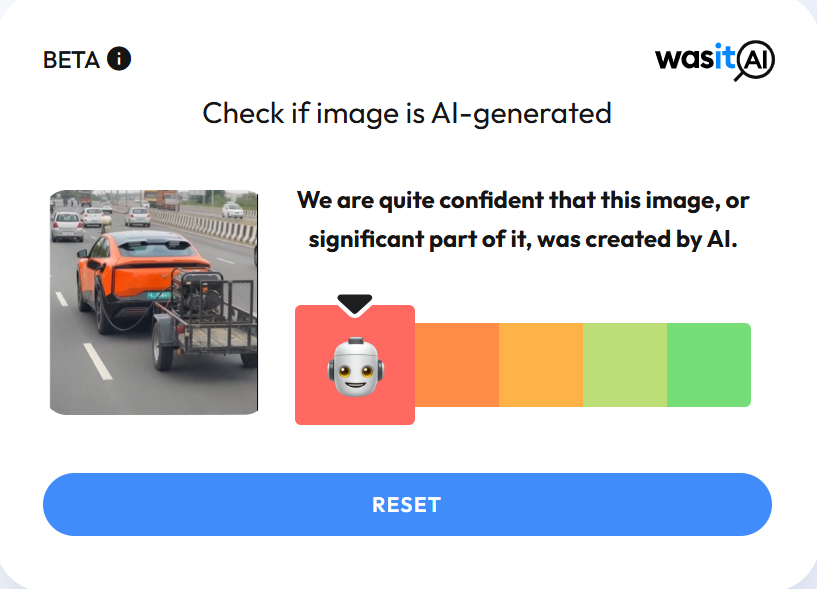

To verify the claim, we conducted a keyword search on Google. However, we found no credible reports from any reputable media organization supporting the assertion made in the viral post. A close examination of the video revealed several visual inconsistencies and unnatural elements, raising suspicion that the footage may have been generated using artificial intelligence. We then analyzed the video using the AI detection tool Hive Moderation. The results indicated a 96 percent probability that the video was AI-generated.

In the next step of our research, we scanned the video using another AI detection platform, WasItAI, which also concluded that the viral video was AI-generated.

Conclusion

Our research confirms that the viral video is not real. It has been artificially created using AI technology and is being circulated with a misleading claim.

In the intricate mazes of the digital world, where the line between reality and illusion blurs, the quest for truth becomes a Sisyphean task. The recent firestorm of rumours surrounding global pop icon Dua Lipa's visit to Rajasthan, India, is a poignant example of this modern dilemma. A single image, plucked from the continuum of time and stripped of context, became the fulcrum upon which a narrative of sexual harassment was precariously balanced. This incident, a mere droplet in the ocean of digital discourse, encapsulates the broader phenomenon of misinformation, a spectre that haunts the virtual halls of our interconnected existence.

Misinformation Incident

Amidst the ceaseless hum of social media, a claim surfaced with the tenacity of a weed in fertile soil: Dua Lipa, the three-time Grammy Award winner, had allegedly been subjected to sexual harassment during her sojourn in the historic city of Jodhpur. The evidence? A viral picture, its origins murky, accompanied by a caption that seemed to confirm the worst fears of her ardent followers. The digital populace quickly reacted, with many sharing the image, asserting the claim's veracity without pause for verification.

Unraveling the Fabric of Fake News: Fact-Checking Dua Lipa's India Experience

The narrative gained momentum through platforms of dubious credibility, such as a Twitter handle which, upon closer scrutiny by the Digital Forensics Research and Analytics Center, was revealed to be a purveyor of fake news. The very fabric of the claim began to unravel as the original photo was traced back to the official Facebook page of RVCJ Media, untainted by the allegations that had been so hastily ascribed to it. Moreover, the silence of Dua Lipa on the matter, rather than serving as a testament to the truth, inadvertently fueled the fires of speculation, a stark reminder of the paradox where the absence of denial is often misconstrued as an affirmation.

The pop star's words, shared on her Instagram account, painted a starkly different picture of her experience in India. She spoke not of fear and harassment, but of gratitude and joy, describing her trip as 'deeply meaningful' and expressing her luck to be 'within the magic' with her family. The juxtaposition of her heartfelt account with the sinister narrative constructed around her serves as a cautionary tale of the power of misinformation to distort and defile.

A Political Microcosm: Bye-Elections of Telangana

Another incident involves electoral misinformation. The political landscape of Telangana, India, bristled with anticipation as the Election Commission announced bye-elections for two Member of Legislative Council (MLC) seats. Here, too, the machinery of misinformation whirred into action, with political narratives being shaped and reshaped through partisan prisms. The electoral process, transparent in its intent, became susceptible to selective amplification, with certain facets magnified or distorted to fit entrenched political narratives. The bye-elections thus became a battleground not just for political supremacy but also for the integrity of information.

The Far-Reaching Claws of Misinformation: Fact Check

The misinformation about Dua Lipa's experience during her visit to India and the electoral misinformation in Telangana are both manifestations of a global challenge. Misinformation adapts to the contours of its environment, whether that is the gritty arena of politics or the glitzy realm of stardom. Its tentacles reach far and wide, with geopolitical implications that can destabilise regions, sow discord, and undermine the very pillars of democracy. The erosion of trust that misinformation engenders is perhaps its most insidious effect, as it chips away at the bedrock of societal cohesion and collective well-being.

Paradox of Technology

The same technological developments that have allowed the spread of misinformation also hold the keys to its containment. Artificial intelligence-powered fact-checking tools, blockchain-enabled transparency counter-measures, and comprehensive digital literacy campaigns stand as bulwarks against falsehoods. These tools, however, are not panaceas; they require the active engagement and critical thinking skills of each digital citizen to be truly effective.

Conclusion

As we stand at the cusp of the digital age, the way forward demands vigilance, collaboration, and innovation. Cultivating a digitally literate citizenry, capable of discerning the nuances of digital content, is paramount. Governments, the tech industry, media companies, and civil society must join forces in a common front, leveraging their collective expertise in the battle against misinformation. Promoting algorithmic accountability and fostering diverse information ecosystems will also be crucial in mitigating the inadvertent amplification of falsehoods.

In the end, discerning truth in the digital age is a delicate process. It requires us to be attuned to the rhythm of reality, and wary of the seductive allure of unverified claims. As we navigate this digital realm, remember that the truth is not just a destination but a journey that demands our unwavering commitment to the pursuit of what is real and what is right.

References

- https://telanganatoday.com/eci-releases-schedule-for-bye-elections-to-two-mlc-seats-in-telangana

- https://www.oneindia.com/fact-check/was-pop-singer-dua-lipa-sexually-harassed-in-rajasthan-during-her-india-trip-heres-the-truth-3718833.html?story=3

- https://www.thequint.com/news/webqoof/edited-graphic-of-dua-lipa-being-sexually-harassed-in-jodhpur-falsely-shared-fact-check

Introduction

Microsoft has unveiled an ambitious roadmap for developing a quantum supercomputer with AI features, acknowledging the transformative power of quantum computing in solving complex societal challenges. Quantum computing has the potential to revolutionise AI by enhancing its capabilities and enabling breakthroughs in different fields. This article looks at Microsoft’s announcement of its plans to develop a quantum supercomputer, the potential applications, and the implications for the future of artificial intelligence (AI). It also considers the need for regulation of quantum computing and AI, the policy considerations associated with these transformative technologies, and the potential benefits and challenges of deploying them.

What is Quantum Computing?

Quantum computing is an emerging field of computer science and technology that utilises principles from quantum mechanics to perform complex calculations and solve certain types of problems more efficiently than classical computers. While classical computers store and process information using bits, quantum computers use quantum bits or qubits.
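To make the bit-versus-qubit distinction a little more concrete, here is a minimal Python sketch. It is purely illustrative and is not drawn from Microsoft's roadmap: the names `ZERO`, `hadamard`, and `probabilities` are hypothetical helpers introduced only for this example. It models a single qubit as a pair of complex amplitudes and applies the standard Hadamard gate, which places the qubit in an equal superposition of 0 and 1.

```python
import math

# A single-qubit state is modelled as a pair of complex amplitudes (a0, a1)
# satisfying |a0|^2 + |a1|^2 = 1. (Illustrative model only.)
ZERO = (1 + 0j, 0 + 0j)  # the state |0>, the analogue of a classical bit set to 0


def hadamard(state):
    """Apply the Hadamard gate, which maps |0> to an equal superposition."""
    a0, a1 = state
    s = 1 / math.sqrt(2)
    return (s * (a0 + a1), s * (a0 - a1))


def probabilities(state):
    """Return the probabilities of measuring 0 and 1 (the Born rule)."""
    return tuple(abs(a) ** 2 for a in state)


superposed = hadamard(ZERO)
print(probabilities(ZERO))        # all probability on outcome 0
print(probabilities(superposed))  # roughly half on each outcome
```

A classical bit is always exactly 0 or 1, whereas the superposed qubit above yields either outcome with roughly equal probability when measured. Simulating many such qubits on a classical machine becomes expensive quickly, because the number of amplitudes doubles with each added qubit.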

Interconnected Future

Quantum computing and artificial intelligence (AI) are two rapidly evolving fields that have the potential to revolutionise technology and reshape various industries. Quantum computing promises to significantly expand AI’s capabilities beyond its current limitations. This section explores the interdependence of the two technologies, highlighting how integrating them could lead to profound advancements across sectors such as healthcare, finance, and cybersecurity.

- Enhancing AI Capabilities:

Quantum computing holds the promise of significantly expanding the capabilities of AI systems. Traditional computers, based on classical physics and binary logic, struggle with certain complex problems because the computational requirements grow exponentially. Quantum computing, on the other hand, leverages the principles of quantum mechanics to perform computations on quantum bits, or qubits, which can exist in a superposition of multiple states simultaneously. This superposition property, and the parallelism it allows, could potentially accelerate AI algorithms and enable more efficient processing of vast amounts of data.

- Solving Complex Problems:

The integration of quantum computing and AI has the potential to tackle complex problems that are currently beyond the reach of classical computing methods. Quantum machine learning algorithms, for example, could leverage quantum superposition and entanglement to analyse and classify large datasets more effectively. This could have significant applications in healthcare, where AI-powered quantum systems could aid in drug discovery, disease diagnosis, and personalised medicine by processing vast amounts of genomic and clinical data.

- Advancements in Finance and Optimisation:

The financial sector can benefit significantly from integrating quantum computing and AI. Quantum algorithms can be employed to optimise portfolios, improve risk analysis models, and enhance trading strategies. By harnessing the power of quantum machine learning, financial institutions can make more accurate predictions and informed decisions, leading to increased efficiency and reduced risks.

- Strengthening Cybersecurity:

Quantum computing can also play a pivotal role in bolstering cybersecurity defences. Quantum techniques can be employed to develop new cryptographic protocols that are resistant to quantum attacks. In conjunction with quantum computing, AI can further enhance cybersecurity by analysing massive amounts of network traffic and identifying potential vulnerabilities or anomalies in real time, enabling proactive threat mitigation.

- Quantum-Inspired AI:

Beyond the direct integration of quantum computing and AI, quantum-inspired algorithms are also being explored. These algorithms, designed to run on classical computers, draw inspiration from quantum principles and can improve performance in specific AI tasks. Quantum-inspired optimisation algorithms, for instance, can help solve complex optimisation problems more efficiently, enabling better resource allocation, supply chain management, and scheduling in various industries.

How Quantum Computing and AI Should Be Regulated

As quantum computing and artificial intelligence (AI) continue to advance, questions arise regarding the need for regulations to govern these technologies. The debate over regulating quantum computing and AI centres on the potential risks, the ethical implications, and the balance between innovation and societal protection.

- Assessing Potential Risks: Quantum computing and AI bring unprecedented capabilities that can significantly impact various aspects of society. However, they also pose potential risks, such as unintended consequences, privacy breaches, and algorithmic biases. Regulation can help identify and mitigate these risks, ensuring these technologies’ responsible development and deployment.

- Ethical Implications: AI and quantum computing raise ethical concerns related to privacy, bias, accountability, and the impact on human autonomy. For AI, issues such as algorithmic fairness, transparency, and decision-making accountability must be addressed. Quantum computing, with its potential to break current encryption methods, requires regulatory measures to protect sensitive information. Ethical guidelines and regulations can provide a framework to address these concerns and promote responsible innovation.

- Balancing Innovation and Regulation: Regulating quantum computing and AI involves striking a balance between fostering innovation and protecting society’s interests. Excessive regulation could stifle technological advancements, hinder research, and impede economic growth. On the other hand, a lack of regulation may lead to the proliferation of unsafe or unethical applications. A thoughtful and adaptive regulatory approach is necessary, considering the dynamic nature of these technologies and allowing for iterative improvements based on evolving understanding and risks.

- International Collaboration: Given the global nature of quantum computing and AI, international collaboration in regulation is essential. Harmonising regulatory frameworks can avoid fragmented approaches, ensure consistency, and facilitate ethical and responsible practices across borders. Collaborative efforts can also address data privacy, security, and cross-border data flow challenges, enabling a more unified and cooperative approach towards regulation.

- Regulatory Strategies: Regulatory strategies for quantum computing and AI should adopt a multidisciplinary approach involving stakeholders from academia, industry, policymakers, and the public. Key considerations include:

- Risk-based Approach: Regulations should focus on high-risk applications while leaving space for low-risk experimentation and development.

- Transparency and Explainability: AI systems should be transparent and explainable to enable accountability and address concerns about bias, discrimination, and decision-making processes.

- Privacy Protection: Regulations should safeguard individual privacy rights, especially in quantum computing, where current encryption methods may be vulnerable.

- Testing and Certification: Establishing standards for the testing and certification of AI systems can ensure their reliability, safety, and adherence to ethical principles.

- Continuous Monitoring and Adaptation: Regulatory frameworks should be dynamic, regularly reviewed, and adapted to keep pace with the evolving landscape of quantum computing and AI.

Conclusion:

Integrating quantum computing and AI holds immense potential for advancing technology across diverse domains. Quantum computing can enhance the capabilities of AI systems, enabling the solution of complex problems, accelerating data processing, and revolutionising industries such as healthcare, finance, and cybersecurity. As research and development in these fields progress, collaborative efforts among researchers, industry experts, and policymakers will be crucial in harnessing the synergies between quantum computing and AI to drive innovation and shape a transformative future.

The regulation of quantum computing and AI is a complex and ongoing discussion. Striking the right balance between fostering innovation, protecting societal interests, and addressing ethical concerns is crucial. A collaborative, multidisciplinary approach to regulation, considering international cooperation, risk assessment, transparency, privacy protection, and continuous monitoring, is necessary to ensure the responsible development and deployment of these transformative technologies.