Introduction

Cyber-attacks are a threat that no country faces alone in today's digital world; they can significantly disrupt global travel, commerce, and international relations. All of this was experienced first-hand when a cyber-attack hit Heathrow, the busiest airport in Europe, and threw its electronic check-in and baggage systems into chaos. The disruption was not confined to Heathrow: airports across Europe, including Brussels, Berlin, and Dublin, experienced delays and had to check in passengers manually for some flights, which shows how interconnected aviation has become. Though Heathrow assured passengers that the "vast majority of flights" would operate, hundreds were delayed or postponed for hours while passengers stood in queues, and flight schedules at nearly every European airport were also negatively affected.

The Anatomy of the Attack

The attack specifically targeted Muse, software from Collins Aerospace that allows multiple airlines to share check-in desks and boarding gates. The disruption, initially perceived as a technical issue, soon turned into a logistical nightmare: airlines relying on Muse had to fall back on painstaking manual steps such as hand-tagging luggage, verifying boarding passes over the phone, and manually boarding passengers. While British Airways managed to revert to a backup system, most other carriers at Heathrow and at partner airports elsewhere in Europe had to resort to improvised manual solutions.

The burden fell largely on the passengers. Stories emerged of travelers stranded on the tarmac, elderly passengers who could barely walk left without assistance, and families missing important connections. It was a reminder that in aviation, where schedules are tightly interlocked across borders, even a localized system failure can snowball into a continental-level crisis.

Cybersecurity Meets Aviation Infrastructure

In the last two decades, aviation has become one of the most digitally dependent industries in the world. From booking systems and baggage handling to navigation and air traffic control, digital systems are the invisible scaffold that supports flight operations. Though this digitalization has increased the scale of operations and improved efficiency, it has also opened many avenues for cyber threats. Attackers increasingly realize that targeting aviation is not just about money but about leverage. Interfering with the check-in system of a major hub like Heathrow causes more than financial disruption; it causes panic and makes headlines, which makes aviation an attractive target for criminal gangs and state-sponsored threat actors alike.

The Heathrow incident resembles the worldwide IT outage of July 2024, when a botched CrowdStrike update disrupted flights. Both show how brittle aviation's digital dependencies are: a single point of failure can trigger uncontrollable ripple effects spanning multiple countries. Unlike conventional cyber incidents that stay contained within corporate networks, cyber-attacks on aviation spill into the public sphere in real time and disrupt millions of lives.

Response and Coordination

Heathrow Airport initially deployed extra staff to assist with manual check-in and told passengers to check their flight status before traveling. The UK's National Cyber Security Centre (NCSC) worked with Collins Aerospace, the Department for Transport, and law enforcement agencies to investigate the extent and source of the breach. Meanwhile, the European Commission said in a statement that it was "closely following the development" of the cyber incident, while assuring passengers that no evidence of a "widespread or serious" breach had been observed.

According to passengers, the reality was quite different. Massive queues, bewildering announcements, and uncertainty over departure times created an atmosphere of chaos. The gap between the reassurances from official channels and what passengers actually experienced needs to be addressed. During such incidents, clear communication matters as much as technical restoration for retaining public trust.

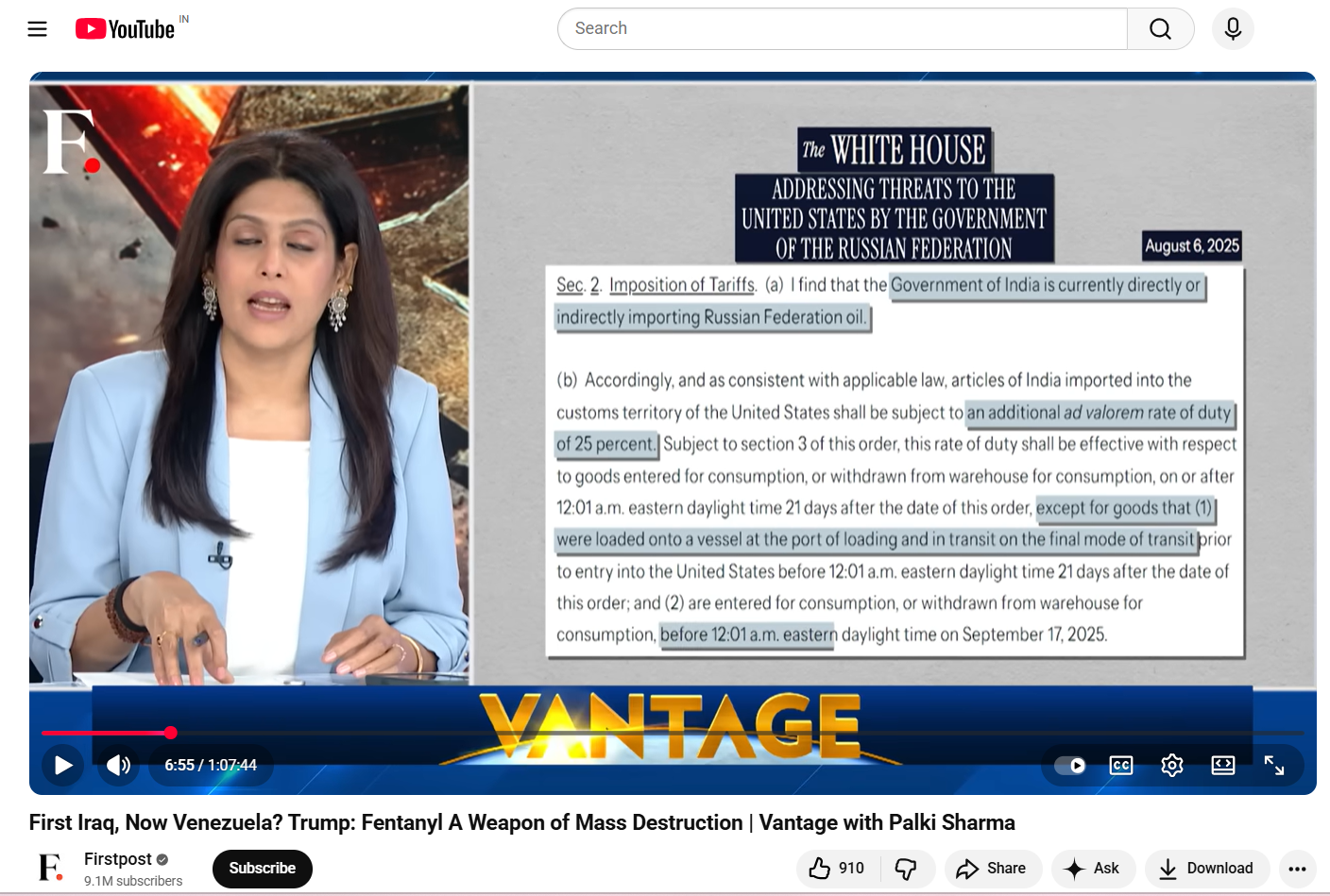

Attribution and the Shadow of Ransomware

As with many cyber-attacks, questions of attribution arose promptly. Rumours of hackers allegedly working for the Kremlin began circulating almost as soon as the attack was recognized, but cybersecurity experts justifiably advise against drawing hasty conclusions. Ransomware extortion gangs remain the most likely culprits, although state actors cannot be ruled out, especially considering Russian military activity in European airspace. Meanwhile, Collins Aerospace has refused to comment on the attack, its precise nature, or where it originated, which underscores the inherent difficulty of cyber attribution.

What is clear is that such attacks give criminals both leverage and money. In previous ransomware attacks against critical infrastructure, cybercriminal gangs have extorted millions of dollars from their victims. In aviation, the stakes are far higher: they involve not only money but also national security, diplomatic relations, and human safety.

Broader Implications for Aviation Cybersecurity

This incident raises several core resilience issues within aviation systems. Traditionally, airports and airlines placed a premium on physical security, but today digital resilience has become equally important. Systems such as Muse, which bind multiple airlines into shared infrastructure, offer efficiency but also concentrate risk. A cyber disruption in one place can cascade across dozens of carriers and multiple airports, amplifying its scale.

The case also makes redundancy and contingency planning an urgent concern. While British Airways was able to fall back on a backup system, most other airlines had no such option. Digital redundancy, whether in the form of parallel systems, isolated backups, or even AI-driven incident response frameworks, needs to become standard practice in aviation, and soon.
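The idea of parallel systems and isolated backups can be illustrated with a minimal Python sketch. It is not how Muse or any airline system actually works; the backend names, the `BackendUnavailable` exception, and the boarding pass format are all hypothetical and exist only to show a fallback chain that ends in manual processing.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional, Tuple


class BackendUnavailable(Exception):
    """Raised by a (hypothetical) check-in backend that cannot serve requests."""


@dataclass
class Backend:
    name: str                        # illustrative label, not a real product
    check_in: Callable[[str], str]   # booking reference -> boarding pass id


def check_in_with_failover(
    booking_ref: str, backends: List[Backend]
) -> Tuple[str, Optional[str]]:
    """Try each backend in priority order and return (backend name, boarding pass)."""
    for backend in backends:
        try:
            return backend.name, backend.check_in(booking_ref)
        except BackendUnavailable:
            continue  # this path is down, move on to the next one
    # Every digital path failed: hand the passenger to manual processing.
    return "manual", None


def shared_platform(booking_ref: str) -> str:
    # Simulates the shared platform being offline during an incident.
    raise BackendUnavailable("shared platform offline")


def isolated_backup(booking_ref: str) -> str:
    # Simulates an isolated backup that still works.
    return f"BP-{booking_ref}"


BACKENDS = [
    Backend("shared", shared_platform),
    Backend("backup", isolated_backup),
]
```

Calling `check_in_with_failover("ABC123", BACKENDS)` in this sketch skips the offline shared platform and is served by the backup. With only the shared platform in the list, the call falls through to the manual path, which is roughly the situation carriers without a backup faced.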

On the policy front, this incident draws attention to the need for international collaboration. Aviation is inherently transnational, and cyber incidents in this domain cannot be handled by national agencies alone. Eurocontrol, the European Commission, and cross-border cybersecurity task forces must lead the effort to ensure aviation-wide resilience.

Human Stories Amid a Digital Crisis

Beyond the technical jargon and policy responses, the human stories were perhaps the most striking thing to come out of Heathrow. Passengers spoke of hours spent queuing, of trying to reach funerals, and of being hungry and exhausted as they waited for their flights. For many, the cyber-attack was no mere headline; it was a lived reality of disruption.

These stories show that cybersecurity is not an abstract concern; it touches people's lives. In critical sectors such as aviation, one hour of disruption means missed connections for passengers, lost revenue for airlines, and immense emotional stress. Crisis management must therefore combine technical recovery with passenger care, communication, and support on the ground.

Conclusion

The cybersecurity crisis at Heathrow and other European airports underscores the threat that cyber disruption poses to modern aviation. Because airport processes are now so heavily connected, any cyber disruption, no matter how small, can affect schedules across a region or on other continents, and can even threaten lives. The events confirm a few things: resilient systems should prioritize redundancy, not just efficiency; international networking and collaboration are paramount; and communicating with the traveling public is as important as, if not more important than, the technical recovery process.

As governments, airlines, and technology providers analyse the disruption, the question is no longer whether aviation can withstand cyber threats, but how well prepared it will be to defend itself against them. The Heathrow crisis is a reminder that the stakes of cybersecurity are not just data breaches or outright theft of money but also the hijacking of the very systems that keep global mobility in motion. The aviation industry now faces the test of turning this disruption into an opportunity to fortify its digital defences and prepare for the next inevitable attack.

References