Misinformation on the Internet

Introduction

We consume news from various sources, such as news channels, social media platforms, and the Internet. In the age of the Internet and social media, misinformation, or fake news, has become a widespread concern on both.

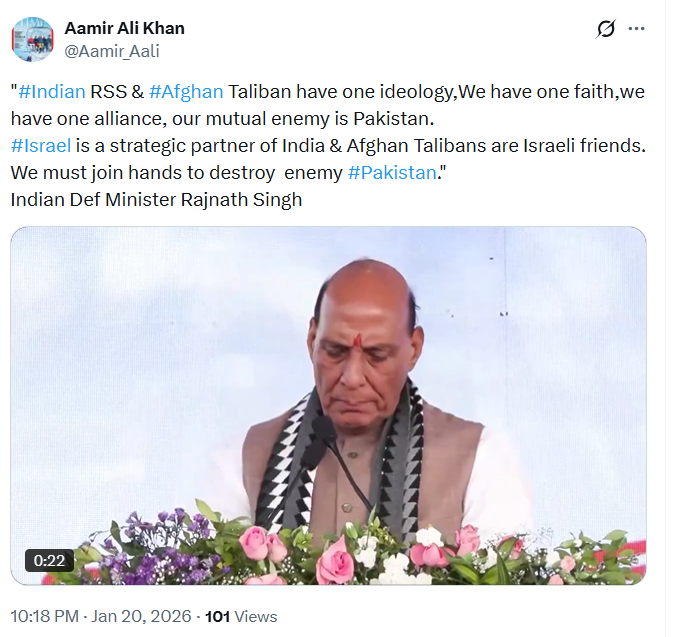

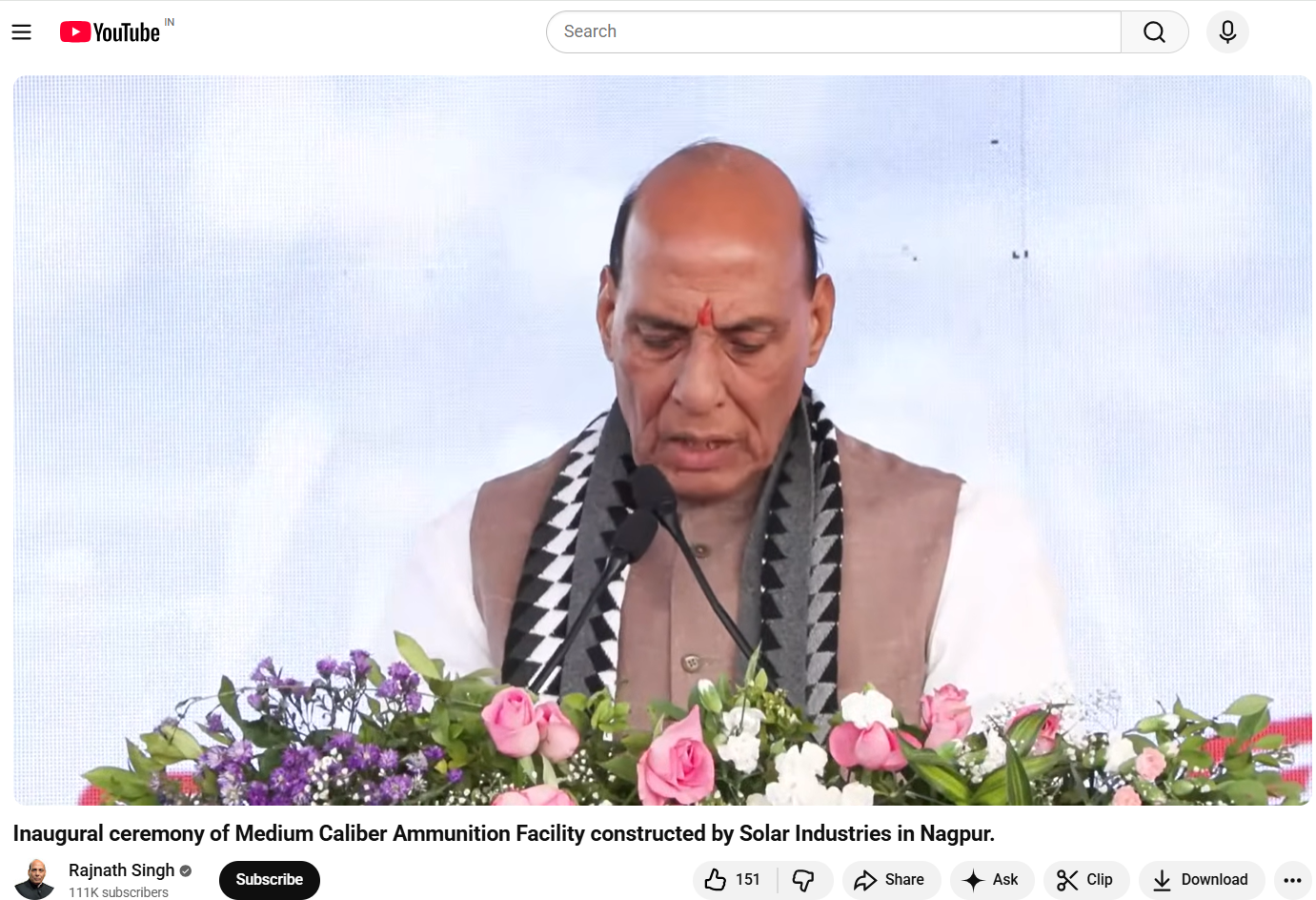

Misinformation on social media platforms

The wide availability of user-provided content on online social media platforms facilitates the spread of misinformation. Because these platforms have such a large user base, information can go viral and spread across the internet quickly. This is a serious concern because misinformation, including rumours, morphed images, unverified information, fake news, and planted stories, spreads easily and can lead to severe consequences such as public riots, lynching, communal tensions, misconceptions about facts, and defamation.

Platform-centric measures to mitigate the spread of misinformation

- Google introduced the ‘About this result’ feature, which helps users better understand search results and websites at a glance.

- During the Covid-19 pandemic, misinformation was shared widely. In April 2020, Google committed $6.5 million in funding to fact-checkers and non-profits fighting misinformation around the world, including efforts to check information related to the coronavirus and to the treatment, prevention, and transmission of Covid-19.

- YouTube also has a Medical Misinformation Policy, which prohibits content that contradicts guidance from the World Health Organization (WHO) or local health authorities.

- During the Covid-19 pandemic, major social media platforms such as Facebook and Instagram started showing awareness pop-ups that connected people to information directly from the WHO and regional authorities.

- WhatsApp limits the number of times a message can be forwarded, to curb the spread of fake news, and labels messages that have been forwarded many times. WhatsApp has also partnered with fact-checking organisations to help users access accurate information.

- Similarly, when content on Instagram is rated false or partly false, the platform either removes it or reduces its distribution by lowering its visibility in Feeds.

Fight Against Misinformation

Misinformation is rampant across the world and needs to be addressed urgently. Several developed nations have partnered with technology companies to tackle it, and with the increasing penetration of social media and the internet, it remains a global problem. Big tech companies such as Meta and Google have undertaken various initiatives worldwide. In India, Google has worked with Civil Society Organisations to pilot multiple mass-scale awareness and upskilling campaigns intended to make an impact on the ground.

How to prevent the spread of misinformation?

Conclusion

In the digital media space, misinformation is widespread. Google and social media platforms have taken proactive steps to prevent its spread. Users should also act responsibly when sharing information, which helps create a safe digital environment for everyone.