Implications of the California Transparency in Frontier Artificial Intelligence Act on Global AI Legislation

Introduction

As high-capability AI systems have spread rapidly, concerns about safety, accountability, and governance have grown worldwide. California has responded by passing the Transparency in Frontier Artificial Intelligence Act (TFAIA), the first state statute focused on "frontier" (highly capable) AI models. Most state AI statutes target harms caused by AI models through consumer protection. The TFAIA goes further and addresses the catastrophic and systemic risks that large-scale AI systems pose to society. Because California is a global technology leader, the TFAIA is positioned to significantly affect both domestic regulation and the evolution of international legal frameworks for AI, and it could influence corporate compliance practices and the emergence of global norms for AI use.

Understanding the Transparency in Frontier Artificial Intelligence Act

The TFAIA establishes a specific regulatory process for companies that develop sophisticated AI systems with societal, economic, or national security implications. Covered developers must publish a comprehensive safety and transparency framework that explains how they manage risk throughout the AI lifecycle. The Act also requires developers to notify state authorities promptly of significant safety incidents or failures involving their deployed frontier models.

A central feature of the TFAIA is its reliance on "process transparency." Rather than dictating how developers build their models, the Act holds them accountable for their internal safety governance by requiring documented safety frameworks that set out their risk assessment, mitigation, and monitoring processes. To balance openness against the protection of sensitive information, the Act gives developers limited room to withhold or redact material that would expose trade secrets, other proprietary information, or national security concerns.

Extraterritorial Impact on Global AI Developers

Although the TFAIA is a state law, its effects reach far beyond California. Many of the largest AI companies have facilities, research labs, or customers in the state, so compliance with the TFAIA is a commercial necessity for them. Rather than maintain duplicate compliance programs for different jurisdictions, these companies are likely to adopt a single, unified compliance model built around the Act's requirements.

The same pattern has played out in other regulatory areas, such as data protection, where one jurisdiction's rules effectively became the global compliance benchmark. The TFAIA could similarly become a global standard for transparency in frontier AI, shaping how companies structure their governance worldwide, even in jurisdictions that have no comparable regulations.

Influence on International AI Regulatory Models

The TFAIA brings a distinctive approach to global debates about AI regulation. Whereas other legislation assigns different levels of risk according to the type of AI application, the TFAIA specifically targets the most capable, high-impact models. Other nations may find value in this capability-based, tiered model and adopt it in their own AI regulation, placing the strictest obligations on the systems with the greatest potential for critical harm.

The TFAIA may also guide policymakers in other countries by showing how regulation can build on existing standards and industry best practices, improving interoperability and potentially lowering regulatory barriers to cross-border AI innovation.

Corporate Governance, Compliance Costs, and Competition

From an industry perspective, the Act substantially changes how companies govern themselves. Developers must now conduct thorough risk assessments and red-teaming exercises, maintain incident response protocols, and provide board-level oversight of AI safety and regulatory compliance. Involving more people in this process strengthens accountability, but it also imposes significant costs on everyone involved.

Large technology companies will absorb this compliance burden more easily than smaller firms and start-ups, which may further entrench their dominance in frontier AI development. Unless some form of proportional or scaled compliance mechanism emerges, smaller and newer developers may effectively be blocked from entering the market. These dynamics raise global questions of innovation policy and competition law that regulators will need to address alongside AI safety concerns.

Transparency, Public Trust, and Accountability

By requiring public disclosure of developers' safety frameworks, the TFAIA strengthens the ability of citizens, researchers, and journalists to scrutinize how AI is developed and used. These disclosures let outside observers critically evaluate corporate claims of responsible AI development. Over time, such scrutiny could increase public trust in regulated AI systems and expose companies with weak risk management practices.

However, the value of this transparency depends on the quality and comparability of what is disclosed. Many current disclosures are either too vague or too complex to support meaningful oversight. Policymakers should push for clearer guidance or standardized disclosure templates, both to make disclosures usable for public accountability and to promote consistency across countries.

Conclusion

The Transparency in Frontier Artificial Intelligence Act is a transformative development in AI regulation, aimed specifically at the new risk profile of the latest generation of advanced, highly capable AI systems. The law is likely to have global impact because it will change how technology companies operate, how regulatory frameworks are designed, and how standards for overseeing frontier AI are developed. Rather than relying solely on technical mandates, the Act regulates these systems through transparency. As other jurisdictions confront the same challenges the United States faces with this new generation of AI, California's approach will likely serve as a model for future AI legislation and help build a more unified and responsible international AI regulatory framework.

Related Blogs

Executive Summary

In September 2025, a joint operation led by the U.S. Secret Service, in coordination with the New York Police Department (NYPD) and other federal partners, dismantled an illicit telecommunications network of unprecedented scale and sophistication operating in the New York tri-state area. The timing of the takedown, occurring just hours before the commencement of high-level proceedings at the United Nations General Assembly (UNGA), underscored the imminent and severe nature of the threat posed by this clandestine infrastructure. This report provides a comprehensive analysis of the operation, the technology involved, the multi-domain threats it presented, the complex ecosystem of actors it served, and the profound strategic implications for U.S. national and homeland security.

The seized assets included over 300 SIM servers and more than 100,000 active Subscriber Identity Module (SIM) cards, constituting a massive "SIM farm." This infrastructure functioned as a parallel, rogue telecommunications system, capable of a wide spectrum of malicious activities. The threat was multi-faceted, representing a dangerous convergence of capabilities that spanned the domains of cybercrime, physical infrastructure disruption, and espionage.

The network possessed the technical capacity to launch a catastrophic denial-of-service (DoS) attack against New York City's cellular infrastructure, potentially disabling emergency communications and creating widespread chaos.

Forensic analysis has confirmed the network's primary function as a secure, anonymous communications platform for a range of illicit actors. Early findings indicate its use by transnational criminal organizations (TCOs)—including drug cartels and human trafficking rings—and nation-state actors. This shared use of a single, powerful infrastructure points to the emergence of a "criminal-as-a-service" model, where state-level capabilities are made available to non-state actors, blurring the lines between traditional crime and state-sponsored operations and vastly complicating attribution and law enforcement response. The network's strategic placement within a 35-mile radius of the UN headquarters during the General Assembly also highlights its inherent potential as a tool for espionage, surveillance, and influence operations against high-value diplomatic targets.

The UNGA SIM farm takedown serves as a watershed moment, exposing the acute vulnerability of the "invisible" critical infrastructure that underpins modern society. The incident demonstrates a strategic shift by adversaries from targeting individual endpoints to compromising the core of our communications networks. This report concludes that this event necessitates a fundamental re-evaluation of national security priorities, mandating the development of new investigative paradigms to address convergent threats, the strengthening of public-private partnerships with the telecommunications sector, and the urgent consideration of updated legal and regulatory frameworks to counter the weaponization of telecommunications technology.

Operation Silent Signal: The Anatomy of a Takedown

The disruption of the New York tri-state SIM farm was not the result of a chance discovery but the culmination of a deliberate and sophisticated protective intelligence investigation. The operation, codenamed for the purposes of this analysis as "Operation Silent Signal," showcases a modern, intelligence-led approach to law enforcement, where the meticulous tracing of seemingly tactical threats led to the neutralization of a strategic national security vulnerability. The anatomy of this takedown reveals the professionalism of both the criminal enterprise and the coordinated multi-agency response required to dismantle it.

The Trigger: From Swatting to a National Security Threat

The investigation's genesis lies in a series of targeted, anonymous threats directed at senior U.S. government officials in the spring of 2025.1 These were not idle threats but took the form of sophisticated harassment campaigns, including "swatting" attacks.1 Swatting is a dangerous criminal tactic wherein an individual makes a false report of a serious emergency—such as a hostage situation, bomb threat, or active shooter—at a target's address. The goal is to deceive law enforcement into dispatching a large, heavily armed response, such as a SWAT team, creating a situation that is not only terrifying for the victim but also carries a high risk of unintended violence and diverts critical emergency resources.1

These attacks, which targeted lawmakers both within and outside of New York, represented a direct challenge to the protective mission of the U.S. Secret Service.1 In response, the agency's newly established Advanced Threat Interdiction Unit—a specialized section created to disrupt the most significant and imminent threats to its protectees—initiated a monthlong investigation.4 The unit's mandate is prevention, and by tracing the origin of these anonymous, fraudulent calls, investigators began to uncover a common technological backbone.6 What began as an inquiry into acts of intimidation against individuals quickly escalated as it became clear that the tools used were not those of a lone actor but were components of a vast, centralized infrastructure. This progression from a tactical law enforcement problem (harassment of officials) to a strategic national security issue (the discovery of a rogue telecom network) exemplifies a critical shift in the modern threat landscape. The infrastructure enabling these threats was not merely a tool for crime but a weaponized system capable of far greater disruption, and its discovery compelled an immediate and large-scale response.

Operational Execution: A Coordinated Multi-Agency Response

Recognizing the multifaceted nature of the threat, the Secret Service orchestrated a comprehensive, multi-agency operation. The complexity of the network—spanning physical locations, cyber infrastructure, and potential links to foreign intelligence—necessitated a collaborative effort that drew upon the unique capabilities of federal, state, and local partners. The core operational team included the U.S. Secret Service and the NYPD, augmented by crucial technical and investigative support from the Department of Homeland Security's Homeland Security Investigations (HSI), the Department of Justice (DOJ), and the Office of the Director of National Intelligence (ODNI).1 This coalition highlights the recognition that the threat was not confined to a single jurisdiction or domain but represented a convergent danger requiring expertise in physical security, cyber forensics, criminal investigation, and national intelligence analysis.

The timing of the operation's climax was critical. The final seizures and dismantling of the network were concluded just hours before President Donald Trump was scheduled to address the UN General Assembly, a period of heightened security in New York City with nearly 150 world leaders in attendance.4 This deliberate timing indicates that investigators assessed the network as posing a clear, present, and imminent danger, particularly given its potential to disrupt communications during such a high-profile international event.5

The physical raids targeted at least five separate sites across the New York tri-state area, revealing a distributed and carefully concealed operational footprint.1 The operators chose their locations with a clear intent to evade detection, housing the sophisticated equipment in nondescript, abandoned apartment buildings and other properties.1 These locations, which included sites in Armonk, New York; Greenwich, Connecticut; Queens, New York; and parts of New Jersey, were all situated within a strategic 35-mile radius of the UN headquarters in Manhattan.3 This geographic dispersal was not accidental. It created a resilient, decentralized network that could not be neutralized by a single raid, while simultaneously forming a "circle around New York City's cellular network infrastructure," positioning the SIM farm for maximum potential impact on its target.3 This level of foresight in operational security and strategic placement points to a highly professional and disciplined organization, employing tradecraft more commonly associated with intelligence agencies than with typical criminal enterprises.
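The 35-mile figure can be sanity-checked with the haversine great-circle formula. This is a minimal sketch that assumes approximate town-center coordinates for UN headquarters, Armonk, and Greenwich (the exact raid addresses are not given here), so the distances are rough; Queens and the unnamed New Jersey sites are left out. The names `haversine_miles`, `within_radius`, `UN_HQ`, and `SITES` are illustrative.

```python
import math

# Mean Earth radius in statute miles (a standard spherical approximation).
EARTH_RADIUS_MILES = 3958.8


def haversine_miles(lat1: float, lon1: float, lat2: float, lon2: float) -> float:
    """Great-circle distance in miles between two points given in decimal degrees."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = math.radians(lat2 - lat1)
    dlmb = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2
    return 2 * EARTH_RADIUS_MILES * math.asin(math.sqrt(a))


# Assumed, approximate coordinates: town centers, not the actual raid sites.
UN_HQ = (40.7489, -73.9680)
SITES = {
    "Armonk, NY": (41.1265, -73.7140),
    "Greenwich, CT": (41.0262, -73.6282),
}


def within_radius(sites, center, radius_miles):
    """Map each site to (distance in miles rounded to 0.1, whether it lies inside the radius)."""
    result = {}
    for name, (lat, lon) in sites.items():
        d = haversine_miles(center[0], center[1], lat, lon)
        result[name] = (round(d, 1), d <= radius_miles)
    return result


if __name__ == "__main__":
    for name, (dist, inside) in within_radius(SITES, UN_HQ, 35.0).items():
        print(f"{name}: {dist} mi, inside 35-mile radius: {inside}")
```

With these assumed coordinates, Armonk comes out at roughly 29 miles and Greenwich at roughly 26 miles from UN headquarters, consistent with the reported radius.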

The Cache: An Unprecedented Seizure

The material recovered during the raids constituted what officials described as the most extensive telecommunications threat ever discovered on American soil and the largest seizure of its kind.1 The sheer scale of the hardware and its operational readiness painted a stark picture of a well-funded, professional, and rapidly expanding enterprise. The inventory of seized assets included:

- Over 300 Co-located SIM Servers: These devices are the core of the SIM farm, acting as hardware gateways that can house and manage thousands of SIM cards simultaneously. They interface with the internet via Voice over IP (VoIP) and with the cellular network via the installed SIMs, effectively bridging the two systems.4 Photos released by the Secret Service show racks of these servers, laden with antennas and SIM card slots, indicative of a commercial-grade, scalable operation.10

- Over 100,000 Active SIM Cards: The massive number of active SIM cards provided the network with its immense capacity for communication and disruption. This volume allowed operators to rotate through numbers frequently to avoid detection by mobile carriers and to generate the traffic necessary for a large-scale DoS attack.4 Some of the recovered SIM cards were confirmed to have been produced by MobileX, a wireless provider whose CEO pledged full cooperation with the investigation.9 Critically, investigators also found large stockpiles of additional SIM cards ready for deployment, suggesting the operators were in the process of doubling or even tripling the network's capacity.4 This indicates that the network was not a static asset but a growing infrastructure intended for long-term use.

- Ancillary Criminal Materiel: The discovery of 80 grams of cocaine and illegal firearms at the server sites provided unequivocal evidence of the network's direct connection to traditional, violent organized crime.1 This finding is crucial because it dissolves any ambiguity about the nature of the operators. They were not merely white-collar tech criminals engaged in telecommunications fraud; they were part of a broader criminal ecosystem involved in narcotics trafficking and armed crime. The presence of these items demonstrates a deep and tangible convergence of cybercrime and physical-world criminality.
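The number rotation mentioned in the SIM card entry is aimed at carrier-side screening. Carriers fighting SIM-box fraud commonly profile call detail records (CDRs) for traits such as a SIM that almost only originates calls, dials a stream of different numbers, and never moves between cells. The sketch below illustrates that idea only; the `CallRecord` layout, the `flag_sim_box_candidates` function, and every threshold are hypothetical and do not describe any carrier's actual system.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class CallRecord:
    """Hypothetical, simplified call detail record (CDR)."""
    sim_id: str     # identifier of the SIM that handled the call
    outgoing: bool  # True if this SIM originated the call
    peer: str       # the other party's number
    cell_id: str    # serving cell when the call was made


# Hypothetical thresholds for illustration only.
MIN_CALLS = 50                 # ignore SIMs with too little history to judge
MIN_OUTGOING_SHARE = 0.95      # SIM boxes almost never receive calls
MIN_DISTINCT_PEER_SHARE = 0.8  # most outgoing calls go to different numbers
MAX_CELLS = 1                  # the SIM never moves between cells


def flag_sim_box_candidates(records):
    """Return the sorted IDs of SIMs whose CDRs match all three heuristics."""
    stats = defaultdict(lambda: {"out": 0, "in": 0, "peers": set(), "cells": set()})
    for rec in records:
        s = stats[rec.sim_id]
        if rec.outgoing:
            s["out"] += 1
            s["peers"].add(rec.peer)
        else:
            s["in"] += 1
        s["cells"].add(rec.cell_id)

    flagged = []
    for sim_id, s in stats.items():
        total = s["out"] + s["in"]
        if total < MIN_CALLS or s["out"] == 0:
            continue
        outgoing_share = s["out"] / total
        distinct_peer_share = len(s["peers"]) / s["out"]
        if (outgoing_share >= MIN_OUTGOING_SHARE
                and distinct_peer_share >= MIN_DISTINCT_PEER_SHARE
                and len(s["cells"]) <= MAX_CELLS):
            flagged.append(sim_id)
    return sorted(flagged)
```

Note the `MIN_CALLS` guard: a SIM that is rotated out after only a handful of calls never accumulates enough history to be judged, which illustrates why frequent rotation across a very large SIM pool blunts simple per-SIM heuristics.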

Investigation Status: The Forensic Challenge and the Hunt for Operators

In the immediate aftermath of the takedown, the primary objective of law enforcement was the neutralization of the infrastructural threat. Consequently, officials announced that while the network had been dismantled and no longer posed a danger, no arrests had yet been made.4 This operational sequence—prioritizing threat mitigation over immediate arrests—is common in complex national security investigations where the paramount concern is preventing a potential catastrophic event. Officials have indicated, however, that arrests could be forthcoming as the forensic phase of the investigation yields more evidence about the network's operators and users.4

The central focus of the ongoing investigation is the monumental task of conducting a forensic analysis of the more than 100,000 seized SIM cards.7 This effort is far more complex than a standard digital forensics case. As one official noted, it is akin to analyzing the entire contents of 100,000 separate cell phones.1 Investigators must meticulously comb through call detail records, text messages, data usage, and any other stored information to map the network's communications, identify its specific users, and uncover evidence of plots or criminal conspiracies. This process is expected to be lengthy and resource-intensive but holds the key to attributing the network's operation to specific individuals, criminal organizations, and potentially, foreign governments. The public statements from the Secret Service serve as a clear message to the operators: the physical network has been dismantled, and a methodical digital hunt for those behind it is now underway.4
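One early step in mapping communications of this kind is to reduce the records to pairs of communicating identifiers and group them into clusters of parties linked directly or indirectly. The sketch below does this with a union-find (disjoint-set) structure. It is an illustration under stated assumptions, not the investigators' method: the `(party_a, party_b)` input format and the names `DisjointSet` and `communication_clusters` are hypothetical, and a real analysis would likely also weigh timing, volume, and direction.

```python
from collections import defaultdict


class DisjointSet:
    """Union-find over hashable identifiers, with path compression and union by size."""

    def __init__(self):
        self.parent = {}
        self.size = {}

    def find(self, x):
        # Register unseen identifiers as singleton sets.
        if x not in self.parent:
            self.parent[x] = x
            self.size[x] = 1
        root = x
        while self.parent[root] != root:
            root = self.parent[root]
        # Path compression: re-point every node on the path straight at the root.
        while self.parent[x] != root:
            nxt = self.parent[x]
            self.parent[x] = root
            x = nxt
        return root

    def union(self, a, b):
        ra, rb = self.find(a), self.find(b)
        if ra == rb:
            return
        if self.size[ra] < self.size[rb]:
            ra, rb = rb, ra
        # Attach the smaller tree under the larger one.
        self.parent[rb] = ra
        self.size[ra] += self.size[rb]


def communication_clusters(contacts):
    """Group identifiers linked, directly or indirectly, by at least one
    contact pair; largest clusters first."""
    ds = DisjointSet()
    for a, b in contacts:
        ds.union(a, b)
    clusters = defaultdict(set)
    for node in list(ds.parent):
        clusters[ds.find(node)].add(node)
    return sorted(clusters.values(), key=len, reverse=True)
```

For example, `communication_clusters([("A", "B"), ("B", "C"), ("D", "E")])` returns the clusters `{"A", "B", "C"}` and `{"D", "E"}`, largest first. Union-find keeps this near-linear in the number of records, which matters at the scale of 100,000 SIMs.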

The Technology of Disruption: Deconstructing the SIM Farm

To fully grasp the gravity of the threat neutralized in New York, it is essential to understand the technology at its core. The seized network was not a simple collection of phones but a sophisticated, parallel telecommunications infrastructure. Its design represents a significant evolution, weaponizing technologies originally developed for financial fraud into a multi-purpose tool for disruption, clandestine communication, and espionage. A clear distinction must be made between this hardware-based system and the more commonly understood social engineering tactic of SIM swapping.

SIM Box vs. SIM Swap: Clarifying the Technology

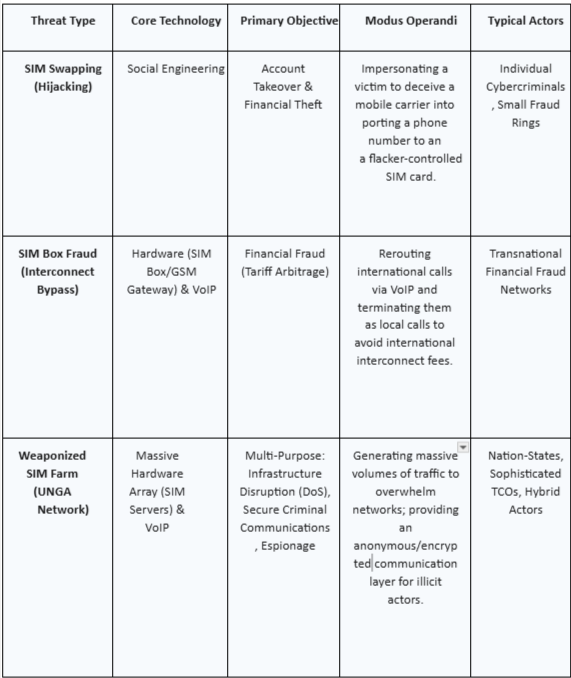

Public discourse often conflates various forms of SIM-based fraud. However, the technology and threat model of the UNGA network are fundamentally different from those of SIM swapping. Understanding this distinction is critical for developing appropriate countermeasures.

- SIM Swapping (SIM Hijacking): This is a form of identity theft that targets an individual's legitimate mobile phone account. The attacker uses social engineering—impersonating the victim and leveraging stolen personal information—to deceive a mobile carrier's customer service representative into transferring the victim's phone number to a new SIM card controlled by the attacker.18 The primary objective is typically financial. Once in control of the phone number, the attacker can intercept one-time passwords and two-factor authentication (2FA) codes sent via SMS, allowing them to gain unauthorized access to the victim's bank accounts, cryptocurrency wallets, email, and social media profiles.19 It is an attack on an individual account, not on the network infrastructure itself.

- SIM Box (SIM Farm/GSM Gateway): This is a hardware-based system that does not hijack existing numbers but instead uses a massive pool of its own SIM cards to manipulate telecommunications networks.23 A SIM box, or a larger-scale SIM farm, is a device that holds numerous SIM cards and connects to the internet. Its original and most common illicit use is for "interconnect bypass fraud." In this scheme, international calls are routed over the internet (using VoIP) to the SIM box located in the destination country. The SIM box then uses one of its local SIM cards to place the final leg of the call, making it appear as a domestic call to the local carrier.23 This allows the fraudster to bypass the expensive international termination fees charged by carriers, causing billions of dollars in revenue losses to the telecom industry annually.23 The UNGA network represents a massive, weaponized evolution of this SIM box concept, repurposing it from a tool of financial arbitrage to a platform for national security threats.

The following table provides a clear typology of these distinct threats, clarifying their core technologies, objectives, and modus operandi. This framework is essential for understanding why the UNGA network constituted a threat of an entirely different order of magnitude than a typical SIM swapping scheme.

Architecture of the Seized Network: A Parallel Telecom System

The network dismantled by the Secret Service was, in effect, a private, rogue telecommunications company operating in the shadows. Its architecture was designed for scale, anonymity, and impact, leveraging commercially available technologies in a novel and malicious configuration. The core components functioned as a cohesive system to inject massive amounts of untraceable traffic into the public cellular network.

The system was built around more than 300 SIM servers, which are specialized pieces of hardware designed to manage large banks of SIM cards.4 These servers, functioning as "banks of mock cellphones," were connected to the internet, allowing them to receive commands and data from operators located anywhere in the world.7 The operators would route calls or text messages via VoIP to these servers. Upon receiving the VoIP data, the server would select one of the thousands of installed SIM cards and use it to place a call or send a text through the local cellular network, as if it were an ordinary mobile phone.11

This architecture provided several powerful capabilities:

- Massive Scale and Automation: With over 100,000 SIM cards at its disposal, the network could generate an enormous volume of traffic. The system was automated, allowing operators to manage the entire farm remotely and programmatically rotate through SIM cards to avoid detection algorithms used by carriers to flag suspicious activity.23 The estimated capacity to send up to 30 million text messages per minute highlights a capability far beyond simple fraud, enabling city-scale disruption or mass-messaging campaigns.1

- Anonymity and Spoofing: A key feature of this architecture is the ability to mask the true origin of communications. Calls and texts originating from the SIM farm would display the local phone number of the specific SIM card used for the final leg of the transmission, a technique known as caller ID spoofing.23 This makes the communication appear legitimate and local, bypassing security checks and making it nearly impossible for the recipient or law enforcement to trace it back to its actual international or VoIP source.

- Encrypted and Clandestine Communications: By controlling both the internet-based (VoIP) and cellular-based portions of the communication channel, the operators could offer a highly secure and anonymous communication service to their clients.5 For criminal organizations or intelligence agencies, this provided a bespoke network, physically located within the target country but logically isolated from conventional surveillance, offering a layer of operational security superior to relying on commercial encrypted applications.
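The reported capacity figures can be sanity-checked with simple division. This sketch assumes the load is spread evenly across devices, which is a simplification; because the text gives lower bounds ("over 300" servers, "more than 100,000" SIMs), the results are approximate. The function name `per_unit_rates` is illustrative.

```python
def per_unit_rates(texts_per_minute: float, sims: int, servers: int) -> dict:
    """Split a network-wide messaging rate evenly across SIMs and servers
    (the even split is a simplifying assumption)."""
    per_sim_per_minute = texts_per_minute / sims
    return {
        "per_sim_per_minute": per_sim_per_minute,
        "per_sim_per_second": per_sim_per_minute / 60,
        "sims_per_server": sims / servers,
        "per_server_per_second": texts_per_minute / servers / 60,
    }


if __name__ == "__main__":
    # Figures as reported in the text: 30 million texts/minute, 100,000 SIMs, 300 servers.
    for name, value in per_unit_rates(30_000_000, 100_000, 300).items():
        print(f"{name}: {value:,.1f}")
```

The result is about 300 texts per SIM per minute (five per second) and roughly 333 SIMs per server, far beyond what a person typing on a phone would send, which is why automation across a large SIM pool was essential to reach city-scale volume.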

The Economics of Illicit Telecommunications

The sheer scale and sophistication of the UNGA network point to a significant financial investment and a highly organized, professional operation. Officials repeatedly emphasized that this was not an amateur endeavor, describing it as a "well-organized and well-funded" enterprise that cost millions of dollars in hardware and recurring SIM card costs alone.4 This level of capital outlay suggests either the backing of a nation-state or a highly profitable "criminal infrastructure-as-a-service" business model.

In such a model, the network's operators would not necessarily be the end-users of the malicious activity. Instead, they would function as a utility, leasing the network's capabilities—be it for launching a DoS attack, sending mass phishing texts, or providing secure communication channels—to a variety of clients. These clients could range from nation-state intelligence agencies to transnational drug cartels, each paying for a specific service. This business model is highly attractive to illicit actors. It allows the operators to generate substantial revenue to cover their high operational costs and turn a profit, while providing clients with access to sophisticated capabilities without the need to build or maintain the infrastructure themselves. The discovery of drugs and firearms at the sites further suggests that the operators may have been a TCO themselves, using the network for their own activities while also selling access to others as a diversified revenue stream.1 The significant financial backing required for such an operation makes it a formidable challenge for law enforcement, as it implies a resilient and resourceful adversary.

The Threat Matrix: A Multi-Domain Assessment

The UNGA SIM farm was not a single-purpose tool but a versatile platform capable of launching a spectrum of attacks across multiple domains. Its potential impact ranged from direct, physical-world disruption of critical infrastructure to enabling clandestine criminal and espionage activities. A comprehensive risk assessment requires dissecting this threat matrix into its distinct but interconnected components: the potential for kinetic-cyber effects, its role as a haven for transnational crime, and its utility as a vector for foreign intelligence operations.

Kinetic-Cyber Effects: Denial-of-Service against Critical Infrastructure

The most immediate and alarming threat posed by the network was its capacity to execute a large-scale denial-of-service (DoS) attack against the cellular infrastructure of New York City.5 This capability moves beyond the realm of traditional cybercrime, which targets data, and into the domain of kinetic-cyber effects, which target the availability of physical infrastructure. Officials warned that the system could "disable cell phone towers," "jam 911 calls," and effectively "shut down the cellular network," creating a communications blackout in one of the world's most critical urban centers.1

The technical execution of such an attack leverages the network's immense scale. A cellular network's capacity is finite; each cell tower can only handle a certain number of simultaneous connections and a specific volume of traffic.31 A DoS attack from the SIM farm would involve programming all 100,000-plus SIM cards to simultaneously attempt to connect to nearby cell towers, flooding them with registration requests, junk calls, or data packets.11 This sudden, massive influx of illegitimate traffic would consume the towers' available resources—including processing power and radio frequency channels—saturating their capacity and leaving no room for legitimate users.31
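The saturation effect can be illustrated with the Erlang B formula, the classic teletraffic model for the probability that a new call finds every channel busy. This is a swapped-in textbook model, not the investigators' analysis: it assumes Poisson call arrivals and that blocked calls are simply lost, treats attack traffic as extra voice load, and ignores the signalling and data channels a registration flood would actually hit. The channel count and traffic figures below are hypothetical.

```python
def erlang_b(offered_erlangs: float, channels: int) -> float:
    """Probability that a new call is blocked when `offered_erlangs` of traffic
    is offered to `channels` circuits (Erlang B, via the standard recursion
    B(A, 0) = 1, B(A, n) = A*B(A, n-1) / (n + A*B(A, n-1)))."""
    if offered_erlangs < 0 or channels < 0:
        raise ValueError("traffic and channel count must be non-negative")
    blocking = 1.0  # with zero channels, every attempt is blocked
    for n in range(1, channels + 1):
        blocking = offered_erlangs * blocking / (n + offered_erlangs * blocking)
    return blocking


# Hypothetical cell sector: 50 voice channels, 30 Erlangs of legitimate traffic.
CHANNELS = 50
LEGITIMATE_LOAD = 30.0
ATTACK_LOAD = 100.0  # hypothetical extra load injected by nearby SIM-farm devices

if __name__ == "__main__":
    before = erlang_b(LEGITIMATE_LOAD, CHANNELS)
    after = erlang_b(LEGITIMATE_LOAD + ATTACK_LOAD, CHANNELS)
    print(f"blocking without attack: {before:.4%}")
    print(f"blocking under attack:   {after:.1%}")
```

Because the channels are shared, the blocking probability applies to every caller in the sector. In this toy model it rises from well under 1% to more than 60% of all attempts: once offered traffic far exceeds capacity, at least 1 − 50/130 of attempts must fail no matter how calls are scheduled.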

The real-world impact of such a cellular blackout would be catastrophic, particularly during a high-security event like the UNGA. Legitimate users would be unable to make calls, send texts, or access data. This would cripple the ability of the public to call 911 or receive emergency alerts. It would also severely hamper the communications of first responders—police, fire, and emergency medical services—disrupting their command-and-control capabilities during a potential crisis. The potential for chaos led officials to draw parallels to the spontaneous network collapses that occurred under extreme strain following the September 11th attacks and the Boston Marathon bombing, but with a key difference: this would be a deliberate, malicious, and targeted event.7 The ability to create such widespread disruption using relatively accessible telecommunications technology represents a new and dangerous form of asymmetric threat against urban infrastructure.

A Haven for Criminals: Secure Communications for Transnational Crime

Beyond its disruptive potential, the SIM farm's primary demonstrated use was as a clandestine communications network for criminal enterprises.5 Early forensic analysis confirmed that the system was actively used to "facilitate anonymous, encrypted communication between potential threat actors and criminal enterprises".5 This capability is of immense value to TCOs, whose survival and success depend on their ability to communicate securely and evade law enforcement surveillance.

Officials specifically identified users of the network as including members of known organized crime gangs, drug cartels, and human trafficking rings.1 These groups have long sought robust and untraceable communication methods. While many TCOs leverage commercially available end-to-end encrypted messaging apps like WhatsApp, Signal, or Telegram, these platforms are not without vulnerabilities.37 Law enforcement can still obtain metadata (who is talking to whom, when, and for how long), and the applications themselves are frequent targets for device-level exploits that can compromise communications.

The UNGA SIM farm offered a superior alternative: a bespoke, private network. By controlling the entire communication chain—from the VoIP origination to the local cellular termination—the operators provided a service that was logically insulated from conventional wiretapping and surveillance techniques. For an organization like the Sinaloa Cartel, which has a documented history of building its own sophisticated, encrypted radio networks to maintain operational security, access to such a service within the United States would be a significant strategic asset.39 Similarly, human trafficking networks, which rely on digital platforms for recruitment and coordination, would benefit immensely from an anonymous communication channel to manage their illicit operations.42 The SIM farm, therefore, was not just a tool; it was a critical piece of enabling infrastructure for the most dangerous criminal organizations operating in the U.S.

The Espionage Vector: A Tool for Foreign Intelligence

The network's high level of sophistication, significant financial backing, and strategic placement in close proximity to the UN General Assembly strongly point to its potential use as a tool for espionage and foreign intelligence gathering.8 The UNGA is a prime target for intelligence activities, an event former officials have dubbed the "Super Bowl of espionage," where delegations from around the world converge in one location.45 A powerful, clandestine communications network operating nearby during this period presents a range of opportunities for a hostile intelligence service.

Cybersecurity expert Anthony J. Ferrante, a former White House and FBI official, stated unequivocally, "My instinct is this is espionage".8 The potential espionage applications of the network are varied:

- Surveillance and Interception: The hardware could potentially be configured to intercept or eavesdrop on the communications of high-value targets, such as diplomats or officials attending the UNGA. Given its ability to interact directly with the local cellular environment, experts have noted its potential for cloning devices as well.11

- Clandestine Communications: The network could serve as a secure "covert channel" for foreign intelligence officers to communicate with their assets on the ground in New York. Using the SIM farm would avoid transmitting over channels that are heavily monitored by U.S. intelligence agencies, providing a valuable layer of operational security.

- Influence and Disinformation: The network's massive messaging capacity—estimated at 30 million texts per minute—could be used to launch a large-scale disinformation campaign during a sensitive geopolitical moment, spreading propaganda or false information to create confusion or influence public opinion.1
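To make the reported scale concrete, the estimate of 30 million texts per minute corresponds to the per-second rate below. This is only a unit conversion of the reported figure, not an independent measurement of the network's capacity:

```latex
\[
\frac{30 \times 10^{6}\ \text{texts}}{1\ \text{min}}
  = \frac{30 \times 10^{6}\ \text{texts}}{60\ \text{s}}
  = 5 \times 10^{5}\ \text{texts/s}
\]
```

That is roughly half a million messages every second.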

While officials have stated they have not uncovered a direct plot to disrupt the UNGA, the sheer potential of the network, combined with early forensic links to "nation-state threat actors," makes the espionage vector a credible and serious concern.5

A Balanced Assessment: Expert Commentary and Nuance

While law enforcement officials rightly emphasized the network's catastrophic potential to command public and political attention, it is important to incorporate a degree of analytical nuance. Some independent cybersecurity experts have expressed skepticism about the official framing of the threat. Prominent security researcher Marcus Hutchins, for example, described the Secret Service's announcement as "super weird framing," suggesting that the claim that the network "could have shut down the entire NY cell network" was likely an exaggeration of the capabilities of what was, at its core, a large-scale SIM farm typically used for generic cybercrime. He characterized the claim as "serious FUD" (fear, uncertainty, and doubt), positing that it was far more likely a criminal service whose ultimate purpose was unknown to investigators at the time of the announcement.29

This perspective is valuable in distinguishing between the network's demonstrated capability and its probable intent. The forensic evidence clearly indicates its primary function was providing anonymous, secure communication services to criminal and state actors. The potential for a city-wide DoS attack, while technically feasible given the scale of the hardware, may have been a secondary feature or a theoretical maximum capability rather than the operators' primary business model or objective. It is possible that the DoS potential was a "value-added" feature for a potential client, or even an unintended consequence of amassing so much hardware in one area. A balanced assessment, therefore, acknowledges the full spectrum of potential threats while recognizing that the network's most immediate and confirmed danger was its role as a powerful enabler for a host of other illicit activities.

The Shadow Ecosystem: Nation-States, Cartels, and the Criminal-as-a-Service Model

The UNGA SIM farm has illuminated a dark and complex ecosystem where the interests and operations of nation-states and transnational criminal organizations converge. Attributing the network's users is not a simple matter of identifying a single group but rather of mapping a web of illicit actors who shared access to a common, powerful infrastructure. This incident serves as a stark case study in the blurring lines between espionage and crime, revealing a sophisticated "criminal-as-a-service" model that poses a formidable challenge to traditional law enforcement and intelligence paradigms.

Attribution Analysis: A Nexus of Illicit Actors

The official statements and preliminary forensic findings from the multi-agency investigation paint a picture of a diverse and dangerous clientele. The evidence points not to a single perpetrator but to a nexus of users spanning the spectrum from state-sponsored operatives to hardened criminals. Early analysis of the communications flowing through the seized devices explicitly indicated "cellular communications between nation-state threat actors and individuals that are known to federal law enforcement".5

This broad attribution was further detailed by officials, who identified the non-state users as a veritable who's who of transnational threats, including organized crime gangs, drug cartels, and human trafficking rings.1 The presence of these groups confirms the network's utility for traditional, profit-motivated crime. Simultaneously, the reference to "nation-state threat actors" introduces the element of geopolitics and espionage. Cybersecurity experts, analyzing the scale, cost, and sophistication of the operation, have assessed that only a handful of countries possess the technical capabilities and financial resources to stand up such a network. The list of potential state sponsors includes major geopolitical adversaries of the United States, such as Russia, China, or other nations with advanced signals intelligence capabilities.10

The simultaneous use of the network by such disparate groups (from those making swatting calls to TCOs and foreign intelligence services) strongly suggests that the operators were running a service-based platform. This infrastructure was likely made available to any actor willing and able to pay, with the operators acting as agnostic service providers in a clandestine digital marketplace.

The Convergence of Threats: Where Espionage and Crime Intersect

This incident provides a powerful real-world example of the growing convergence of state-sponsored intelligence activities and transnational organized crime—a phenomenon that security analysts have termed the "crime-espionage nexus." This convergence can manifest in several ways, and the UNGA SIM farm could fit one or more of these models:

- State-Run Operation with Criminal Cover: A foreign intelligence agency may have built, funded, and operated the network primarily for its own purposes (e.g., surveillance, covert communications, disruptive capabilities). In this model, the state may have intentionally allowed criminal groups to use the network. This provides a valuable layer of plausible deniability, as any discovery of the network could be initially attributed to organized crime, and it creates a significant amount of "noise" in the data, making it harder for counterintelligence agencies to isolate the state-sponsored activity.

- Criminal Enterprise as State Proxy: A highly sophisticated TCO could have developed the network as a core part of its criminal business and then leased its services to a nation-state as a contractor. States are increasingly using criminal proxies to conduct plausibly deniable operations, and a TCO with a secure, in-place communications network inside the U.S. would be an invaluable asset to a foreign intelligence service.

- A Shared Criminal-as-a-Service Ecosystem: The most likely model, given the diversity of users, is that of a neutral, service-oriented platform. In this scenario, a highly capable entity—whether a state-backed group or a purely entrepreneurial TCO—established the infrastructure and sold access to its capabilities on the dark web or through private channels. This "criminal-as-a-service" model mirrors legitimate cloud computing platforms, offering "Infrastructure-as-a-Service" (IaaS) or "Platform-as-a-Service" (PaaS) to any client with the funds.

This convergence creates a nightmare scenario for law enforcement and intelligence agencies. An investigation that begins as a counter-narcotics case against a cartel could suddenly pivot into a complex counterintelligence operation against a foreign power, requiring entirely different legal authorities, investigative techniques, and inter-agency coordination. The infrastructure itself becomes a "blended threat," where the tool is agnostic to the user's intent, making motive and ultimate responsibility incredibly difficult to untangle.

Transnational Organized Crime in the Digital Age

For modern TCOs, operational security (OPSEC) and communications security (COMSEC) are paramount. The UNGA SIM farm represented a significant leap forward in both. It provided a communications infrastructure that was not only encrypted but also anonymized at the network level and physically located within their area of operations: the United States.

This is a crucial distinction from simply using an encrypted app on a commercial mobile network. By using the SIM farm, a cartel's communications would not traverse the networks of major U.S. carriers in a way that could be easily subjected to lawful intercept or metadata analysis. The calls and texts would appear as innocuous, local traffic originating from a vast pool of constantly changing burner numbers.38

This capability is particularly valuable for coordinating complex logistics, such as drug shipments, money laundering operations, and human trafficking routes, all of which require real-time, secure communication among operatives spread across a wide geographic area. The discovery of cocaine and firearms at the server sites is a stark reminder that the users and operators of this high-tech network were not just disembodied cybercriminals but were deeply enmeshed in the violent, physical-world activities of organized crime.1 For these organizations, a SIM server is as much a tool of the trade as a firearm or a kilogram of cocaine, demonstrating the complete integration of digital tools into the modus operandi of modern TCOs. This fusion of the cyber and physical domains demands a similarly fused response from law enforcement, breaking down the traditional barriers between cybercrime units, narcotics divisions, and gang task forces.

Strategic Implications and Future Countermeasures

The successful dismantling of the UNGA SIM farm was a significant operational victory that prevented a potential catastrophe. However, the discovery of the network itself serves as a profound strategic warning. It has exposed critical vulnerabilities at the intersection of telecommunications, cybercrime, and national security, demanding a forward-looking response from policymakers, law enforcement agencies, and the private sector. The incident is not merely a large-scale cybercrime case; it is a watershed moment that should catalyze a fundamental rethinking of how the United States protects its critical infrastructure in an era of converged and asymmetric threats.

The New Frontier: Protecting "Invisible" Critical Infrastructures

The UNGA network has forcefully demonstrated that the definition of "critical infrastructure" must expand beyond tangible assets like power grids, financial systems, and transportation hubs. The "invisible infrastructure that keeps a modern city connected"—the cellular networks, data links, and protocols that underpin daily life—is now a confirmed target for sophisticated adversaries.4 This incident highlights a strategic shift in adversarial tactics, moving from a focus on exploiting endpoints (e.g., hacking an individual's computer or phone) to compromising the foundational integrity of the communications network itself.

This new frontier of risk requires a corresponding evolution in defensive posture. Security strategies that are solely user-centric—focused on phishing awareness, endpoint detection, and account security—are insufficient to counter a threat that operates at the network layer. The challenge is to build resilience into the core telecommunications infrastructure itself, making it more difficult for rogue systems like the SIM farm to operate undetected and at scale. This involves not only technological solutions but also a new level of strategic collaboration between the government and the private sector entities that own and operate this infrastructure. The threat is no longer just about data theft; it is about the denial of a service essential to public safety and societal function.

Policy and Law Enforcement Recommendations

The lessons learned from Operation Silent Signal must be translated into actionable policy and procedural changes to prevent a recurrence and to better equip the nation to counter similar threats in the future. The following recommendations represent a starting point for this necessary evolution.

- Enhance Public-Private Partnerships: The telecommunications industry is the front line of defense against this type of threat. While providers like MobileX pledged cooperation after the fact, a more proactive and integrated partnership is required.11 This should include the development of formal information-sharing mechanisms where carriers can report anomalous activities, such as bulk purchases of thousands of SIM cards by a single entity or unusual traffic patterns indicative of a SIM box, to a central federal clearinghouse without violating customer privacy laws. Furthermore, joint task forces composed of law enforcement investigators and carrier network security engineers could work collaboratively to identify and investigate emerging threats in real time.

- Adapt Investigative Techniques for Converged Threats: The siloed nature of traditional law enforcement is a liability in the face of converged threats. An investigation can no longer be neatly categorized as "cyber," "narcotics," or "counterintelligence" when a single piece of infrastructure serves all three. Federal and local agencies must foster the creation of fused, multi-disciplinary investigative teams. The U.S. Secret Service's Advanced Threat Interdiction Unit serves as an effective model for this approach, combining protective intelligence with advanced technical capabilities.4 This model should be replicated and expanded, ensuring that investigators have the cross-domain training and legal authority to follow leads wherever they go, whether they originate in a drug deal or a swatting attack.

- Develop New Legal and Regulatory Frameworks: The existing legal landscape may be ill-equipped to address the unique threat posed by weaponized SIM farms. While statutes such as 18 U.S.C. § 1029 address fraud related to telecommunications instruments, and 18 U.S.C. § 1030 (the Computer Fraud and Abuse Act) covers unauthorized access to computers, these laws were not written with a massive, domestically-located rogue telecom network in mind.49 Policymakers should consider new legislation that specifically targets the possession or operation of large-scale SIM farms (e.g., any device or collection of devices capable of managing over a certain threshold of SIM cards) without a legitimate, registered business purpose. Such a law, similar to proposals in the United Kingdom, would create a clear legal tool to dismantle these infrastructures before they can be used for malicious purposes.51 This would shift the legal focus from prosecuting the subsequent crimes (fraud, DoS attacks) to proactively eliminating the enabling infrastructure itself.
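The two carrier-side signals named in the public-private partnership recommendation, bulk purchases of thousands of SIM cards by a single entity and traffic patterns indicative of a SIM box, can be illustrated with a minimal Python sketch. It assumes a carrier already holds per-SIM usage summaries and activation records; the record fields, thresholds, placeholder IDs, and function names are all hypothetical. The traffic heuristic (mostly outgoing calls, little or no mobility, many distinct called numbers) reflects indicators commonly described for SIM-box activity, but real detection systems tune such features on operator data rather than relying on fixed cut-offs.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass
class SimUsage:
    """Hypothetical per-SIM usage summary for one observation window."""
    imsi: str                   # subscriber identity of the SIM (placeholder values below)
    purchaser_id: str           # entity that bought or activated the SIM
    outgoing_calls: int
    incoming_calls: int
    distinct_cells: int         # distinct cell towers the SIM was seen on
    distinct_destinations: int  # distinct numbers the SIM called


# Illustrative thresholds only; these are assumptions, not industry standards.
BULK_PURCHASE_THRESHOLD = 1000
MIN_OUT_IN_RATIO = 10.0
MAX_DISTINCT_CELLS = 1
MIN_DISTINCT_DESTINATIONS = 50


def flag_bulk_purchasers(records, threshold=BULK_PURCHASE_THRESHOLD):
    """Return the purchaser IDs that activated at least `threshold` SIMs."""
    counts = Counter(r.purchaser_id for r in records)
    return {pid for pid, n in counts.items() if n >= threshold}


def looks_like_simbox(r):
    """Heuristic: mostly outgoing calls, a static location, many destinations."""
    ratio = r.outgoing_calls / max(r.incoming_calls, 1)  # avoid division by zero
    return (
        ratio >= MIN_OUT_IN_RATIO
        and r.distinct_cells <= MAX_DISTINCT_CELLS
        and r.distinct_destinations >= MIN_DISTINCT_DESTINATIONS
    )


def build_report(records, bulk_threshold=BULK_PURCHASE_THRESHOLD):
    """Combine both signals into a report that could be escalated for review."""
    return {
        "bulk_purchasers": sorted(flag_bulk_purchasers(records, bulk_threshold)),
        "suspected_simbox_imsis": sorted(
            r.imsi for r in records if looks_like_simbox(r)
        ),
    }


if __name__ == "__main__":
    sample = [
        SimUsage("001", "acme", 400, 5, 1, 300),
        SimUsage("002", "acme", 350, 2, 1, 280),
        SimUsage("003", "alice", 20, 25, 12, 8),
    ]
    # A tiny bulk threshold so the three-record sample triggers the check.
    print(build_report(sample, bulk_threshold=2))
    # {'bulk_purchasers': ['acme'], 'suspected_simbox_imsis': ['001', '002']}
```

Combining the two signals matters because either one alone is weak evidence: legitimate businesses buy SIMs in bulk, and some ordinary subscribers make mostly outgoing calls.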

Conclusion: A Watershed Moment in Homeland Security

The UNGA SIM farm takedown is a landmark event in the history of U.S. homeland security. It represents the first large-scale, confirmed discovery of a domestically-based, weaponized telecommunications infrastructure operated by a coalition of state and criminal actors. It has moved the threat of a communications blackout from a theoretical possibility to a demonstrated, near-miss reality.

This incident must be treated as a strategic warning. It has revealed a sophisticated and well-funded adversary capable of exploiting the seams in our critical infrastructure with alarming proficiency. The successful, preventative action by the Secret Service and its partners averted a crisis and provided an invaluable look into the evolving tactics of our adversaries. The challenge now is to learn from this warning. The underlying vulnerabilities in our telecommunications ecosystem, and the dangerous actors who seek to exploit them, remain. The United States must move decisively to harden this invisible infrastructure, adapt its law enforcement and intelligence paradigms to the reality of converged threats, and build the legal and collaborative frameworks necessary to ensure that the silent signal of our cellular networks can never be silenced by a malicious actor.

References:

- Secret Service says it thwarted device network used to threaten U.S. officials, accessed on September 24, 2025, https://www.washingtonpost.com/national-security/2025/09/23/secret-service-cellular-device-network/

- US foils plot to take out cellular service in New York ahead of Trump speech, accessed on September 24, 2025, https://www.indiatoday.in/world/us-news/story/us-secret-service-dismantles-hidden-telecom-network-ahead-of-un-general-assembly-glbs-2792083-2025-09-23

- Secret Service Uncovers Network of SIM Servers Capable of Disabling Cell Towers | PCMag, accessed on September 24, 2025, https://www.pcmag.com/news/secret-service-uncovers-network-of-sim-servers

- Secret Service Dismantles Massive SIM Farm Network Threatening ..., accessed on September 24, 2025, https://breached.company/secret-service-dismantles-massive-sim-farm-network-threatening-nyc-during-un-general-assembly/

- U.S. Secret Service Dismantles Imminent Telecommunications Threat in New York, accessed on September 24, 2025, https://contracosta.news/2025/09/23/u-s-secret-service-dismantles-imminent-telecommunications-threat-in-new-york/

- U.S. Secret Service dismantles imminent telecommunications threat in New York tristate area, accessed on September 24, 2025, https://www.secretservice.gov/newsroom/releases/2025/09/us-secret-service-dismantles-imminent-telecommunications-threat-new-york

- Secret Service dismantles telecom threat around UN capable of crippling cell service in NYC, accessed on September 24, 2025, https://www.ajc.com/news/2025/09/secret-service-dismantles-telecom-threat-around-un-capable-of-crippling-cell-service-in-nyc/

- Secret Service smashes massive telecom 'network' threat to NYC cell service ahead of Trump's United Nations address - Yahoo News Canada, accessed on September 24, 2025, https://ca.news.yahoo.com/stockpile-devices-capable-shutting-down-114157020.html

- MobileX CEO confirms his company's SIM cards used in NYC clandestine network, accessed on September 24, 2025, https://www.fierce-network.com/wireless/mobilex-ceo-confirms-his-companys-sim-cards-used-nyc-clandestine-network

- The Secret Service seized a network capable of shutting down New York City's cell service, accessed on September 24, 2025, https://www.engadget.com/cybersecurity/the-secret-service-seized-a-network-capable-of-shutting-down-new-york-citys-cell-service-164958013.html

- How a SIM farm like the one found near the UN threatens telecom networks, accessed on September 24, 2025, https://apnews.com/article/unga-sim-farm-threat-explainer-5274ccc927ab180a1d5e0ffd475ed7c9

- How a SIM farm like the one found near the UN threatens telecom networks, accessed on September 24, 2025, https://www.ajc.com/news/2025/09/how-a-sim-farm-like-the-one-found-near-the-un-threatens-telecom-networks-5/

- Secret Service dismantles telecom threat around UN capable of crippling cell service in NYC, accessed on September 24, 2025, https://apnews.com/article/unga-threat-telecom-service-sim-93734f76578bc9ca22d93a8e91fd9c76

- Secret Service dismantles telecom threat around UN capable of crippling cell service in NYC - ClickOnDetroit, accessed on September 24, 2025, https://www.clickondetroit.com/news/world/2025/09/23/secret-service-dismantles-telecom-threat-around-un-capable-of-crippling-cell-service-in-nyc/

- US Secret Service dismantled covert communications network near the U.N. in New York, accessed on September 24, 2025, https://securityaffairs.com/182499/intelligence/us-secret-service-dismantled-covert-communications-network-near-the-u-n-in-new-york.html

- US Secret Service dismantles “imminent” nation-state threat targeting NYC telecom infrastructure - Cybernews, accessed on September 24, 2025, https://cybernews.com/security/new-york-telecommunications-threat-dismantled-us-secret-service-critical-infrastucture/

- U.S. Secret Service disrupts telecom network that threatened NYC during U.N. General Assembly - CBS News, accessed on September 24, 2025, https://www.cbsnews.com/news/u-s-secret-service-disrupts-telecom-network-threatened-new-york-city-u-n-general-assembly/

- SIM Card Hacking: What It Is, How It Works, and How to Protect Yourself - Security Scorecard, accessed on September 24, 2025, https://securityscorecard.com/blog/sim-card-hacking-what-it-is-how-it-works-and-how-to-protect-yourself/

- The Rise in SIM-Swap Attacks: What Executives Should Know | Woodruff Sawyer, accessed on September 24, 2025, https://woodruffsawyer.com/insights/cyber-sim-swapping

- Cell Phone SIM Swap Fraud / Identity Theft Lawyer, accessed on September 24, 2025, https://www.silvermillerlaw.com/current-investigations/cell-phone-sim-swap-fraud-identity-theft/

- NY Man Pleads Guilty in $20 Million SIM Swap Theft - Krebs on Security, accessed on September 24, 2025, https://krebsonsecurity.com/2021/12/ny-man-pleads-guilty-in-20-million-sim-swap-theft/

- A deep dive into the growing threat of SIM swap fraud - Thomson ..., accessed on September 24, 2025, https://www.thomsonreuters.com/en-us/posts/corporates/sim-swap-fraud/

- SIMBox Fraud: Challenges and AI-Powered Solutions for Telecom Operators, accessed on September 24, 2025, https://www.subex.com/blog/simbox-fraud-challenges-and-ai-powered-solutions-for-telecom-operators/

- Sim Box Fraud Explained | RedTeam Labs, accessed on September 24, 2025, https://theredteamlabs.com/sim-box-fraud/

- What is SIM Box Fraud? Detection & Prevention Guide | Infosys BPM, accessed on September 24, 2025, https://www.infosysbpm.com/blogs/bpm-analytics/what-is-sim-box-fraud.html

- How a SIM farm like the one found near the UN threatens telecom networks, accessed on September 24, 2025, https://www.independent.co.uk/news/new-york-ceo-white-house-fbi-b2832202.html

- What is SIM Box Fraud: Understanding Telecoms' Most Challenging Scam - TNS, accessed on September 24, 2025, https://tnsi.com/resource/com/what-is-sim-boxing-blog/

- Simbox Fraud Detection - Occam, accessed on September 24, 2025, https://www.occam.cx/simbox-detection/

- Secret Service says it dismantled extensive telecom threat in NYC area - CyberScoop, accessed on September 24, 2025, https://cyberscoop.com/secret-service-dismantles-nyc-telecom-threat-un-general-assembly/

- Ahead of Trump's UNGA address, Secret Service 'dismantles imminent telecommunications threat' in New York, accessed on September 24, 2025, https://indianexpress.com/article/world/ahead-of-trumps-unga-address-secret-service-dismantles-imminent-telecommunications-threat-in-new-york-10266972/

- Secret Service Telcom Takedown Raises Concerns About Mobile Net Security, accessed on September 24, 2025, https://www.technewsworld.com/story/secret-service-telcom-takedown-raises-concerns-about-mobile-net-security-179931.html

- Cellular warfare - NATO Cooperative Cyber Defence Centre of ..., accessed on September 24, 2025, https://ccdcoe.org/uploads/2018/10/Podins2009_Cellular_warfare.pdf

- Understanding Denial-of-Service Attacks | CISA, accessed on September 24, 2025, https://www.cisa.gov/news-events/news/understanding-denial-service-attacks

- Analysis and detection of SIMbox fraud in mobility networks - ResearchGate, accessed on September 24, 2025, https://www.researchgate.net/publication/269298339_Analysis_and_detection_of_SIMbox_fraud_in_mobility_networks

- The Secret Service has dismantled a telecom threat near the UN. It could have disabled cell service in NYC - PBS, accessed on September 24, 2025, https://www.pbs.org/newshour/nation/the-secret-service-has-dismantled-a-telecom-threat-near-the-un-it-could-have-disabled-cell-service-in-nyc

- Secret Service traced swatting threats against officials. They found 300 servers capable of crippling New York's cell system - Yahoo News Singapore, accessed on September 24, 2025, https://sg.news.yahoo.com/secret-traced-swatting-threats-against-110105632.html

- DEA Operation Last Mile Tracks Down Sinaloa and Jalisco Cartel Associates Operating within the United States - DEA.gov, accessed on September 24, 2025, https://www.dea.gov/press-releases/2023/05/05/dea-operation-last-mile-tracks-down-sinaloa-and-jalisco-cartel-associates

- TECHNOLOGY AS A TOOL FOR TRANSNATIONAL ORGANIZED CRIME: NETWORKING AND MONEY LAUNDERING, Gurpreet Tung, Canadian Association for - FIU Digital Commons, accessed on September 24, 2025, https://digitalcommons.fiu.edu/cgi/viewcontent.cgi?article=1573&context=srhreports

- Los Zetas and Proprietary Radio Network Development - Digital Commons @ USF - University of South Florida, accessed on September 24, 2025, https://digitalcommons.usf.edu/cgi/viewcontent.cgi?article=1505&context=jss

- Sinaloa Hack of FBI Phone Part of 'Existential' Tech Surveillance Threat - HSToday, accessed on September 24, 2025, https://www.hstoday.us/featured/sinaloa-hack-of-fbi-phone-part-of-existential-tech-surveillance-threat/

- The FBI's Operation Trojan Shield: Infiltrating Criminal Groups through their Phones, accessed on September 24, 2025, https://www.american.edu/sis/centers/security-technology/operation-trojan-shield.cfm

- Information Communication Technologies and Trafficking in Persons - Learning Network, accessed on September 24, 2025, https://www.gbvlearningnetwork.ca/our-work/briefs/brief-07.html

- The challenges of countering human trafficking in the digital era - Europol, accessed on September 24, 2025, https://www.europol.europa.eu/cms/sites/default/files/documents/the_challenges_of_countering_human_trafficking_in_the_digital_era.pdf

- Human Trafficking and Social Media - Polaris Project, accessed on September 24, 2025, https://polarisproject.org/human-trafficking-and-social-media/

- Devices seized near U.N. meeting could have shut down cellphone networks | GBH - WGBH, accessed on September 24, 2025, https://www.wgbh.org/news/2025-09-23/devices-seized-near-u-n-meeting-could-have-shut-down-cellphone-networks

- How 'SIM farms' like the one found near the UN could collapse ... - PBS, accessed on September 24, 2025, https://www.pbs.org/newshour/nation/how-sim-farms-like-the-one-found-near-the-un-could-collapse-telecom-networks

- US Secret Service says it dismantled telecom network threat ahead of UN General Assembly, accessed on September 24, 2025, https://www.aninews.in/news/world/us/us-secret-service-says-it-dismantled-telecom-network-threat-ahead-of-un-general-assembly20250923184922

- (PDF) Communication Security Failures of the Sinaloa Cartel and the Silk Road: An Analysis of the Encryption Threat Facing the US Drug Enforcement Administration - ResearchGate, accessed on September 24, 2025, https://www.researchgate.net/publication/355361680_Communication_Security_Failures_of_the_Sinaloa_Cartel_and_the_Silk_Road_An_Analysis_of_the_Encryption_Threat_Facing_the_US_Drug_Enforcement_Administration

- 18 U.S. Code § 1030 - Fraud and related activity in connection with computers, accessed on September 24, 2025, https://www.law.cornell.edu/uscode/text/18/1030

- Justice Manual | 1025. Fraudulent Production, Use, or Trafficking in ..., accessed on September 24, 2025, https://www.justice.gov/archives/jm/criminal-resource-manual-1025-fraudulent-production-use-or-trafficking-telecommunications

- What does SIM farm mean? - About Words - Cambridge Dictionary blog, accessed on September 24, 2025, https://dictionaryblog.cambridge.org/2025/09/15/new-words-15-september-2025/

- Preventing the use of SIM farms for fraud: consultation (accessible) - GOV.UK, accessed on September 24, 2025, https://www.gov.uk/government/consultations/preventing-the-use-of-sim-farms-for-fraud/preventing-the-use-of-sim-farms-for-fraud-consultation-accessible


Smart wearable devices are designed to track various activities within defined parameters and are increasingly becoming part of everyday life. According to a MarketsandMarkets report, the global wearable technology market is projected to reach a staggering USD 256.4 billion by 2026. One of the main areas of use of wearable devices is health, including biomedical research, health care, personal health practices and tracking, technology development, and engineering. These devices often include digital health technologies such as consumer smartwatches that monitor an individual's heart rate and step count, and other body-worn sensors, such as those that continuously monitor blood glucose concentration.

Wearable devices are increasingly popular with the general population. Health devices like fitness trackers and smartwatches enable continuous monitoring of personal health, and because they collect sensitive data in real time, privacy is an emerging concern. The primary concerns with these devices are unauthorised access and the risk of discrimination if information is revealed without consent. Alongside these legitimate concerns, a great deal of related misinformation has emerged.

Wearable devices typically come with terms of use that outline how data is collected and used, and regulations such as the EU's GDPR govern the handling of personal data. However, whether manufacturers actually implement and comply with these rules is another matter, and one that bears directly on privacy protection. Beyond the challenge of regulatory compliance, the rise of myths and misinformation surrounding wearable tech presents a separate issue.

Common Misconceptions About Privacy with Wearable Tech

- With the rapid development and growth of wearable technology, its use has been the subject of countless rumours that fuel misinformation narratives among the general public. Addressing these misconceptions and privacy concerns requires targeted strategies.

- A prevalent misconception is that wearables are constantly spying on users. While wearable devices collect users' data in real time, their vulnerability to unauthorised access is similar to that of non-wearable devices. The real issue with wearable technology is consent, because these devices have the ability to record. If permission is not sought before a person is recorded, then the data is accessible to external entities.

- There is a common myth that wearable tech is a surveillance tool. This is entirely conjecture. These devices collect user data with the user's prior consent and are designed to provide real-time information, most commonly about physical health. Since users choose the information shared, the idea of wearable tech serving as a surveillance tool is unfounded.

- Another misconception about wearable tech is that it can diagnose medical conditions. Although these devices collect real-time health data such as heart rate or activity levels, they are not designed for medical diagnosis. The data they collect may not always be accurate or reliable enough for a healthcare professional to use clinically. This is mainly because the makers of these devices are not held to the same safety and liability standards as medical providers.

- It is also widely believed that wearable tech can cure health issues, which is simply untrue. Wearable devices essentially track the health parameters a user sets and are in no way a cure for any health condition. Users can manage their health using the parameters they set on the device, such as the number of steps walked, heart rate, and other metrics, which may offer peace of mind, but these devices cannot treat disease. Instead, wearables act as alerts, notifying users of important health metrics and encouraging proactive health management.

Addressing Privacy and Health Concerns in Wearable Tech

Wearable technology raises privacy and health concerns because of the vast amount of personal data it collects. Strong data protection measures are essential to address them, ensuring that sensitive health information is stored securely and shared only with consent. One way to build user trust is to give users control over their data, for example by letting them opt in to data collection and access or delete the data in question. Regulators should establish clear guidelines to ensure that wearables comply with the data protection regulations applicable in their jurisdiction, such as HIPAA, the GDPR or India's Digital Personal Data Protection (DPDP) Act. Furthermore, global standards for data encryption, device security, and user privacy should be implemented to mitigate risks. Transparency in data usage and regular software security updates are also crucial for protecting users' privacy and health while promoting the responsible use of wearable tech.

CyberPeace Insights

- Making informed decisions about wearable tech starts with thorough research. Begin by reading reviews and comparing products to assess their features, compatibility, and security standards.

- Investigate the manufacturer’s reputation for data protection and device longevity. Understanding device capabilities is also crucial: evaluate whether the wearable meets your needs, such as fitness tracking, health monitoring, or communication features. Consider software security, update support, and data accuracy when comparing options, and opt for devices that offer two-factor authentication for an additional layer of security.

- Check the permissions requested by the accompanying app, and only grant access to data that is necessary for the device's functionality. Always read the terms of use to understand your rights and responsibilities regarding the use of the device. Review and customise data-sharing settings to maintain control over your data and help prevent unauthorised access.

- Staying informed is equally important. Users should keep up with developments in wearable technology, whether regular security updates or regulatory changes that may affect privacy and usability. This helps ensure that the technology they choose fits their lifestyle while meeting their privacy and security expectations.

Conclusion

Privacy and misinformation are key concerns arising from the use of wearable tech, even though these devices are designed to offer benefits such as health monitoring, fitness tracking, and personal convenience. Overcoming the issues that stem from misinformation about these devices requires a combination of informed decision-making by users and stringent regulatory oversight. Users must ensure they understand the capabilities and limitations of their devices, from data accuracy to privacy risks. Additionally, manufacturers and regulators need to prioritise transparency, data protection, and compliance with data protection laws such as the GDPR or the DPDP Act to build trust. As wearable tech continues to evolve, a balanced approach to innovation and privacy will be essential in fostering its responsible and beneficial use for all.

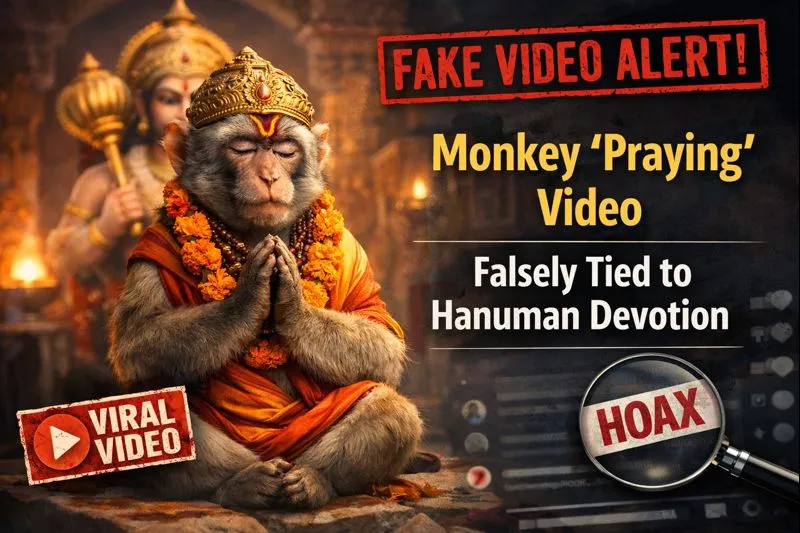

A video showing a monkey is being widely shared on social media, with users claiming that the animal is immersed in devotion to Lord Hanuman and was seen participating in a Hanuman Aarti. CyberPeace Foundation’s research found that the viral claim is fake: our investigation revealed that the video is not real and was generated using artificial intelligence tools.

Claim

On January 6, 2026, Facebook users shared the viral video claiming, “A monkey was seen immersed in devotion during Hanuman Aarti.”

- Post link: https://www.facebook.com/reel/1261813845766976

- Archived link: https://archive.ph/anid5

Screenshots of the post can be seen below.

FactCheck:

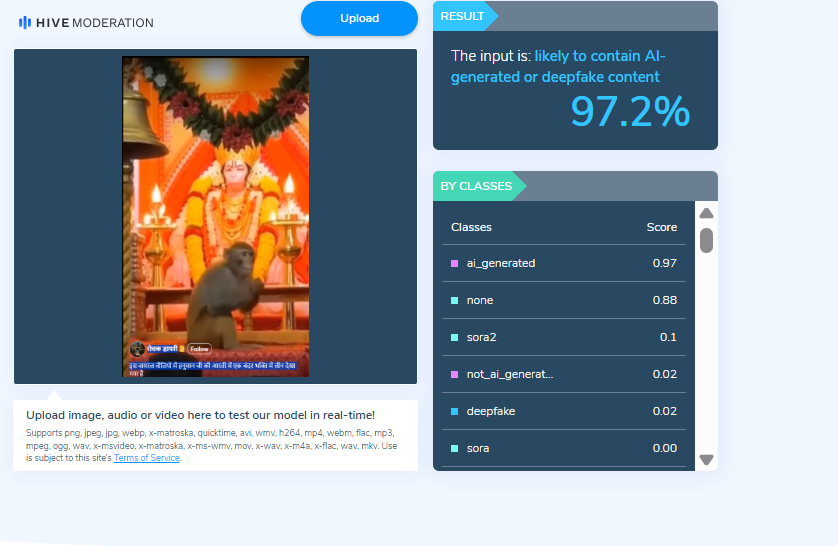

When we closely examined the viral video, we noticed several visual inconsistencies, which raised suspicion that it might be AI-generated. To verify this, we scanned the video using the AI detection tool Hive Moderation, which assessed it as 97 percent likely to be AI-generated.

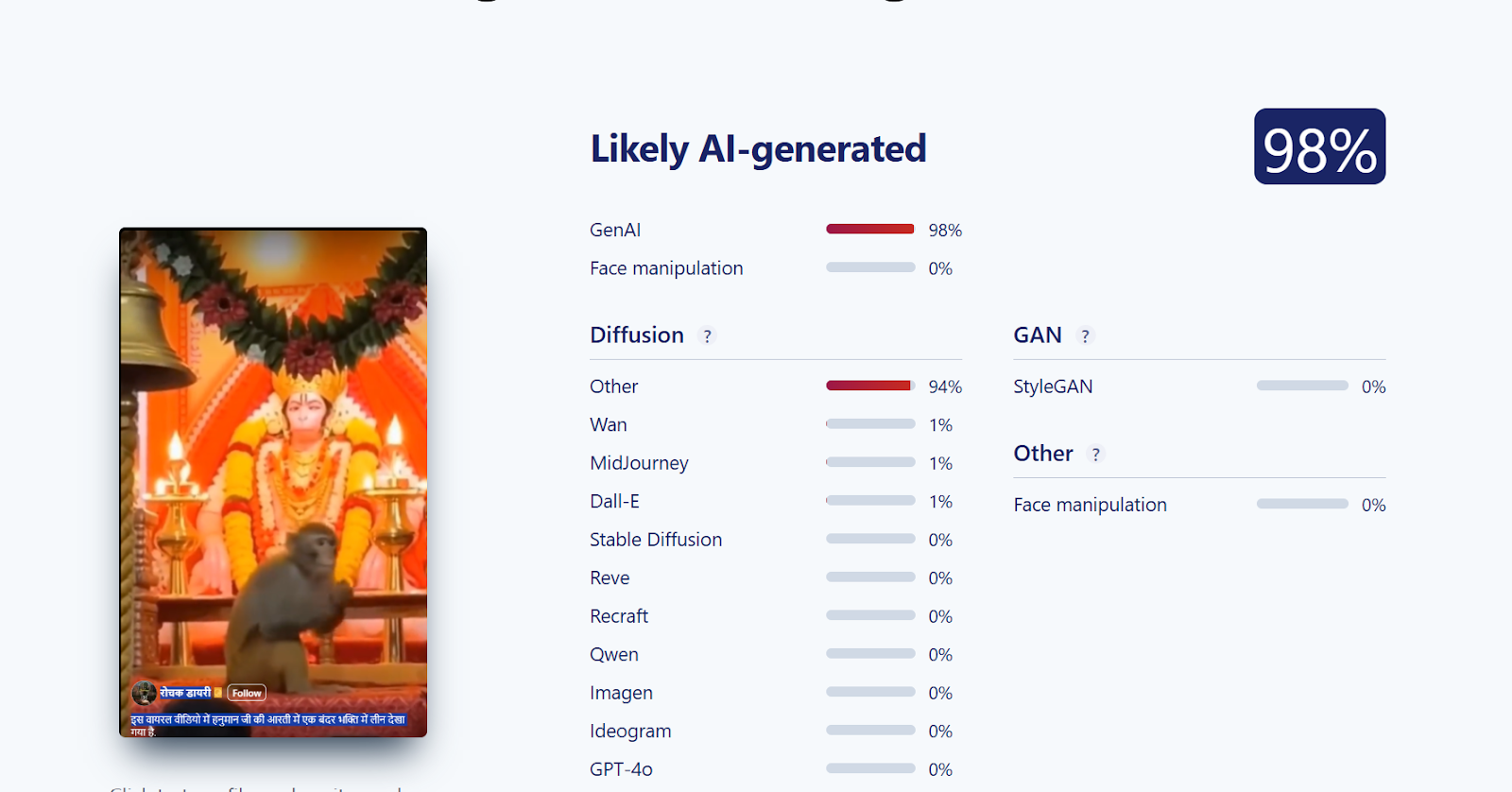

We then analysed the video using a second AI detection tool, Sightengine, which assessed it as 98 percent likely to be AI-generated.

Conclusion

Our investigation confirms that the viral video claiming to show a monkey immersed in devotion to Lord Hanuman is AI-generated and not real. The claim circulating on social media is false and misleading.