#Factcheck-False Claims of Houthi Attack on Israel’s Ashkelon Power Plant

Executive Summary:

A post on X (formerly Twitter) has gained widespread attention, featuring a video that is falsely claimed to show Houthi rebels attacking a power plant in Ashkelon, Israel. This misleading content has circulated widely amid escalating geopolitical tensions. However, investigation shows that the footage actually originates from a prior incident in Saudi Arabia. This situation underscores the significant dangers posed by misinformation during conflicts and highlights the importance of verifying sources before sharing information.

Claims:

The viral video claims to show Houthi rebels attacking Israel's Ashkelon power plant as part of recent escalations in the Middle East conflict.

Fact Check:

Upon receiving the viral posts, we conducted a Google Lens search on keyframes of the video. The search revealed that the video circulating online does not show an attack on the Ashkelon power plant in Israel. Instead, it depicts a 2022 drone strike on a Saudi Aramco facility in Abqaiq. There are no credible reports of Houthi rebels targeting Ashkelon, as their activities are largely confined to Yemen and Saudi Arabia.

This incident highlights the risks associated with misinformation during sensitive geopolitical events. Before sharing viral posts, take a brief moment to verify the facts. Misinformation spreads quickly and it’s far better to rely on trusted fact-checking sources.

Conclusion:

The assertion that Houthi rebels targeted the Ashkelon power plant in Israel is incorrect. The viral video in question has been misrepresented and actually shows a 2022 incident in Saudi Arabia. This underscores the importance of being cautious when sharing unverified media.

- Claim: The video shows a massive fire at Israel's Ashkelon power plant

- Claimed On: Instagram and X (formerly known as Twitter)

- Fact Check: False and Misleading

Related Blogs

Executive Summary

A video showing thick smoke rising from a building and people running in panic is being shared on social media. The video is being circulated with the claim that it shows Iran launching a missile attack on the United States. CyberPeace’s research found the claim to be misleading. Our probe revealed that the video is not related to any recent incident. The viral clip is actually from the September 11, 2001 terrorist attacks on the World Trade Center in the United States and is being falsely shared as footage of an alleged Iranian missile strike on the US.

Claim:

An Instagram user shared the video claiming, “Iran has attacked a US airbase in Qatar. Iran has fired six ballistic missiles at the Al Udeid Airbase in Qatar. Al Udeid Airbase is the largest US military base in West Asia.”

Links to the post and its archived version are provided below.

Fact Check:

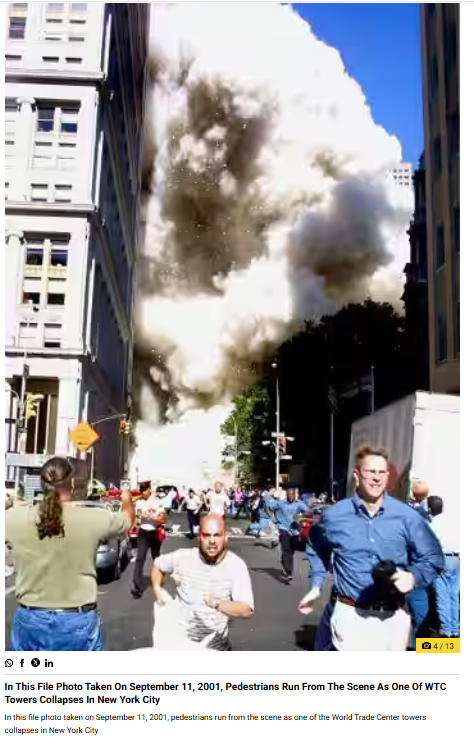

To verify the claim, we extracted key frames from the viral video and ran a reverse image search using Google Lens. During the search, we found visuals matching the viral clip in a report published by Wion on September 11, 2021. The report, titled “In pics | A look back at the scenes from the 9/11 attacks,” included an image that closely resembled the visuals seen in the viral video. The caption of the image stated that it was a file photo from September 11, 2001, showing pedestrians running as one of the World Trade Center towers collapsed in New York City.

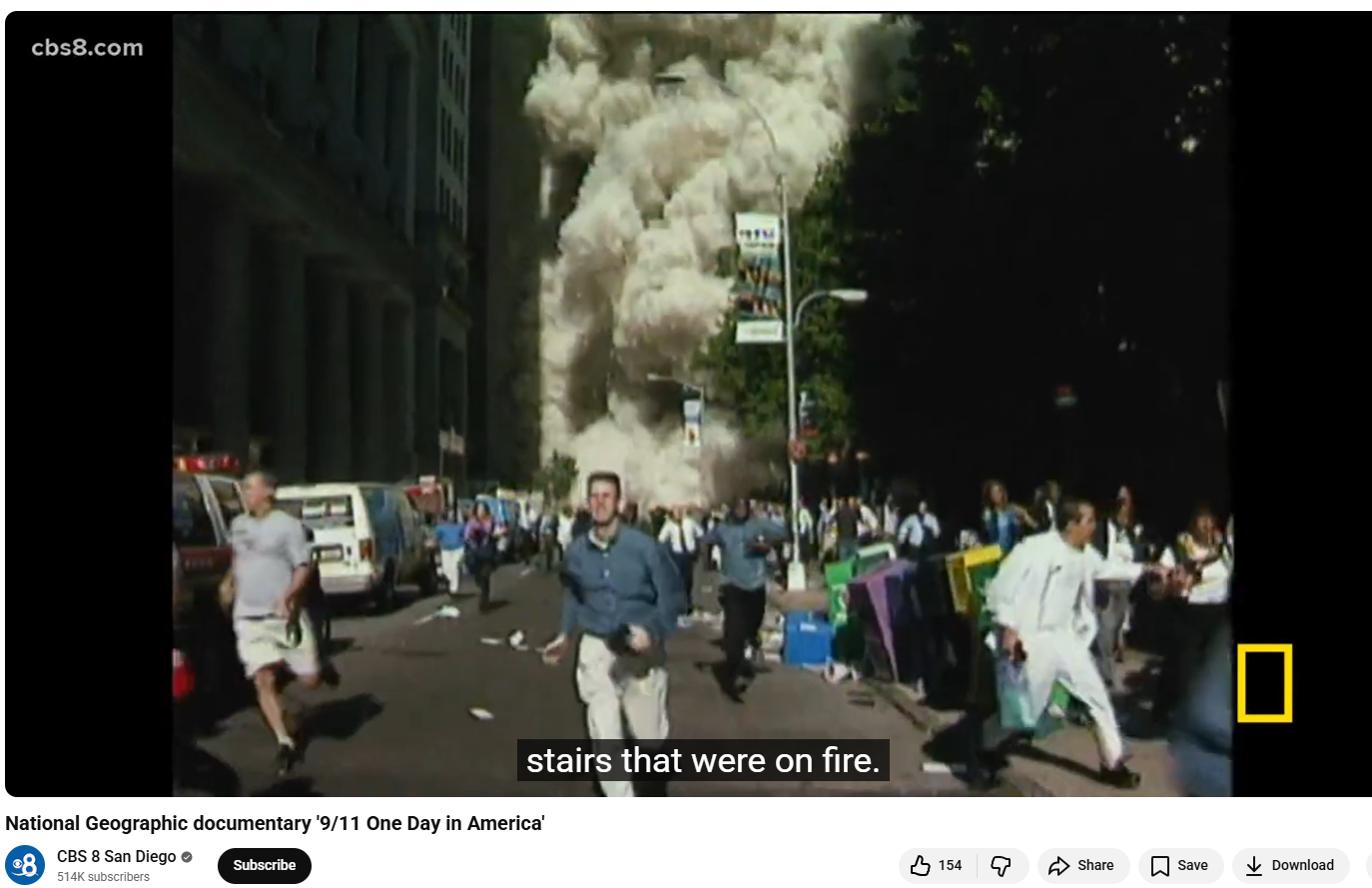

Further research led us to the same footage on the YouTube channel CBS 8 San Diego. At the 01:11 timestamp of the video, visuals matching the viral clip can be clearly seen.

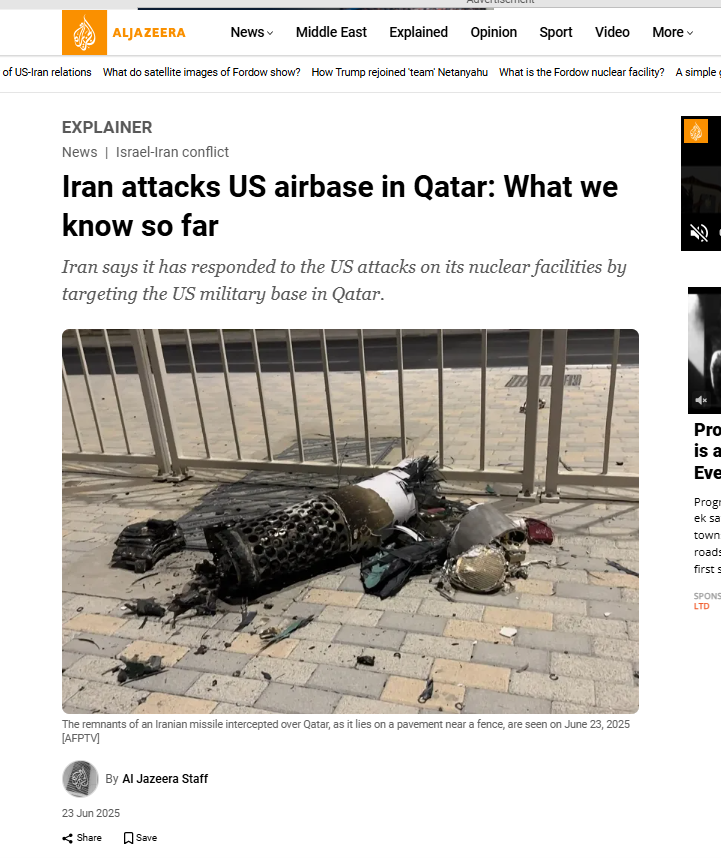

We also found an Al Jazeera report dated June 23, 2025, which confirmed that Iran had attacked US forces stationed at the Al Udeid airbase in Qatar in retaliation for US strikes on Iran’s nuclear facilities. However, the visuals used in the viral video do not correspond to this incident.

Conclusion

The viral video does not show a recent Iranian attack on a US airbase in Qatar. The clip actually dates back to the September 11, 2001 terrorist attacks on the World Trade Center in the United States. Old 9/11 footage has been falsely shared with a misleading claim linking it to Iran’s alleged missile strike on the US.

Introduction: Reasons Why These Amendments Have Been Suggested.

The proposed amendments to the Information Technology (Intermediary Guidelines and Digital Media Ethics Code) Rules, 2021, are a much-needed regulatory response to the rapid emergence of synthetic information and deepfakes. They stem from a pressing need to manage risks within the digital ecosystem rather than from routine reform.

The Emergence of the Digital Menace

In recent years, generative AI tools have made it easy to produce highly realistic images, videos, audio, and text. Such artificial media have been abused to portray people in situations they were never in or making statements they never made. The generative AI market is expected to grow at a compound annual growth rate (CAGR) of 37.57% from 2025 to 2031, resulting in a market volume of US$400.00 bn by 2031. Tight regulatory controls are therefore necessary to curb widespread harm in the Indian digital world.
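
As a rough arithmetic check on the growth figures above, compound growth can be worked backwards from the 2031 volume. The sketch below uses only the two numbers quoted in the paragraph and assumes six compounding years between 2025 and 2031; the function name is hypothetical, and the implied 2025 base it prints is a back-of-the-envelope derivation, not a figure taken from the source.

```python
def implied_base(final_volume_bn: float, cagr: float, years: int) -> float:
    """Back out the starting volume from: final = base * (1 + cagr) ** years."""
    return final_volume_bn / (1.0 + cagr) ** years


# 400.0 and 0.3757 are the figures quoted in the text.
# The six-year span (2025 to 2031) is an assumption made for this sketch.
base_2025 = implied_base(final_volume_bn=400.0, cagr=0.3757, years=6)
print(f"Implied 2025 volume: roughly US${base_2025:.0f} bn")
```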

The Gap in Law and Institution

The IT Rules, 2021, did not clearly address synthetic content. Although the Information Technology Act, 2000 dealt with identity theft, impersonation and violation of privacy, intermediaries had no explicit obligations regarding artificial media. This left a loophole in enforcement, particularly since AI-generated content could slip past older moderation systems. The amendments bring India closer to international standards, including the EU AI Act, which requires transparency and labelling of AI-driven content. India adopts comparable requirements while adapting them to local constitutional and digital ecosystem needs.

II. Explanation of the Amendments

The 2025 amendments introduce five distinct changes to the current IT Rules framework, addressing various areas of synthetic media regulation.

A. Definitional Clarification: Introduction of “Synthetically Generated Information”

Rule 2(1)(wa) Amendment:

The amendments provide a comprehensive definition of “synthetically generated information”: information that is created, generated, modified or altered using a computer resource in such a way that it can reasonably be perceived to be genuine. This definition is intentionally broad. It is not limited to deepfakes in the strict sense but covers any artificial media that has undergone algorithmic manipulation to give it a semblance of authenticity.

Expansion of Legal Scope:

Rule 2(1A) also makes it clear that any reference to information in the context of unlawful acts, including the categories listed in Rule 3(1)(b), Rule 3(1)(d), Rule 4(2), and Rule 4(4), should be understood to include synthetically generated information. This is a pivotal interpretative safeguard: it prevents intermediaries from claiming that synthetic versions of illegal material fall outside the regulation because they are algorithmic creations rather than depictions of what actually occurred.

B. Safe Harbour Protection and Content Removal Requirements

Rule 3(1)(b) Amendment: Safe Harbour Clarification

The amendments add a proviso to Rule 3(1)(b) clarifying that the removal or disabling of access to synthetically generated information (or any information falling within the specified categories), undertaken by intermediaries in good faith as part of reasonable efforts or on receipt of a complaint, shall not be considered a breach of Section 79(2)(a) or (b) of the Information Technology Act, 2000. This protection matters because it shields intermediaries from liability when they remove synthetic content before any court order or government notification.

C. Mandatory Labelling and Metadata Requirements for Intermediaries That Enable the Creation of Synthetic Content

The amendments establish a new due diligence framework in Rule 3(3) for intermediaries that offer tools to generate, modify, or alter synthetically generated information. Two fundamental requirements are laid down.

- The generated information must be prominently labelled or embedded with a permanent, unique metadata or identifier. The label or metadata must:

  - Be visibly displayed or made audible in a prominent manner on or within that synthetically generated information.

  - Cover at least 10% of the surface area of the visual display or, in the case of audio content, be audible during the initial 10% of its duration.

  - Enable immediate identification of the information as synthetically generated information that has been created, generated, modified, or altered using the computer resource of the intermediary.

- Such an intermediary shall not enable modification, suppression or removal of such label, permanent unique metadata or identifier, by whatever name called.
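
The 10% thresholds in the list above lend themselves to a simple automated check. The following is a minimal sketch, not an official compliance test. The class and function names are hypothetical, and the audio rule is read here as "the disclosure starts at the beginning of the clip and runs through at least the first 10% of its duration", which is one possible interpretation of the text.

```python
from dataclasses import dataclass


@dataclass
class VisualLabel:
    label_area_px: int   # pixels covered by the label (hypothetical field)
    frame_area_px: int   # total pixels in the visual display


@dataclass
class AudioLabel:
    label_start_s: float  # when the spoken disclosure begins
    label_end_s: float    # when the spoken disclosure ends
    duration_s: float     # total length of the audio clip


def visual_label_ok(label: VisualLabel) -> bool:
    """True if the label covers at least 10% of the display area."""
    if label.frame_area_px <= 0:
        return False
    # Integer comparison avoids floating-point rounding at the boundary.
    return label.label_area_px * 10 >= label.frame_area_px


def audio_label_ok(label: AudioLabel) -> bool:
    """True if the disclosure spans the initial 10% of the clip.

    Assumption: the disclosure must start at time zero and last at
    least one tenth of the total duration.
    """
    if label.duration_s <= 0:
        return False
    starts_at_beginning = label.label_start_s <= 0.0
    covers_first_tenth = label.label_end_s * 10 >= label.duration_s
    return starts_at_beginning and covers_first_tenth
```

For example, a 1920x1080 frame would need a label of at least 207,360 pixels under this reading, and a 30-second clip would need a disclosure running from the start for at least 3 seconds.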

D. Significant Social Media Intermediaries: Pre-Publication Verification Responsibilities

Under Rule 4(1A), the amendments introduce a three-step verification mechanism for Significant Social Media Intermediaries (SSMIs) that enable information to be displayed, uploaded or published on their computer resource. Before any such display, uploading, or publication, the SSMI has to follow three steps.

Step 1 - User Declaration: The SSMI must require users to declare whether the material they are posting is synthetically generated. This places the initial burden on users.

Step 2 - Technical Verification: To verify that the user's declaration is accurate, SSMIs need to deploy reasonable technical measures, such as automated tools or other mechanisms. This duty is contextual and depends on the nature, format and source of the content. It does not let intermediaries off the hook, while recognising that not every type of content can be verified to the same standard.

Step 3 - Prominent Labelling: Where synthetic origin is confirmed by user declaration or technical verification, SSMIs must ensure that a notice or label is prominently displayed so that users can see it at the time of publication.
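
The three steps above can be sketched as a small decision function. This is an illustrative outline only: the `Upload` and `Decision` types, the label text, and the `detector` callable are hypothetical stand-ins, and a real platform's technical verification would be far more involved than the toy check used here.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class Upload:
    content: bytes
    # Step 1 input: None means the user has not made any declaration yet.
    user_declared_synthetic: Optional[bool]


@dataclass
class Decision:
    publish: bool
    label: Optional[str]
    reason: str


def review_upload(upload: Upload, detector: Callable[[bytes], bool]) -> Decision:
    # Step 1: a declaration is required before anything is published.
    if upload.user_declared_synthetic is None:
        return Decision(False, None, "user declaration required")

    # Step 2: technical verification of the declaration.
    # `detector` is a placeholder for whatever tooling the platform uses.
    detected_synthetic = detector(upload.content)

    # Step 3: label prominently if either source indicates synthetic origin.
    if upload.user_declared_synthetic or detected_synthetic:
        return Decision(True, "Synthetically generated", "labelled before publication")

    return Decision(True, None, "no synthetic origin indicated")


def toy_detector(content: bytes) -> bool:
    """Hypothetical detector: flags content carrying a made-up marker."""
    return content.startswith(b"SYNTH")
```

In this sketch, a user who declares content as authentic is still overridden when the detector flags it, which mirrors the point that the declaration alone does not discharge the platform's duty.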

The amendments strengthen accountability by providing that intermediaries will be deemed to have failed in their due diligence where it is established that they knowingly permitted, encouraged or otherwise failed to act on synthetically generated information in contravention of these requirements. This introduces a knowledge element, and intermediaries cannot use accidental errors as an excuse for non-compliance.

An explanation clause makes it clear that SSMIs must also take reasonable and proportionate technical measures to verify user declarations and to ensure that no synthetic content is published without adequate declaration or labelling. This removes any confusion about the role of intermediaries with respect to declarations.

III. Attributes of The Amendment Framework

- Precision in Balancing Innovation and Accountability.

The amendments commendably steer between two extreme regulatory postures, neither prohibiting synthetic media nor allowing it to run out of control. They recognise the legitimate use of synthetic media in entertainment, education, research and artistic expression by adopting a transparency and traceability mandate that preserves innovation while ensuring accountability.

- Explicit Recognition of Intermediary Liability and Reverse Onus of Knowledge

Rule 4(1A) contains a highly significant deeming rule: where an intermediary permits synthetic content, or refrains from acting on it, knowing that the rules are being violated, it will be considered to have failed to comply with the due diligence provisions. This closes the loophole of lax supervision, under which intermediaries could otherwise argue that they had done enough. The scienter standard encourages meaningful investment in detection tools and moderation mechanisms, while protecting platforms that maintain sound systems even if their tools occasionally fail to catch violations.

- Clarity Through Definition and Interpretive Guidance

The careful definition of the term “synthetically generated information” and the guidance provided in Rule 2(1A) are an admirable attempt to resolve confusion in the previous regulatory framework. Instead of leaving parties to work through conflicting case law or regulatory direction, the amendments set specific definitional limits. The purposefully broad formulation (artificially or algorithmically created, generated, modified or altered) ensures that the framework cannot be avoided through semantic games over what counts as real synthetic content versus a slight algorithmic alteration.

- Protection from Liability That Encourages Proactive Moderation

The safe harbour clarification in the Rule 3(1)(b) amendment clearly protects intermediaries who voluntarily remove synthetic content without a court order or government notification. It is an important incentive that prompts platforms to implement sound self-regulation measures. In the absence of such protection, platforms might rationally choose to remain in a passive compliance stance, deleting content only under pressure from an external authority, which would make them less effective at keeping users safe from dangerous synthetic media.

IV. Conclusion

The proposed 2025 amendments to the Information Technology (Intermediary Guidelines and Digital Media Ethics Code) Rules offer a structured, transparent, and accountable approach to the growing problems posed by synthetic media and deepfakes. The amendments address long-standing regulatory and interpretative gaps concerning what counts as synthetically generated information, intermediary liability, and mandatory labelling and metadata requirements. Safe-harbour protection will encourage proactive moderation, and a scienter-based liability rule will prevent intermediaries from escaping liability when they are aware of non-compliance but tolerate it. The introduction of pre-publication verification for Significant Social Media Intermediaries places responsibility on users and due diligence obligations on platforms. Overall, the amendments strike a reasonable balance between innovation and regulation, bring clarity through precise definitions, promote responsible conduct on platforms, and position India to set new standards in synthetic media regulation. Together, they strengthen the authenticity, user protection, and transparency of India’s digital ecosystem.

V. References

2. https://www.statista.com/outlook/tmo/artificial-intelligence/generative-ai/worldwide

What are Decentralised Autonomous Organizations (DAOs)?

A Decentralised Autonomous Organisation, or DAO, is a unique take on democracy on the blockchain. It is a set of rules encoded into a self-executing contract (also known as a smart contract) that operates autonomously on a blockchain system. A DAO imitates a traditional company, although, in its more literal sense, it is a contractually created entity. In theory, a DAO has no centralised authority making decisions for the system; it is a communally run system in which all decisions (whether for internal governance or for the development of the blockchain system) are voted upon by the community members. DAOs are primarily characterised by a decentralised form of operation, where no single entity, group or individual runs the system. They are self-sustaining entities with their own currency, economy and even governance, and do not depend on a group of individuals to operate. Blockchain systems, especially DAOs, are characterised by pure autonomy, designed to evade external coercion or manipulation from sovereign powers. DAOs follow a mutually agreed set of rules, created by the community, that dictates all actions, activities, and participation in the system’s governance. There may also be provisions that regulate the decision-making power of the community.

Ethereum’s DAO white paper described a DAO as “the first implementation of a [DAO Entity] code to automate organisational governance and decision making” that “can be used by individuals working together collaboratively outside of a traditional corporate form. It can also be used by a registered corporate entity to automate formal governance rules contained in corporate bylaws or imposed by law.” The white paper proposes an entity that would use smart contracts to solve governance issues inherent in traditional corporations. DAOs attempt to redesign corporate governance with blockchain such that contractual terms are “formalised, automated and enforced using software.”

Cybersecurity threats under DAOs

While DAOs offer increased transparency and efficiency, they are not immune to cybersecurity threats. Cybersecurity risks in DAOs, primarily in governance, stem from vulnerabilities in the underlying blockchain technology and the DAO's smart contracts. Smart contract bugs, code vulnerabilities, and weaknesses in the underlying blockchain protocol can be exploited by malicious actors, leading to unauthorised access, fund manipulation, or disruptions in the governance process. Additionally, DAOs may face phishing attacks, where individuals are tricked into revealing sensitive information, such as private keys, compromising the integrity of the governance structure. As DAOs continue to evolve, addressing and mitigating cybersecurity threats is crucial to ensuring the trust and reliability of decentralised governance mechanisms.

Centralisation/Concentration of Power

DAOs today actively try to leverage on-chain governance, where governance votes or transactions take place directly on the blockchain. But such governance is often plutocratic rather than democratic: the wealthy hold the influence, since only those who possess the requisite number of tokens are allowed to vote, and each token staked counts as a vote, so a single individual can cast many votes. This concentration of power in the hands of “whales” disadvantages newer entrants who may have in-depth expertise but lack the funds to cast a vote. Voting in the blockchain sphere presently lacks the concept of “one man, one vote”, which is critical in democratic societies.
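
The difference between token-weighted voting and "one man, one vote" can be illustrated with a toy tally. The voter names and token holdings below are invented for illustration, and the two functions are a simplified sketch rather than how any particular DAO counts votes.

```python
from collections import defaultdict


def token_weighted_tally(votes: dict, holdings: dict) -> dict:
    """Each voter's choice is weighted by the number of tokens they hold."""
    totals = defaultdict(int)
    for voter, choice in votes.items():
        totals[choice] += holdings[voter]
    return dict(totals)


def one_person_one_vote_tally(votes: dict) -> dict:
    """Each voter counts exactly once, regardless of holdings."""
    totals = defaultdict(int)
    for choice in votes.values():
        totals[choice] += 1
    return dict(totals)


# Hypothetical example: one large holder against three small holders.
votes = {"whale": "yes", "alice": "no", "bob": "no", "carol": "no"}
holdings = {"whale": 1000, "alice": 10, "bob": 20, "carol": 5}

weighted = token_weighted_tally(votes, holdings)
per_person = one_person_one_vote_tally(votes)
```

With these invented numbers the single large holder carries the token-weighted vote, while the same ballots fail three to one when every participant counts once.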

Smart contract vulnerabilities and external threats

Smart contracts, self-executing pieces of code on a blockchain, are integral to decentralised applications and platforms. Despite their potential, smart contracts are susceptible to various vulnerabilities such as coding errors, where mistakes in the code can lead to funds being locked or released erroneously. Some of these are described below.

Smart contracts are particularly prone to reentrancy attacks, in which untrusted external code is allowed to execute within a smart contract. This scenario occurs when a smart contract invokes an external contract, and the external contract re-invokes the initial contract before the first invocation has finished. This sequence of events can repeat in a loop. A reentrancy attack exploits this vulnerability: it enables an attacker to repeatedly invoke a function within the contract, potentially creating an endless loop and gaining unauthorised access to funds.
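
The re-invocation pattern described above can be simulated in plain Python. This is a toy model, not contract code: the vault classes, the attacker, and the deposit amounts are all hypothetical. The second vault applies the commonly cited "checks-effects-interactions" ordering (update state before calling out), which is named here as one known mitigation and is not something the source itself prescribes.

```python
class VulnerableVault:
    """Toy ledger that pays out BEFORE clearing the caller's balance."""

    def __init__(self):
        self.balances = {}
        self.reserves = 0

    def deposit(self, account, amount):
        self.balances[account] = self.balances.get(account, 0) + amount
        self.reserves += amount

    def withdraw(self, account, on_receive):
        amount = self.balances.get(account, 0)
        if amount == 0 or self.reserves < amount:
            return
        self.reserves -= amount
        on_receive(amount)            # external call: control leaves the vault
        self.balances[account] = 0    # state update happens too late


class SaferVault(VulnerableVault):
    """Same ledger, but state is updated before the external call."""

    def withdraw(self, account, on_receive):
        amount = self.balances.get(account, 0)
        if amount == 0 or self.reserves < amount:
            return
        self.balances[account] = 0    # effect first
        self.reserves -= amount
        on_receive(amount)            # interaction last


class Attacker:
    def __init__(self, vault, name="attacker"):
        self.vault, self.name, self.stolen = vault, name, 0

    def on_receive(self, amount):
        self.stolen += amount
        # Re-enter the vault while the first withdrawal is still in progress.
        self.vault.withdraw(self.name, self.on_receive)

    def attack(self):
        self.vault.withdraw(self.name, self.on_receive)
        return self.stolen


def simulate(vault_cls):
    vault = vault_cls()
    vault.deposit("honest-user", 90)   # invented amounts for illustration
    vault.deposit("attacker", 10)
    return Attacker(vault).attack()
```

In this model the attacker, who deposited 10, keeps re-entering the vulnerable vault until its reserves are exhausted, so the loop is bounded by the available funds rather than truly endless. Against the reordered vault, the second call sees a zero balance and the attacker recovers only the original deposit.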

Additionally, smart contracts are also prone to oracle problems. Oracles refer to third-party services or mechanisms that provide smart contracts with real-world data. Since smart contracts on blockchain networks operate in a decentralised, isolated environment, they do not have direct access to external information, such as market prices, weather conditions, or sports scores. Oracles bridge this gap by acting as intermediaries, fetching and delivering off-chain data to smart contracts, enabling them to execute based on real-world conditions. The oracle problem within blockchain pertains to the difficulty of securely incorporating external data into smart contracts. The reliability of external data poses a potential vulnerability, as oracles may be manipulated or provide inaccurate information. This challenge jeopardises the credibility of blockchain applications that rely on precise and timely external data.
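
To make the manipulation risk concrete, the sketch below compares a contract that trusts a single oracle report with one that takes the median of several independent reports. The prices are invented, and median aggregation is shown only as one commonly discussed way to limit the influence of a single bad feed; the source does not prescribe any particular design.

```python
from statistics import median


def single_oracle_price(reports: list) -> float:
    """Trust whichever oracle happens to report first."""
    return reports[0]


def aggregated_price(reports: list) -> float:
    """Take the median of several independent oracle reports."""
    return median(reports)


# Hypothetical feeds: four honest reports and one manipulated report.
honest_reports = [100.0, 101.0, 99.5, 100.5]
manipulated_report = 5000.0
reports = [manipulated_report] + honest_reports

naive = single_oracle_price(reports)
robust = aggregated_price(reports)
```

Here the single-feed reading is entirely controlled by the manipulated value, while the median stays within the range of the honest reports. Aggregation does not solve the oracle problem, since a majority of feeds could still be wrong or compromised.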

Sybil Attack: A Sybil attack involves a single node managing multiple active fake identities, known as Sybil identities, concurrently within a peer-to-peer network. The objective of such an attack is to undermine the authority or influence within a trusted system by acquiring majority control of the network. The fake identities are used to establish and exert this influence. A successful Sybil attack allows threat actors to perform unauthorised actions in the system.
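
A toy poll shows how fake identities translate into majority control when each identity counts as one vote. The node names, vote choices, and identity counts below are invented for illustration, and the poll function is a deliberately simplified stand-in for a real peer-to-peer protocol.

```python
from collections import Counter


def run_poll(identities: list) -> str:
    """Each (owner, choice) identity gets one vote; return the winning choice."""
    tally = Counter(choice for _owner, choice in identities)
    return tally.most_common(1)[0][0]


# Ten honest nodes, each a distinct participant, all voting to reject.
honest = [(f"node-{i}", "reject") for i in range(10)]

# One operator registers eleven fake identities, all voting to approve.
sybils = [("attacker", "approve") for _ in range(11)]

outcome_without_attack = run_poll(honest)
outcome_with_attack = run_poll(honest + sybils)
distinct_attackers = len({owner for owner, _choice in sybils})
```

In this example a single operator overturns the result of ten honest participants simply by registering one more identity than there are honest nodes.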

Distributed Denial of Service Attacks: A Distributed Denial of Service (DDoS) attack is a malicious attempt to disrupt the regular functioning of a network, service, or website by overwhelming it with a flood of traffic. In a typical DDoS attack, multiple compromised computers or devices, often part of a botnet (a network of infected machines controlled by a single entity), are used to generate a massive volume of requests or data traffic. The targeted system becomes unable to respond to legitimate user requests due to the excessive traffic, leading to a denial of service.

Conclusion

Decentralised Autonomous Organisations (DAOs) represent a pioneering approach to governance on the blockchain, relying on smart contracts and community-driven decision-making. Despite their potential for increased transparency and efficiency, DAOs are not immune to cybersecurity threats. Vulnerabilities in smart contracts, such as reentrancy attacks and oracle problems, pose significant risks, and the concentration of voting power among wealthy token holders raises concerns about democratic principles. As DAOs continue to evolve, addressing these challenges is essential to ensuring the resilience and trustworthiness of decentralised governance mechanisms. Efforts to enhance security measures, promote inclusivity, and refine governance models will be crucial in establishing DAOs as robust and reliable entities in the broader landscape of blockchain technology.

References:

https://www.imperva.com/learn/application-security/sybil-attack/

https://www.linkedin.com/posts/satish-kulkarni-bb96193_what-are-cybersecurity-risk-to-dao-and-how-activity-7048286955645677568-B3pV/

https://www.geeksforgeeks.org/what-is-ddosdistributed-denial-of-service/

Report of Investigation Pursuant to Section 21(a) of the Securities Exchange Act of 1934: The DAO, Securities and Exchange Commission, Release No. 81207 / July 25, 2017

https://www.sec.gov/litigation/investreport/34-81207.pdf

https://www.legalserviceindia.com/legal/article-10921-blockchain-based-decentralized-autonomous-organizations-daos-.html