#FactCheck - Viral Photos Falsely Linked to Iranian President Ebrahim Raisi's Helicopter Crash

Executive Summary:

Iranian President Ebrahim Raisi and several others died when their helicopter crashed in northwestern Iran on 19 May 2024; the deaths were confirmed on 20 May. Images circulated on social media claiming to show the crash site do not show that crash. The CyberPeace Research Team’s investigation revealed that these images show the wreckage of a training plane that crashed in Iran's Mazandaran province in 2019 or 2020. Reverse image searches, together with reports from Tehran-based Rokna Press and Ten News, confirmed that the viral images originated from an incident involving a police force's two-seater training plane, not the recent helicopter crash.

Claims:

Social media posts claim that the circulating images show the site of Iranian President Ebrahim Raisi's helicopter crash.

Fact Check:

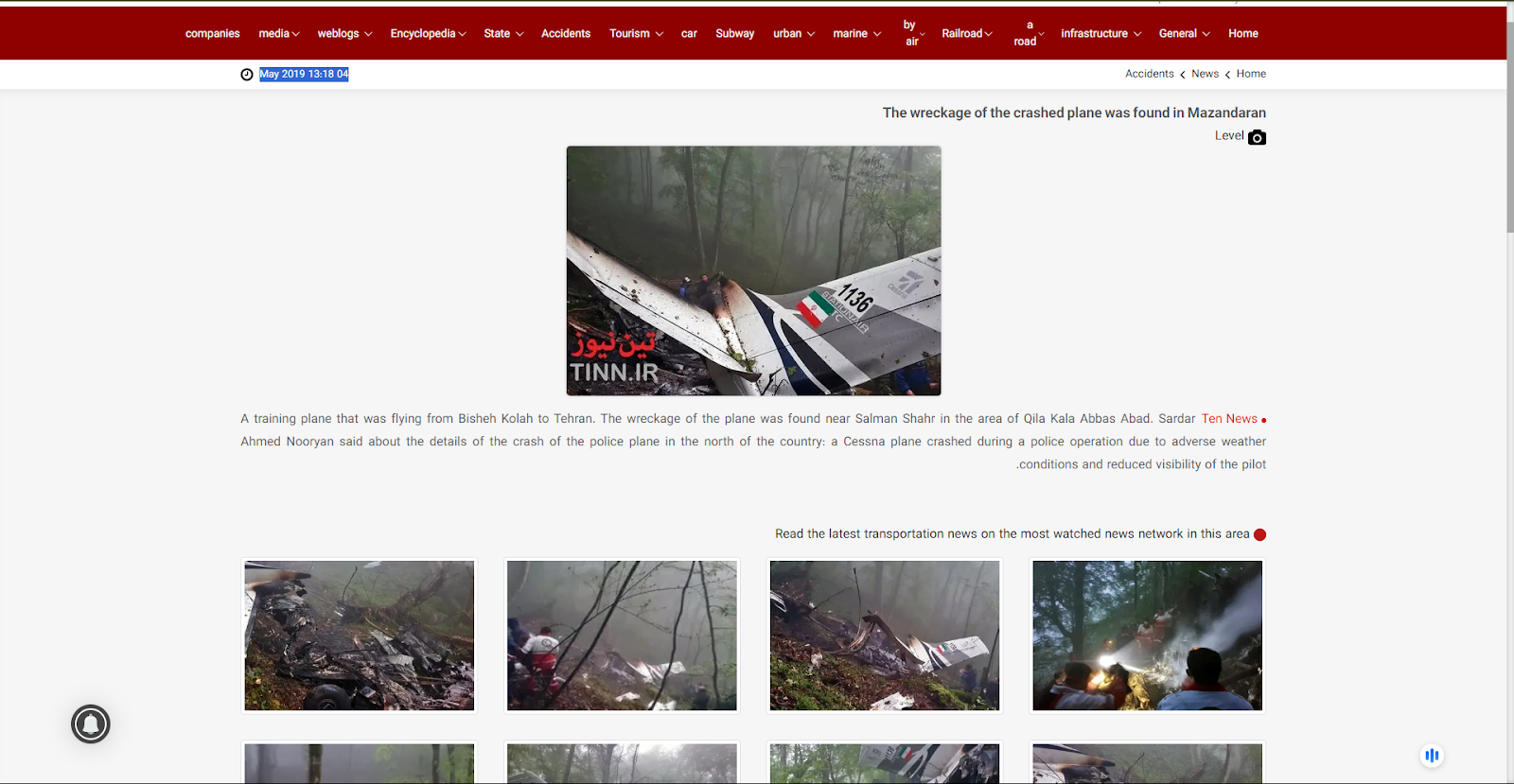

After receiving the posts, we ran a reverse image search on each of the images. All of them, except the image of the blue plane seen in the viral post, traced back to a 2020 air crash. We found a website that had published the viral plane crash images on April 22, 2020.

According to the website, a police training plane crashed in the forests of Mazandaran, Swan Motel. We also found the images on another Iranian news outlet, ‘Ten News’.

The photos on the Ten News website were posted in May 2019. The report reads, “A training plane that was flying from Bisheh Kolah to Tehran. The wreckage of the plane was found near Salman Shahr in the area of Qila Kala Abbas Abad.”

Hence, we concluded that the recent viral photos do not show Iranian President Ebrahim Raisi's helicopter crash. The claim is false and misleading.

Conclusion:

The images being shared on social media as evidence of the helicopter crash involving Iranian President Ebrahim Raisi are misattributed. They actually show the aftermath of a training plane crash in Mazandaran province; the exact year is uncertain, as the sources date the images to either 2019 or 2020. This was confirmed through reverse image searches that traced the images back to their original publication by Rokna Press and Ten News. Consequently, the claim that these images are from the site of President Ebrahim Raisi's helicopter crash is false and misleading.

- Claim: Viral images show the site of Iranian President Raisi's fatal helicopter crash.

- Claimed on: X (formerly Twitter), YouTube, Instagram

- Fact Check: Fake & Misleading