#FactCheck - AI-generated image of Virat Kohli falsely claimed to be sand art made by a child

Executive Summary:

A picture spreading on social media that claims to show a boy making sand art of Indian cricketer Virat Kohli is false. The picture is not real sand art. Analysis with AI-detection tools such as ‘Hive’ and ‘Content at Scale AI detection’ confirms that the images are entirely generated by artificial intelligence. Netizens are sharing these pictures on social media without knowing that they are computer-generated using deepfake techniques.

Claims:

The collage of beautiful pictures displays a young boy creating sand art of Indian Cricketer Virat Kohli.

Fact Check:

When we checked on the posts, we found some anomalies in each photo. Those anomalies are common in AI-generated images.

The anomalies include the abnormal shape of the child’s feet, a logo blended into the sand colour in the second image, and the misspelling ‘spoot’ instead of ‘sport’. The cricket bat is perfectly straight, which is odd for a portrait made of sand. A tattoo appears on the child’s left hand in one photo, while in the other photos the left hand has no tattoo. Additionally, the face of the boy in the second image does not match the face in the other images. These observations made us more suspicious that the images were synthetic media.

We then checked the images with an AI-generated image detection tool named ‘Hive’. Hive found the images to be 99.99% likely AI-generated. We then checked with another detection tool named ‘Content at Scale’.

Hence, we conclude that the viral collage of images is AI-generated and is not sand art made by a child. The claim is false and misleading.

Conclusion:

In conclusion, the claim that the pictures show sand art of Indian cricket star Virat Kohli made by a child is false. Based on an AI detection tool and our analysis of the photos, they were most likely created by an AI image-generation tool rather than by a real sand artist. Therefore, the images do not accurately represent the alleged artwork or its creator.

Claim: A young boy has created sand art of Indian Cricketer Virat Kohli

Claimed on: X, Facebook, Instagram

Fact Check: Fake & Misleading

Related Blogs

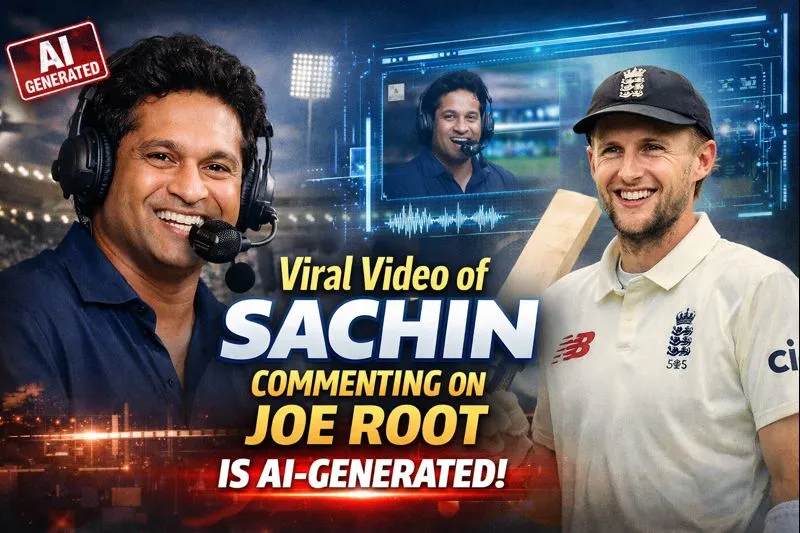

A video circulating on social media claims to show former Indian cricketer Sachin Tendulkar commenting on England batter Joe Root’s batting feats. In the clip, Tendulkar is allegedly heard saying that if Joe Root continues scoring centuries, even his (Tendulkar’s) record would be broken. The video further claims that Tendulkar says if Root scores another century, he would give up the bat’s grip, after which the clip abruptly ends.

Users sharing the video are claiming that Sachin Tendulkar has taken a dig at Joe Root through this remark.

Cyber Peace Foundation’s research found the claim to be misleading. Our research clearly establishes that the viral video is not authentic but has been created using Artificial Intelligence (AI) tools and is being shared online with a false narrative.

CLAIM

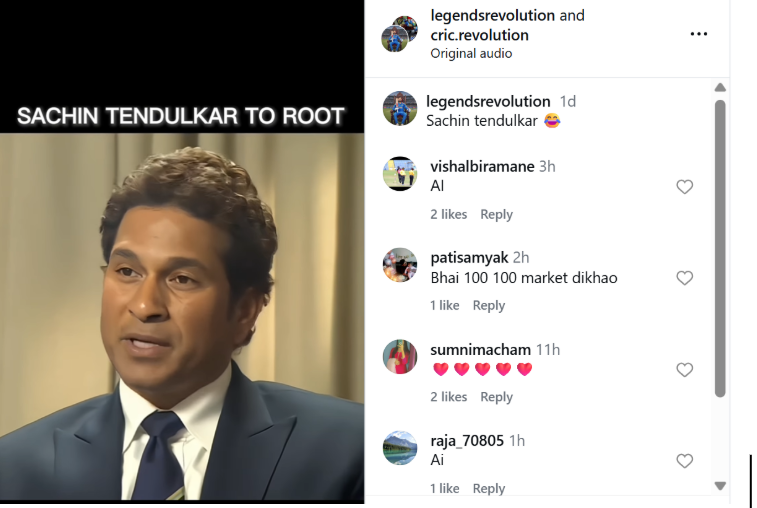

On January 5, 2025, several users shared the viral video on Instagram, claiming it shows Sachin Tendulkar making remarks about Joe Root’s century-scoring spree.

(Post link and archive link available.)

FACT CHECK

To verify the claim, we extracted keyframes from the viral video and conducted a Google Reverse Image Search. This led us to an interview of Sachin Tendulkar published on the official BBC News YouTube channel on November 18, 2013. The visuals from that interview match exactly with those seen in the viral clip.

This establishes that the visuals used in the viral video are old and have been repurposed with manipulated audio to create a misleading narrative.

Further, Joe Root made his Test debut in 2012, only about a year before this interview. At that stage of his career, he was nowhere close to Sachin Tendulkar’s record tally of hundreds. This timeline itself makes the viral claim factually incorrect.

(Link to the original BBC interview available.)

https://www.youtube.com/watch?v=v6Rz4pgR9UQ

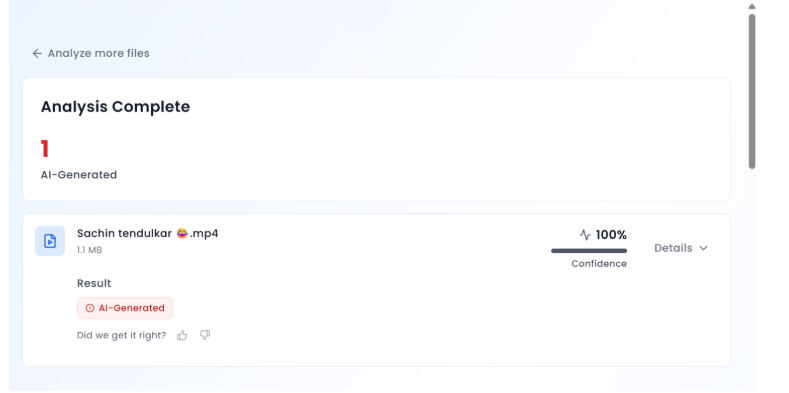

Upon closely examining the viral clip, we noticed that Sachin Tendulkar’s voice sounded unnatural and inconsistent. This raised suspicion of audio manipulation.

We then ran the viral video through an AI detection tool, Aurigin AI. According to the results, the audio in the video was found to be 100 percent AI-generated, confirming that Tendulkar never made the statements attributed to him in the clip.

Conclusion

Our research confirms that the viral video claiming Sachin Tendulkar commented on Joe Root’s centuries is fake. The video has been created using AI-generated audio and misleadingly combined with visuals from a 2013 interview. Users are sharing this manipulated clip on social media with a false claim.

In an era defined by perpetual technological advancement, the hitherto uncharted territories of the human experience are progressively being illuminated by the luminous glow of innovation. The construct of privacy, once a straightforward concept involving personal secrets and solitude, has evolved into a complex web of data protection, consent, and digital rights. This notion of privacy, which often feels as though it elusively ebbs and flows like the ghost of a bygone epoch, is now confronted with a novel intruder – neurotechnology – which promises to redefine the very essence of individual sanctity.

Why Neuro Rights

At the forefront of this existential conversation lie ventures like Elon Musk's Neuralink. This company, which finds itself at the confluence of fantastical dreams and tangible reality, teases a future where the contents of our thoughts could be rendered as accessible as the words we speak. An existence where machines not only decipher our mental whispers but hold the potential to echo back, reshaping our cognitive landscapes. This startling innovation sets the stage for the emergence of 'neurorights' – a paradigm aimed at erecting a metaphorical firewall around the synapses and neurons that compose our innermost selves.

At institutions such as the University of California, Berkeley, researchers, under the aegis of cognitive scientists like Jack Gallant, are already drawing the map of once-inaccessible territories within the mind. Gallant's landmark study, which involved decoding the brain activity of volunteers as they absorbed visual stimuli, opened Pandora's box regarding the implications of mind-reading. The paper, published a decade ago, was an inchoate step toward understanding the narrative woven within the cerebral cortex. Although his work yielded only a rough sketch of the observed video content, it heralded an era where thought could be translated into observable media.

The Growth

This rapid acceleration of neuro-technological prowess has not gone unnoticed on the sociopolitical stage. In a pioneering spirit reminiscent of the robust legislative eagerness of early democracies, Chile boldly stepped into the global spotlight in 2021 by legislating neurorights. The Chilean senate's decision to constitutionalize these rights sent ripples the world over, signalling an acknowledgement that the evolution of brain-computer interfaces was advancing at a daunting pace. The initiative was spearheaded by visionaries like Guido Girardi, a former senator whose legislative foresight drew clear parallels between the disruptive advent of social media and the potential upheaval posed by emergent neurotechnology.

Pursuit of Regulation

Yet the pursuit of regulation in such an embryonic field is riddled with intellectual quandaries and ethical mazes. Advocates like Allan McCay articulate the delicate tightrope that policy-makers must traverse. The perils of premature regulation are as formidable as the risks of a delayed response – the former potentially stifling innovation, the latter risking a landscape where technological advances could outpace societal control, engendering a future fraught with unforeseen backlashes.

Such is the dichotomy embodied in the story of Ian Burkhart, whose life was irrevocably altered by the intervention of neurotechnology. Burkhart's experience, transitioning from quadriplegia to digital dexterity through sheer force of thought, epitomizes the utopian potential of neuronal interfaces. Yet, McCay issues a solemn reminder that with great power comes great potential for misuse, highlighting contentious ethical issues such as the potential for the criminal justice system to overextend its reach into the neural recesses of the human psyche.

Firmly ensconced within this brave new world, the quest for prudence is of paramount importance. McCay advocates for a dyadic approach, where privacy is vehemently protected and the workings of technology proffered with crystal-clear transparency. The clandestine machinations of AI and the danger of algorithmic bias necessitate a vigorous, ethical architecture to govern this new frontier.

As legal frameworks around the globe wrestle with the implications of neurotechnology, countries like India, with their burgeoning jurisprudence regarding privacy, offer a vantage point into the potential shape of forthcoming legislation. Jurists and technology lawyers, including Jaideep Reddy, acknowledge ongoing protections yet underscore the imperativeness of continued discourse to gauge the adequacy of current laws in this nascent arena.

Conclusion

The dialogue surrounding neurorights emerges, not merely as another thread in our social fabric, but as a tapestry unto itself – intricately woven with the threads of autonomy, liberty, and privacy. As we hover at the edge of tomorrow, these conversations crystallize into an imperative collective undertaking, promising to define the sanctity of cognitive liberty. The issue at hand is nothing less than a societal reckoning with the final frontier – the safeguarding of the privacy of our thoughts.

Introduction

Valentine’s Day celebrates the bond between people, their romantic love, and their deep relationships with others. The increasing use of digital platforms in modern relationships has created a situation where cybercriminals use this time of year to exploit human emotions for money-making schemes. The period around 14 February often sees a rise in online romance scams, phishing attacks, and fake shopping websites that specifically target people who are emotionally vulnerable and active online. People need to be aware of these scams because this awareness helps them protect their personal information and their financial resources.

The Rise of Romance Scams

Modern romance scams have evolved from their original form because criminals now execute their schemes through more advanced methods. Fraudsters create authentic-looking fake identities, which they use to deceive victims through dating applications, social media platforms and networking websites. The profiles use stolen images and fake job histories, together with convincing emotional stories, which help them establish trust with potential victims.

Scammers usually begin their deception after they have built an emotional connection with their targets. Once trust is established, they introduce a crisis or an opportunity that pressures the victim to act quickly. This is often presented as a problem that needs urgent help or a chance that should not be missed, such as:

- A sudden medical emergency that requires money for treatment

- Requests for travel expenses to finally come and meet in person

- Fake investment opportunities that promise quick or guaranteed returns

- Demands for customs, courier, or clearance fees to release a supposed package or gift

They persuade the victim to send money, buy gift cards, or hand over personal banking details. The scam can continue for several weeks or months, until the victim begins to doubt what is happening. The psychological manipulation in romance scams causes severe harm: victims lose their money, suffer emotional pain, and may see their social standing damaged.

Fake E-Commerce and “Valentine’s Deals”

Valentine's Day marks the beginning of a shopping rush, which leads people to buy various gifts, including flowers, jewellery and customised products, as well as making reservations for events. Cybercriminals create fake websites to exploit this demand by providing fake discounts and temporary promotional offers.

Common warning signs include:

- Newly registered domains that lack valid user reviews

- Websites that contain multiple spelling mistakes and display poor design

- Payment requests through methods that cannot be tracked

- Online platforms that lack secure payment processing systems

Consumers who make purchases on such sites risk losing their money and having their card information stolen for future fraudulent activities.
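For readers comfortable with a little scripting, the warning signs listed above can be turned into a rough checklist. The sketch below is only an illustration: the `ShopListing` structure, the 90-day age threshold and the set of payment methods treated as untraceable are all assumptions made for this example, and the facts themselves (domain age, review count) would have to be gathered separately. It does not try to judge spelling or design quality.

```python
from dataclasses import dataclass
from urllib.parse import urlparse


@dataclass
class ShopListing:
    """Facts a shopper has gathered by hand about a store (hypothetical structure)."""
    url: str
    domain_age_days: int         # e.g. taken from a separate WHOIS lookup
    review_count: int            # independent reviews found for the shop
    payment_methods: list[str]   # methods offered at checkout


# Assumption: payment methods treated here as hard to trace or reverse.
UNTRACEABLE_METHODS = {"gift card", "crypto", "wire transfer"}


def warning_signs(shop: ShopListing, min_age_days: int = 90) -> list[str]:
    """Return which of the listed warning signs apply to this shop."""
    signs = []
    # Newly registered domain that also has no user reviews.
    if shop.domain_age_days < min_age_days and shop.review_count == 0:
        signs.append("newly registered domain with no reviews")
    # Any checkout option that cannot easily be tracked.
    if any(m.lower() in UNTRACEABLE_METHODS for m in shop.payment_methods):
        signs.append("untraceable payment method requested")
    # A plain http:// address means the connection itself is not secured.
    if urlparse(shop.url).scheme != "https":
        signs.append("no secure (HTTPS) connection")
    return signs


# Example with made-up values for a made-up shop.
suspicious = ShopListing("http://valentine-deals.example", 12, 0, ["Gift card"])
print(warning_signs(suspicious))
```

An empty result does not prove that a shop is genuine; it only means none of these particular signs were found.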

Phishing in the Name of Love

During the Valentine’s season, phishing campaigns become more targeted. Users may receive:

- Valentine's Day discount emails

- Messages that claim to come from a secret admirer

- Links that lead to supposed romantic surprises

- Delivery notifications about gifts that supposedly could not be delivered

Malicious links can result in credential theft, malware installation and unauthorised financial transactions. At first glance, these messages closely resemble those of authentic brands and logistics companies, which makes them hard to identify.
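One practical way to see past a convincing brand name is to look at where a link actually leads. The following is a minimal sketch, assuming the reader already knows the brand's genuine domain; the function name `belongs_to` and the `.example` and `.test` addresses are made up for illustration. It checks only the hostname, so it cannot tell whether a page on a genuine domain has itself been compromised.

```python
from urllib.parse import urlparse


def belongs_to(url: str, trusted_domain: str) -> bool:
    """True if the link's hostname is the trusted domain or one of its subdomains."""
    # urlparse needs a scheme (https://...) to find the hostname;
    # links without one yield no hostname and are treated as not trusted.
    host = (urlparse(url).hostname or "").lower().rstrip(".")
    trusted = trusted_domain.lower()
    return host == trusted or host.endswith("." + trusted)


# A genuine tracking link on a subdomain of the courier's domain.
print(belongs_to("https://track.courier.example/parcel/123", "courier.example"))
# A lookalike: the brand name appears first, but the real domain is different.
print(belongs_to("https://courier.example.gift-claim.test/login", "courier.example"))
```

The comparison is made against the end of the hostname because scammers often place the brand name at the start of a longer address they control.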

Investment and Crypto Romance Fraud

A growing type of romance scam uses cryptocurrency and online trading platforms. After establishing trust, scammers convince their victims to invest in digital assets that appear to generate high returns. Fake dashboards display excellent investment results to persuade victims to commit more funds. The scheme ends when the scammers block all withdrawal requests and cut off contact with the victim. This combination of emotional manipulation and financial fraud shows how cybercrime evolves alongside technological advancements.

Why Seasonal Scams Work

Seasonal scams succeed because they match the predictable behaviour patterns that people exhibit during specific times of the year. During Valentine’s season:

- People experience their highest emotional vulnerability

- People shop more frequently through online platforms

- People use digital platforms at increased rates

- People lower their level of scepticism while trying to establish connections with others

Cybercriminals use urgent situations together with emotional ties and social norms as their primary attack methods. The combination of psychological triggers and digital convenience creates fertile ground for deception.

CyberPeace Recommendations for Staying Safe This Valentine’s Season

Digital platforms offer valuable opportunities for people seeking connection, yet users must remain careful about their online activities. People can protect themselves from online fraud by following these steps:

- They should confirm identity details before they give away their private data.

- They should not send money to people whom they met only through internet platforms.

- They should verify website ownership and examine customer feedback before making online purchases.

- They should activate multi-factor authentication for their social media accounts and financial accounts.

- People should treat unexpected links with great care, especially those links that create a sense of urgency.

- The Cybercrime reporting portal www.cybercrime.gov.in with 24x7 helpline 1930 is an effective tool at the disposal of victims of cybercrimes to report their complaints.

- In case of any cyber threat, issue or discrepancy, you can also seek assistance from the CyberPeace Helpline at +91 9570000066 or write to us at helpline@cyberpeace.net. Immediate reporting protects victims and helps to combat cybercrime.

Conclusion

Online safety during festive seasons requires shared responsibility among multiple parties. Digital resilience is strengthened through the combined efforts of platforms, financial institutions, regulators, and civil society organisations. The digital ecosystem becomes safer through three essential elements, which include awareness campaigns, stronger verification systems, and timely reporting mechanisms.

Valentine’s Day centres on the building of trust between people who want to connect with each other. To maintain trust in digital environments, users need to practice digital literacy skills, which should be shared by everyone. People who stay updated about cybersecurity threats can celebrate Valentine’s Day more safely, because their expressions of love remain protected from online scams.

References

- https://www.cloudsek.com/blog/valentines-day-cyber-attack-landscape-exploiting-love-through-digital-deception

- https://about.fb.com/news/2025/02/how-avoid-romance-scams-this-valentines-day/

- https://www.fbi.gov/contact-us/field-offices/sanfrancisco/fbi-san-francisco-warns-romance-scams-increasing-across-the-bay-area-this-valentines-day

- https://abc11.com/post/romance-scams-surge-ahead-valentines-day/18581079/

- https://www.moneycontrol.com/technology/5-common-online-scams-you-should-avoid-this-valentine-s-day-article-13820108.html