MeitY Issued an Advisory Regulating AI

Introduction

The Ministry of Electronics and Information Technology (MeitY) issued an advisory on March 1, 2024, urging intermediaries and platforms to ensure that their use of Artificial Intelligence models, Generative AI, LLMs, software, or algorithms on their computer resources does not permit bias or discrimination or threaten the integrity of the electoral process. The advisory also requires that AI models deemed unreliable or under-tested obtain explicit government permission before being deployed in India. Intermediaries are already required to follow the due diligence obligations outlined under the “Information Technology (Intermediary Guidelines and Digital Media Ethics Code) Rules, 2021, updated as of 06.04.2023”, and the advisory urges them to comply with these IT Rules.

Key Highlights of the Advisory

- Intermediaries and platforms must ensure that their use of Artificial Intelligence models, LLMs, Generative AI, software, or algorithms does not allow users to host, display, upload, modify, publish, transmit, store, update, or share unlawful content, as per Rule 3(1)(b) of the IT Rules.

- The government emphasises that intermediaries and platforms must prevent bias or discrimination in their use of Artificial Intelligence models, LLMs, Generative AI, software, or algorithms, and must ensure that these do not threaten the integrity of the electoral process.

- Explicit government permission is required before under-tested or unreliable AI models, LLMs, or algorithms are made available on the Indian internet. Such models must be deployed with proper labelling of their potential fallibility or unreliability, and users can be informed of this through a consent popup mechanism.

- The advisory specifies that all users should be clearly informed, through terms of service and user agreements, about the consequences of dealing with unlawful information on the platform. These include disabling access to or removing non-compliant information, suspending or terminating the user's access or usage rights to their account, and punishment under applicable law.

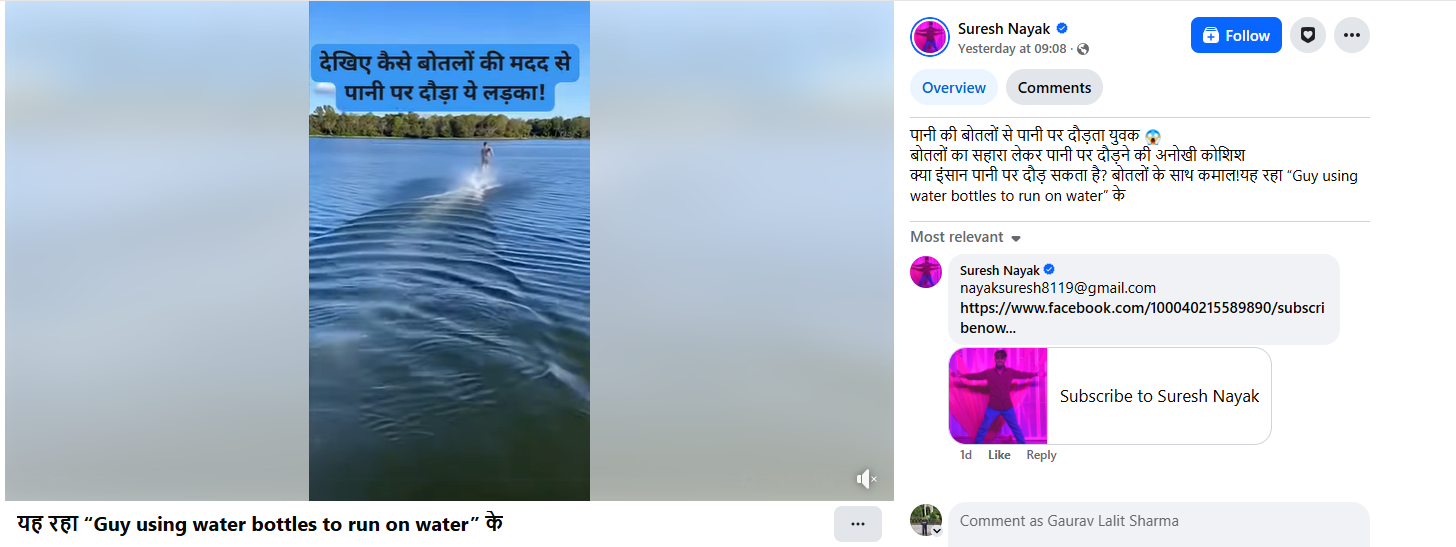

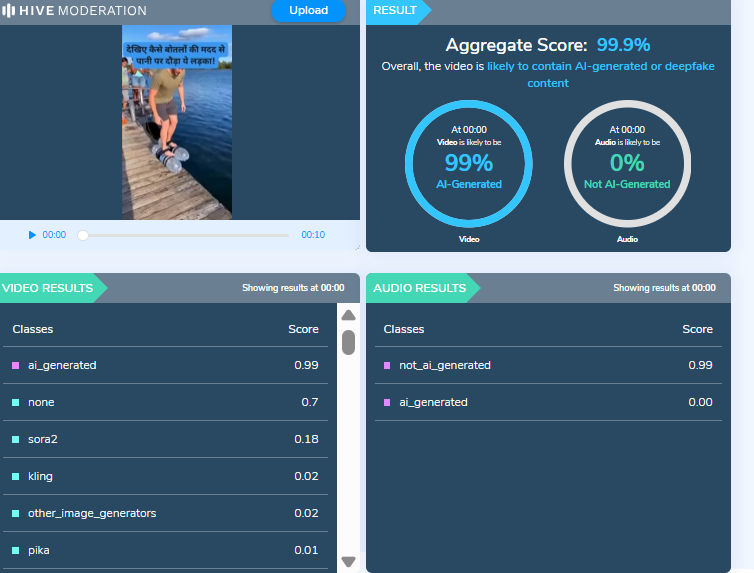

- The advisory also sets out measures to combat deepfakes and misinformation. It calls for synthetically created content to be identifiable across all formats, advising platforms to use labels, unique identifiers, or metadata to ensure transparency. Furthermore, the advisory requires disclosure of the software used and the ability to trace the first originator of such synthetically created content.
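To illustrate the labelling measure above, here is a minimal Python sketch of how a platform might attach a label, a unique identifier, and metadata to a piece of synthetically created content. The advisory does not prescribe any format, so the record layout, the field names (`content_id`, `generator_software`, `first_originator`), and the function names are all hypothetical and chosen only for illustration.

```python
import hashlib
import json
import uuid
from dataclasses import asdict, dataclass
from datetime import datetime, timezone


@dataclass(frozen=True)
class SyntheticContentLabel:
    """Hypothetical metadata record for one piece of synthetic content."""
    content_id: str          # unique identifier assigned by the platform
    content_sha256: str      # hash tying the record to the exact bytes
    label: str               # human-readable label shown to users
    generator_software: str  # software or model that produced the content
    first_originator: str    # platform-side ID of the first originator
    created_at: str          # UTC timestamp in ISO 8601 format


def label_synthetic_content(
    content: bytes, generator_software: str, first_originator: str
) -> SyntheticContentLabel:
    """Build a label record for content known to be synthetically created."""
    return SyntheticContentLabel(
        content_id=str(uuid.uuid4()),
        content_sha256=hashlib.sha256(content).hexdigest(),
        label="AI-generated",
        generator_software=generator_software,
        first_originator=first_originator,
        created_at=datetime.now(timezone.utc).isoformat(),
    )


def to_sidecar_json(record: SyntheticContentLabel) -> str:
    """Serialise the record, e.g. for storage alongside the content."""
    return json.dumps(asdict(record), indent=2, sort_keys=True)


if __name__ == "__main__":
    # Placeholder values; a real platform would supply its own identifiers.
    record = label_synthetic_content(
        b"example image bytes", "example-image-model v1", "user-1234"
    )
    print(to_sidecar_json(record))
```

Storing a content hash next to the identifier is one possible design choice: it lets the record be matched to the exact file even if the file is renamed, though it would not survive re-encoding or editing of the content.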

Rajeev Chandrasekhar, Union Minister of State for IT, specified that

“Advisory is aimed at the Significant platforms, and permission seeking from Meity is only for large platforms and will not apply to startups. Advisory is aimed at untested AI platforms from deploying on the Indian Internet. Process of seeking permission , labelling & consent based disclosure to user about untested platforms is insurance policy to platforms who can otherwise be sued by consumers. Safety & Trust of India's Internet is a shared and common goal for Govt, users and Platforms.”

Conclusion

MeitY's advisory sets the stage for a more regulated AI landscape. The Indian government requires explicit permission for the deployment of under-tested or unreliable Artificial Intelligence models on the Indian internet. Alongside intermediaries, the advisory also applies to digital platforms that incorporate AI elements. According to the Minister, the advisory is aimed at significant platforms and will not apply to startups. The move aims to safeguard users and foster innovation by promoting responsible AI practices, paving the way for a more secure and inclusive digital environment.

References

- https://regmedia.co.uk/2024/03/04/meity_ai_advisory_1_march.pdf

- https://economictimes.indiatimes.com/tech/technology/govts-ai-advisory-will-not-apply-to-startups-mos-it-rajeev-chandrasekhar/articleshow/108197797.cms?from=mdr

- https://www.meity.gov.in/writereaddata/files/Advisory%2015March%202024.pdf
