#FactCheck - AI-Generated Image Falsely Linked to US Court Appearance of Venezuelan First Lady

A photo showing Cilia Flores, wife of Venezuelan President Nicolás Maduro, with visible injuries on her face is being widely shared on social media. Users claim the image was taken during her court appearance in the United States on January 5, alleging that she was beaten before being produced before a judge. Cyber Peace Foundation’s research found that the viral image was created using AI tools and is not real.

Claim:

A Facebook user shared the image claiming it shows Venezuelan President Maduro’s wife during her US court appearance, alleging physical assault prior to her arrest. The post also makes political and religious allegations in connection with the incident.

Fact Check:

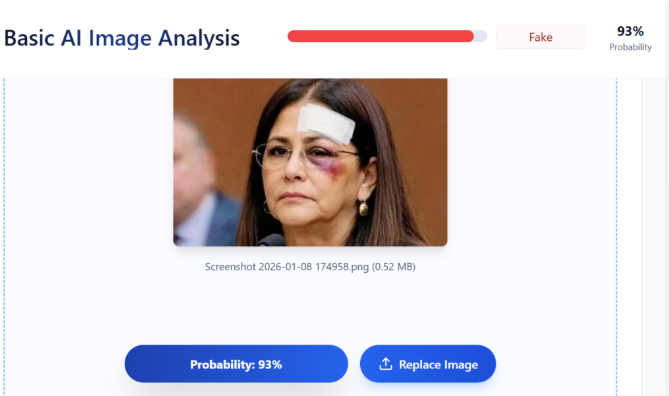

The viral image appeared suspicious due to unnatural facial details and injury patterns. Given the increasing use of artificial intelligence to generate fake visuals, Vishvas News analysed the image using AI image detection tools. TruthScan assessed the image as 93% likely to be AI-generated.

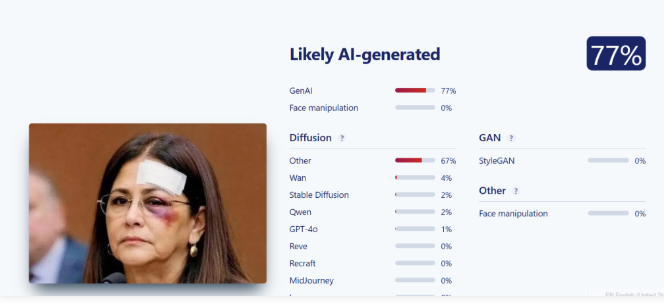

Sightengine flagged the image as 77% likely to be AI-generated.

The results indicate that the image is not authentic and has been created using AI tools.
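As a rough illustration of how the two detector readings above can be read together, here is a minimal Python sketch. The tool names and percentages come from this article; the function name, the 0.5 threshold, and the simple averaging rule are assumptions made for illustration and are not the method used by either tool or by the fact-checkers.

```python
from statistics import mean

def summarise_detector_scores(scores, threshold=0.5):
    """Summarise AI-likelihood scores (0.0 to 1.0) from several detectors.

    `threshold` is an illustrative cut-off, not a value published by any tool.
    """
    if not scores:
        raise ValueError("at least one detector score is required")
    # Detectors whose score meets or exceeds the cut-off
    flagged = sorted(name for name, s in scores.items() if s >= threshold)
    return {
        "mean_score": round(mean(scores.values()), 2),
        "flagged_by": flagged,
        "all_agree": len(flagged) == len(scores),
    }

# Scores reported in this fact check, expressed as fractions
scores = {"TruthScan": 0.93, "Sightengine": 0.77}
summary = summarise_detector_scores(scores)
print(summary)
```

Detector scores are probabilistic, so agreement between independent tools is best treated as supporting evidence alongside the visual inspection and the reporting checks described in this article.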

What Official Reports Say

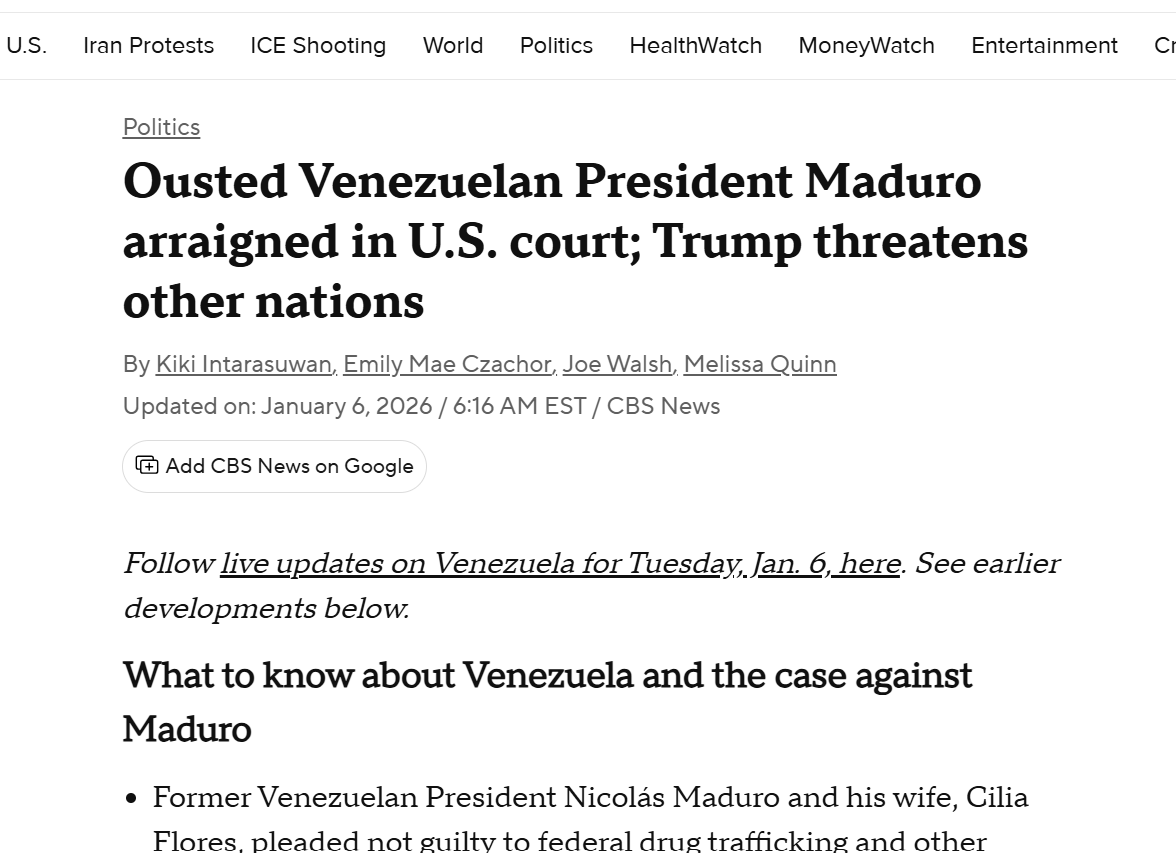

According to a CBS News report published on January 6, Nicolás Maduro and his wife Cilia Flores were produced before a federal court in Lower Manhattan, where they pleaded not guilty to drug trafficking and other charges. They are currently lodged at the Metropolitan Detention Center in Brooklyn. The report states that the couple was detained during a US military operation. Following this, Venezuela’s Vice President Delcy Rodríguez was sworn in as the acting president. While Cilia Flores did appear before a Manhattan court, there is no authentic image showing her with injuries during the court proceedings.

https://www.cbsnews.com/live-updates/venezuela-trump-maduro-charges/

Conclusion:

The image being circulated as a photo of Cilia Flores during her US court appearance is AI-generated and fake. The claim that it shows injuries inflicted on her before being produced in court is false and misleading. The viral image has no connection with real court visuals.

Related Blogs

Introduction

In the labyrinthine corridors of the digital age, where information zips across the globe with the ferocity of a tempest, the truth often finds itself ensnared in a web of deception. It is within this intricate tapestry of reality and falsehood that we find ourselves examining two distinct yet equally compelling cases of misinformation, each a testament to the pervasive challenges that beset our interconnected world.

Case 1: The Deceptive Video: Originating in Malaysia, Misattributed to Indian Railway Development

A misleading video claiming to showcase Indian railway construction has been debunked as footage from Malaysia's East Coast Rail Link (ECRL). Fact-checking efforts by India TV traced the video's origin to Malaysia and found that it had been shared with deceptive captions in Tamil and Hindi. The video was initially posted on Twitter on January 9, 2024, announcing the commencement of track-laying for Malaysia's East Coast Railway. Further investigation shows that the ECRL is a joint venture between Malaysia and China to lay tracks along Malaysia's east coast, which contradicts the claims of Indian railway development. Track-laying for the ECRL, which began in December 2023, is part of China's Belt and Road Initiative and covers 665 kilometers across states such as Kelantan, Terengganu, Pahang, and Selangor, with a completion target set for 2025.

The video in question, a digital chameleon, had its origins not in the bustling landscapes of India but within the verdant bounds of Malaysia. Specifically, it was a scene captured from the East Coast Rail Link (ECRL) project, a monumental joint venture between Malaysia and China, unfurling across 665 kilometers of Malaysian terrain. This ambitious endeavor, part of the grand Belt and Road initiative, is a testament to the collaborative spirit that defines our era, with tracks stretching from Kelantan to Selangor, and a completion horizon set for the year 2025.

The unveiling of this grand project was graced by none other than Malaysia’s King Sultan Abdullah Sultan Ahmad Shah, in Pahang, underscoring the strategic alliance with China and the infrastructural significance of the ECRL. Yet, despite the clarity of its origins, the video found itself cloaked in a narrative of Indian development, a falsehood that spread like wildfire across the digital savannah.

Through the meticulous application of keyframe analysis and reverse image searches, the truth was laid bare. Reports from reputable sources such as the Associated Press and the Global Times, featuring the very same machinery, corroborated the video's true lineage. This revelation not only highlighted the ECRL's geopolitical import but also served as a clarion call for the critical role of fact-checking in an era where misinformation proliferates with reckless abandon.

Case 2: Kerala's Incident: Investigating Fake Narratives

Kerala Chief Minister Pinarayi Vijayan has said that 53 cases have been registered in connection with the spread of fake narratives on social media to incite communal sentiments following the blasts at a Christian religious gathering in October 2023. Vijayan said cases have been registered against online news portals, editors, and Malayalam television channels. The state police chief has issued directions to monitor social media, stop the spread of fake news, and take appropriate action.

In a different corner of the world, the serene backdrop of Kerala was shattered by an event that would ripple through the fabric of its society. The Kalamassery blast, a tragic occurrence at a Christian religious gathering, claimed the lives of eight individuals and left over fifty wounded. In the wake of this calamity, a man named Dominic Martin surrendered, claiming responsibility for the heinous act.

Yet, as the investigation unfolded, a different kind of violence emerged—one that was waged not with explosives but with words. A barrage of fake narratives began to circulate through social media, igniting communal tensions and distorting the narrative of the incident. The Kerala Chief Minister, Pinarayi Vijayan, informed the Assembly that 53 cases had been registered across the state, targeting individuals and entities that had fanned the flames of discord through their digital utterances.

The Kerala police, vigilant guardians of truth, embarked on a digital crusade to quell the spread of these communally instigative messages. With a particular concentration of cases in Malappuram district, the authorities worked tirelessly to dismantle the network of fake profiles that propagated religious hatred. Social media platforms were directed to assist in this endeavor, revealing the IP addresses of the culprits and enabling the cyber cell divisions to take decisive action.

In the aftermath of the blasts, the Chief Minister and the state police chief issued special instructions to monitor social media platforms for content that could spark communal uproar. Cyber patrolling became the order of the day, and a 20-member probe team was constituted to investigate the incident in depth.

Conclusion

These two cases, disparate in their nature and geography, converge on a singular point: the fragility of truth in the digital age. They highlight the imperative for vigilance and the pursuit of accuracy in a world where misinformation can spread like wildfire. As we navigate this intricate cyberscape, it is imperative to be mindful of the power of fact-checking and the importance of media literacy, for they are the light that guides us through the fog of falsehoods to the shores of veracity.

These narratives are not merely stories of deception thwarted; they are a call to action, a reminder of our collective responsibility to safeguard the integrity of our shared reality. Let us, therefore, remain steadfast in our quest for the truth, for it is only through such diligence that we can hope to preserve the sanctity of our discourse and the cohesion of our societies.

References:

- https://www.indiatvnews.com/fact-check/fact-check-misleading-video-claims-malaysian-rail-project-indian-truth-ecrl-india-railway-development-pm-modi-2024-01-29-914282

- https://sahilonline.org/kalamasserry-blast-53-cases-registered-across-kerala-for-spreading-fake-news

Introduction:

CDR stands for call detail records, the call data that telecom companies hold about their users. Because this amounts to a very large volume of data, telecom companies retain it for a period of 6 months. CDRs play a significant role in investigations and court cases. They can be used as pivotal evidence in court proceedings to prove or disprove certain facts and circumstances. Interception of call detail records is permitted only on reasonable grounds and only by an authorised authority, as per the law.

Admissibility of CDRs in Courts:

Call detail records (CDRs) can be used as effective pieces of evidence to assist the court in ascertaining the facts of a particular case and in inquiring into the commission of an offence. Judicial pronouncements have made it clear that CDRs can be used as supporting or secondary evidence in court. However, they cannot be the sole basis of a conviction. Section 92 of the Criminal Procedure Code, 1973 lays down the procedure and empowers certain authorities to apply for the intervention of the court or a competent authority to seek CDRs.

Legal provisions to obtain CDR:

CDRs can be obtained under the statutory provisions of section 92 of the Criminal Procedure Code, 1973, or under section 5(2) of the Indian Telegraph Act, 1885, read with rule 419(A) of the Indian Telegraph Amendment Rules, 2007. The Ministry of Home Affairs also issued guidelines in 2016 for seeking call detail records (CDRs).

How long is CDR stored with telecom Companies (Data Retention)

Call data is retained by telecom companies for a period of 6 months. Because the data requires a great deal of storage, on the order of several petabytes per year, telecom companies keep call detail data for 6 months and archive the rest to tapes.
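The retention rule described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the 6-month figure comes from this article, while the function names, the tier labels "online" and "archived", and the choice to treat a record that is exactly 6 months old as archived are hypothetical and do not describe any telecom company's actual system.

```python
from datetime import date

RETENTION_MONTHS = 6  # retention period stated in this article

def months_between(earlier, later):
    """Whole calendar months elapsed from `earlier` to `later`."""
    months = (later.year - earlier.year) * 12 + (later.month - earlier.month)
    if later.day < earlier.day:
        months -= 1  # the final month has not fully elapsed yet
    return months

def storage_tier(call_date, today):
    """Return 'online' inside the retention window, otherwise 'archived'."""
    if call_date > today:
        raise ValueError("call_date cannot be in the future")
    if months_between(call_date, today) < RETENTION_MONTHS:
        return "online"
    return "archived"  # assumed to correspond to tape archival

# Hypothetical dates, chosen only to exercise both branches
print(storage_tier(date(2023, 9, 25), date(2023, 12, 1)))
print(storage_tier(date(2023, 1, 10), date(2023, 9, 25)))
```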

New Delhi 25Cr jewellery heist

In a recent incident, jewellery worth Rs 25 crore was stolen from a jewellery shop in Delhi. The theft was planned and executed by a man from Chhattisgarh, who returned to Chhattisgarh after committing the crime. During the investigation, Delhi Police analysed the mobile numbers that had been active at the crime scene. Using advanced software that processes large volumes of data, they examined around 5,000 mobile numbers active around the scene and found one registered outside Delhi. The analysis also surfaced suspected contacts who were active within range of the crime scene, which helped in the arrest of the main suspects. Surveillance of the number revealed that the suspect had moved from Delhi to Madhya Pradesh and then on to Bhilai, Chhattisgarh, and police arrested him. This incident highlights how technology and call data can assist law enforcement agencies in investigating crimes and finding the real culprits.
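The filtering step described above, narrowing thousands of active numbers down to the few registered outside the local area, can be sketched as follows. This is a simplified illustration and not the software used by the police: the `ActiveNumber` record, its fields, the `min_minutes` cut-off, and all sample values are hypothetical.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class ActiveNumber:
    number_id: str         # placeholder identifier, not a real phone number
    home_circle: str       # area where the SIM is registered (assumed field)
    minutes_at_scene: int  # time the number was active near the scene

def shortlist_outside_numbers(records, local_circle="Delhi", min_minutes=30):
    """Keep numbers registered outside `local_circle` that stayed near the
    scene for at least `min_minutes`, longest presence first."""
    hits = [
        r for r in records
        if r.home_circle != local_circle and r.minutes_at_scene >= min_minutes
    ]
    return sorted(hits, key=lambda r: r.minutes_at_scene, reverse=True)

# Made-up sample standing in for the roughly 5,000 numbers in the real case
records = [
    ActiveNumber("NUM-A", "Delhi", 240),
    ActiveNumber("NUM-B", "Other-1", 95),
    ActiveNumber("NUM-C", "Delhi", 15),
    ActiveNumber("NUM-D", "Other-2", 5),
]
shortlist = shortlist_outside_numbers(records)
print([r.number_id for r in shortlist])
```

A shortlist like this only suggests where to look next. As the section on admissibility notes, CDRs serve as supporting evidence and cannot be the sole basis of a conviction.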

Conclusion:

CDR refers to call detail records, which telecom companies retain for a period of 6 months. They can be obtained only through lawful procedure and only by competent authorities. CDRs can help courts and law enforcement agencies ascertain the facts of a case or prove or disprove particular claims. It is important to reiterate that CDRs cannot be obtained without authorisation: a directive from the court or a competent authority is required to seek them from telecom companies.

References:

- https://indianlegalsystem.org/cdr-the-wonder-word/#:~:text=CDR%20is%20admissible%20as%20secondary,the%20Indian%20Evidence%20Act%2C%201872.

- https://timesofindia.indiatimes.com/city/delhi/needle-in-a-haystack-how-cops-scanned-5k-mobile-numbers-to-crack-rs-25cr-heist/articleshow/104055687.cms?from=mdr

- https://www.ndtv.com/delhi-news/just-one-man-planned-executed-rs-25-crore-delhi-heist-another-thief-did-him-in-4436494

Introduction

Artificial Intelligence (AI) has transcended its role as a futuristic tool; it is already an integral part of decision-making in sectors worldwide, including governance, medicine, education, security, and the economy. At the same time, there are concerns about the nature of AI, its advantages and disadvantages, and the risks it may pose to the world. There are also doubts about the technology’s capacity to provide effective solutions, especially when threats such as misinformation, cybercrime, and deepfakes are becoming more common.

Recently, global leaders have reiterated that the use of AI should continue to be human-centric, transparent, and governed responsibly. The issue of offering unbridled access to innovators, while also preventing harm, is a dilemma that must be resolved.

AI as a Global Public Good

In earlier times, only the most influential states and large corporations controlled the supply and use of advanced technologies, and they guarded them as national strategic assets. In contrast, AI has emerged as a digital innovation that exists and evolves within a deeply interconnected environment, which makes access far more distributed than before. The use of AI in one country brings its benefits and harms not only to that country but to the rest of the world as well. For instance, deepfake scams and biased algorithms affect not only people in the country where they are created but also people in any other country with which they do business or communicate.

The Growing Threat of AI Misuse

- Deepfakes, Crime, and Digital Terrorism

The misuse of artificial intelligence is quickly becoming one of the main security problems. Deepfake technology is being used to spread electoral misinformation, communicate lies, and create false narratives. Cybercriminals are now using AI to make phishing attacks faster and more efficient, break into security systems, and devise elaborate social engineering tactics. For extremist groups, AI can improve the quality of propaganda, recruitment, and coordination.

- Solution - Human Oversight and Safety-by-Design

To overcome these dangers, a global AI system must be developed based on the principles of safety-by-design. This means incorporating moral safeguards right from the development phase rather than reacting after the damage is done. Moreover, human control is just as vital. Artificial intelligence (AI) systems that influence public confidence, security, or human rights should always be under the control of human decision-makers. Automated decision-making where there is no openness or the possibility of auditing could lead to black-box systems being developed, where the assignment of responsibility is unclear.

Three Pillars of a Responsible AI Framework

- Equitable Access to AI Technologies

One of the major hindrances to global AI development is unequal access. High-end computing capability, data infrastructure, and AI research resources are still concentrated in a few regions. A sustainable framework is needed so that smaller countries, rural areas, and speakers of different languages can also share in the benefits of AI. Distributing access fairly will be a gradual process, but it will also generate new ideas and improvements in local markets. In this way, the digital divide would narrow, and the future of AI would not be determined exclusively by wealthy economies.

- Population-Level Skilling and Talent Readiness

AI will affect workplaces worldwide. Societies must therefore equip their people not only with existing job skills but also with future technology-based skills. Large-scale AI literacy programmes, stronger digital competencies, and cross-disciplinary education are essential. Forecasting workforce needs for roles in AI governance, data ethics, cyber security, and modern technologies will help prevent large-scale displacement while promoting growth that is genuinely inclusive.

- Responsible and Human-Centric Deployment

Adopting responsible AI ensures that technology is used for social good and not just for profit. Human-centred AI directs its applications to sectors such as healthcare, agriculture, education, disaster management, and public services, especially in the underserved regions of the world that most need these innovations. This strategy aims to ensure that technological progress improves human life instead of worsening conditions for the poor or taking responsibility away from humans.

Need for a Global AI Governance Framework

- Why International Cooperation Matters

AI governance cannot be fragmented. Different national regulations lead to the creation of loopholes that allow bad actors to operate in different countries. Hence, global coordination and harmonisation of safety frameworks is of utmost importance. A single AI governance framework should stipulate:

- A clear prohibition on the misuse of AI for terrorism, deepfakes, and cybercrime.

- Transparency and algorithm audits as a compulsory requirement.

- Independent global oversight bodies.

- Ethical codes of conduct in harmony with humanitarian laws.

A framework like this makes it clear that AI will be shaped by common values rather than by the influence of different interest groups.

- Talent Mobility and Open Innovation

If AI is to be universally accepted, then global mobility of talent must be made easier. The flow of innovation takes place when the interaction between researchers, engineers, and policymakers is not limited by borders.

- AI, Equity, and Global Development

The rapid concentration of technology in a few hands risks widening inequality among countries. Most developing countries face poor infrastructure and a lack of education and digital resources. When they are regarded only as technology markets and not as partners in innovation, they become even more isolated from the mainstream of development. An approach to AI development that is both human-centred and technology-driven must recognise that global stagnation can be overcome only through the participation of the whole world. For example, the COVID-19 pandemic has already demonstrated how technology can be a major factor in building healthcare and crisis resilience. When used fairly, AI has a significant role to play in the realisation of the Sustainable Development Goals.

Conclusion

AI stands at a crucial juncture. It can either enhance human progress or increase digital risks. Making sure that AI is a global good takes more than sophisticated technology; it requires moral leadership, inclusive governance, and collaboration between countries. Preventing misuse through openness, human oversight, and responsible policies will be vital to keeping public trust. Properly guided, AI can make society more resilient, speed up development, and empower future generations. The future we choose is determined by how responsibly we act today.

As PM Modi stated, ‘AI should serve as a global good, and at the same time nations must stay vigilant against its misuse’. CyberPeace reinforces this vision by advocating responsible innovation and a secure digital future for all.

References

- https://www.hindustantimes.com/india-news/ai-a-global-good-but-must-guard-against-misuse-pm-101763922179359.html

- https://www.deccanherald.com/india/g20-summit-pm-modi-goes-against-donald-trumps-stand-seeks-global-governance-for-ai-3807928

- https://timesofindia.indiatimes.com/india/need-global-compact-to-prevent-ai-misuse-pm-modi/articleshow/125525379.cms