Understanding Deepfake Threats to Organizations

Introduction

AI has revolutionized the way we look at emerging technologies. It can perform complex tasks in far less time than before. However, its misuse has led to a rise in cybercrime. The rapid expansion of generative AI tools has been accompanied by growing cyber scams such as deepfakes and voice cloning, cyberattacks targeting critical infrastructure and other organizations, and threats to data protection and privacy. Generative AI can produce realistic videos, images, and voices, and cyber attackers misuse this capability to commit cybercrimes.

Technologies such as generative AI (Artificial Intelligence), deepfakes, and machine learning are advancing rapidly. They offer convenience in performing many tasks and can assist both individuals and business entities. Because these technologies are easily accessible, however, cybercriminals also leverage them for malicious activities and various cyber frauds. The misuse of AI, deepfakes, and voice clones has given rise to new cyber threats.

What is Deepfake?

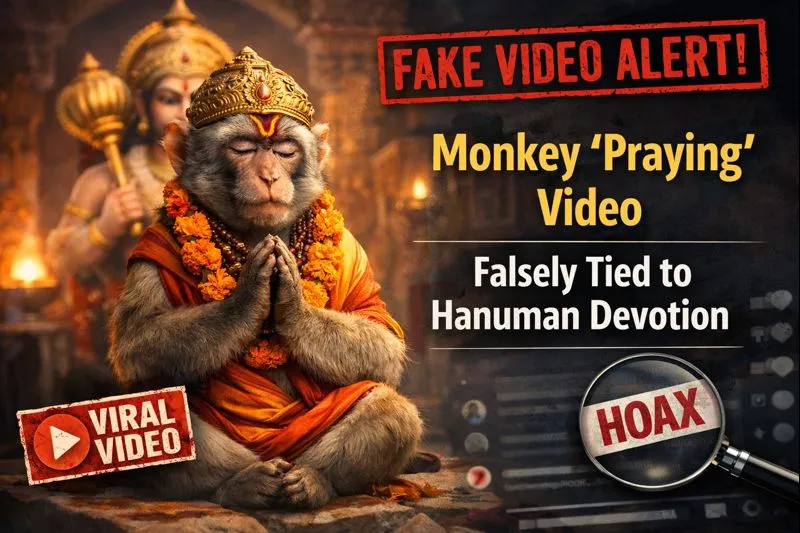

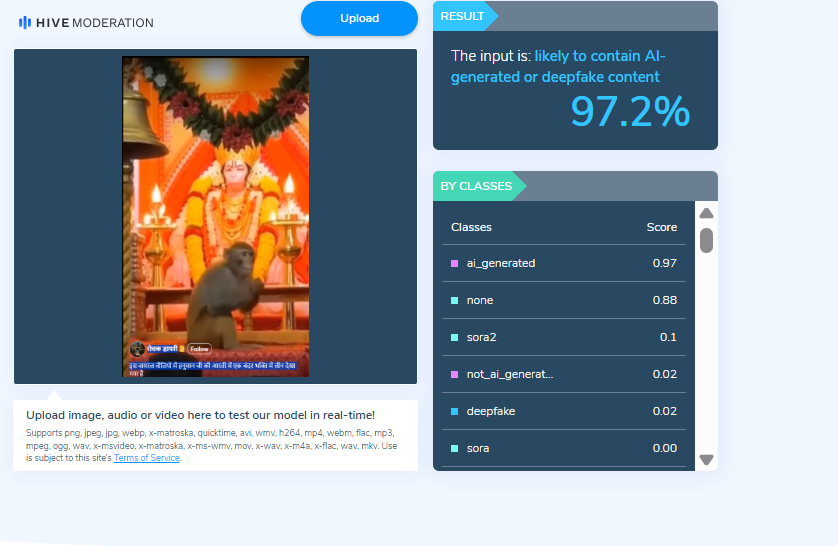

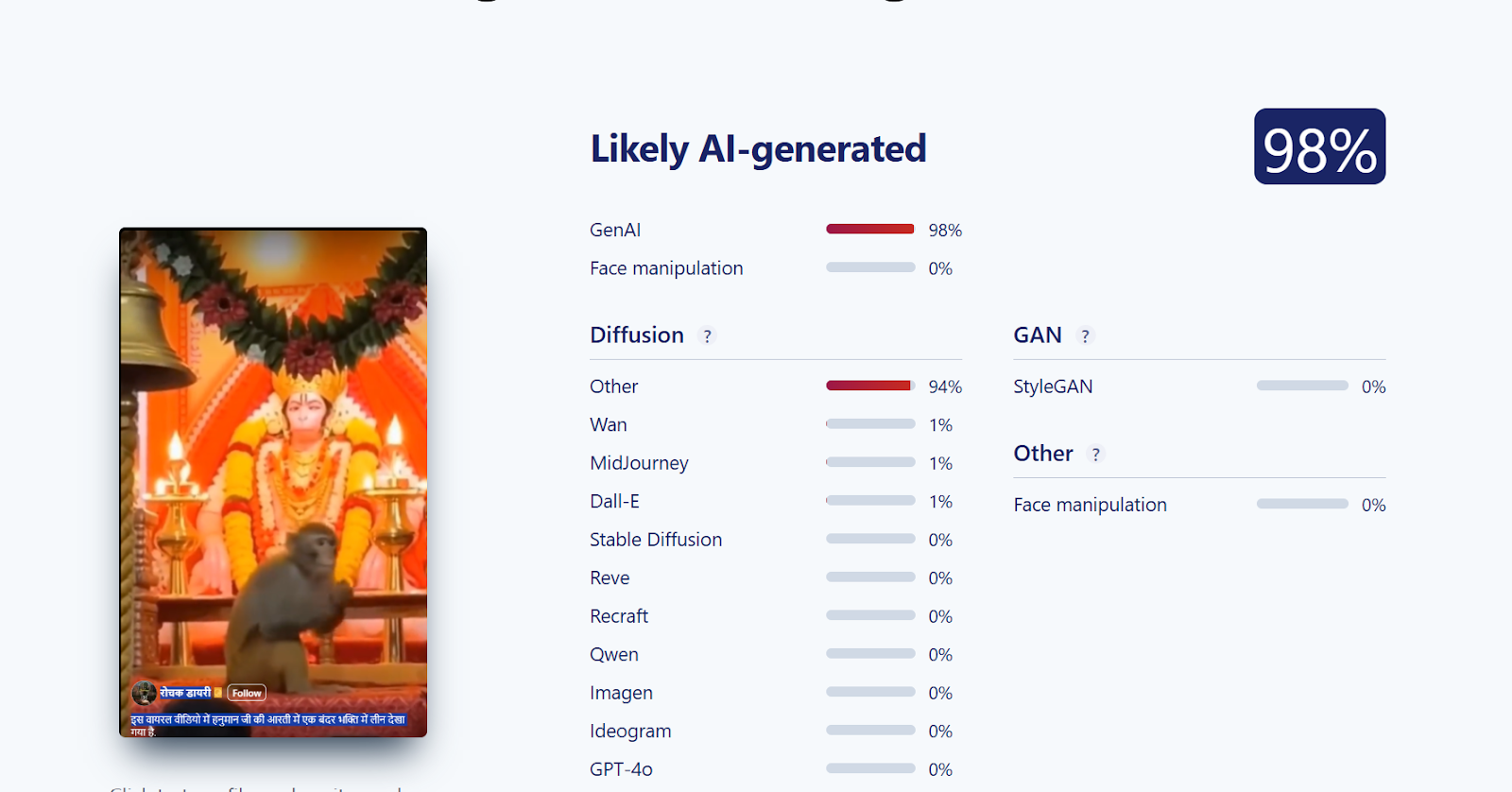

Deepfake is an AI-based technology that creates realistic-looking images, videos, or audio which are in fact generated by machine algorithms. Because the technology is easily accessible, fraudsters misuse it to commit cybercrimes and to deceive and scam people with manipulated audio and video content that looks real but is fake. Voice cloning is also a form of deepfake: audio can be synthesised to closely resemble a real person's voice, even though that person never spoke the words.

How Can Deepfakes Harm Organizations or Enterprises?

- Reputation: Deepfakes put the organisation's reputation at stake. Fake representations of an interaction between an employee and a user, or content that misrepresents the CEO online, could damage an enterprise's credibility, resulting in the loss of users and other financial losses. Deepfake content can also be misused to impersonate leaders, financial officers, and other officials of the organisation. To protect against such incidents, organisations must thoroughly monitor online mentions and keep tabs on what is being said or posted about the brand.

- Misinformation: Deepfakes can be used to spread misinformation about the organisation or to misrepresent it.

- Deepfake fraud calls misrepresenting the organisation: There have been incidents where bad actors pretend to be from legitimate organisations in order to obtain personal information. Examples include helpline fraudsters, fake representatives of hotel booking departments, and fake loan providers. These bad actors use voice clones or deepfake video calls to present themselves as belonging to a legitimate organisation while, in reality, deceiving people.

How Can Organizations Combat AI-Driven Cybercrimes Such as Deepfakes?

- Cybersecurity strategy: Organisations need a broad cybersecurity strategy and advanced tools to combat the evolving disinformation and misrepresentation enabled by deepfake technology. Cybersecurity tools can be used to detect deepfakes.

- Social media monitoring: Monitoring social media helps detect unusual activity. Organisations can select and implement tools and technologies that detect deepfakes and demonstrate media provenance, and can put real-time verification capabilities and procedures in place. Reverse image searches, such as TinEye, Google Image Search, and Bing Visual Search, can be extremely useful if the media is a composition of existing images.

- Employee training: Educating employees about cybersecurity also plays a significant role in strengthening the organisation's overall cybersecurity posture.
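
As an illustration of the media provenance idea mentioned above, the following is a minimal sketch, not a deepfake detector. It assumes the organisation keeps a registry of cryptographic hashes of the media it has officially published; the function names and sample file name are hypothetical. A match shows that a received file is a bit-identical copy of an official release. A miss only means the file is not a known official copy (it may simply have been re-encoded), so it should trigger further verification rather than be treated as proof of forgery.

```python
import hashlib
from typing import Optional


def sha256_digest(data: bytes) -> str:
    """Return the SHA-256 digest of the given bytes as a hex string."""
    return hashlib.sha256(data).hexdigest()


def build_registry(official_media: dict) -> dict:
    """Map digest -> file name for every officially published media item.

    `official_media` maps a (hypothetical) file name to its raw bytes.
    """
    return {sha256_digest(content): name for name, content in official_media.items()}


def check_provenance(registry: dict, data: bytes) -> Optional[str]:
    """Return the official file name if `data` matches a registered item, else None."""
    return registry.get(sha256_digest(data))


# Placeholder bytes stand in for real media files in this sketch.
official = {"ceo_statement.mp4": b"official video bytes"}
registry = build_registry(official)

print(check_provenance(registry, b"official video bytes"))   # ceo_statement.mp4
print(check_provenance(registry, b"tampered video bytes"))   # None
```

In practice the registry would be built from the files themselves at publication time, and anything that fails the check would be passed on to the organisation's verification procedures.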

Conclusion

There have been incidents where cybercriminals and other bad actors have misused AI-driven tools, including AI-generated synthetic videos. Generative AI has gained significant popularity for its ability to produce synthetic media, and this raises concerns about the misuse of such media in disinformation operations designed to influence the public and spread false information. In particular, the synthetic media threats that organisations most often face include undermining the brand, threats to the security or integrity of the organisation itself, and impersonation of the brand's leaders for financial gain.

Synthetic media attacks target organisations with the intent to defraud them for financial gain. Examples include fake personal profiles on social networking sites and deceptive deepfake calls. Organisations need a proper cybersecurity strategy in place to combat such evolving threats. They should carry out monitoring and detection, and train employees in cybersecurity, which plays a crucial role in dealing effectively with the evolving threats posed by the misuse of AI-driven technologies.

References:

- https://media.defense.gov/2023/Sep/12/2003298925/-1/-1/0/CSI-DEEPFAKE-THREATS.PDF

- https://www.securitymagazine.com/articles/98419-how-to-mitigate-the-threat-of-deepfakes-to-enterprise-organizations